What is the K-fold in Python?

What is the K-fold in Python?

I'm happy to help you with that!

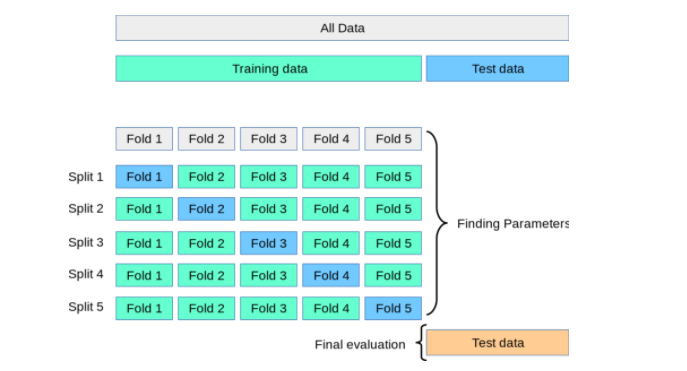

In Python, K-Fold Cross Validation (K-Fold CV) is a popular technique used to evaluate machine learning models' performance. It's a method for estimating model quality without overfitting the training data.

Let me explain it in simple terms:

What is K-Fold?

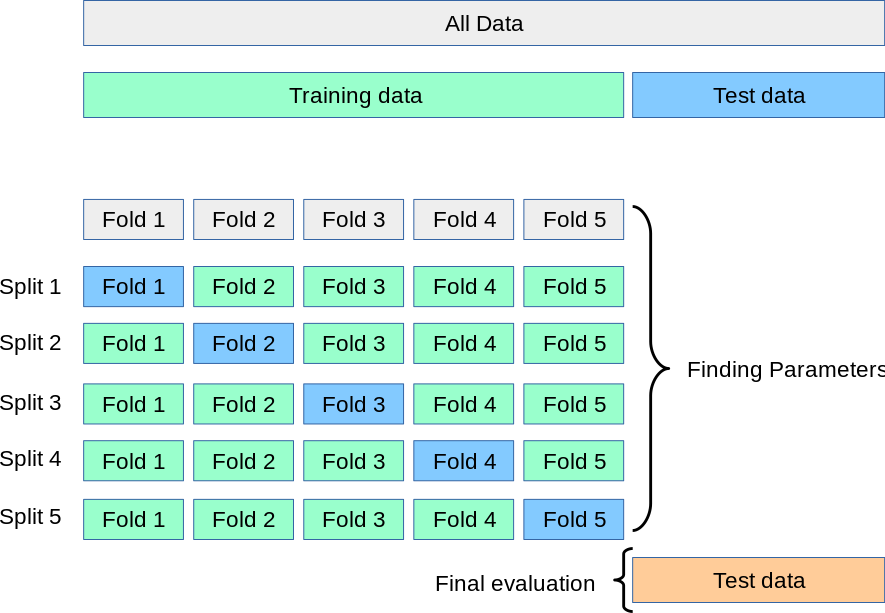

Imagine you have a dataset of n samples, and you want to split it into k equal-sized folds (k=number of folds). Each fold contains approximately 1/k of the total data. You'll then use each fold as a separate test set, while training on the remaining k-1 folds.

How does K-Fold work?

Here's the step-by-step process:

Split the data: Divide your dataset into k folds. Each fold has roughly 1/k of the total data. Train and evaluate: Train a model using k-1 folds (i.e., all but one). Then, use that trained model to make predictions on the remaining fold (the test set). Repeat for each fold: Perform steps 1-2 for each of the k folds. This way, you'll have k sets of predicted values and actual values. Calculate metrics: Use the predicted and actual values from each fold to calculate performance metrics like accuracy, precision, recall, F1-score, etc.Why is K-Fold useful?

K-Fold CV offers several benefits:

Reduces overfitting: By using a portion of the data for testing, you prevent models from becoming too specialized to the training data. Improves model selection: K-Fold helps identify the best-performing model by averaging performance metrics across multiple test sets. Provides more realistic estimates: Since each fold is a representative subset of the overall dataset, your model's performance will be closer to its real-world behavior.K-Fold in Python

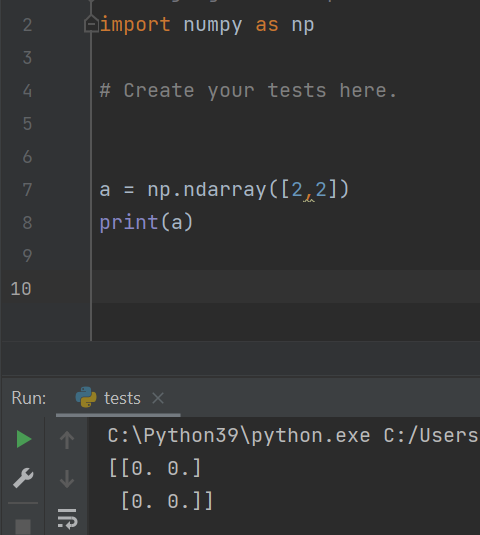

To implement K-Fold CV in Python using popular libraries like scikit-learn or TensorFlow:

Import the necessary library and load your dataset. Split the data into k folds using functions liketrain_test_split() (scikit-learn) or tf.keras.utils.split_to_size() (TensorFlow). Train a model using the training set from each fold, then evaluate its performance on the corresponding test set. Calculate and average your chosen performance metrics across all k folds.

For example, with scikit-learn:

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

Load dataset and split into training and testing sets (k=5)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

Initialize a list to store performance metrics for each fold

folds_performance = []

Perform K-Fold CV (k=5)

for i in range(5):

Split data into training and testing sets for the current fold

X_train_fold, X_test_fold, y_train_fold, y_test_fold = train_test_split(X_train, y_train, test_size=0.2, random_state=i)

Train a model using the training set from this fold

trained_model = train(X_train_fold, y_train_fold)

Evaluate the model on the testing set from this fold

predicted_values = trained_model.predict(X_test_fold)

actual_values = y_test_fold

Calculate accuracy and store it in the list

performance_metric = accuracy_score(actual_values, predicted_values)

folds_performance.append(performance_metric)

Calculate the average performance across all folds

average_accuracy = sum(folds_performance) / len(folds_performance)

print("Average Accuracy:", average_accuracy)

In summary, K-Fold Cross Validation is a robust technique for evaluating machine learning models in Python. It helps prevent overfitting by using separate test sets for each fold and provides a more accurate estimate of model performance.

How to do leave-one-out cross-validation in Python?

I'm happy to help! Here's a comprehensive guide on how to perform Leave-One-Out (LOO) cross-validation using Python:

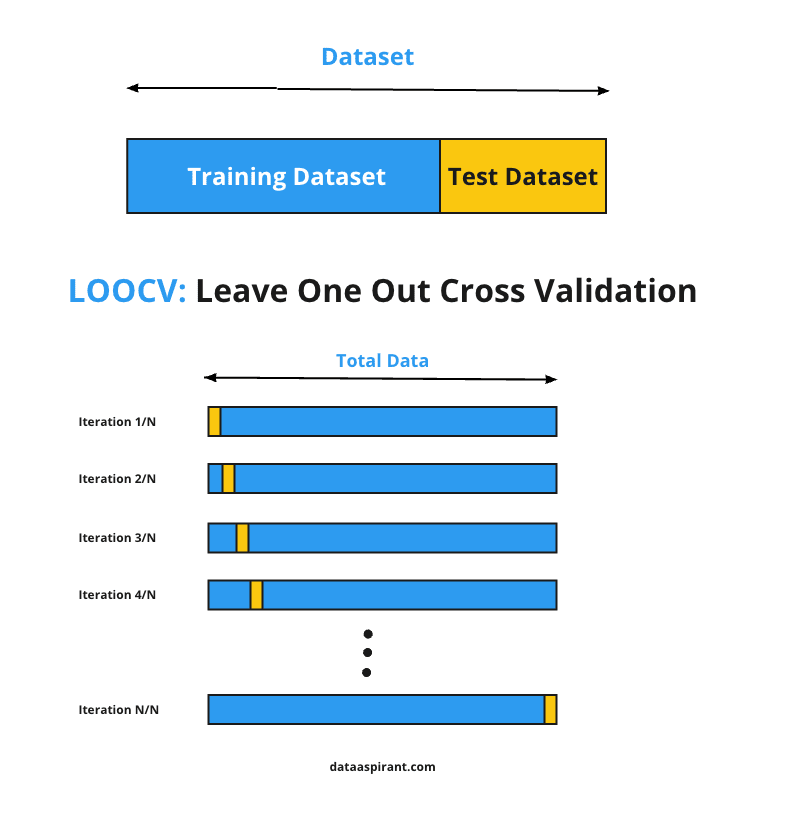

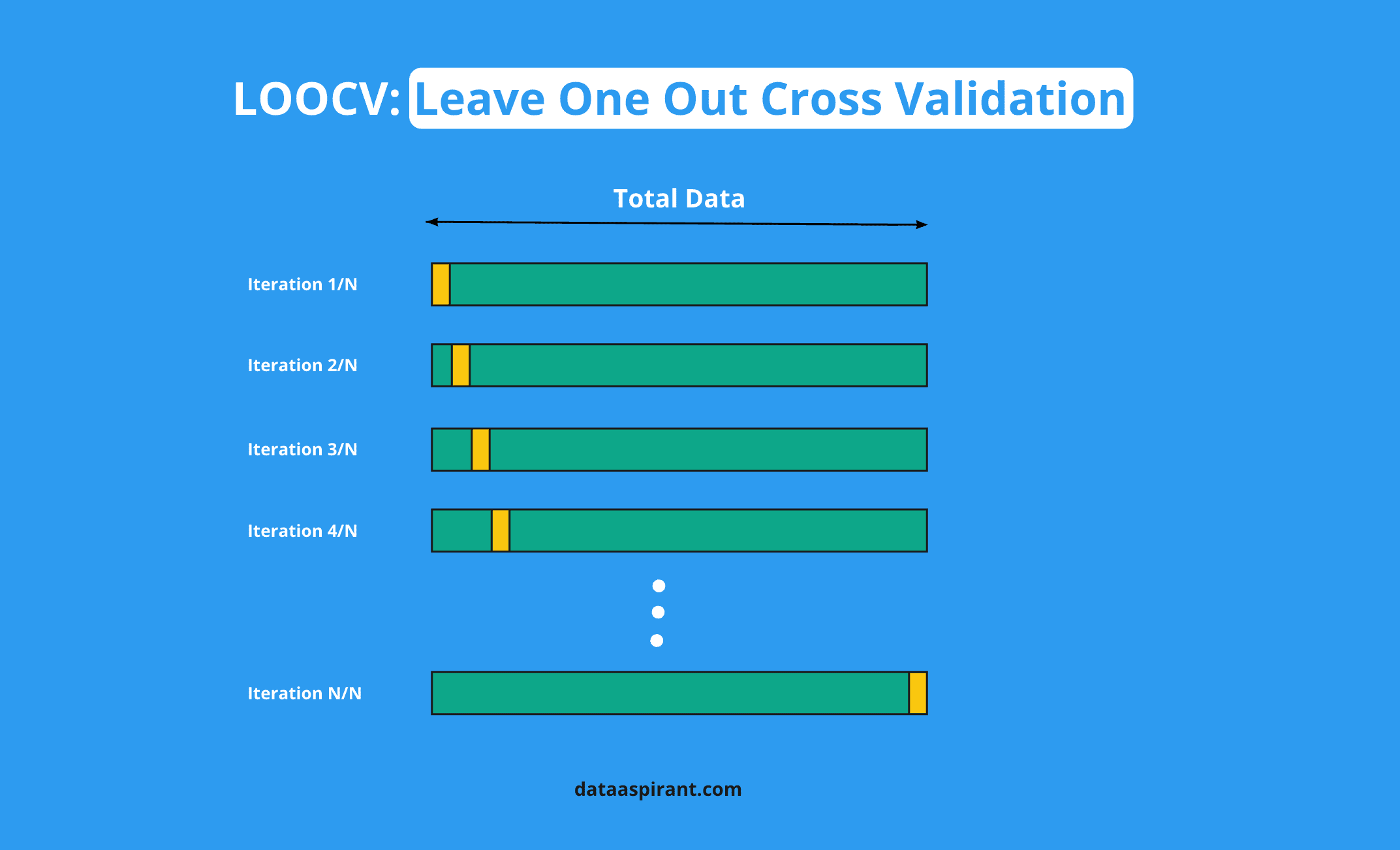

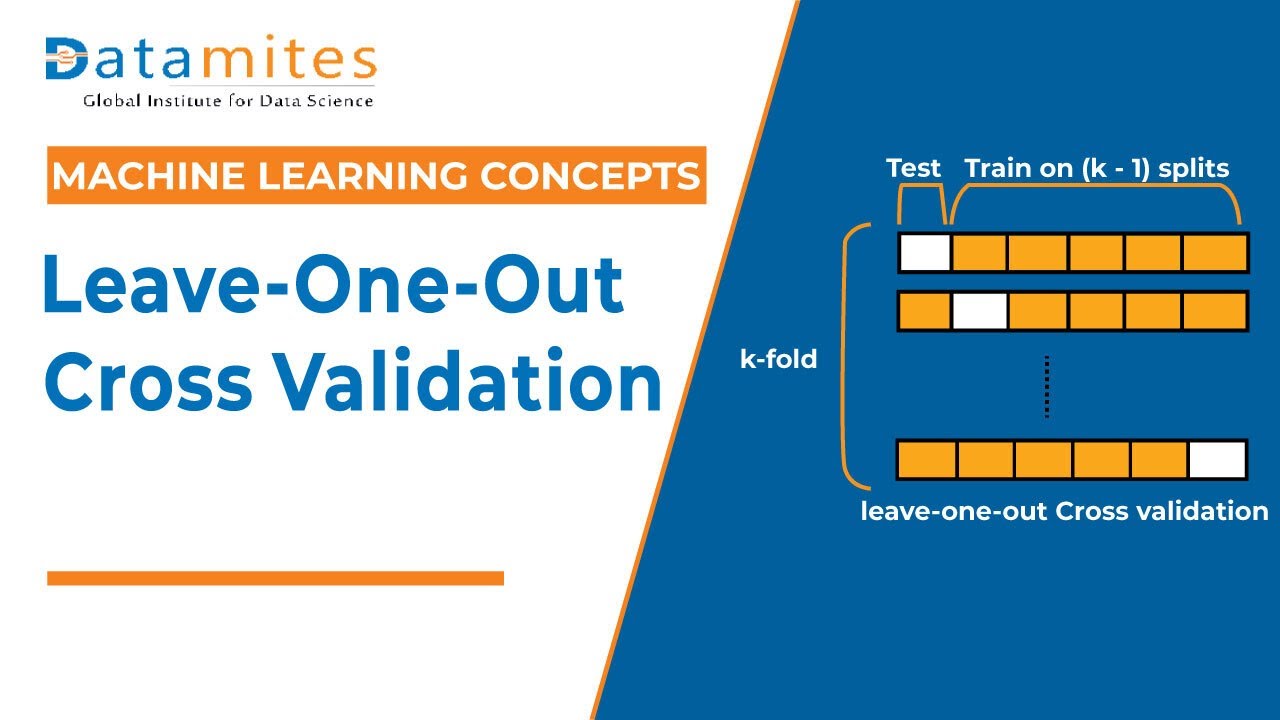

What is Leave-One-Out Cross-Validation?

Leave-One-Out cross-validation is a type of k-fold cross-validation where each sample in the dataset is used exactly once as a test set, and the remaining samples are used for training. This approach is particularly useful when working with small datasets or evaluating model performance on individual data points.

Python Implementation using Scikit-Learn

To perform LOO cross-validation in Python using scikit-learn, you can use the StratifiedShuffleSplit class along with a loop to iterate over each sample. Here's an example:

from sklearn.model_selection import StratifiedShuffleSplit

from sklearn.metrics import accuracy_score

import numpy as np

Load your dataset (X_train, y_train) and test data (X_test, y_test)

Initialize the LOO cross-validation object

splits = StratifiedShuffleSplit(n_splits=1, test_size=0.2, random_state=42, stratify=y_train)

Loop through each split

for train_index, test_index in splits.split(y_train):

X_train_fold, X_test_fold = X_train[train_index], X_train[test_index]

y_train_fold, y_test_fold = y_train[train_index], y_train[test_index]

Train your model (e.g., Logistic Regression) on the training fold

log_reg = LogisticRegression()

log_reg.fit(X_train_fold, y_train_fold)

Evaluate the trained model on the test fold

y_pred = log_reg.predict(X_test_fold)

accuracy = accuracy_score(y_test_fold, y_pred)

print(f"Fold {test_index[0]}: Accuracy = {accuracy:.4f}")

Interpretation of Results

In the above example, you'll get an accuracy score for each fold. Since LOO cross-validation is used, you're essentially evaluating model performance on each individual sample (one at a time). This allows you to analyze how well your model performs on specific data points.

Keep in mind that LOO cross-validation can be computationally expensive and may not be suitable for large datasets due to its high computational complexity. For such cases, k-fold cross-validation with smaller folds (e.g., k=5) is a more efficient alternative.

I hope this helps! If you have any further questions or concerns, feel free to ask.