Python concurrency with asyncio

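Python's built-in asyncio library lets developers write single-threaded concurrent code using coroutines. For I/O-bound programs, such as network clients and servers, this can noticeably improve throughput and responsiveness, because the program keeps working on other tasks while one of them is waiting for I/O.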

What is asyncio?

asyncio is a module in Python's standard library for writing single-threaded concurrent code. It provides an event loop, coroutines and tasks, and asynchronous I/O and synchronization primitives. Together these let many operations make progress at the same time on a single thread.

Key Concepts:

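Coroutines: These are functions defined with async def. Calling one returns a coroutine object, and its body runs only when it is awaited or scheduled as a task. At each await, a coroutine can suspend and yield control so that other coroutines can run. This interleaving lets asynchronous code run concurrently, but not in parallel: only one coroutine executes at any given moment.

Event Loop: This is the central component of asyncio, responsible for scheduling and running coroutines. The loop repeatedly runs every task that is ready until it reaches an await that suspends it. It then waits for I/O or timers and resumes the tasks whose operations have completed.

Tasks: A task wraps a coroutine and schedules it to run on the event loop, for example via asyncio.create_task(). Tasks resemble lightweight threads, but unlike threads they switch only at await points, never in the middle of a statement. Code between two awaits therefore runs without interruption, so many asyncio programs need no locks. However, shared state that is read before an await and written after it can still be changed by another task in between. For that case, asyncio provides asyncio.Lock.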
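Here is a minimal sketch of that locking caveat. It uses a hypothetical Counter class and two hypothetical coroutines. When a task reads shared state, awaits, and then writes, other tasks can run in between and overwrite each other's updates. asyncio.Lock serializes that read-await-write section.

```python
import asyncio

class Counter:
    """Hypothetical shared state used only for this illustration."""
    def __init__(self):
        self.value = 0

async def unsafe_increment(counter):
    current = counter.value       # read shared state
    await asyncio.sleep(0)        # suspension point: other tasks run here
    counter.value = current + 1   # write back a value that may now be stale

async def safe_increment(counter, lock):
    async with lock:              # only one task at a time may hold the lock
        current = counter.value
        await asyncio.sleep(0)
        counter.value = current + 1

async def run(n, use_lock):
    counter = Counter()
    if use_lock:
        lock = asyncio.Lock()
        await asyncio.gather(*(safe_increment(counter, lock) for _ in range(n)))
    else:
        await asyncio.gather(*(unsafe_increment(counter) for _ in range(n)))
    return counter.value

if __name__ == "__main__":
    print("without lock:", asyncio.run(run(100, use_lock=False)))
    print("with lock:   ", asyncio.run(run(100, use_lock=True)))
```

With CPython's default event loop, the unlocked version typically ends far below 100, because every task reads the counter before any of them writes it back. The locked version always reaches 100.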

Advantages:

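Concurrency Without Threads: asyncio lets you write concurrent code without creating multiple threads or processes. Tasks are much lighter than OS threads, so a single program can keep many network connections or timers in flight with little memory and no thread-switching overhead. Because everything runs on one thread, however, asyncio does not speed up CPU-bound work.

Easy to Use: Writing asynchronous code with asyncio is relatively straightforward. The high-level API (async def and await, asyncio.run(), asyncio.gather()) keeps asynchronous code close to ordinary sequential Python.

Improved Responsiveness: Coroutines yield control to each other while they wait, so a slow network request or timer does not stall the rest of the program. The program can keep responding to user input or other network requests in the meantime.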
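The first point can be illustrated with a minimal sketch built around a hypothetical worker coroutine that only sleeps. In it, 100 tasks each wait 0.1 seconds, all on the same thread. The batch finishes in roughly 0.1 seconds, whereas a sequential loop would take about 10 seconds.

```python
import asyncio
import threading
import time

async def worker(delay):
    # Hypothetical stand-in for an I/O wait such as a network call
    await asyncio.sleep(delay)
    return threading.get_ident()  # identifier of the thread running this task

async def main(n=100, delay=0.1):
    start = time.perf_counter()
    thread_ids = await asyncio.gather(*(worker(delay) for _ in range(n)))
    elapsed = time.perf_counter() - start
    return set(thread_ids), elapsed

if __name__ == "__main__":
    ids, elapsed = asyncio.run(main())
    print(f"threads used: {len(ids)}, elapsed: {elapsed:.2f}s")
```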

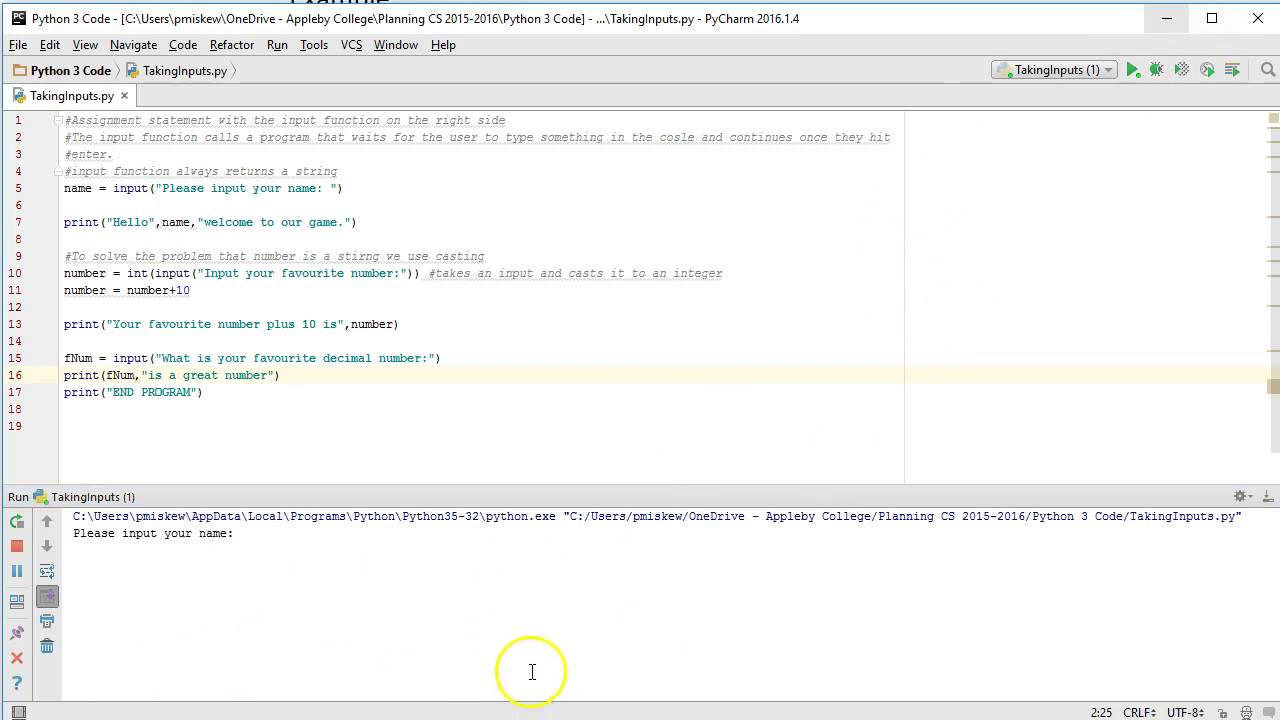

Example:

Here's a simple example of using asyncio to create concurrent tasks:

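```python
import asyncio

async def task1():
    print("Task 1 started")
    await asyncio.sleep(2)  # suspends task1 for 2 seconds; the loop runs other tasks meanwhile
    print("Task 1 finished")

async def task2():
    print("Task 2 started")
    await asyncio.sleep(3)  # suspends task2 for 3 seconds
    print("Task 2 finished")

async def main():
    # Run both coroutines concurrently and wait until both have finished
    await asyncio.gather(task1(), task2())

if __name__ == "__main__":
    # asyncio.run() creates an event loop, runs main() to completion, then closes the loop
    asyncio.run(main())
```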

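In this example, we define two coroutines, task1 and task2. Each prints a message and then pauses with await asyncio.sleep(), a coroutine that suspends the caller without blocking the event loop. After the pause, each prints another message. The asyncio.gather() function runs both concurrently. As a result, both "started" messages appear immediately, and the program finishes after about 3 seconds (the longer of the two sleeps). Running the two coroutines one after the other would take 5 seconds.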
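gather() also collects return values. The variant below is a sketch with a hypothetical fetch() coroutine and delays scaled down to 0.4 and 0.6 seconds. It shows two things: results come back in the order the coroutines were passed, not the order they finished, and the total time tracks the longest delay rather than the sum.

```python
import asyncio
import time

async def fetch(name, delay):
    # Hypothetical helper standing in for an I/O-bound call
    await asyncio.sleep(delay)
    return f"{name} finished after {delay}s"

async def main():
    start = time.perf_counter()
    # Results are returned in argument order, not completion order
    results = await asyncio.gather(fetch("task2", 0.6), fetch("task1", 0.4))
    elapsed = time.perf_counter() - start
    return results, elapsed

if __name__ == "__main__":
    results, elapsed = asyncio.run(main())
    print(results)
    print(f"elapsed: {elapsed:.1f}s")  # close to 0.6, not 1.0
```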

Best Practices:

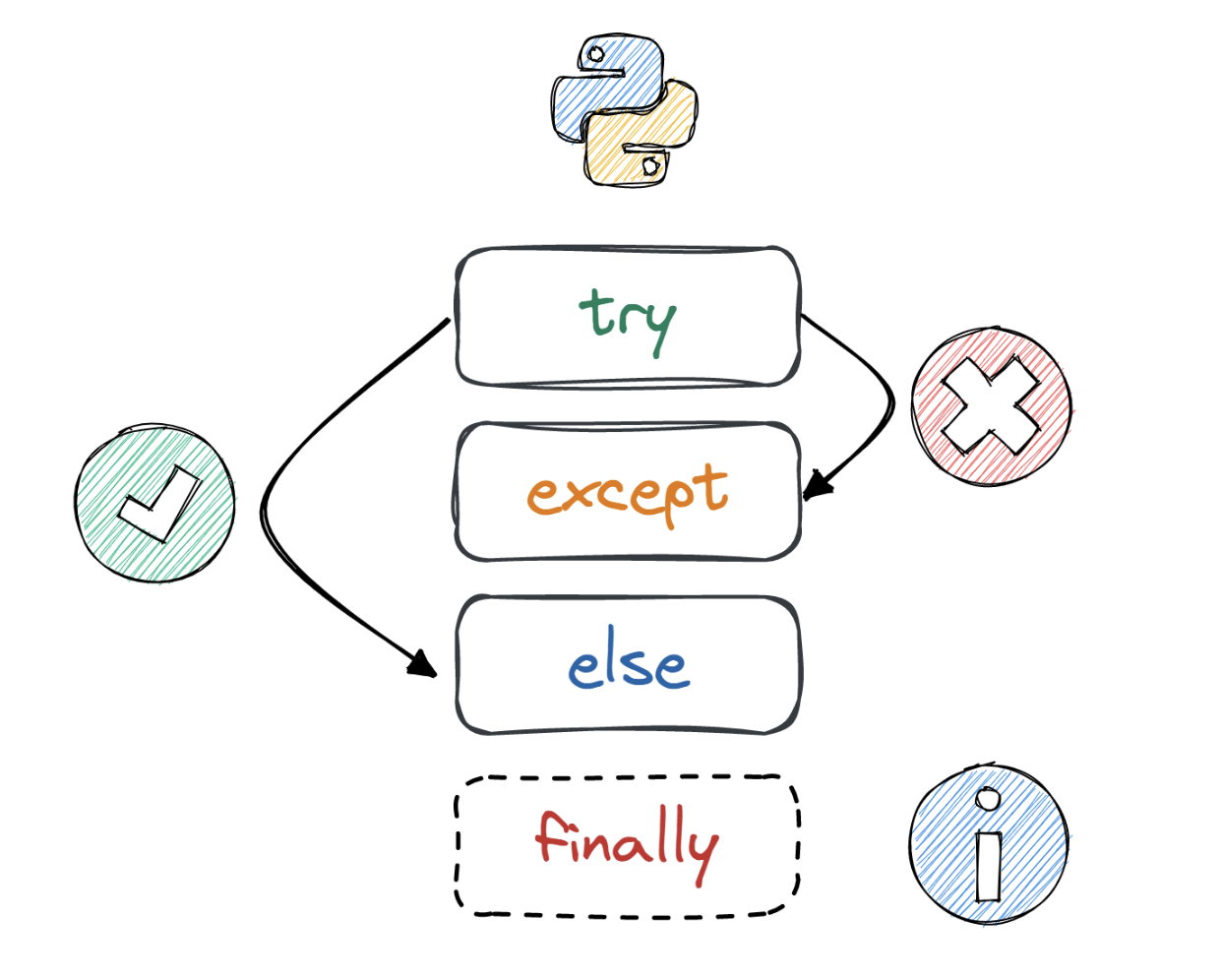

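Use await: Use the await keyword to call coroutines and other awaitables, such as tasks and futures. A regular blocking call, such as time.sleep() or a synchronous HTTP request, cannot be awaited and stalls the entire event loop while it runs. Use an asyncio-aware equivalent instead (for example, asyncio.sleep()), or move the call to a worker thread with asyncio.to_thread() (Python 3.9+).

Use async def: Define your coroutines with the async def syntax, and return a value from them if you want to use their result. Awaiting the coroutine, or collecting it with asyncio.gather(), hands that value back to the caller.

Handle Errors: Wrap awaits in try/except blocks to handle exceptions raised by your coroutines. By default, asyncio.gather() propagates the first exception it encounters. Pass return_exceptions=True to receive exceptions as results instead.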
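The sketch below applies these practices using two hypothetical functions. blocking_io() stands in for a synchronous library call and is offloaded with asyncio.to_thread(), which requires Python 3.9 or later. might_fail() raises an exception for negative input, so the two error-handling styles can be compared.

```python
import asyncio
import time

def blocking_io(delay):
    # Hypothetical synchronous call; calling time.sleep() directly would freeze the event loop
    time.sleep(delay)
    return delay

async def might_fail(x):
    # Hypothetical coroutine that fails for negative input
    await asyncio.sleep(0.01)
    if x < 0:
        raise ValueError(f"negative input: {x}")
    return x * 2

async def main():
    # Run the blocking function in a worker thread so the event loop stays responsive
    offloaded = await asyncio.to_thread(blocking_io, 0.1)

    # return_exceptions=True delivers exceptions as ordinary results
    results = await asyncio.gather(
        might_fail(1), might_fail(-1), might_fail(3), return_exceptions=True
    )
    outcomes = [f"error: {r}" if isinstance(r, Exception) else r for r in results]

    # try/except around a single await
    try:
        await might_fail(-5)
    except ValueError as exc:
        outcomes.append(f"caught: {exc}")
    return offloaded, outcomes

if __name__ == "__main__":
    print(asyncio.run(main()))
```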

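By following these guidelines and using the features asyncio provides, you can write efficient concurrent Python code that keeps a single thread doing useful work instead of idling while it waits on I/O. Spreading CPU-bound work across multiple cores requires processes instead, for example through concurrent.futures.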

Python concurrent futures

Here's an overview of Python concurrent futures:

Python provides a convenient way to handle concurrency using the concurrent.futures module, which was introduced in Python 3.2. This module allows you to easily parallelize and execute asynchronous tasks.

What is concurrent.futures?

The concurrent.futures module is a high-level interface for asynchronously executing callables. It provides a way to schedule the execution of multiple tasks concurrently and collect their results.
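As a small illustration of scheduling callables and collecting their results, here is a sketch using ThreadPoolExecutor.map() with a hypothetical square() function. map() returns results in input order.

```python
from concurrent.futures import ThreadPoolExecutor

def square(n):
    # Hypothetical task used only for illustration
    return n * n

with ThreadPoolExecutor(max_workers=4) as executor:
    # map() schedules square() for every input and yields results in input order
    results = list(executor.map(square, range(6)))

print(results)  # [0, 1, 4, 9, 16, 25]
```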

When should I use concurrent.futures?

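You should consider using concurrent.futures in situations where you have any of the following:

Multiple independent tasks: If you have several tasks that don't depend on each other's results, concurrent.futures can help you execute them concurrently.

I/O-bound operations: If your tasks involve I/O operations (e.g., reading or writing files, making network requests), a ThreadPoolExecutor can overlap the waiting time of many tasks. This works because threads release the GIL while they are blocked on I/O.

CPU-bound operations: If your tasks are CPU-bound and you want to take advantage of multi-core processors, use a ProcessPoolExecutor. In the standard CPython build, the global interpreter lock (GIL) prevents threads from running Python bytecode in parallel, so only separate processes give CPU-bound Python code a real speedup.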
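Here is a minimal sketch of the I/O-bound case. fake_download() is a hypothetical stand-in that sleeps for 0.2 seconds instead of making a real request; time.sleep(), like blocking I/O, releases the GIL. With 8 threads, the 8 calls overlap and finish in roughly 0.2 seconds rather than 1.6. The example.com URLs are placeholders and are never actually fetched.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fake_download(url):
    # Hypothetical stand-in for a network request
    time.sleep(0.2)
    return len(url)

urls = [f"https://example.com/page/{i}" for i in range(8)]

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=8) as executor:
    sizes = list(executor.map(fake_download, urls))
elapsed = time.perf_counter() - start

print(sizes)
print(f"elapsed: {elapsed:.2f}s")
```

For CPU-bound work, the same pattern can use ProcessPoolExecutor instead. Two conditions apply: the function must be defined at module level so it can be pickled, and the pool must be created under an `if __name__ == "__main__":` guard.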

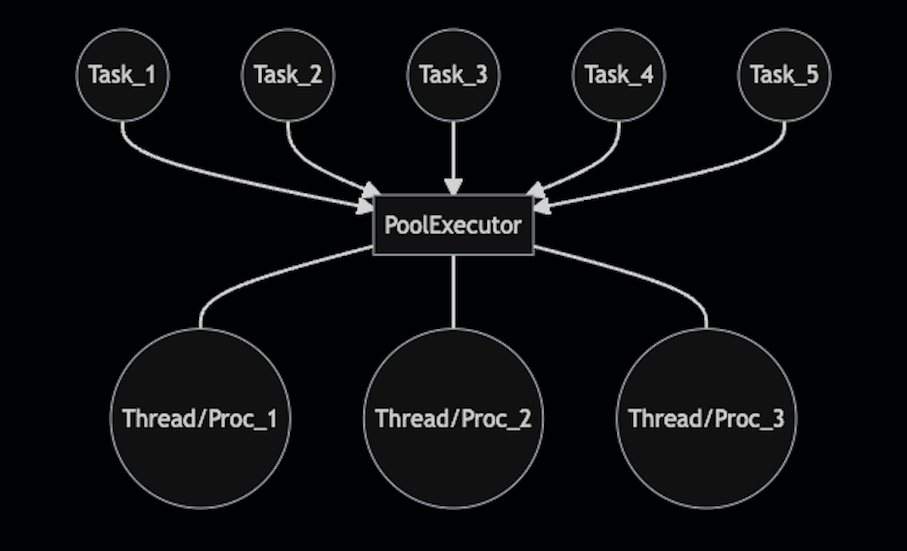

How does concurrent.futures work?

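The concurrent.futures module provides two main classes:

ThreadPoolExecutor: executes submitted calls using a pool of worker threads.

ProcessPoolExecutor: works like ThreadPoolExecutor, but it uses processes instead of threads.

Both are subclasses of concurrent.futures.Executor and share its interface (submit(), map(), shutdown()).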

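To use concurrent.futures, you create an instance of one of these classes and then submit tasks (functions) for execution using its submit() method. submit() returns immediately with a Future object that represents the pending call, and the task then executes asynchronously. future.done() reports, without blocking, whether the task has finished. future.result() blocks until the task completes, then returns its value or re-raises its exception. To process results as they finish, iterate over concurrent.futures.as_completed(); to apply one function to many inputs, use executor.map().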
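Here is a sketch of this submit()/Future workflow, using a hypothetical slow_double() task whose running time grows with its input. as_completed() yields futures in the order they finish. Here that order typically differs from the order in which they were submitted.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed

def slow_double(x):
    # Hypothetical task: larger inputs take longer
    time.sleep(0.2 * x)
    return x * 2

with ThreadPoolExecutor(max_workers=3) as executor:
    # submit() returns a Future immediately; map each Future back to its input
    futures = {executor.submit(slow_double, x): x for x in (3, 1, 2)}

    finished_order = []
    for future in as_completed(futures):
        # result() would block if the task were still running; here it is already done
        finished_order.append((futures[future], future.result()))

# After the with block, every Future reports done() == True
all_done = all(f.done() for f in futures)
print(finished_order)
print(all_done)
```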

Here's a simple example:

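```python
import concurrent.futures
import time

def task(x):
    print(f"Task {x} started")
    time.sleep(2)  # simulate a blocking I/O operation
    print(f"Task {x} finished")

# At most 3 tasks run at the same time; the other 2 wait in the executor's queue
with concurrent.futures.ThreadPoolExecutor(max_workers=3) as executor:
    for i in range(5):
        executor.submit(task, i)

# Leaving the with block waits for all submitted tasks to finish
print("Main thread finished")
```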

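In this example, we create a ThreadPoolExecutor with 3 worker threads and submit 5 tasks to it. At most 3 tasks run at once. Tasks 0 to 2 start immediately, and tasks 3 and 4 start as workers become free, so the whole batch takes about 4 seconds. The exact order of the messages may vary between runs. Exiting the with block calls executor.shutdown(wait=True), which waits for all tasks to finish, so "Main thread finished" is printed last.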
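The effect of max_workers can be seen by measuring the same setup. To keep this sketch quick, the sleep is scaled down from 2 seconds to 0.2. With 3 workers, the 5 tasks run in two waves, so the total is roughly 0.4 seconds, rather than 0.2 (all parallel) or 1.0 (fully sequential).

```python
import time
from concurrent.futures import ThreadPoolExecutor

def task(x):
    time.sleep(0.2)  # scaled down from the 2-second sleep in the example above
    return x

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=3) as executor:
    futures = [executor.submit(task, i) for i in range(5)]
# Exiting the with block has called executor.shutdown(wait=True)
elapsed = time.perf_counter() - start

print([f.result() for f in futures])  # [0, 1, 2, 3, 4]
print(f"elapsed: {elapsed:.2f}s")
```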

Benefits of using concurrent.futures

Using concurrent.futures can bring several benefits:

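Simplicity: concurrent.futures provides a high-level interface that simplifies managing concurrency in your code. You submit callables and get Futures back, without managing threads, processes, or queues yourself.

Easier debugging: An exception raised inside a task is stored in its Future. Calling result() re-raises it, and exception() returns it, so errors surface in the calling thread instead of disappearing inside a worker. If you never call either method, as in the example above, such errors go unnoticed. Combined with log messages or the logging module, this makes concurrent code easier to trace.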
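Here is a minimal sketch of how errors surface, using a hypothetical parse_int() task that fails on non-numeric input. The worker's exception is kept in the Future, where exception() returns it and result() would re-raise it.

```python
from concurrent.futures import ThreadPoolExecutor

def parse_int(text):
    # Hypothetical task: int() raises ValueError for non-numeric text
    return int(text)

with ThreadPoolExecutor(max_workers=2) as executor:
    futures = [executor.submit(parse_int, s) for s in ("1", "two", "3")]

outcomes = []
for future in futures:
    exc = future.exception()  # None if the call succeeded
    if exc is None:
        outcomes.append(future.result())
    else:
        outcomes.append(f"{type(exc).__name__}: {exc}")

print(outcomes)
# [1, "ValueError: invalid literal for int() with base 10: 'two'", 3]
```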

Overall, concurrent.futures is a powerful and convenient way to handle concurrency in Python, making it easier for developers to write efficient and scalable programs.