Python ThreadPoolExecutor rate limit

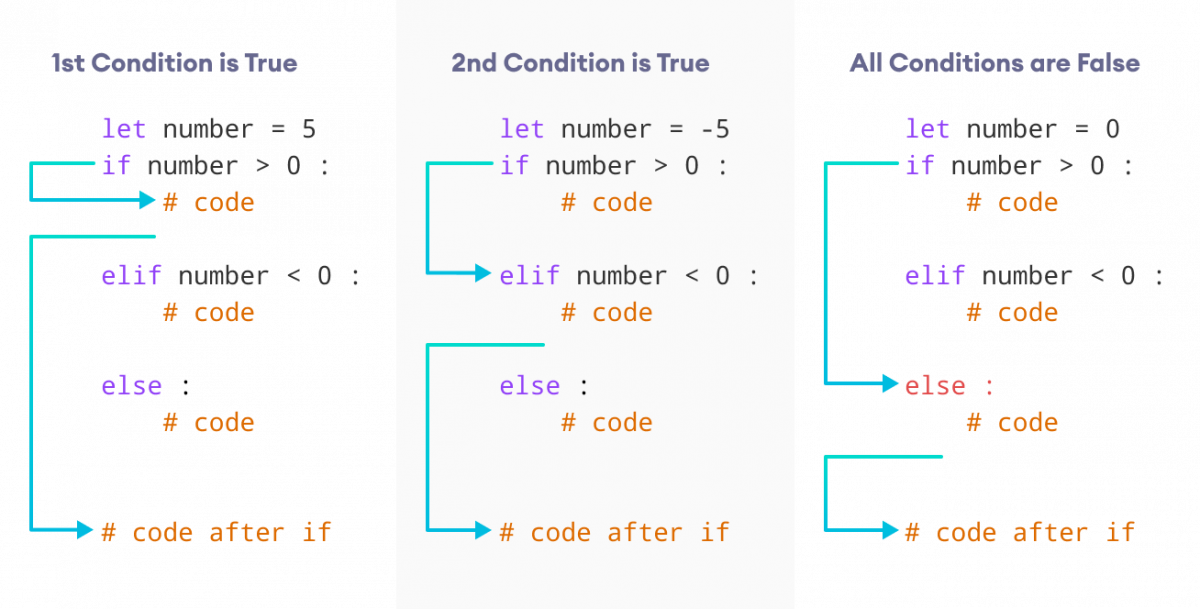

In Python, ThreadPoolExecutor is a powerful tool for managing and executing concurrent tasks efficiently. However, it's essential to understand its limitations, particularly when dealing with rate-limited operations.

The default behavior of ThreadPoolExecutor is to create a pool of worker threads that execute tasks concurrently. While this approach provides excellent performance benefits, it doesn't inherently enforce any rate limits or throttling on task execution.

The max_workers parameter sets the maximum number of worker threads in the pool. That caps how many tasks run at the same time, but not how many tasks start per second. ThreadPoolExecutor also has no built-in hook or callback for throttling, so rate limiting logic has to be added around it, for example in a subclass that controls how quickly tasks are handed to the pool.
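To make the difference between concurrency and rate concrete, here is a minimal sketch that measures the peak number of tasks running at once. The ConcurrencyProbe class and the measure function are hypothetical helpers written for this illustration; they are not part of the standard library.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class ConcurrencyProbe:
    """Hypothetical helper that records how many tasks run at the same time."""

    def __init__(self):
        self._lock = threading.Lock()
        self.running = 0  # tasks executing right now
        self.peak = 0     # highest value `running` has reached

    def task(self, duration=0.05):
        with self._lock:
            self.running += 1
            self.peak = max(self.peak, self.running)
        time.sleep(duration)  # stand-in for real work
        with self._lock:
            self.running -= 1


def measure(max_workers, n_tasks):
    """Submit n_tasks at once and return (peak concurrency, elapsed seconds)."""
    probe = ConcurrencyProbe()
    start = time.monotonic()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for _ in range(n_tasks):
            # submit() returns immediately; nothing throttles this loop.
            pool.submit(probe.task)
    return probe.peak, time.monotonic() - start


peak, elapsed = measure(max_workers=4, n_tasks=20)
print(f"peak concurrency: {peak}, elapsed: {elapsed:.2f}s")
```

The peak never exceeds max_workers, yet all 20 tasks are accepted instantly and start as soon as a worker is free. If each task were an API call, the request rate would depend only on how long each call takes.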

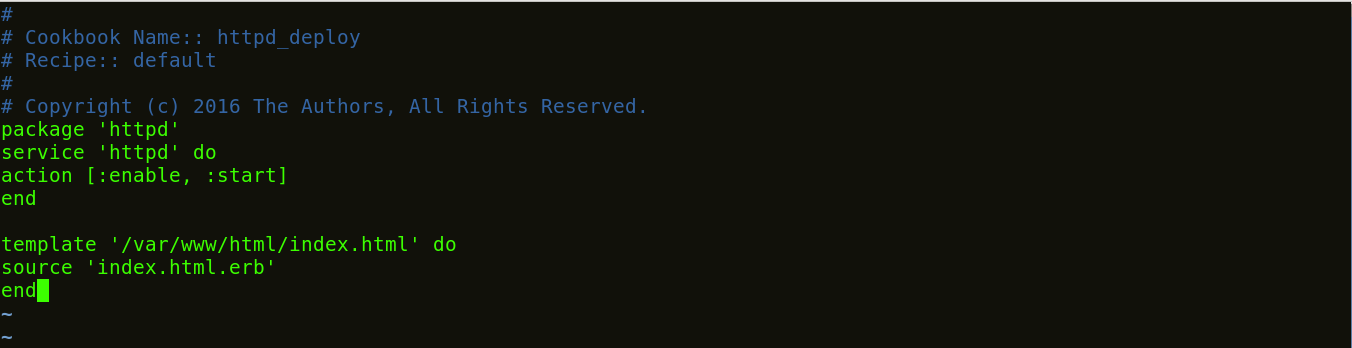

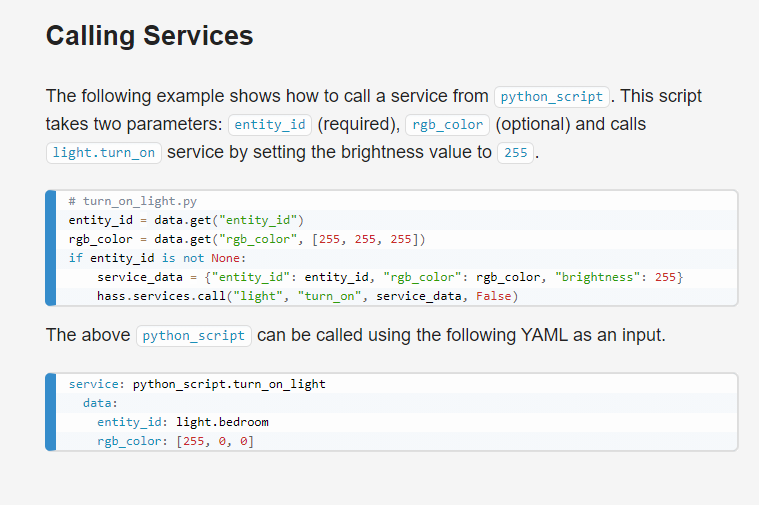

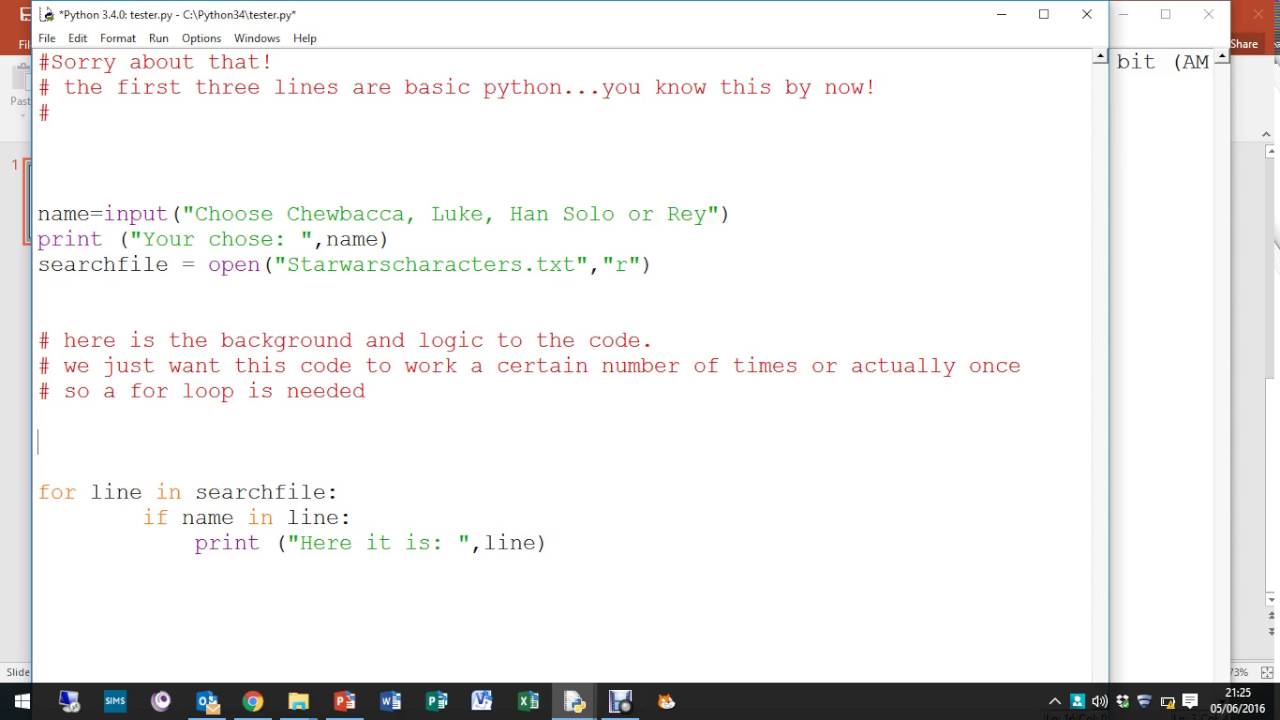

Here's an example of one way to do it:

    import concurrent.futures
    import threading
    import time


    class RateLimitedThreadPoolExecutor(concurrent.futures.ThreadPoolExecutor):
        """ThreadPoolExecutor that accepts at most rate_limit submissions per second."""

        def __init__(self, max_workers, rate_limit=100):
            super().__init__(max_workers=max_workers)
            self.rate_limit = rate_limit
            self._interval = 1.0 / rate_limit  # minimum gap between submissions
            self._rate_lock = threading.Lock()
            self._next_slot = time.monotonic()

        def submit(self, fn, /, *args, **kwargs):
            # Enforce rate limiting: reserve the next free slot, then wait for it.
            with self._rate_lock:
                now = time.monotonic()
                wait = self._next_slot - now
                self._next_slot = max(now, self._next_slot) + self._interval
            if wait > 0:
                time.sleep(wait)
            return super().submit(fn, *args, **kwargs)


    def my_task():
        # Simulate a task that takes some time
        time.sleep(1)
        return "Task completed!"


    with RateLimitedThreadPoolExecutor(max_workers=5, rate_limit=3) as executor:
        futures = [executor.submit(my_task) for _ in range(10)]
        for future in concurrent.futures.as_completed(futures):
            print(future.result())

In this example, we create a custom ThreadPoolExecutor subclass that enforces a rate limit of 3 task submissions per second (the default is 100 per second). The overridden submit method spaces submissions at least 1 / rate_limit seconds apart: when a caller submits too early, it blocks until the next slot is free. The max_workers setting still caps how many tasks run at the same time.

When executing tasks using this custom executor, you'll see that the tasks are executed at a controlled rate, without overwhelming the system with an excessive number of concurrent requests. This is particularly useful when performing network I/O-bound operations or making API calls that require careful throttling.

Keep in mind that implementing rate limiting can be more complex if you have specific requirements, such as handling backpressure or adjusting the limit dynamically based on workload. However, this example provides a solid foundation for understanding how to achieve rate limiting with Python's ThreadPoolExecutor.
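Backpressure, mentioned above, can be sketched with a semaphore that bounds how many tasks are queued or running at once. This is a minimal illustration under stated assumptions, not a definitive implementation: BoundedExecutor and its max_pending parameter are hypothetical names, and the wrapper only exposes submit and shutdown.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class BoundedExecutor:
    """Hypothetical wrapper: submit() blocks while max_pending tasks are in flight."""

    def __init__(self, max_workers, max_pending):
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._slots = threading.BoundedSemaphore(max_pending)

    def submit(self, fn, /, *args, **kwargs):
        # Blocks the producer when the pool is saturated: this is the backpressure.
        self._slots.acquire()
        try:
            future = self._pool.submit(fn, *args, **kwargs)
        except BaseException:
            self._slots.release()
            raise
        # Free the slot once the task finishes, fails, or is cancelled.
        future.add_done_callback(lambda _future: self._slots.release())
        return future

    def shutdown(self, wait=True):
        self._pool.shutdown(wait=wait)


executor = BoundedExecutor(max_workers=2, max_pending=3)
futures = [executor.submit(pow, 2, exponent) for exponent in range(10)]
print([future.result() for future in futures])
executor.shutdown()
```

Unlike a time-based limit, this bounds memory use and queue length: a fast producer is slowed to the pace at which workers finish tasks.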

Python FastAPI rate limiting

What is Rate Limiting?

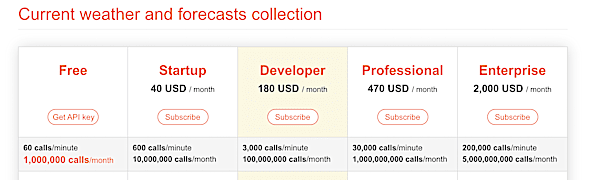

Rate limiting, also known as request throttling or traffic control, is a technique used to regulate the number of requests that can be made to an API or service within a certain time period. This helps prevent abuse and ensures that your resources are not overwhelmed by a large volume of requests.
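The idea of "a number of requests within a certain time period" can be sketched as a fixed-window counter. The FixedWindowLimiter class below is a hypothetical illustration; its clock parameter is an assumption added so the behavior can be checked without waiting for real time to pass.

```python
import time


class FixedWindowLimiter:
    """Allow at most `limit` requests in each `window`-second period."""

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self._clock = clock
        self._window_start = clock()
        self._count = 0

    def allow(self):
        now = self._clock()
        if now - self._window_start >= self.window:
            # A new window has begun: reset the counter.
            self._window_start = now
            self._count = 0
        if self._count < self.limit:
            self._count += 1
            return True
        return False


limiter = FixedWindowLimiter(limit=2, window=60.0)
print([limiter.allow() for _ in range(3)])
```

A fixed window is simple and cheap, but it permits short bursts of up to twice the limit around a window boundary, which is one reason other strategies exist.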

Why Do I Need Rate Limiting?

Public APIs and services are often targeted by malicious actors who try to exploit them. Without rate limiting, an attacker can make an unlimited number of requests to your API, degrading performance, slowing down your server, or even taking it down.

Rate limiting also helps you manage traffic surges during peak usage periods, such as during a product launch or promotion. By controlling the flow of requests, you can prevent your service from becoming overwhelmed and ensure that legitimate users have access to your resources when they need them.

How Does Rate Limiting Work in FastAPI?

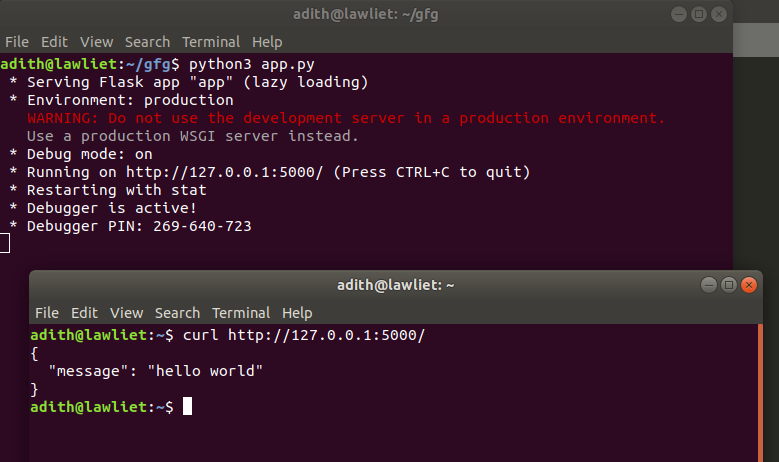

FastAPI is a modern web framework for building APIs with Python. FastAPI does not include a built-in rate limiter, so you implement rate limiting yourself, typically in the route handler, a dependency, or a middleware, or you add a third-party package such as slowapi. Either approach fits alongside FastAPI's routing and authentication features.

Here's an example of how you might implement rate limiting using FastAPI:

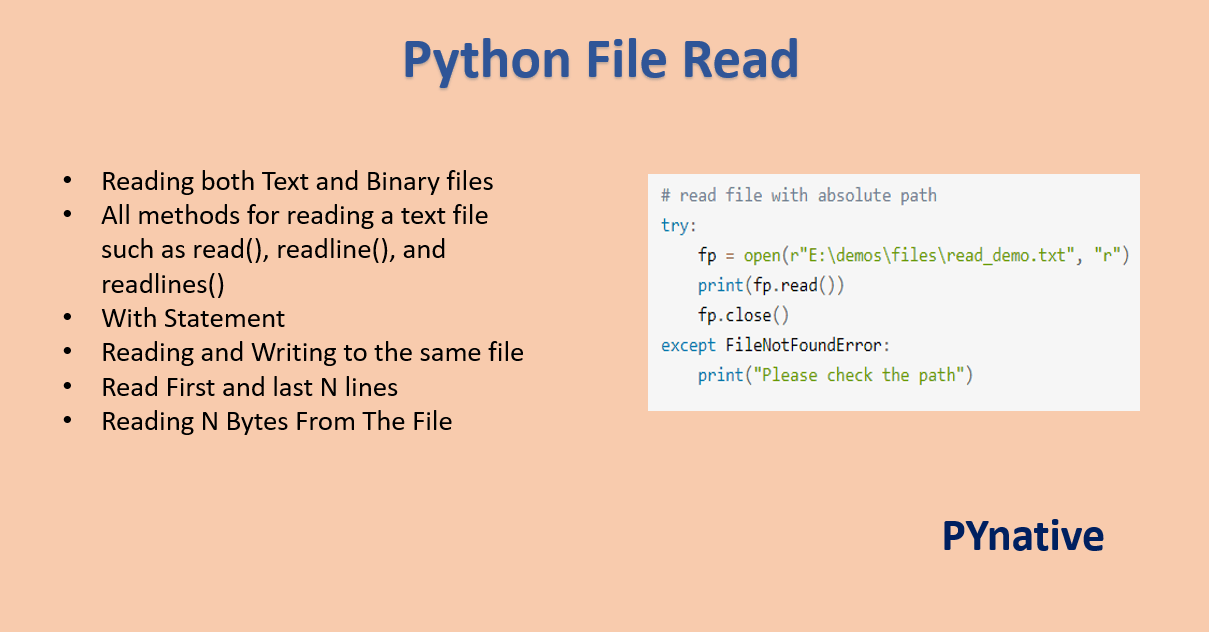

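    from fastapi import FastAPI, Request, Response

    app = FastAPI()


    async def check_rate_limit(request: Request) -> bool:
        # Placeholder: swap in a real strategy. This version allows every request.
        return True


    @app.get("/items/{item_id}")
    async def get_item(item_id: int, request: Request):
        # Check if the request is within the allowed rate limit
        if not await check_rate_limit(request):
            return Response(status_code=429)
        # Handle the request
        ...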

In this example, the check_rate_limit function reports whether the incoming request is within the allowed rate limit. If it is not, the route handler returns a 429 "Too Many Requests" response.

You can implement the check_rate_limit function using various rate limiting strategies, such as a fixed window counter, a sliding window, or a token bucket.
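One such strategy, a per-client sliding window, might look like the sketch below. It uses only the standard library; SlidingWindowLimiter, its check method, and the clock parameter are hypothetical names chosen for this illustration. The returned retry_after value is an assumption about how you might tell clients when to try again, for example through a Retry-After header on the 429 response.

```python
import math
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Per-client log: at most `limit` requests in any `window`-second span."""

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self._clock = clock
        # client key -> timestamps of that client's accepted requests
        self._hits = defaultdict(deque)

    def check(self, key):
        """Return (allowed, retry_after_seconds) for the client `key`."""
        now = self._clock()
        hits = self._hits[key]
        # Drop timestamps that have slid out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) < self.limit:
            hits.append(now)
            return True, 0
        # The oldest accepted request leaves the window at hits[0] + window.
        retry_after = math.ceil(hits[0] + self.window - now)
        return False, max(retry_after, 1)


limiter = SlidingWindowLimiter(limit=2, window=10.0)
for _ in range(3):
    print(limiter.check("client-a"))
```

Inside check_rate_limit, the key could be the client's address or an API key taken from the request. This sketch keeps state in process memory and is not thread-safe, so a deployment with several worker processes would need a shared store instead.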

Additional Tips for Effective Rate Limiting

Make it user-friendly: Display an error message or provide guidance to users who hit the rate limit, so they know what's happening.

Adjust rate limits dynamically: Based on traffic patterns, adjust your rate limits in real-time to ensure you're not overwhelming your resources.

Monitor and analyze usage: Keep track of user behavior, including their request rates and patterns, to identify potential abuse or misbehavior.

Conclusion

In this article, we explored the importance of rate limiting for FastAPI APIs and how you can implement it using Python. By controlling the flow of requests, you can prevent performance issues, slow down attacks, and help keep your service available when users need it most.

Happy coding!