Python data preprocessing pdf


I'd be happy to provide you with a comprehensive guide on Python data preprocessing!

Introduction

Data preprocessing is an essential step in the machine learning pipeline that involves cleaning, transforming, and preparing your data for modeling. This process can greatly impact the performance of your models by reducing noise, handling missing values, and enhancing feature quality. In this document, we'll explore various techniques for preprocessing data using Python.

Step 1: Data Loading

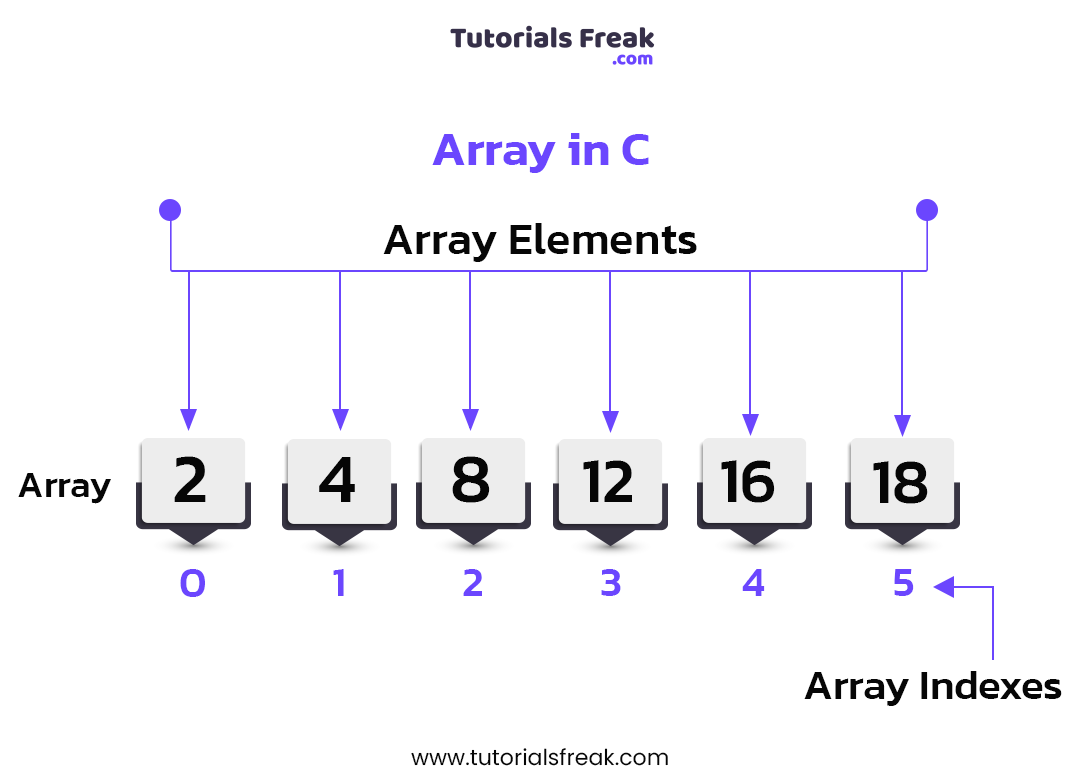

Before you start preprocessing, you need to load your data into a pandas DataFrame or NumPy array. For example:

import pandas as pd

# Load the dataset from a CSV file
df = pd.read_csv('data.csv')
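To try the loading step without a file on disk, the sketch below feeds a small in-memory CSV to pd.read_csv and then converts the numeric columns to a NumPy array with DataFrame.to_numpy(). The column names feature1, feature2, and category and the CSV contents are hypothetical stand-ins for 'data.csv'.

```python
import io

import pandas as pd

# Hypothetical CSV contents standing in for 'data.csv'
csv_text = """feature1,feature2,category
1.0,2.5,a
2.0,,b
3.0,4.5,a
"""

# read_csv accepts any file-like object, so StringIO behaves like a file on disk
df = pd.read_csv(io.StringIO(csv_text))

# Inspect column types; the empty cell is read as NaN in a float column
print(df.dtypes)

# Convert the numeric columns to a NumPy array for code that expects arrays
X = df[["feature1", "feature2"]].to_numpy()
print(X.shape)
```

Printing the dtypes right after loading is a cheap way to spot columns that were parsed as text when you expected numbers.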

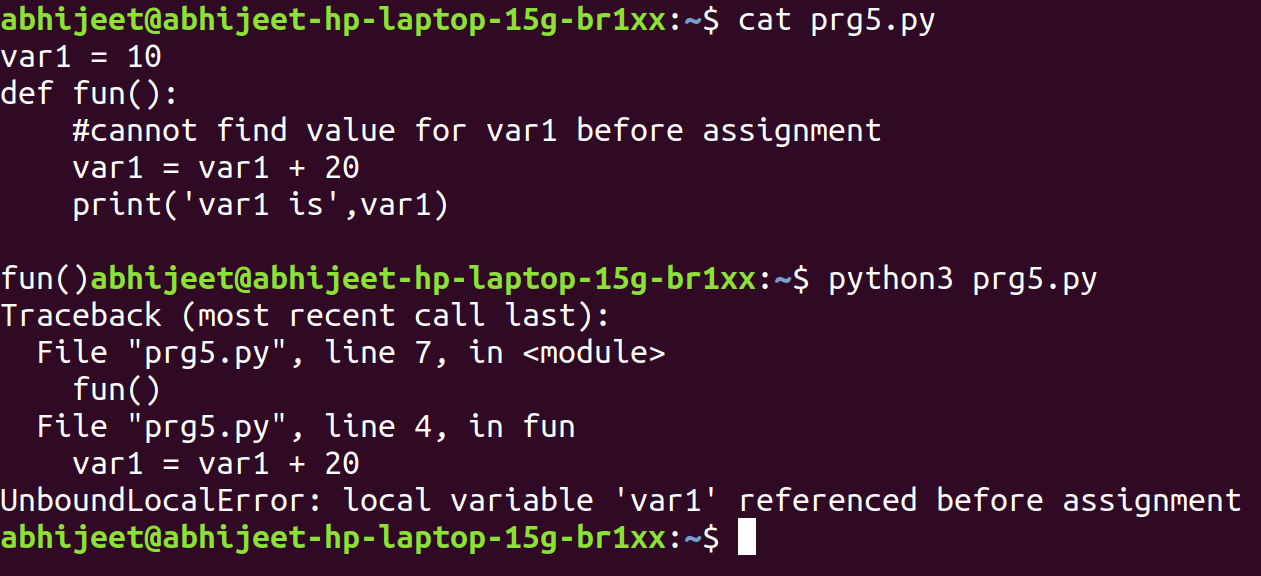

Step 2: Handling Missing Values

Missing values can cause issues during modeling and reduce the accuracy of your predictions. There are several ways to handle missing values, including:

- Imputation: Replace missing values with the mean or median of the corresponding column.
- Drop rows: Remove entire rows that contain missing values.
- Fill with a constant: Use a specific value (e.g., 0) to fill missing values.

Here's an example using pandas' fillna() and dropna() methods:

# Impute missing values in numeric columns with the column mean
df.fillna(df.mean(numeric_only=True), inplace=True)

# Or drop rows that contain missing values instead
df.dropna(inplace=True)
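The three strategies listed above can be compared side by side on a tiny table. This is a minimal sketch on a hypothetical DataFrame (columns age, income, city); it uses the median rather than the mean for imputation because the median is less sensitive to extreme values.

```python
import numpy as np
import pandas as pd

# Small hypothetical dataset with gaps in numeric and text columns
df = pd.DataFrame({
    "age": [25.0, np.nan, 35.0, 40.0],
    "income": [50.0, 60.0, np.nan, 80.0],
    "city": ["Oslo", None, "Rome", "Oslo"],
})

# 1) Imputation: fill numeric gaps with each column's median
num_cols = ["age", "income"]
imputed = df.copy()
imputed[num_cols] = imputed[num_cols].fillna(imputed[num_cols].median())

# 2) Drop rows: keep only rows with no missing values at all
dropped = df.dropna()

# 3) Constant fill: a fixed placeholder per column
filled = df.fillna({"age": 0, "income": 0, "city": "unknown"})

print(imputed, dropped, filled, sep="\n\n")
```

Dropping rows is the simplest option but discards the other values in those rows; here it keeps only 2 of the 4 rows.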

Step 3: Data Transformation

Data transformation involves changing the scale, shape, or type of your data to better suit modeling. Techniques include:

- Scaling: Scale numeric features using standardization (z-score) or min-max normalization.
- Encoding: Convert categorical variables into numerical representations using one-hot encoding, label encoding, or binary encoding.
- Aggregating: Combine multiple rows into a single row using aggregate functions such as mean, sum, or count.

Here's an example using scikit-learn's StandardScaler class:

from sklearn.preprocessing import StandardScaler

# Standardize numeric features to zero mean and unit variance
scaler = StandardScaler()

df[['feature1', 'feature2']] = scaler.fit_transform(df[['feature1', 'feature2']])
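The list above also names min-max normalization and aggregation. The sketch below shows both next to standardization on a hypothetical store/sales table, using scikit-learn's MinMaxScaler and pandas' groupby().agg(); the column names are illustrative only.

```python
import pandas as pd
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical numeric data with one grouping column
df = pd.DataFrame({
    "store": ["A", "A", "B", "B"],
    "sales": [10.0, 20.0, 30.0, 40.0],
})

# Standardization: z = (x - mean) / std, with the population std (ddof=0)
z = StandardScaler().fit_transform(df[["sales"]])

# Min-max normalization: x' = (x - min) / (max - min), mapping values into [0, 1]
mm = MinMaxScaler().fit_transform(df[["sales"]])

# Aggregation: collapse the rows of each store into summary statistics
summary = df.groupby("store")["sales"].agg(["mean", "sum", "count"])
print(summary)
```

Standardization keeps the shape of the distribution but centers it; min-max normalization bounds values to a fixed range, which makes it more sensitive to a single extreme value.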

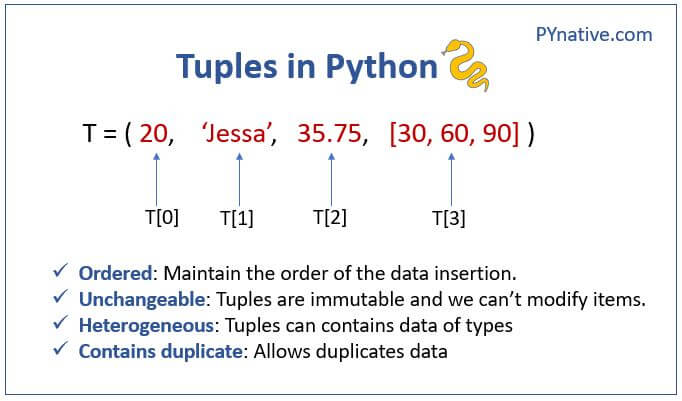

Step 4: Handling Categorical Variables

Categorical variables require special attention, because most machine learning models expect numeric input and cannot use text labels directly. Techniques for handling categorical variables include:

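- One-hot encoding: Turn each category into its own 0/1 indicator column, for example with get_dummies() from pandas.
- Label encoding: Assign an integer label to each category. Because the integers imply an order that may not exist, this suits ordinal categories best.
- Binary encoding: Assign each category an integer code and write that code in base 2, one column per bit, so n categories need only about log2(n) columns.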

Here's an example using pandas' get_dummies() function:

# One-hot encode the categorical column
df = pd.get_dummies(df, columns=['category'])
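Label encoding and binary encoding can be sketched with pandas and NumPy alone. Binary encoding is not built into pandas or core scikit-learn (third-party packages such as category_encoders provide one), so the bit-splitting loop below is a hand-rolled illustration; the color column and the color_* output names are hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical categorical column
df = pd.DataFrame({"color": ["red", "green", "blue", "green", "yellow"]})

# Label encoding: pandas assigns codes in sorted category order
# (blue=0, green=1, red=2, yellow=3)
df["color_label"] = df["color"].astype("category").cat.codes

# Binary encoding: write each integer code in base 2, one column per bit,
# so n categories need ceil(log2(n)) columns (at least one)
n_bits = max(1, int(np.ceil(np.log2(df["color"].nunique()))))
codes = df["color_label"].to_numpy()
for bit in range(n_bits):
    # Extract one bit of each code, most significant bit first
    df[f"color_bin_{bit}"] = (codes >> (n_bits - 1 - bit)) & 1

print(df)
```

With four colors, binary encoding needs two columns where one-hot encoding would need four; the savings grow with the number of categories.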

Step 5: Feature Selection

Feature selection involves identifying the most relevant features that contribute to your model's performance. Techniques include:

- Correlation analysis: Analyze correlations between features (and between each feature and the target) using corr() from pandas.
- Mutual information: Calculate the mutual information between each feature and the target variable.
- Recursive feature elimination (RFE): Repeatedly fit a model and remove the least important feature(s) until the desired number of features remains.

Here's an example using scikit-learn's SelectKBest class:

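from sklearn.feature_selection import SelectKBest, f_classif

# Separate the features from the target so the target is not selected as a feature
X = df.drop(columns=['target'])
y = df['target']

# Select the top k features by ANOVA F-score (f_classif)
selector = SelectKBest(f_classif, k=5)
df_selected_features = selector.fit_transform(X, y)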
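The other techniques in the list (correlation analysis, mutual information, and RFE) can be sketched on the Iris dataset, which here stands in for "your data". Assumptions: k=2 features are kept, LogisticRegression is the model RFE refits, and Pearson correlation with integer class codes is used only as a rough screening heuristic.

```python
from functools import partial

from sklearn.datasets import load_iris
from sklearn.feature_selection import RFE, SelectKBest, mutual_info_classif
from sklearn.linear_model import LogisticRegression

# Iris: 4 numeric features plus an integer class target
iris = load_iris(as_frame=True)
X, y = iris.data, iris.target

# Correlation analysis: absolute Pearson correlation of each feature with the target
corr_with_target = X.corrwith(y).abs().sort_values(ascending=False)
print(corr_with_target)

# Mutual information scores inside SelectKBest
# (random_state is fixed via partial so the estimate is reproducible)
mi_selector = SelectKBest(partial(mutual_info_classif, random_state=0), k=2)
X_mi = mi_selector.fit_transform(X, y)
print("MI picks:", list(X.columns[mi_selector.get_support()]))

# RFE: refit the model and drop the weakest feature each round
rfe = RFE(LogisticRegression(max_iter=1000), n_features_to_select=2)
rfe.fit(X, y)
print("RFE picks:", list(X.columns[rfe.support_]))
```

Mutual information can capture non-linear relationships that a correlation coefficient misses, while RFE ranks features by how much a specific model relies on them, so the methods can disagree.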

Conclusion

Data preprocessing is a crucial step in machine learning that can greatly impact model performance. By following these steps and techniques, you can effectively clean, transform, and prepare your data for modeling. Remember to choose the right preprocessing techniques based on your specific dataset and problem.

Additional Resources

- pandas documentation
- scikit-learn documentation

I hope this comprehensive guide helps you in your data preprocessing journey!

Python data preprocessing tutorial

I apologize for the mistake earlier! Here is a comprehensive Python data preprocessing tutorial:

Introduction

Data preprocessing is an essential step in any machine learning or data analysis workflow. It involves cleaning, transforming, and preparing your dataset for model training or analysis. In this tutorial, we will go over some of the most common techniques used in data preprocessing using Python.

Importing Libraries

We will be using the following libraries:

- pandas (pd) for data manipulation
- numpy (np) for numerical operations
- matplotlib and seaborn for visualization

We'll also import parts of scikit-learn and SciPy later, where they are needed.

Here's how to import them:

import pandas as pd
import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

Loading Data

Let's load the famous Iris dataset, a classic benchmark for supervised classification. Since it ships with scikit-learn, we can load it with load_iris() instead of reading a CSV file, and then wrap it in a pandas DataFrame:

from sklearn.datasets import load_iris

iris_data = load_iris()

df = pd.DataFrame(data=iris_data.data, columns=iris_data.feature_names)

df['target'] = iris_data.target
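As a shortcut, load_iris() also accepts as_frame=True, in which case its frame attribute already holds the features plus a target column. A minimal sketch:

```python
from sklearn.datasets import load_iris

# as_frame=True returns pandas objects; .frame holds the features plus 'target'
iris_data = load_iris(as_frame=True)
df = iris_data.frame

print(df.shape)
print(iris_data.target_names)  # class names for target codes 0, 1, 2
```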

Data Inspection

Before preprocessing, let's take a look at our data to understand its structure and distribution:

print(df.head())      # print the first few rows of the DataFrame
print(df.describe())  # print summary statistics for each column

sns.pairplot(df) # visualize the relationships between features
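Beyond head() and describe(), a few one-line checks tell you which cleaning steps are needed at all: column types, missing values per column, exact duplicate rows, and class balance. A minimal sketch on the same DataFrame:

```python
import pandas as pd
from sklearn.datasets import load_iris

iris_data = load_iris()
df = pd.DataFrame(data=iris_data.data, columns=iris_data.feature_names)
df["target"] = iris_data.target

# Column names, dtypes, and non-null counts in one summary
df.info()

# Missing values per column
missing_per_column = df.isna().sum()

# Number of rows that exactly repeat an earlier row
n_duplicates = df.duplicated().sum()

# Class balance of the target
class_counts = df["target"].value_counts().sort_index()
print(missing_per_column, n_duplicates, class_counts, sep="\n\n")
```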

Data Cleaning

Now, let's clean up some common issues in our data:

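Handling Missing Values: The Iris dataset doesn't have missing values, but we can simulate them by overwriting an existing row with NaN values, and then handle them by dropping the incomplete row (imputation would also work):

df.loc[50, :] = np.nan  # overwrite row 50 with NaN values to simulate missing data
df = df.dropna()        # drop rows that contain missing values

Removing Duplicates: Check for exact duplicate rows and drop any that are found:

print(df.duplicated().sum())  # number of rows that repeat an earlier row
df = df.drop_duplicates()

Handling Outliers: We'll use the zscore function from scipy.stats to flag outliers and keep only the rows whose feature values all lie within 2 standard deviations of the column mean:

from scipy import stats

def remove_outliers(df):
    # Absolute z-scores of the feature columns (the target holds class labels)
    z_scores = np.abs(stats.zscore(df.drop(columns=['target'])))
    return df[(z_scores < 2).all(axis=1)]  # keep rows where every |z| < 2

df_no_outliers = remove_outliers(df)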
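The z-score rule depends on the mean and standard deviation, which an extreme value itself inflates. A common alternative (a different technique, not the one used above) is the interquartile-range rule, also known as Tukey's fences. A minimal sketch for a single column, with hypothetical measurements and the conventional multiplier k=1.5:

```python
import pandas as pd


def iqr_filter(s: pd.Series, k: float = 1.5) -> pd.Series:
    """Keep values inside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, q3 = s.quantile(0.25), s.quantile(0.75)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return s[(s >= lower) & (s <= upper)]


# Hypothetical measurements with one extreme value
values = pd.Series([10, 12, 11, 13, 12, 100])
print(iqr_filter(values).tolist())  # → [10, 12, 11, 13, 12]
```

Because quartiles barely move when one value becomes extreme, the fences here stay at 9.0 and 15.0 and only the value 100 is removed.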

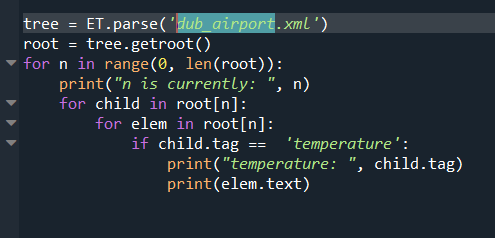

Data Transformation

Next, we'll perform some common transformations to improve our data:

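Scaling: We can use the StandardScaler from sklearn.preprocessing to scale the feature columns. The target column is left out because it holds class labels, not measurements:

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()
df_scaled = df.copy()
df_scaled[iris_data.feature_names] = scaler.fit_transform(df[iris_data.feature_names])

Encoding Categorical Variables: The "target" column is categorical, so we'll encode it using the LabelEncoder from sklearn.preprocessing. Note that load_iris() already stores the target as integer codes (0, 1, 2), so here this step mainly illustrates the API; it matters when the labels are strings such as species names:

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()
df['target'] = le.fit_transform(df['target'])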
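To see what these two transformers actually produce, the sketch below runs LabelEncoder on hypothetical string labels and checks that StandardScaler output has per-column mean 0 and standard deviation 1 (population std, ddof=0) on a small made-up array.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder, StandardScaler

# LabelEncoder on string labels: classes_ are the sorted unique labels
le = LabelEncoder()
codes = le.fit_transform(["versicolor", "setosa", "virginica", "setosa"])
print(le.classes_)  # ['setosa' 'versicolor' 'virginica']
print(codes)        # [1 0 2 0]

# inverse_transform maps codes back to the original labels
print(le.inverse_transform([2, 0]))

# StandardScaler output: each column should have mean ~0 and std ~1
X = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])
X_scaled = StandardScaler().fit_transform(X)
print(X_scaled.mean(axis=0), X_scaled.std(axis=0))
```

Keeping the fitted LabelEncoder around is what lets you translate model predictions back into readable labels later.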

Data Visualization

Finally, let's visualize our preprocessed data to gain insights:

sns.set(style="whitegrid")
plt.figure(figsize=(12, 6))

sns.scatterplot(x='sepal length (cm)', y='petal width (cm)', hue='target', data=df)

plt.title('Iris Dataset - Preprocessed')
plt.show()
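Another useful check is to compare feature distributions before and after scaling. The sketch below draws side-by-side box plots of the raw and standardized Iris features with plain matplotlib; selecting the non-interactive Agg backend is an assumption so the code also runs without a display (in an interactive session, drop that line and call plt.show()).

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend so the sketch runs headless
import matplotlib.pyplot as plt
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.preprocessing import StandardScaler

iris = load_iris(as_frame=True)
X = iris.data
X_scaled = pd.DataFrame(StandardScaler().fit_transform(X), columns=X.columns)

# Side-by-side box plots: raw features vs. standardized features
fig, (ax_raw, ax_scaled) = plt.subplots(1, 2, figsize=(12, 4))
ax_raw.boxplot(X.to_numpy())
ax_raw.set_title("Raw features")
ax_scaled.boxplot(X_scaled.to_numpy())
ax_scaled.set_title("Standardized features")

# Label each box with its feature name
for ax in (ax_raw, ax_scaled):
    ax.set_xticks(range(1, len(X.columns) + 1))
    ax.set_xticklabels(X.columns, rotation=30, ha="right")
fig.tight_layout()
```

In the raw panel the features sit on different scales; after standardization all four boxes are centered near zero, which is what distance-based and gradient-based models benefit from.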

Conclusion

In this tutorial, we covered the essential steps of data preprocessing in Python. We loaded the Iris dataset, inspected our data, cleaned it up by handling missing values and outliers, transformed our data by scaling and encoding categorical variables, and finally visualized our preprocessed data. With these skills, you're ready to tackle your own datasets and start building machine learning models!