Python feature engineering github

What is Feature Engineering?

Feature engineering is the step in a machine learning workflow where you select, transform, and create features from an existing dataset so that a model can learn from it more effectively. Features that are more relevant to the problem you are trying to solve often lead to better model performance.

Why is Feature Engineering Important?

Feature engineering is important for several reasons:

- Improves Model Performance: By creating new features or transforming existing ones, you can improve the accuracy and performance of your machine learning models.
- Increases Data Quality: Feature engineering helps to remove noise and irrelevant data, making the dataset more reliable and accurate.
- Enables Better Model Interpretability: With feature engineering, you can create features that are more interpretable and easier to understand, making it easier to identify the most important factors affecting your model's performance.

How to Implement Feature Engineering in Python?

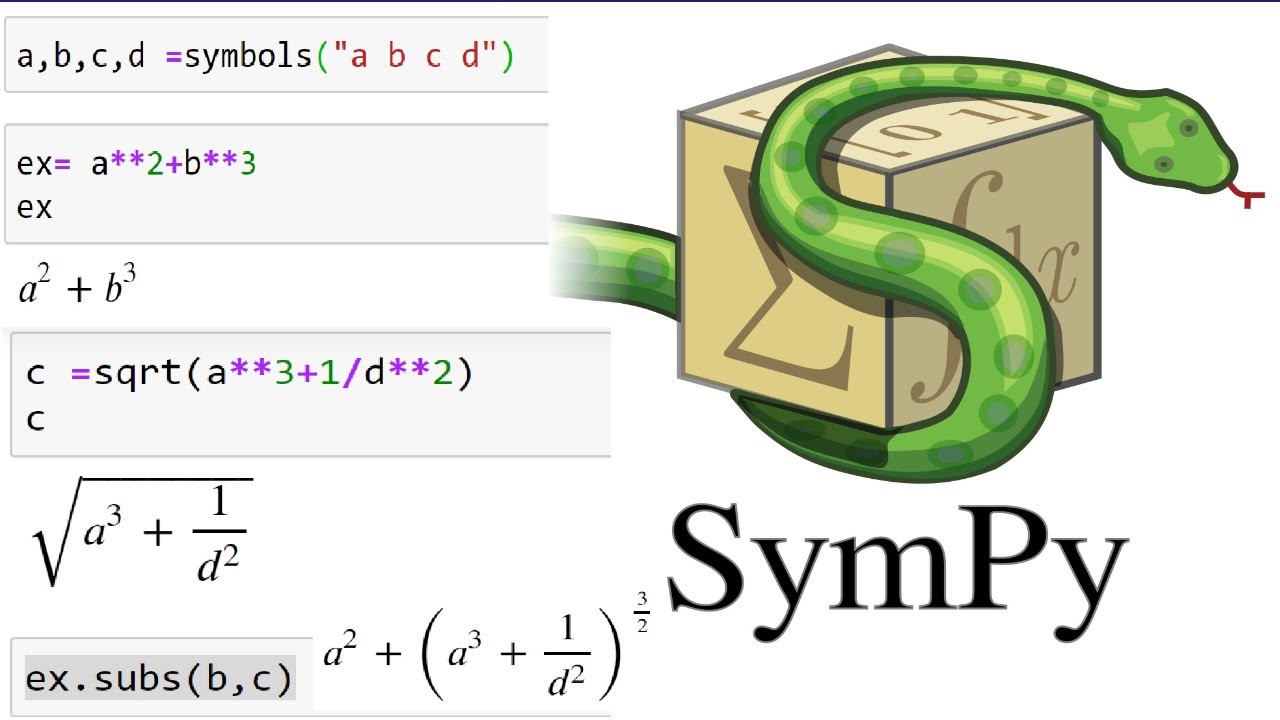

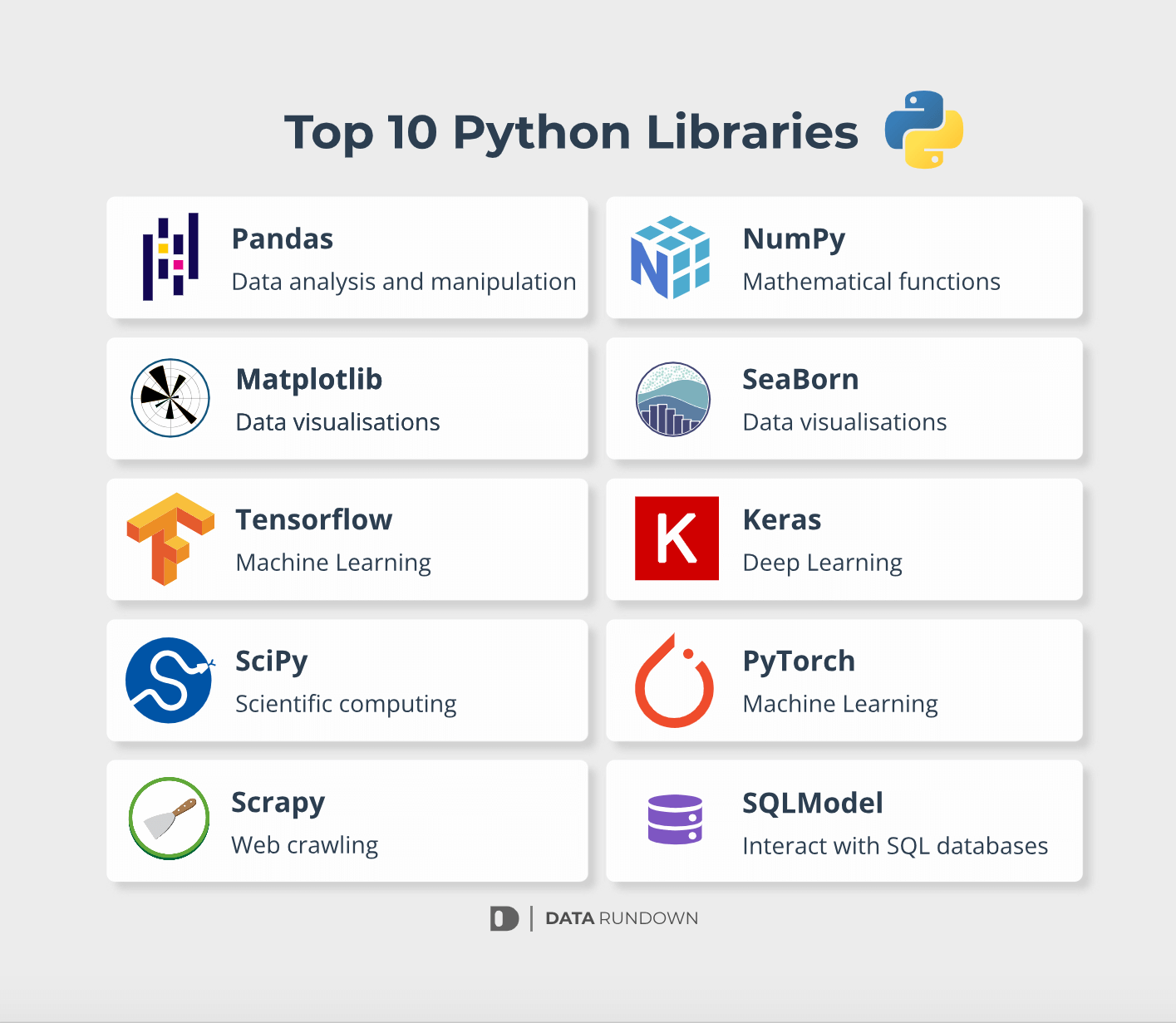

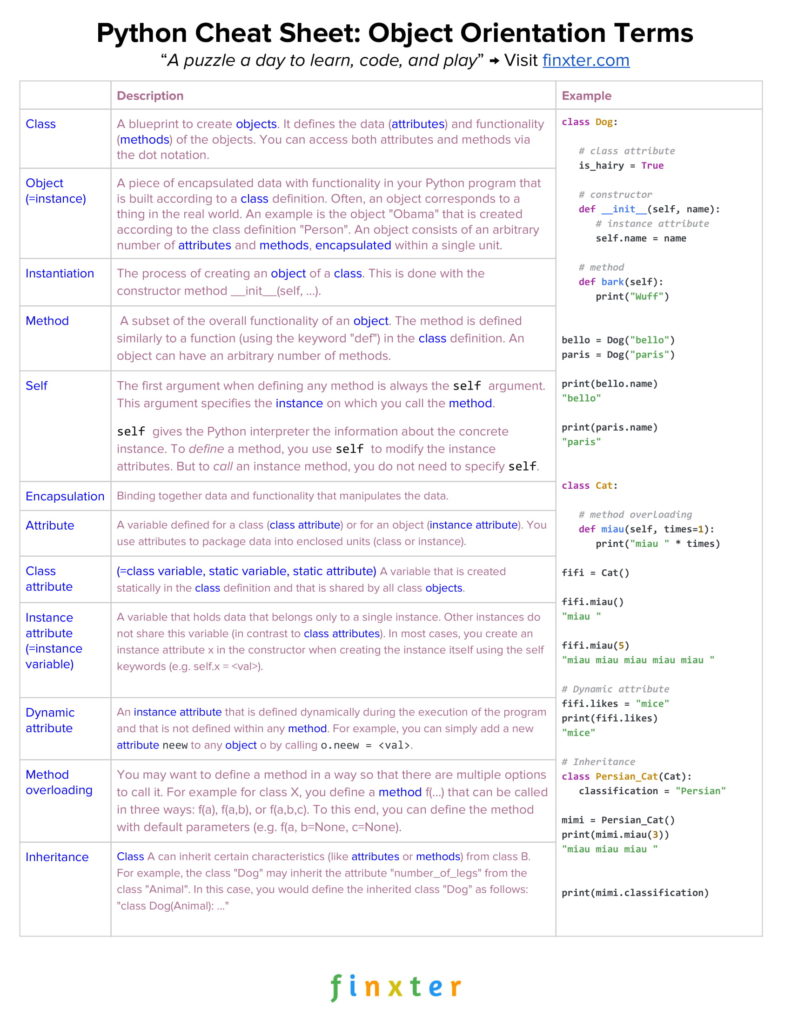

Python has several libraries and tools that make it easy to implement feature engineering. Some popular ones include:

- Pandas: A powerful library for data manipulation and analysis.
- Scikit-learn: A machine learning library that includes many useful feature engineering techniques.
- NumPy: A library for efficient numerical computations.

Here are some examples of feature engineering techniques you can use in Python:

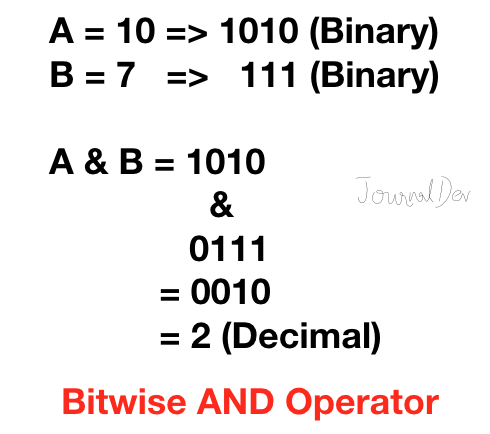

- Handling Missing Values: Use the fillna() method from Pandas to replace missing values.
- Encoding Categorical Variables: Use one-hot encoding or label encoding from Scikit-learn to convert categorical variables into numbers.
- Transforming Data: Use the log() function from NumPy to transform data, for example to log-transform skewed distributions.
- Creating New Features: Use NumPy to create new features by combining existing ones.
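The four techniques above can be sketched on a small table. This is a minimal illustration under stated assumptions, not a recommended recipe: the data and column names are made up, one-hot encoding uses pandas.get_dummies() rather than Scikit-learn to keep the example short, and np.log1p() (log of 1 + x) stands in for np.log() so that zero values would not cause problems.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data; the column names are invented for illustration.
df = pd.DataFrame({
    "income": [30000.0, np.nan, 52000.0, 1200000.0],
    "household_size": [1, 2, 4, 3],
    "city": ["Oslo", "Lima", "Oslo", "Pune"],
})

# Handling missing values: fill the gap with the column median.
df["income"] = df["income"].fillna(df["income"].median())

# Transforming data: log1p(x) = log(1 + x) compresses the long right tail.
df["log_income"] = np.log1p(df["income"])

# Creating new features: combine two existing columns into a ratio.
df["income_per_person"] = df["income"] / df["household_size"]

# Encoding categorical variables: one indicator column per city.
df = pd.get_dummies(df, columns=["city"])

print(df.head())
```

The median is used for the fill because the toy income column contains one very large value, which would pull a mean-based fill upward.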

GitHub Repositories for Feature Engineering

Here are some popular GitHub repositories that showcase feature engineering techniques in Python:

- scikit-learn/examples: The examples directory of the main scikit-learn repository, with example scripts that include many feature engineering techniques.
- pandas-examples: A repository with example code and datasets for Pandas, including many data manipulation and analysis tasks.
- feature-engineering: A repository that provides a guide to feature engineering in Python.

These repositories can be useful starting points for learning about feature engineering and implementing it in Python.

Conclusion

Feature engineering is a vital step in machine learning that can significantly improve model performance and increase data quality. Python has many powerful libraries and tools that make it easy to implement feature engineering, such as Pandas, Scikit-learn, and NumPy. By using these techniques and exploring GitHub repositories, you can become proficient in feature engineering and create more accurate and reliable machine learning models.


Python feature engineering tutorial

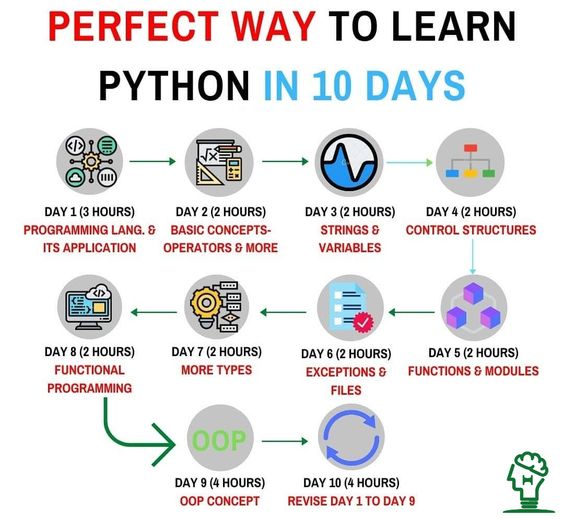

Feature Engineering is a crucial step in the Machine Learning workflow. It's the process of selecting and transforming raw data into features that are informative enough to enable a model to learn meaningful patterns or relationships.

In this tutorial, we'll explore some fundamental concepts and techniques for feature engineering using Python. By the end of this tutorial, you should have a solid understanding of how to engineer your own features!

What is Feature Engineering?

Feature Engineering is all about extracting relevant information from raw data and transforming it into a form that's more suitable for machine learning models. This process can include:

- Selecting relevant features: Identify the most important variables in your dataset that will help your model make accurate predictions.
- Transforming features: Convert categorical data into numerical formats, handle missing values, normalize or standardize values, and apply mathematical transformations (e.g., log, square root) to enhance feature representation.

Why is Feature Engineering Important?

- Improved model performance: By crafting features that are more informative, you can significantly boost the accuracy of your machine learning models.
- Reduced overfitting: Well-engineered features can help prevent overfitting by providing a more robust representation of the underlying patterns in your data.
- Faster training times: With more meaningful features, your model may require fewer iterations to converge, reducing overall training time.

Python Feature Engineering Techniques:

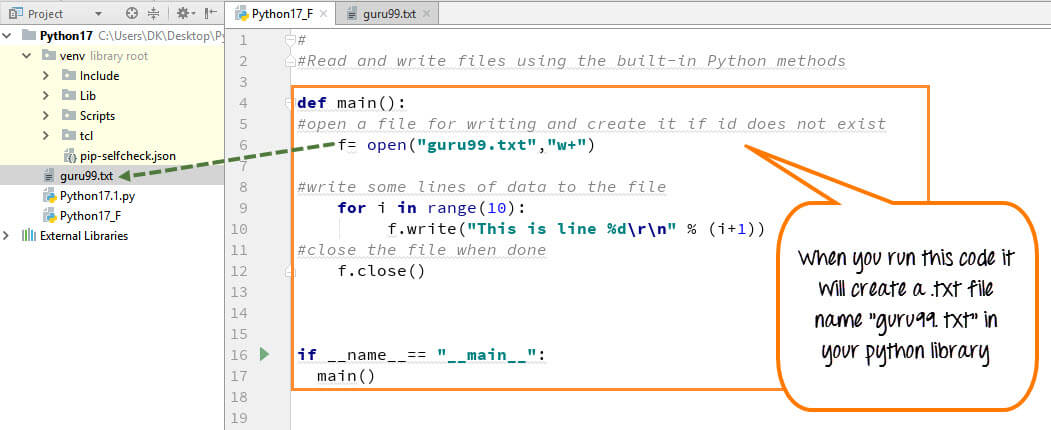

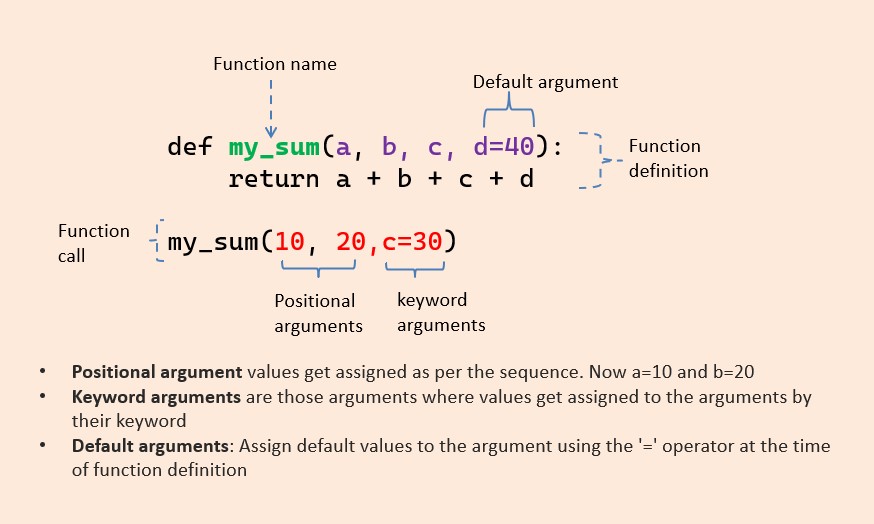

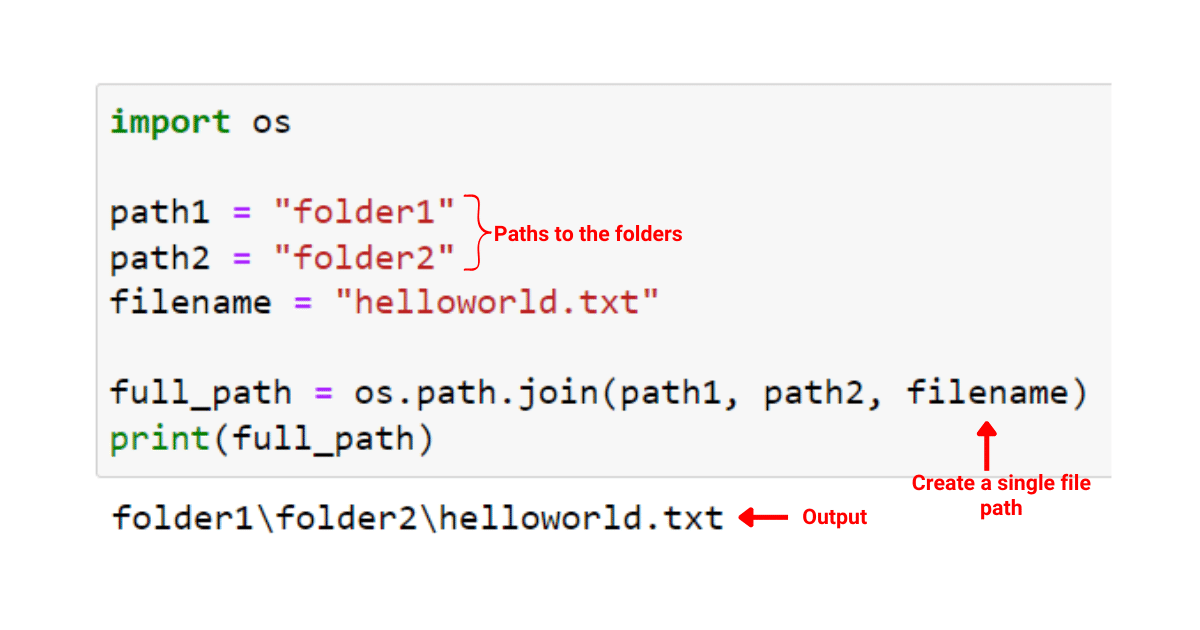

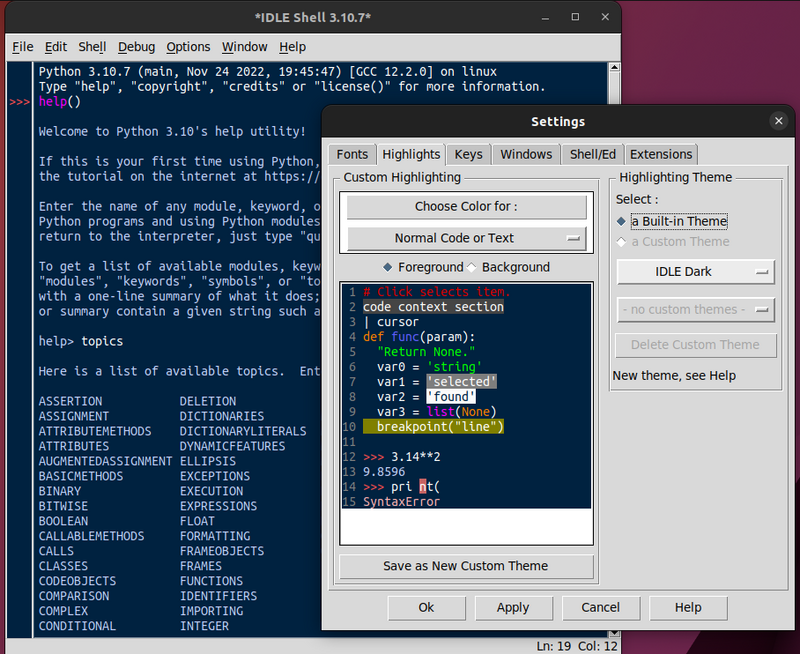

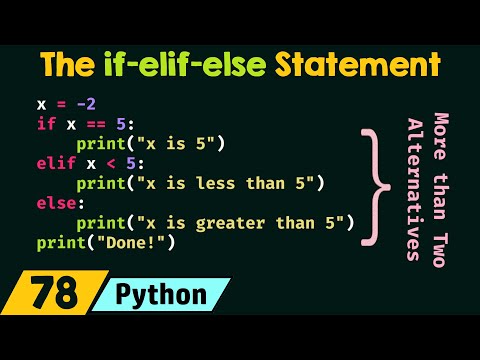

- Handling missing values:
  - pandas's fillna() method can replace missing values with the mean, the median, or custom values.
  - pandas's interpolate() method (or the routines in scipy.interpolate) can perform linear or polynomial interpolation to fill gaps.
- Transforming categorical data: Convert categorical variables into numerical representations using:
  - One-hot encoding (e.g., pandas.get_dummies())
  - Label encoding (e.g., LabelEncoder from sklearn.preprocessing, which scikit-learn intends for target labels; OrdinalEncoder is the counterpart for input features)
  - Hashing (e.g., FeatureHasher from sklearn.feature_extraction, or HashingVectorizer from sklearn.feature_extraction.text for text data)
- Normalization and Standardization: scikit-learn's StandardScaler and MinMaxScaler can help normalize or standardize data.
- Dimensionality Reduction:
  - Principal Component Analysis (PCA) using sklearn.decomposition.PCA
  - Independent Component Analysis (ICA) using sklearn.decomposition.FastICA
- Time Series Feature Engineering:
  - Calculate rolling means (moving averages) and cumulative sums
  - Extract seasonal patterns using a Fourier transform or seasonal_decompose from statsmodels.tsa.seasonal
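Several of the techniques listed above can be combined in one short script. The sketch below is only an illustration: the data and column names are invented, and the specific choices (linear interpolation, a window of 3, two principal components) are assumptions made to keep the example small.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.decomposition import PCA
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical toy data; column names are invented for illustration.
df = pd.DataFrame({
    "height_cm": [150.0, 160.0, np.nan, 180.0, 190.0],
    "weight_kg": [50.0, 58.0, 66.0, 80.0, 92.0],
    "color": ["red", "blue", "red", "green", "blue"],
})

# Handling missing values: linear interpolation between neighbouring rows.
df["height_cm"] = df["height_cm"].interpolate(method="linear")

# Standardize the numeric columns and one-hot encode the categorical one.
preprocess = ColumnTransformer(
    [
        ("scale", StandardScaler(), ["height_cm", "weight_kg"]),
        ("onehot", OneHotEncoder(handle_unknown="ignore"), ["color"]),
    ],
    sparse_threshold=0.0,  # always return a dense NumPy array
)
X = preprocess.fit_transform(df)  # 2 scaled columns + 3 indicator columns

# Dimensionality reduction: project the 5 columns onto 2 components.
pca = PCA(n_components=2)
X_reduced = pca.fit_transform(X)

# Time series features on a separate, equally hypothetical daily series.
ts = pd.DataFrame(
    {"sales": [10, 12, 9, 14, 20, 18]},
    index=pd.date_range("2024-01-01", periods=6, freq="D"),
)
ts["rolling_mean_3"] = ts["sales"].rolling(window=3).mean()  # NaN for first 2 rows
ts["cumulative_sum"] = ts["sales"].cumsum()

print(X.shape, X_reduced.shape)
print(ts)
```

The rolling mean is undefined until a full window is available, so the first two rows of rolling_mean_3 are missing; whether to drop or fill them depends on the model being trained.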

Remember to carefully evaluate the performance of your model on both training and testing sets after engineering features, as over-engineering can lead to overfitting.
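One way to run that check is to wrap the feature engineering steps and the model in a single scikit-learn pipeline and compare training and test accuracy. The sketch below assumes synthetic data from make_classification, degree-2 polynomial features as the engineered features, and logistic regression as the model; all of these are illustrative choices rather than recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Synthetic data stands in for a real dataset.
X, y = make_classification(
    n_samples=400, n_features=8, n_informative=4, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Putting the transformations inside the pipeline means they are fitted
# on the training split only, so no test-set statistics leak into them.
model = make_pipeline(
    StandardScaler(),
    PolynomialFeatures(degree=2, include_bias=False),  # engineered features
    LogisticRegression(max_iter=1000),
)
model.fit(X_train, y_train)

train_acc = model.score(X_train, y_train)
test_acc = model.score(X_test, y_test)

# A training score far above the test score suggests overfitting.
print(f"train accuracy: {train_acc:.3f}")
print(f"test accuracy:  {test_acc:.3f}")
print(f"gap:            {train_acc - test_acc:.3f}")
```

Repeating the comparison with and without a given engineered feature shows whether that feature helps on unseen data or only on the training set.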

This is just a taste of what feature engineering in Python has to offer! With practice and patience, you'll become proficient in crafting meaningful features that will boost the performance of your machine learning models. Happy coding!