How is a t-test useful for EDA in Python?

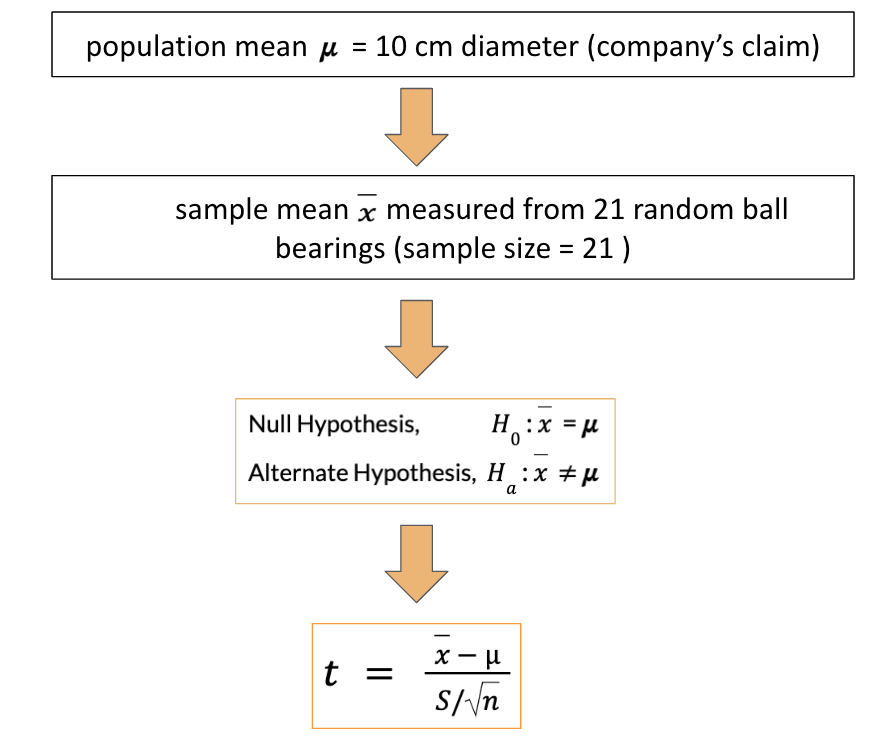

A t-test is a useful tool for Exploratory Data Analysis (EDA) in Python when working with numerical data. It compares the means of two groups or samples and tests whether the difference between them is statistically significant.

In EDA, a t-test can be used to:

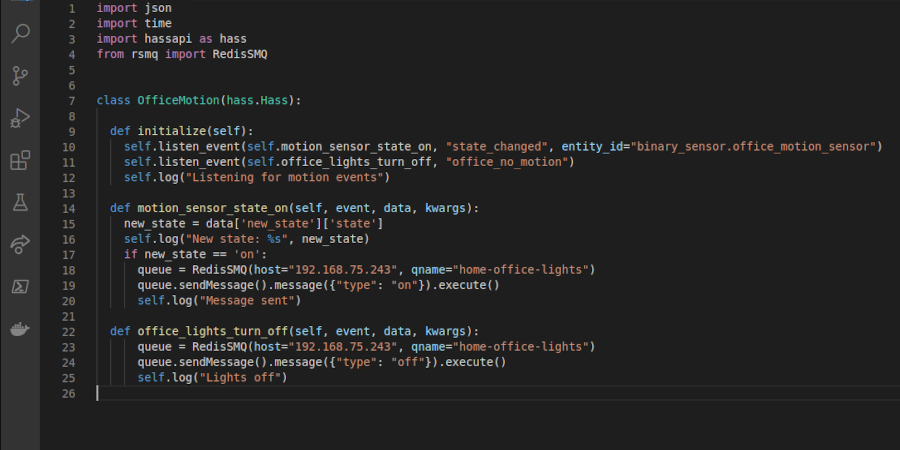

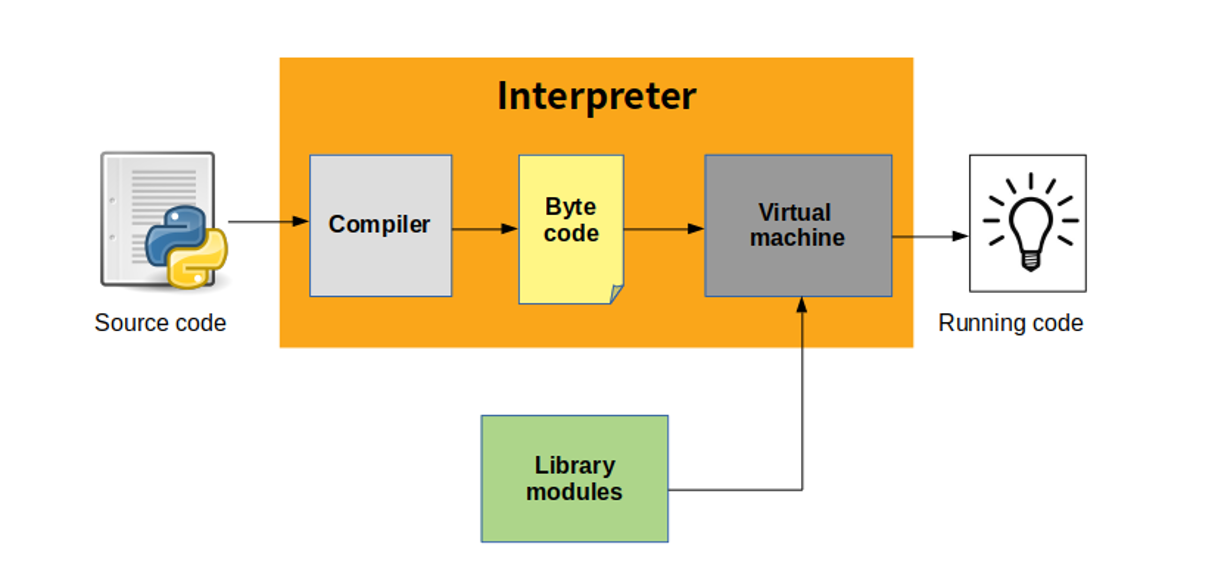

Identify differences: Comparing the means of two groups shows whether they differ significantly. This is particularly useful when the dataset has natural groupings or categories.

Validate assumptions: The standard t-test assumes that the data in each group are approximately normally distributed and that the group variances are equal (or at least not significantly different). The t-test does not verify these assumptions itself, so checking them during EDA (for example, with a Shapiro-Wilk test for normality and Levene's test for equal variances) tells you whether its result can be trusted for your dataset.

Select features: When working with large datasets, the t-test can help you identify which numerical variables differ significantly between the two classes of a binary response variable. This is a useful first screen when selecting features for modeling.

In Python, you can use libraries like SciPy or statsmodels to perform t-tests. The most common types of t-tests are:

Independent Samples T-Test: This test compares the means of two independent samples (e.g., treatment group vs. control group).

Paired-Samples T-Test: This test compares the means of two related samples (e.g., pre-post treatment comparison).

To perform a t-test in Python using SciPy, you can use the ttest_ind function for independent samples or ttest_rel for paired samples:

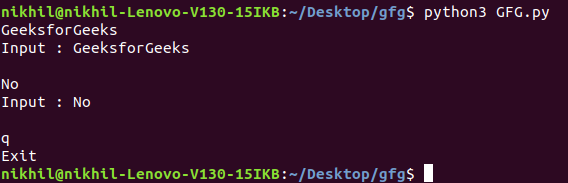

    import scipy.stats as stats

    # Example: Independent Samples T-Test
    sample1 = [2.5, 3.8, 4.2, 5.0]
    sample2 = [1.9, 2.7, 3.0, 3.5]
    stat, p = stats.ttest_ind(sample1, sample2)
    print(f"t-statistic: {stat:.2f}, p-value: {p:.4f}")

    # Example: Paired-Samples T-Test
    # (the two lists are treated as matched measurements, pair by pair)
    sample1 = [2.5, 3.8, 4.2, 5.0]
    sample2 = [1.9, 2.7, 3.0, 3.5]
    stat, p = stats.ttest_rel(sample1, sample2)
    print(f"t-statistic: {stat:.2f}, p-value: {p:.4f}")

In both examples, the output includes the t-statistic and the p-value. The p-value is the probability of observing a difference between the means at least as large as the one in your data if the null hypothesis (equal means) were true and the test's assumptions held. If the p-value is below your chosen significance level (e.g., 0.05), you can reject the null hypothesis that the means are equal.
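The t-test's assumptions can be checked directly in SciPy. The following is a minimal sketch, reusing the sample values from the examples above. It uses `scipy.stats.shapiro` (Shapiro-Wilk normality test), `scipy.stats.levene` (equal-variance test), and `ttest_ind` with `equal_var=False` (Welch's t-test, which drops the equal-variance assumption). The 0.05 threshold is an assumed significance level, and with only four values per group these checks have little power, so treat the output as illustrative only.

```python
from scipy import stats

# Same illustrative samples as in the t-test examples
sample1 = [2.5, 3.8, 4.2, 5.0]
sample2 = [1.9, 2.7, 3.0, 3.5]

alpha = 0.05  # assumed significance level

# Shapiro-Wilk: the null hypothesis is that the sample comes from a normal distribution
shapiro1 = stats.shapiro(sample1)
shapiro2 = stats.shapiro(sample2)

# Levene: the null hypothesis is that both groups have equal variances
levene = stats.levene(sample1, sample2)

# Welch's t-test: does not assume equal variances
welch = stats.ttest_ind(sample1, sample2, equal_var=False)

print(f"Shapiro p-values: {shapiro1.pvalue:.3f}, {shapiro2.pvalue:.3f}")
print(f"Levene p-value: {levene.pvalue:.3f}")
print(f"Welch t-statistic: {welch.statistic:.2f}, p-value: {welch.pvalue:.4f}")

# A small p-value in either check is evidence against that assumption
normality_plausible = shapiro1.pvalue > alpha and shapiro2.pvalue > alpha
equal_variance_plausible = levene.pvalue > alpha
print(f"Normality plausible: {normality_plausible}")
print(f"Equal variances plausible: {equal_variance_plausible}")
```

If the equal-variance check fails, Welch's variant is a common fallback. If normality is doubtful, a non-parametric test may be a better fit.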

In summary, the t-test is a useful tool for EDA in Python: it lets you identify differences between groups and screen candidate features, provided its assumptions of normality and equal variances are reasonable for your data. The results can then inform decisions about further analysis or modeling.
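The feature-screening use of the t-test can be sketched as a loop over numerical columns. This is an illustrative example on synthetic data: the `screen_features` helper, the column names, and the group labels are all hypothetical, and Welch's variant (`equal_var=False`) is chosen here as an assumption to avoid relying on equal variances.

```python
import numpy as np
import pandas as pd
from scipy import stats

# Synthetic data: "shifted" differs between groups, "unshifted" does not
rng = np.random.default_rng(0)
n = 100
df_groups = pd.DataFrame({
    "group": np.repeat(["control", "treatment"], n),
    "shifted": np.concatenate([rng.normal(0.0, 1.0, n), rng.normal(1.0, 1.0, n)]),
    "unshifted": rng.normal(0.0, 1.0, 2 * n),
})


def screen_features(df, group_col, group_a, group_b):
    """Run Welch's t-test on every numerical column, comparing two groups."""
    a = df[df[group_col] == group_a]
    b = df[df[group_col] == group_b]
    rows = []
    for col in df.select_dtypes(include=[np.number]).columns:
        result = stats.ttest_ind(a[col], b[col], equal_var=False)
        rows.append({"feature": col, "t_stat": result.statistic, "p_value": result.pvalue})
    # Smallest p-values first: strongest evidence of a difference in means
    return pd.DataFrame(rows).sort_values("p_value").reset_index(drop=True)


screened = screen_features(df_groups, "group", "control", "treatment")
print(screened)
```

When many columns are tested this way, some will fall below the threshold by chance, so a multiple-comparison correction is worth considering before acting on the ranking.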

Python exploratory data analysis tutorial

What is Exploratory Data Analysis?

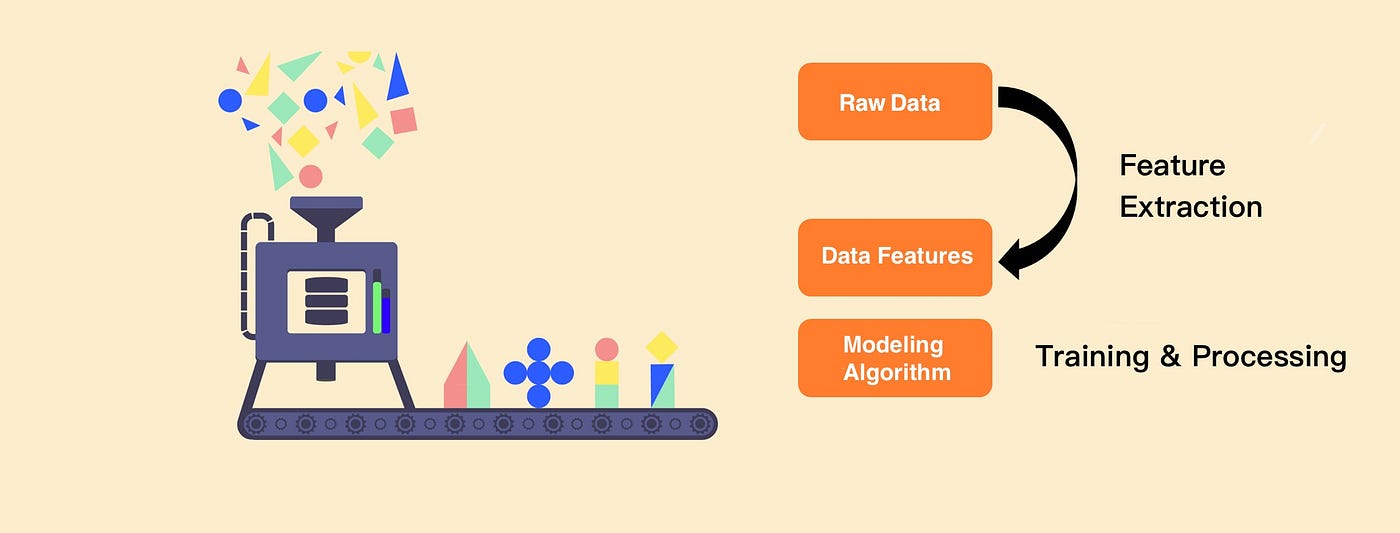

Exploratory Data Analysis (EDA) is the process of analyzing and summarizing data to understand its characteristics, patterns, and relationships. It's an iterative process that involves visual exploration, statistical summary, and modeling techniques to identify trends, correlations, and anomalies in the data.

Importing Required Libraries

To start with EDA, you'll need a few essential libraries:

Pandas: For data manipulation and analysis.

NumPy: For numerical computations.

Matplotlib/Seaborn: For data visualization.

Scikit-learn: For machine learning algorithms.

    import pandas as pd
    import numpy as np
    import matplotlib.pyplot as plt
    from seaborn import set_style, pairplot
    from sklearn.preprocessing import StandardScaler

    set_style("whitegrid")

Loading and Exploring the Data

Load your dataset into a Pandas DataFrame:

df = pd.read_csv("data.csv")

Next, get an overview of your data using the head() and info() methods to explore its structure and content:

    print(df.head())  # First few rows
    df.info()         # Column dtypes and non-null counts (prints directly, returns None)

Descriptive Statistics

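Summarize numerical columns with Pandas' describe() method, which reports the count, mean, standard deviation, minimum, quartiles (the 50% row is the median), and maximum. The mode is not included and has to be computed separately with mode():

    num_cols = df.select_dtypes(include=[np.number]).columns
    for col in num_cols:
        # describe() returns a multi-line Series, so print it under the column name
        print(f"{col}:\n{df[col].describe()}")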
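To get the mean, median, and mode side by side, the three Pandas methods can be combined into one table. This is a small sketch on a made-up DataFrame; the column names and values are illustrative assumptions, and `mode().iloc[0]` keeps only the first mode when a column has several.

```python
import pandas as pd

# Illustrative data (hypothetical values)
df_demo = pd.DataFrame({
    "age": [23, 35, 35, 41, 58],
    "income": [30.0, 42.5, 42.5, 51.0, 120.0],
})

summary = pd.DataFrame({
    "mean": df_demo.mean(),
    "median": df_demo.median(),
    # mode() can return several rows if there are ties; keep the first one
    "mode": df_demo.mode().iloc[0],
})
print(summary)
```

A mean well above the median, as in the `income` column here, is a quick hint that the distribution is right-skewed.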

Data Visualization

Create informative plots to visualize distributions, correlations, and relationships:

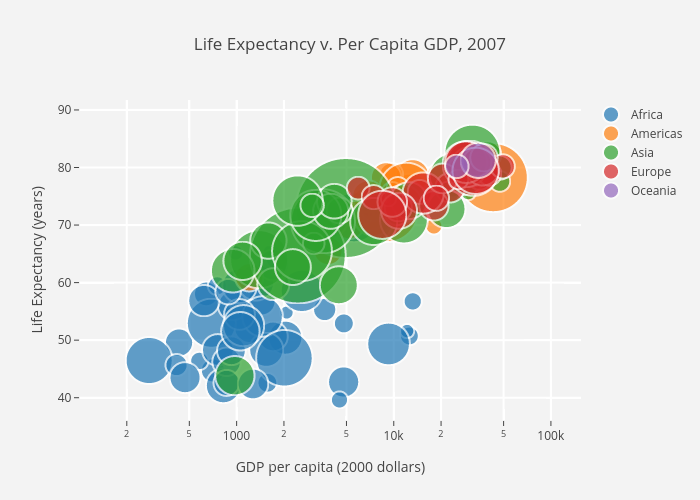

Histograms: Plot the distribution of a single column using the hist() or plot() functions.

Scatter Plots: Visualize bivariate relationships using the scatter() or pairplot() functions.

Bar Charts: Display the frequency distribution of a categorical variable by counting categories with value_counts() and plotting the counts with barh().
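The three plot types above can be sketched with Matplotlib as follows. The data is synthetic and the column names (`height`, `weight`, `category`) are assumptions made for illustration. The `Agg` backend is selected so the sketch runs without a display; in an interactive session you would drop that line and call `plt.show()`.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; remove when working interactively
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

# Synthetic data with one categorical and two related numerical columns
rng = np.random.default_rng(0)
df_demo = pd.DataFrame({
    "height": rng.normal(170, 10, 200),
    "category": rng.choice(["a", "b", "c"], 200),
})
df_demo["weight"] = 0.5 * df_demo["height"] + rng.normal(0, 5, 200)

fig, axes = plt.subplots(1, 3, figsize=(12, 3.5))

# Histogram: distribution of a single numerical column
axes[0].hist(df_demo["height"], bins=20)
axes[0].set_title("Histogram of height")

# Scatter plot: relationship between two numerical columns
axes[1].scatter(df_demo["height"], df_demo["weight"], s=10)
axes[1].set_title("height vs. weight")

# Bar chart: frequency of each category, counted with value_counts()
counts = df_demo["category"].value_counts()
axes[2].barh(list(counts.index), counts.values)
axes[2].set_title("Category counts")

fig.tight_layout()
```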

Feature Engineering and Preprocessing

Data Transformation: Convert categorical variables into numerical representations (one-hot encoding, label encoding).

Handling Missing Values: Replace NaNs with mean, median, or mode values depending on the column's characteristics.

Scaling/Normalization: Standardize features using StandardScaler from Scikit-learn, which helps models that are sensitive to feature scale.
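A minimal sketch of these three preprocessing steps, on a made-up DataFrame whose column names and values are illustrative assumptions. Median imputation and one-hot encoding are chosen here only as examples of the options listed above.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Illustrative data with one categorical column and one missing value
df_demo = pd.DataFrame({
    "color": ["red", "blue", "red", "green"],
    "size": [1.0, np.nan, 3.0, 4.0],
    "price": [10.0, 20.0, 30.0, 40.0],
})

# Handling missing values: fill the NaN with the column median
df_demo["size"] = df_demo["size"].fillna(df_demo["size"].median())

# Data transformation: one-hot encode the categorical column
encoded = pd.get_dummies(df_demo, columns=["color"])

# Scaling: standardize numerical columns to zero mean and unit variance
num_cols = ["size", "price"]
scaler = StandardScaler()
encoded[num_cols] = scaler.fit_transform(encoded[num_cols])

print(encoded)
```

In a modeling workflow, the scaler would normally be fitted on the training split only and then applied to the test split, so that no information leaks from the test data.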

Modeling and Hypothesis Generation

Correlation Analysis: Calculate pair-wise correlations between numerical columns using Pandas' corr() function.

Regression Analysis: Fit a simple linear regression model to understand relationships between variables.

Anomaly Detection: Identify outliers and anomalies in your data using methods like Z-score or modified Z-score calculations.
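The three techniques above can be sketched together on synthetic data. The column names, the planted outlier, and the cutoff of 3 standard deviations are assumptions made for illustration. `scipy.stats.linregress` is used here as one simple way to fit a line; other libraries would work as well.

```python
import numpy as np
import pandas as pd
from scipy import stats

# Synthetic data: y depends linearly on x, with one planted outlier
rng = np.random.default_rng(0)
x = rng.normal(0, 1, 200)
df_demo = pd.DataFrame({"x": x, "y": 2.0 * x + rng.normal(0, 0.5, 200)})
df_demo.loc[0, "y"] = 25.0  # planted outlier (hypothetical)

# Correlation analysis: pair-wise Pearson correlations
corr = df_demo.corr()
print(corr)

# Regression analysis: simple linear regression of y on x
fit = stats.linregress(df_demo["x"], df_demo["y"])
print(f"slope: {fit.slope:.2f}, intercept: {fit.intercept:.2f}, r: {fit.rvalue:.2f}")

# Anomaly detection: flag rows more than 3 standard deviations from the mean
z = stats.zscore(df_demo["y"].to_numpy())
outliers = df_demo[np.abs(z) > 3]
print(outliers)
```

Because the mean and standard deviation are themselves pulled by extreme values, the plain Z-score can miss outliers in small or heavily contaminated samples, which is the motivation for the modified Z-score mentioned above.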

Together, these steps cover the core of exploratory data analysis in Python. EDA is iterative, so revisit them as your understanding of the data and its characteristics improves.