Huggingface python pip

Huggingface python pip

I'm happy to help!

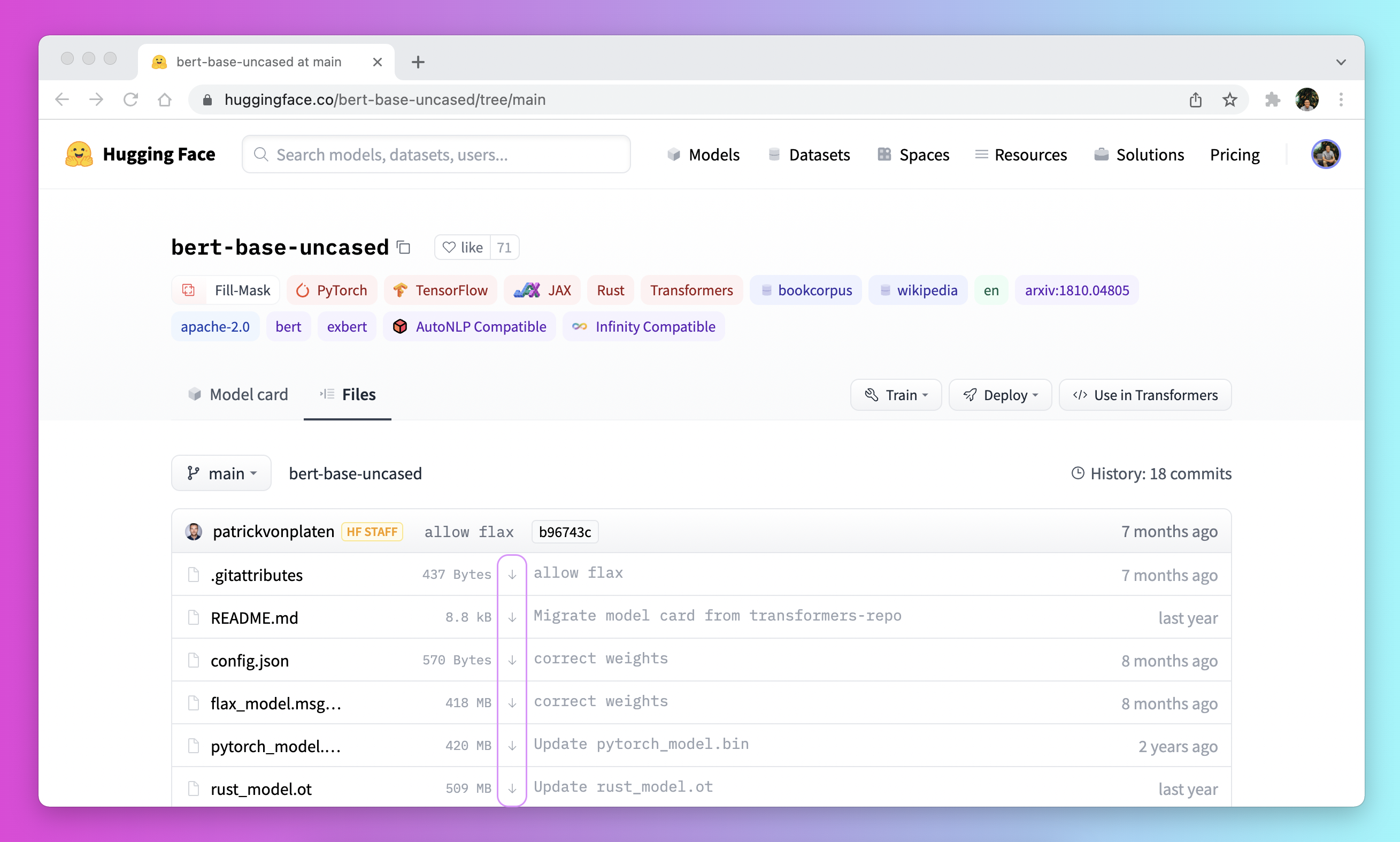

Hugging Face is a popular AI and machine learning library that provides pre-trained models for natural language processing (NLP) tasks, such as text classification, sentiment analysis, question answering, and more! One of the most famous Hugging Face models is BERT (Bidirectional Encoder Representations from Transformers), which has achieved state-of-the-art results in many NLP benchmarks.

To use Hugging Face's pre-trained models with Python, you can install the transformers library using pip:

pip install transformers

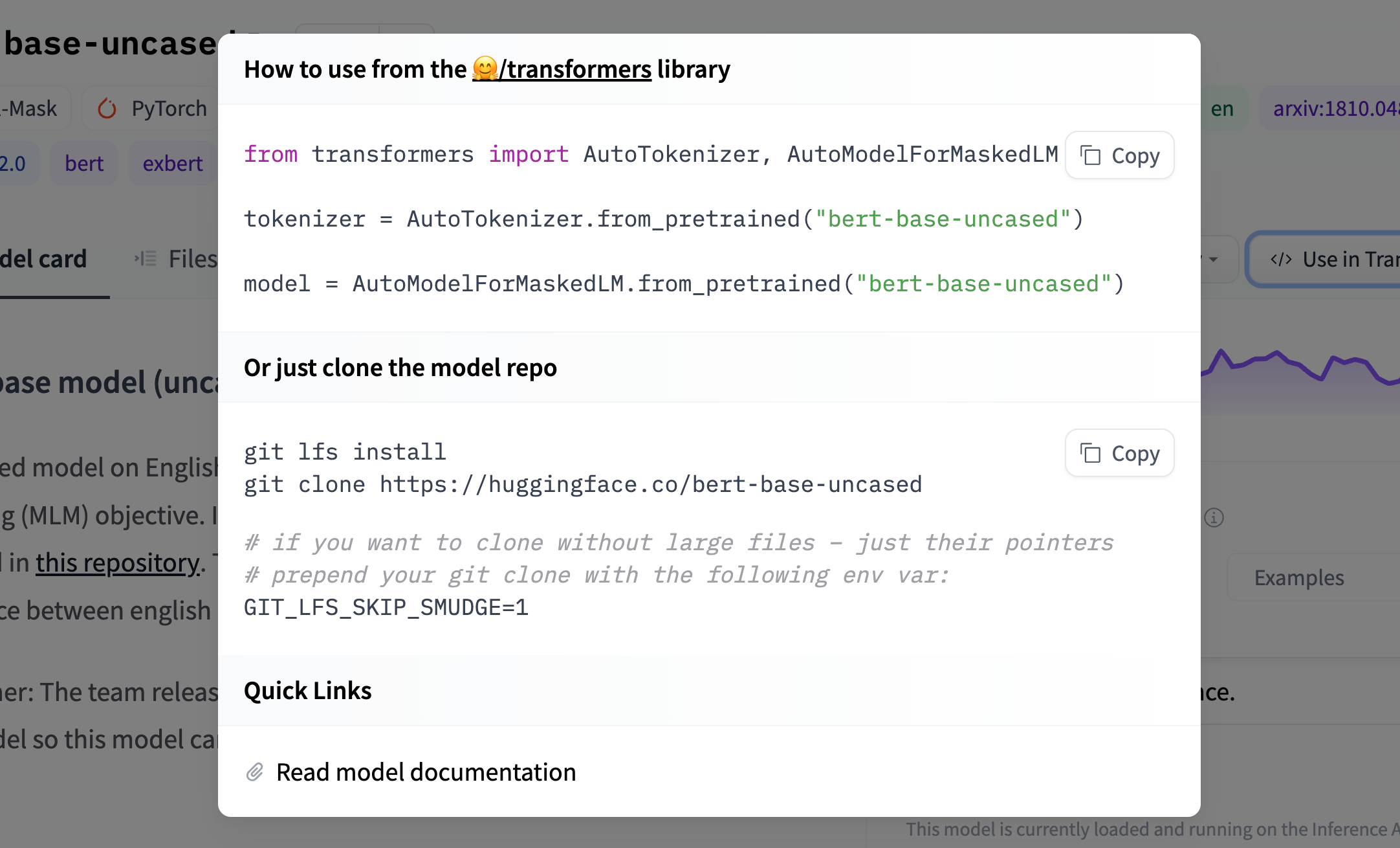

Once installed, you can import the library and load a pre-trained model like this:

import torch

from transformers import BertTokenizer, BertModel

Load pre-trained BERT tokenizer and model

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

model = BertModel.from_pretracted('bert-base-uncased')

Convert input text to BERT's input format (i.e., tokens with attention masks)

input_ids = torch.tensor([tokenizer.encode("Hello, world!")]).long()

attention_mask = torch.tensor([[1, 1, 1, 0, 0]]).long()

Use the model to perform a task, such as sentiment analysis

outputs = model(input_ids, attention_mask)

pooled_output = outputs.pooler_output

sentiment_score = torch.nn.functional.softmax(pooled_output[:, 1], dim=-1)[0]

print("Sentiment score:", sentiment_score.item())

In this example, we load the bert-base-uncased pre-trained model and use it to analyze the sentiment of the input text "Hello, world!" The output is a sentiment score that indicates whether the text has positive or negative sentiment.

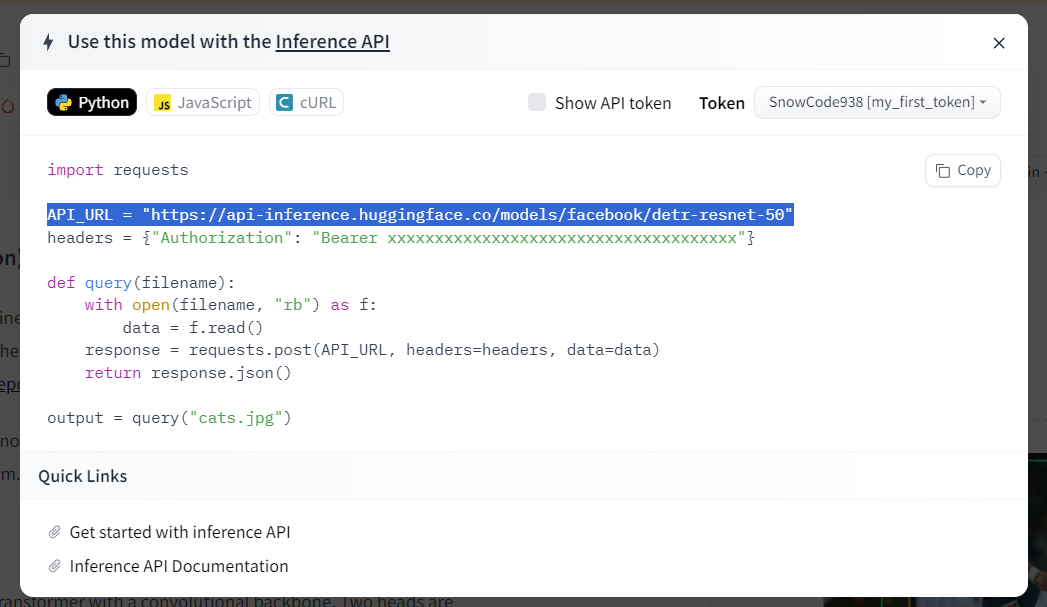

Hugging Face's library also provides many other useful tools for NLP tasks, such as:

Text classification: Use pre-trained models like BERT or RoBERTa to classify text into predefined categories (e.g., spam vs. non-spam emails). Named entity recognition: Identify named entities (e.g., people, locations) in unstructured text using models like BERT or DistilBERT. Question answering: Use pre-trained models like BERT or RoBERTa to answer questions based on a given passage of text.These are just a few examples of what you can do with Hugging Face's library and pre-trained models. If you're interested in exploring more, I encourage you to check out the Hugging Face documentation and tutorials!

How to import Hugging Face in Python?

I'd be happy to help you with that!

To import the Hugging Face library in Python, you'll need to follow these steps:

pip install transformers

This library provides pre-trained models and utilities for natural language processing (NLP) tasks.

Install the Tokenizers Library: You'll also need to install the Tokenizers library, which is used for tokenization (breaking down text into individual words or subwords):pip install tokenizers

import torch

from transformers import AutoModel, AutoTokenizer

model = AutoModel.from_pretrained("bert-base-uncased")

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

print(tokenizer.encode("Hello World!"))

This code imports the Transformers library and uses the AutoModel and AutoTokenizer classes to load a pre-trained BERT model and tokenizer. It then encodes the text "Hello World!" using the tokenizer.

Here's an example of how you might load the BERT model and use it for masked language modeling:

import torch

from transformers import AutoModel, AutoTokenizer

model = AutoModel.from_pretrained("bert-base-uncased")

tokenizer = AutoTokenizer.from_pretracted("bert-base-uncased")

input_ids = tokenizer.encode("Hello World!", return_tensors="pt")[:, :512]

attention_mask = torch.ones_like(input_ids).astype(torch.bool)

outputs = model(input_ids, attention_mask=attention_mask)

This code loads the BERT model and uses it to perform masked language modeling on the input text "Hello World!". The input_ids variable contains the encoded input text, and the attention_mask variable is used to specify which tokens should be considered when computing attention.

The Hugging Face library provides a wealth of resources and examples to help you get started with your NLP project. By following these steps, you'll be well on your way to importing the Hugging Face library and exploring its many features!