Threading vs concurrent futures Python

Threading and concurrency are fundamental concepts in programming that let a program make progress on multiple tasks at once, improving the throughput and responsiveness of our applications. Here I'll explore two approaches in Python: the threading module and the concurrent.futures module.

Threading

In Python, the threading module provides a way to create and manage threads, which are lightweight units of execution that run concurrently inside a single process. Each thread has its own call stack, so it can run its own code path, but all threads share the process's memory, so access to shared data still has to be coordinated.

Here's an example of how you can use threading in Python:

    import threading
    import time

    def worker():
        print("Worker thread started")
        for i in range(5):
            print(f"Thread {threading.current_thread().name} is doing some work...")
            time.sleep(1)

    threads = []
    for i in range(3):
        t = threading.Thread(target=worker)
        threads.append(t)
        t.start()

    # Wait for all threads to finish
    for t in threads:
        t.join()

In this example, we define a worker function that simulates some work being done. We then create three separate threads that run the worker function concurrently using the Thread class. The start() method starts each thread's execution, and the join() method ensures that all threads finish before moving on to the next line of code.

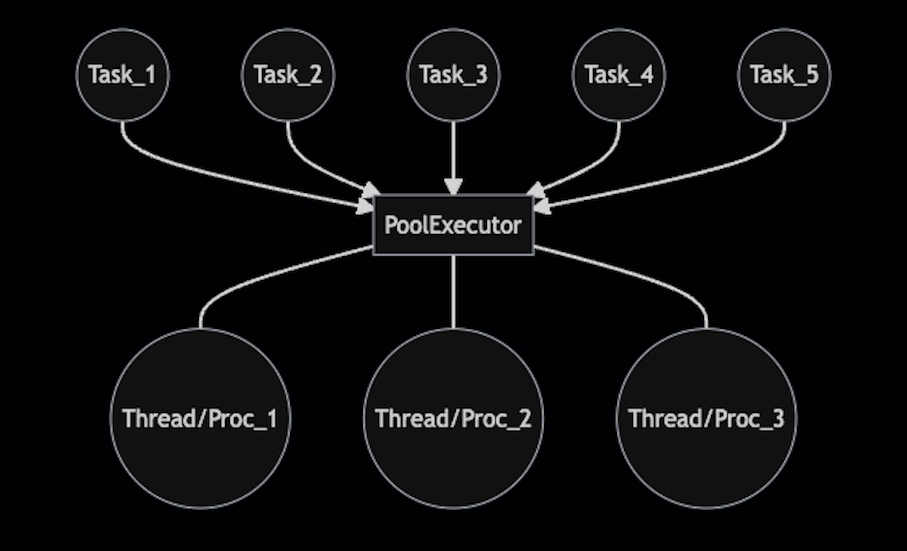

Concurrency with concurrent.futures

Python 3.2 introduced a module called concurrent.futures, which provides an easier way to write concurrent code using threads or processes. The main advantage of this approach is that an executor creates, reuses, and joins the worker threads for you, and each task hands back its result through a Future object. You still need synchronization if your tasks modify shared state.

Here's an example of how you can use concurrent.futures in Python:

    import concurrent.futures
    import time

    def worker():
        print("Worker started")
        for i in range(5):
            print("Doing some work...")
            time.sleep(1)

    with concurrent.futures.ThreadPoolExecutor() as executor:
        futures = []
        for i in range(3):
            future = executor.submit(worker)
            futures.append(future)

        # Wait for all tasks to finish
        for future in futures:
            future.result()

In this example, we create a ThreadPoolExecutor instance that manages a pool of worker threads for us. We submit each call to worker as an independent task using the submit() method and store the resulting futures in a list. Finally, we call result() on each future, which blocks until that task finishes and re-raises any exception the task raised.
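The worker above returns nothing, so it does not show how futures carry results back. The following is a minimal sketch of that, assuming a hypothetical task `fetch_length` whose `time.sleep` call stands in for real I/O. It uses `as_completed()` to handle results as they finish and `map()` to get them in input order.

```python
import concurrent.futures
import time


def fetch_length(text):
    """Hypothetical task: pretend to do slow I/O, then return a result."""
    time.sleep(0.1)  # stands in for a network or disk wait
    return len(text)


words = ["alpha", "beta", "gamma"]

with concurrent.futures.ThreadPoolExecutor(max_workers=3) as executor:
    # submit() returns a Future immediately; remember which input it belongs to.
    future_to_word = {executor.submit(fetch_length, w): w for w in words}

    lengths = {}
    # as_completed() yields futures as they finish, not in submission order.
    for future in concurrent.futures.as_completed(future_to_word):
        word = future_to_word[future]
        lengths[word] = future.result()  # re-raises any exception from the task

    # map() is a shorter alternative that yields results in input order.
    ordered = list(executor.map(fetch_length, words))

print(lengths)
print(ordered)
```

`as_completed()` tends to suit cases where each result should be processed as soon as it is ready, while `map()` is convenient when the order of the inputs matters.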

Comparison

Both threading and concurrency with concurrent.futures allow you to execute multiple tasks concurrently, but there are some key differences:

Thread management: With threading, you create, start, and join each thread yourself. concurrent.futures abstracts away these complexities, making it easier to write concurrent code.

Synchronization: When using threading, you typically need to use synchronization primitives (e.g., locks, semaphores) to ensure thread safety. concurrent.futures uses higher-level abstractions: tasks return their results through futures, which reduces the need for shared state and explicit synchronization. Tasks that do modify shared data still need locks.

Performance: Threading can be more lightweight than process-based concurrency, as threads share the same memory space. However, Python's Global Interpreter Lock (GIL) means that CPU-bound Python code only achieves true parallelism when using multiple processes, for example with ProcessPoolExecutor.
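The synchronization difference can be sketched side by side. This is an illustrative example, not a benchmark: `add_many` and `count_items` are hypothetical functions. The first part protects a shared counter with a `threading.Lock`; the second avoids shared state by letting each task return its own count.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

counter = 0
counter_lock = threading.Lock()


def add_many(n):
    """Increment the shared counter n times, holding the lock for each update."""
    global counter
    for _ in range(n):
        with counter_lock:  # without the lock, concurrent updates can be lost
            counter += 1


# Manual threading: shared state plus an explicit lock.
threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()


def count_items(n):
    """Same work, but each task keeps a private count and returns it."""
    local = 0
    for _ in range(n):
        local += 1
    return local


# Futures-based: no shared state, so no lock; results are combined afterwards.
with ThreadPoolExecutor(max_workers=4) as executor:
    total = sum(executor.map(count_items, [10_000] * 4))

print(counter, total)
```

Both versions arrive at the same total. The second works without a lock only because the tasks share nothing; an executor does not make shared data safe by itself.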

In conclusion, both threading and concurrent.futures are viable options for writing concurrent code in Python. The choice between them depends on your specific use case and requirements. If you need fine-grained control over individual threads, threading might be a better fit. If you prefer a higher-level abstraction that simplifies thread management and result handling, concurrent.futures could be the way to go. Neither gives true parallelism for CPU-bound Python code while using threads; for that you need processes, such as concurrent.futures.ProcessPoolExecutor or the multiprocessing module.
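For CPU-bound work, the executor interface stays the same when threads are swapped for processes. Below is a minimal sketch using `ProcessPoolExecutor`; the prime-counting function `count_primes` and the chosen limits are illustrative assumptions, picked only because the work is CPU-heavy.

```python
from concurrent.futures import ProcessPoolExecutor


def count_primes(limit):
    """Count primes below limit with trial division (deliberately CPU-heavy)."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def main():
    limits = [20_000, 30_000, 40_000, 50_000]
    # Each task runs in a separate process with its own interpreter and GIL.
    with ProcessPoolExecutor() as executor:
        results = list(executor.map(count_primes, limits))
    for limit, primes in zip(limits, results):
        print(f"primes below {limit}: {primes}")


if __name__ == "__main__":
    # The guard matters on platforms that start workers by re-importing this module.
    main()
```

Arguments and return values cross the process boundary by pickling, so this approach pays off mainly when each task does enough computation to outweigh that cost.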

Python multithreading vs multiprocessing

The age-old debate: Python's multithreading versus multiprocessing!

In a nutshell, both approaches aim to harness the power of parallelism for enhanced performance and efficiency. Let me dive into the details:

Multithreading (MT)

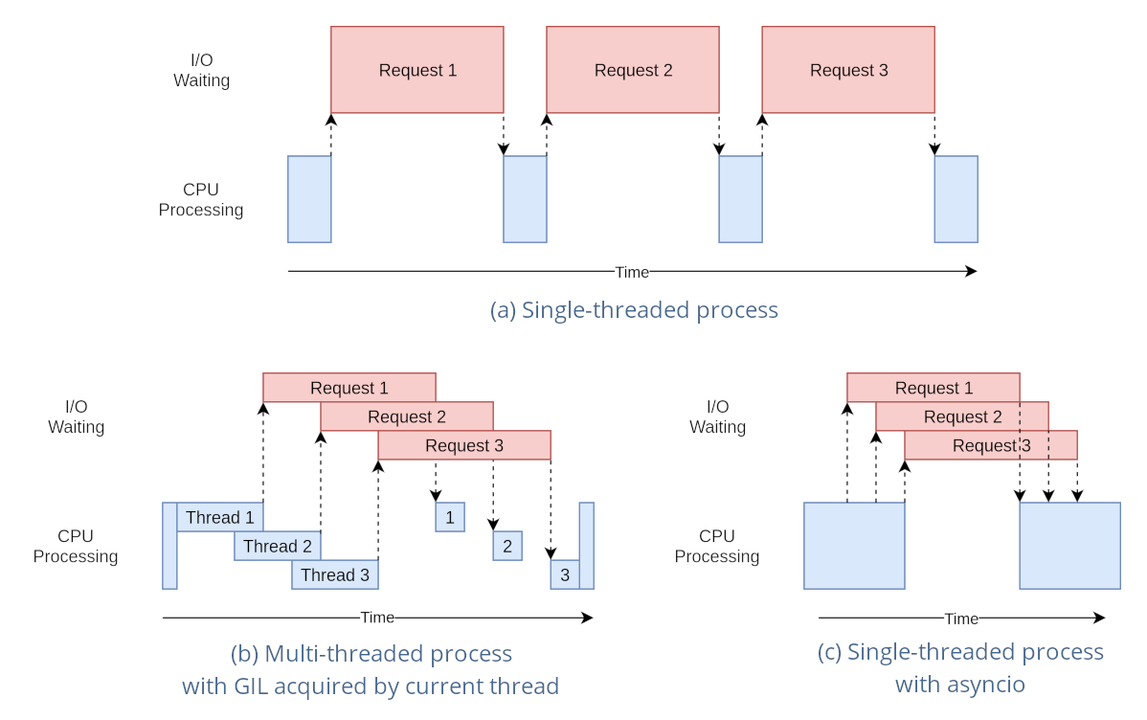

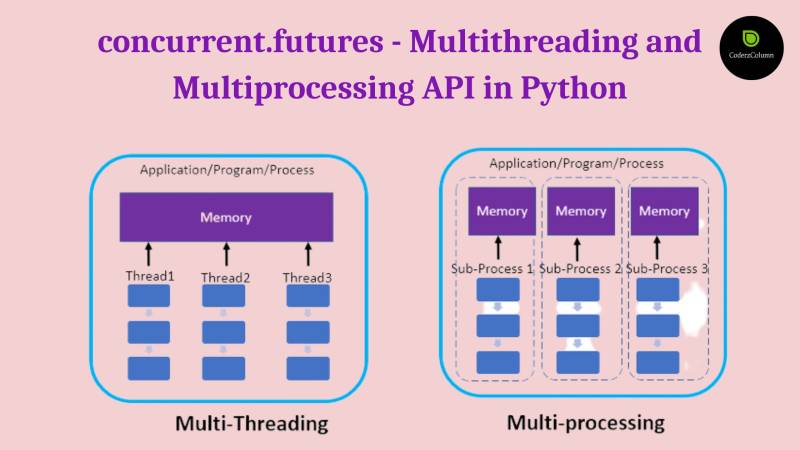

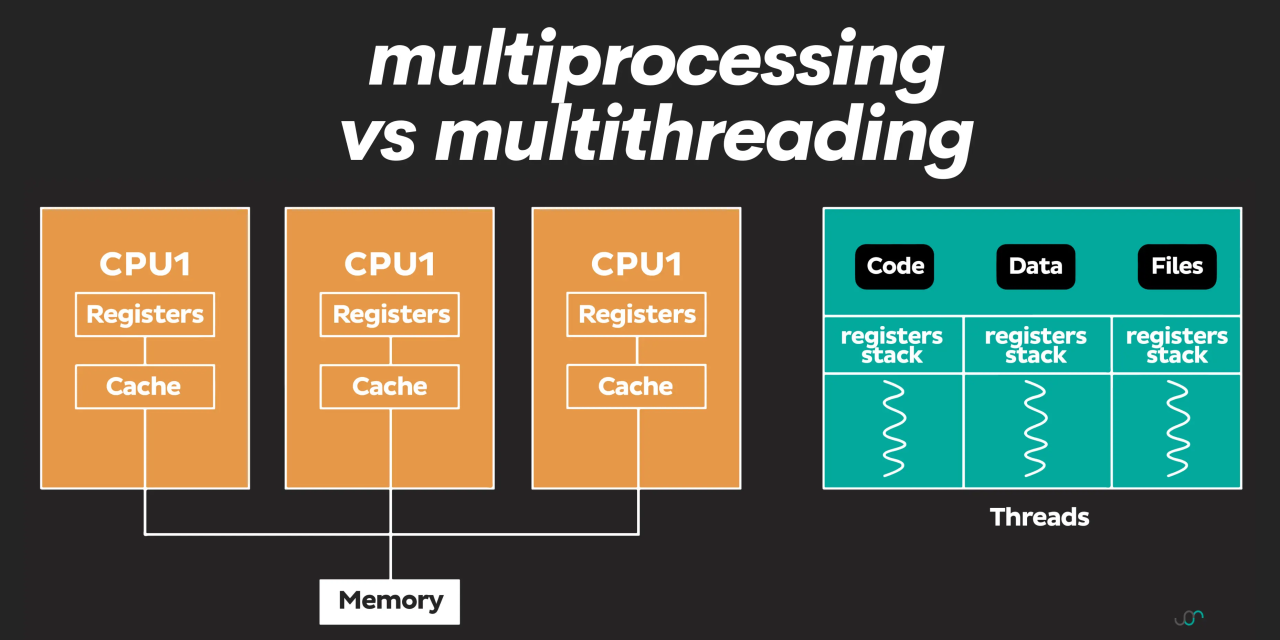

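CPython, the standard Python implementation, uses the Global Interpreter Lock (GIL) to protect the interpreter's internal state. In essence, only one thread can execute Python bytecode at any given time. Threads share memory because they live in the same process, which simplifies inter-thread communication; the GIL, however, prevents CPU-bound Python code from running in parallel across threads.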
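What the GIL does not limit is waiting. Blocking calls such as `time.sleep` and most I/O release the GIL, so threads that spend their time waiting overlap with one another. Here is a small sketch with hypothetical helpers `wait_for_io` and `run_in_threads`, where the sleep stands in for a real I/O call.

```python
import threading
import time


def wait_for_io(delay):
    """Stand-in for a blocking I/O call; time.sleep releases the GIL while waiting."""
    time.sleep(delay)


def run_in_threads(task, arg, n_threads):
    """Run task(arg) in n_threads threads and return the elapsed wall-clock time."""
    threads = [threading.Thread(target=task, args=(arg,)) for _ in range(n_threads)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


elapsed = run_in_threads(wait_for_io, 0.2, 4)
# Four 0.2 s waits overlap, so this is typically close to 0.2 s rather than 0.8 s.
print(f"4 blocking waits in threads: {elapsed:.2f}s")
```

A CPU-bound pure-Python loop run the same way would usually show little or no speedup on a standard GIL-enabled CPython build, since only one thread can execute bytecode at a time.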

Here are some key aspects of multithreading:

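Shared address space: Threads live in the same process, share its address space, and can communicate using shared variables.

Locks: Use locks to synchronize access to shared resources and protect against race conditions. Acquire multiple locks in a consistent order, because careless locking can itself cause deadlocks.

Scheduling: The operating system manages thread scheduling, allocating CPU time based on priority and availability. In CPython, a thread must also hold the GIL to run Python bytecode.

Benefits of multithreading: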

Improved responsiveness: Each thread can perform I/O operations or computations independently, enhancing the overall responsiveness of your program.

Shared memory: Easy inter-thread communication using shared variables makes it an attractive choice for applications where data is frequently accessed by several tasks.

Less overhead: Creating threads has less overhead compared to creating new processes.

However, multithreading also comes with some limitations:

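GIL limitation: As mentioned earlier, the GIL lets only one thread run Python bytecode at a time, so CPU-bound threads take turns instead of running in parallel and can contend heavily for the lock.

GIL-holding calls: A thread that blocks on I/O releases the GIL, so other threads keep running. The problem arises when a thread holds the GIL for a long stretch, for example inside a C extension that does not release it; other threads cannot run Python code until it does.

Multiprocessing (MP)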

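Python's multiprocessing module creates new processes, each with its own Python interpreter and address space. On POSIX systems it can do this with os.fork(); the spawn and forkserver start methods launch a fresh interpreter instead, and spawn is the only method available on Windows.

Key aspects of multiprocessing: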

Process creation: Create new processes with multiprocessing.Process, which uses os.fork() under the fork start method.

Inter-process communication (IPC): Use pipes, queues, or shared memory to exchange data between processes.

Process management: Manage process creation, termination, and synchronization.
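These aspects can be sketched together in a few lines. The example below is an assumption-laden illustration: `sum_squares`, `worker`, and the four-way split are hypothetical choices. It creates processes with `multiprocessing.Process`, returns partial results through a `multiprocessing.Queue`, and joins the processes at the end.

```python
import multiprocessing


def sum_squares(chunk):
    """Pure function doing the per-process work."""
    return sum(n * n for n in chunk)


def worker(chunk, out_queue):
    """Runs in a child process; sends its partial result back through the queue."""
    out_queue.put(sum_squares(chunk))


def main():
    numbers = list(range(1, 101))
    chunks = [numbers[i::4] for i in range(4)]  # split the work four ways

    out_queue = multiprocessing.Queue()
    processes = [
        multiprocessing.Process(target=worker, args=(chunk, out_queue))
        for chunk in chunks
    ]
    for p in processes:
        p.start()

    # Read one result per process before joining, so no child blocks on a full pipe.
    partials = [out_queue.get() for _ in processes]
    for p in processes:
        p.join()

    print(sum(partials))  # sum of squares of 1..100


if __name__ == "__main__":
    main()
```

Results arrive on the queue in whatever order the processes finish, which is fine here because addition does not depend on order.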

Benefits of multiprocessing:

True parallelism: Multiple CPU cores can be fully utilized, leading to significant performance boosts for CPU-bound work.

No GIL limitations: Each process has its own interpreter and its own GIL, so processes do not block one another, making this approach suitable for CPU-bound tasks.

Isolation: Processes have their own address space and Python interpreter, reducing the risk of shared variable conflicts.

However, multiprocessing also has some drawbacks:

Higher overhead: Creating processes requires more system resources and is generally slower than creating threads.

IPC complexity: Exchanging data between processes can be challenging, especially for complex data structures, because objects must be serialized (pickled) to cross the process boundary.

Comparison Summary

| | Multithreading (MT) | Multiprocessing (MP) |
| --- | --- | --- |
| Concurrency | Limited by GIL for CPU-bound code | True parallelism, no GIL limitations |
| Memory Sharing | Shared memory space | Each process has its own address space |
| Scheduling | OS-managed thread scheduling | OS-managed process scheduling |
| Overhead | Lower overhead for thread creation | Higher overhead for process creation |
| Isolation | Threads share the same address space | Processes have their own address spaces |

In conclusion:

Use multithreading when:

You need to improve responsiveness and want shared memory-based communication.

Your application is I/O-bound or has limited CPU-intensive tasks.

Use multiprocessing when:

You require true parallelism and can utilize multiple CPU cores effectively.

Your application has computationally intensive tasks with minimal I/O operations.

Remember, each approach has its strengths and weaknesses. Consider the nature of your application, the importance of responsiveness, and the trade-offs between concurrency, isolation, and performance when choosing between Python's multithreading and multiprocessing options.