Does Python asyncio use epoll?

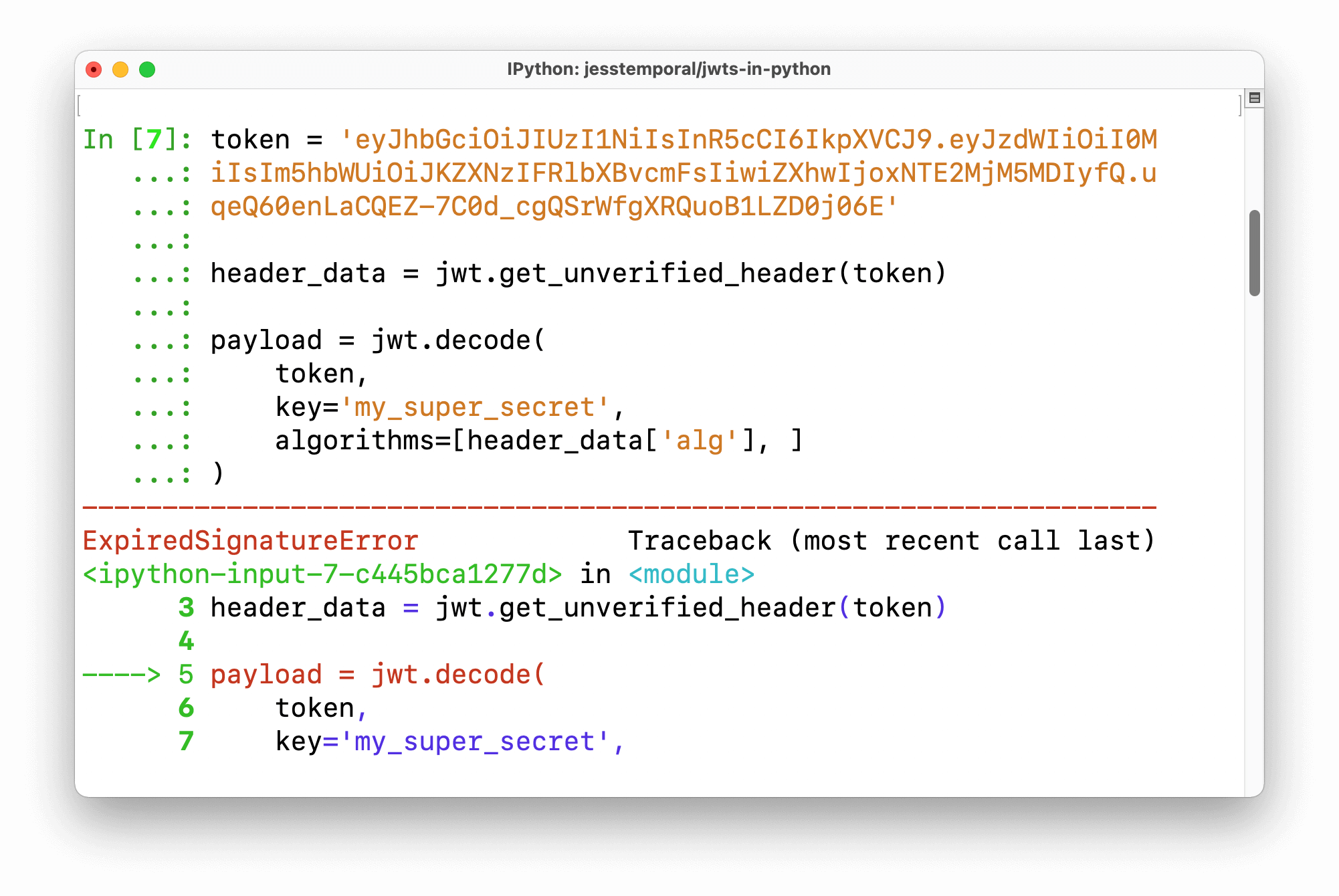

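Python's asyncio library, which is part of the standard library, uses epoll on Linux to wait efficiently on I/O such as network sockets. It does not call epoll directly: on Unix-like systems the default event loop is built on the `selectors` module, which picks the most efficient mechanism the platform offers. That is epoll on Linux and kqueue on macOS and the BSDs, with poll or select as fallbacks. On Windows, the default event loop (since Python 3.8) uses I/O completion ports instead. Epoll itself is a Linux kernel interface that provides a more efficient and scalable way than select or poll to monitor many file descriptors for readability or writability.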
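To check which mechanism is available on a particular machine, you can ask the standard `selectors` module, which asyncio's selector-based event loop builds on. This is only a minimal sketch: it reports what `selectors.DefaultSelector` resolves to, which is typically `EpollSelector` on Linux and `KqueueSelector` on macOS, and it does not inspect a running event loop.

```python
import selectors

# DefaultSelector is an alias for the most efficient selector
# implementation available on the current platform.
selector = selectors.DefaultSelector()
backend = type(selector).__name__
print(backend)
selector.close()
```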

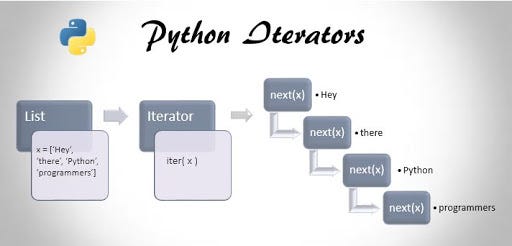

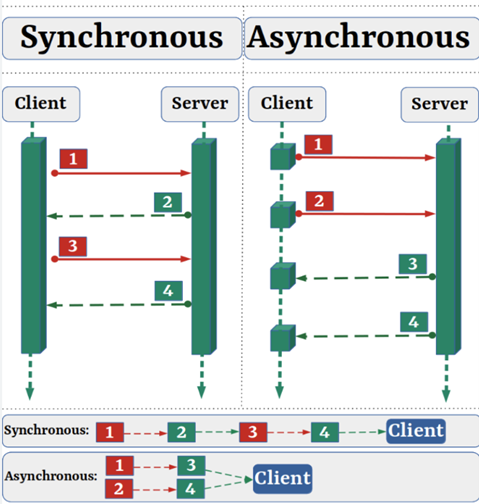

To understand why asyncio uses epoll, let's first discuss how asyncio handles I/O-bound operations. In asyncio, an event loop manages the execution of coroutines (functions defined with `async def`) and handles I/O-bound operations like network I/O and communication with subprocesses. Regular file I/O is the exception: asyncio has no asynchronous API for ordinary files, so blocking file operations are typically run in a thread pool. When a coroutine awaits an I/O operation (e.g., waiting for data from a socket), it yields control to the event loop, which runs other ready coroutines in the meantime. This allows asyncio to efficiently handle many concurrent connections and tasks.
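The hand-off between coroutines and the event loop can be seen in a small sketch. The `worker` coroutine and its delays are hypothetical, and `asyncio.sleep` stands in for a real I/O wait; the point is only that the loop runs the second worker while the first is suspended.

```python
import asyncio


async def worker(name, delay, log):
    log.append(f"{name} start")
    # Awaiting suspends this coroutine and returns control to the event loop.
    await asyncio.sleep(delay)
    log.append(f"{name} done")


async def main():
    log = []
    # Both workers run concurrently on one event loop.
    await asyncio.gather(worker("a", 0.1, log), worker("b", 0.05, log))
    return log


events = asyncio.run(main())
print(events)  # ['a start', 'b start', 'b done', 'a done']
```

Worker "b" finishes first even though it started second, because each `await` lets the loop switch to whichever coroutine is ready.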

Epoll is used because it provides a more efficient way to monitor many file descriptors than older mechanisms such as select or poll, which must be handed the full set of descriptors on every call. With epoll, you create an epoll object and register the file descriptors you want watched for readability or writability. The kernel does not call back into your code; instead, a call to `epoll_wait` blocks until at least one watched descriptor is ready and returns the list of ready descriptors. The event loop then runs the callbacks it has associated with those descriptors.
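The register-then-wait pattern can be sketched with the portable `selectors` module, which wraps epoll on Linux. The `on_readable` callback and the socket pair are assumptions made for illustration; note that it is the application code, not the kernel, that invokes the callback after `select()` returns.

```python
import selectors
import socket

sel = selectors.DefaultSelector()
left, right = socket.socketpair()
left.setblocking(False)
right.setblocking(False)

received = []


def on_readable(sock):
    # Hypothetical callback: read whatever is available.
    received.append(sock.recv(1024))


# Register interest in readability and attach the callback as user data.
sel.register(right, selectors.EVENT_READ, data=on_readable)

left.send(b"ping")

# select() returns the descriptors that are ready; we dispatch ourselves.
for key, _mask in sel.select(timeout=1):
    key.data(key.fileobj)

sel.unregister(right)
sel.close()
left.close()
right.close()
print(received)  # [b'ping']
```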

Here are some key benefits of using epoll in asyncio:

- Efficient monitoring: Epoll allows your process to efficiently monitor multiple file descriptors without having to repeatedly check each file descriptor's status, which can be costly.
- Scalability: With epoll, you can easily handle many concurrent connections and file descriptors without introducing significant overhead or bottlenecks in your event loop.
- Low latency: Epoll provides a low-latency mechanism for monitoring file descriptors, which is essential when handling real-time I/O-bound operations like network I/O.

To illustrate how asyncio uses epoll, let's consider an example: a web server that handles many concurrent HTTP connections. The event loop in the web server's asyncio application would use epoll to monitor multiple socket file descriptors for readability or writability. When a new connection is established, the event loop schedules the corresponding coroutine to handle the request. If a client sends a message, the event loop will wake up and run the corresponding coroutine.
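The way the event loop ties a socket to a wake-up can be sketched with the low-level `loop.add_reader` API. This assumes a selector-based event loop, which is the default on Linux and macOS; the default Windows event loop does not support `add_reader`. The socket pair stands in for a client connection.

```python
import asyncio
import socket


async def main():
    loop = asyncio.get_running_loop()
    rsock, wsock = socket.socketpair()
    rsock.setblocking(False)
    ready = loop.create_future()

    def on_readable():
        # Called by the event loop once the selector reports rsock readable.
        loop.remove_reader(rsock)
        ready.set_result(rsock.recv(1024))

    # Ask the loop to watch rsock; underneath it registers the descriptor
    # with its selector (epoll on Linux).
    loop.add_reader(rsock, on_readable)

    wsock.send(b"hello")
    data = await ready  # suspended until on_readable fires
    rsock.close()
    wsock.close()
    return data


result = asyncio.run(main())
print(result)  # b'hello'
```

Higher-level APIs such as streams do this registration for you, so application code rarely calls `add_reader` directly.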

In summary, Python's asyncio library uses epoll on Linux (and the equivalent mechanism on other platforms, such as kqueue on macOS) to efficiently manage I/O-bound operations on sockets and other pollable file descriptors. This allows asyncio to scale well in environments where many concurrent connections are common, such as web servers handling thousands of clients simultaneously.

Does asyncio come with Python?

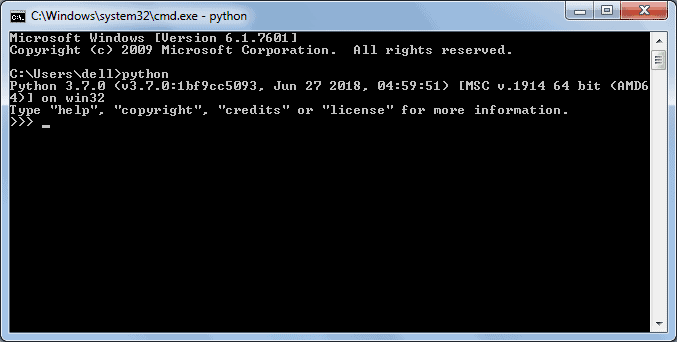

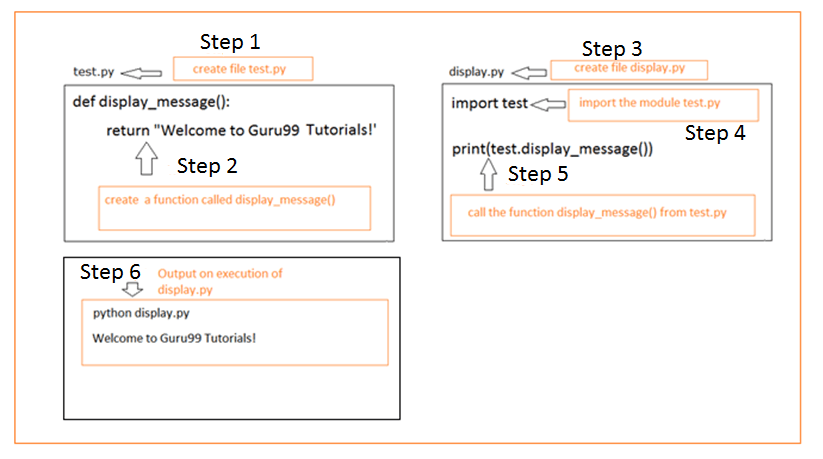

Yes, asyncio is indeed a part of the Python Standard Library since version 3.4. It's designed for asynchronous I/O, which means it can handle multiple tasks concurrently without blocking the main thread. This makes it particularly useful for developing networked applications that require handling multiple connections simultaneously.

In essence, asyncio provides support for writing single-threaded concurrent code using coroutines, multiplexing I/O access over sockets and other resources, and implementing network clients and servers.

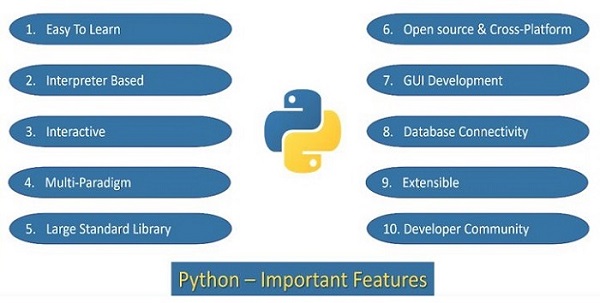

Some key features of asyncio include:

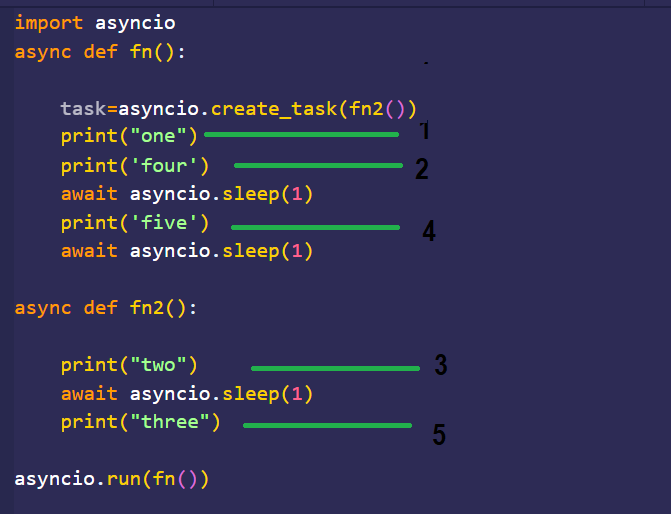

- Coroutines: A coroutine is a function that can be paused at its `await` points and resumed later from where it left off. This allows for efficient use of system resources and makes it easier to write concurrent code.
- Event Loop: The event loop manages the execution of coroutines, handling tasks like scheduling, running, and pausing them as needed. It's also responsible for processing incoming data and triggering callback functions.
- Tasks: Tasks are lightweight objects that wrap a coroutine and schedule it to run on the event loop. They can be used to create and manage concurrent code.

When you use asyncio, your Python program doesn't have to be multi-threaded; instead, it can handle multiple tasks concurrently in a single thread, without creating new threads or processes. This is especially useful for applications that need to handle many simultaneous connections, such as web servers, chat platforms, or online games.
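The three pieces fit together as in the following minimal sketch. The `square` coroutine is a hypothetical stand-in for real work, and the thread IDs are recorded only to show that all tasks share one thread.

```python
import asyncio
import threading


async def square(n, thread_ids):
    thread_ids.add(threading.get_ident())
    await asyncio.sleep(0)  # an await point: the coroutine may be paused here
    return n * n


async def main():
    thread_ids = set()
    # create_task wraps each coroutine in a Task and schedules it on the loop.
    tasks = [asyncio.create_task(square(n, thread_ids)) for n in range(4)]
    results = await asyncio.gather(*tasks)
    return results, thread_ids


# asyncio.run creates the event loop, runs main() to completion, and closes it.
results, thread_ids = asyncio.run(main())
print(results, len(thread_ids))  # [0, 1, 4, 9] 1
```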

As a bonus, since asyncio is part of the Standard Library, you don't need to install any additional packages to start using it! You can simply import the asyncio module and begin writing your concurrent code.

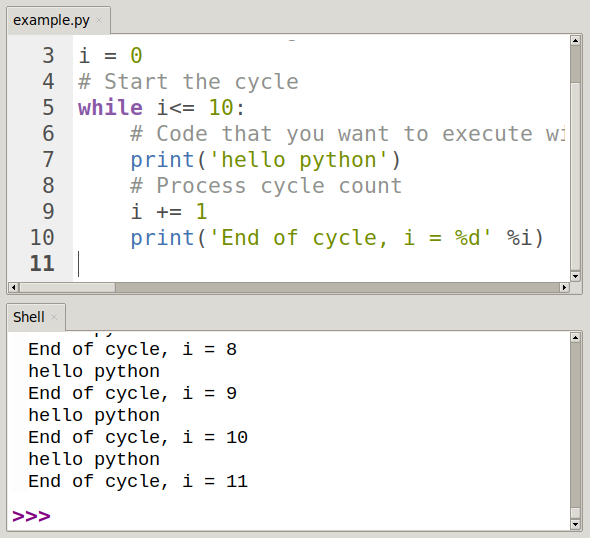

Here's a simple example of how you might use asyncio to create a concurrent web server:

import asyncio

@asyncio.coroutine

def handle_connection(reader, writer):

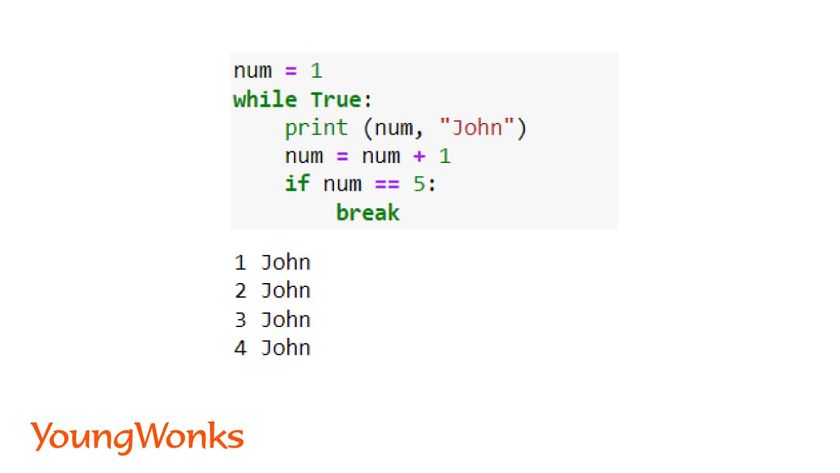

while True:

data = yield from reader.readline()

if not data:

break

print(data.decode())

writer.write(data)

@asyncio.coroutine

def run_server(port):

loop = asyncio.get_event_loop()

server = await loop.create_server(handle_connection, '127.0.0.1', port)

print(f"Server started on {server.sockets[0].getsockname()}...")

try:

await server.wait_closed()

finally:

server.close()

asyncio.run_coroutine_threadsafe(run_server(8080), loop.current_task().coro)

In this example, the handle_connection function handles a single client connection: it reads lines and echoes them back. The run_server function creates and runs the server. When you run this code, it starts an asynchronous TCP server listening on port 8080. It does not speak HTTP, so a real web server would need request parsing on top of it.
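To try a server like this without a separate terminal, a client can connect from the same program using `asyncio.open_connection`. The sketch below is self-contained and makes a few assumptions: it defines its own small `echo` handler rather than reusing the code above, and it binds to port 0 so the operating system picks a free port.

```python
import asyncio


async def echo(reader, writer):
    # Hypothetical handler: echo lines back until the client disconnects.
    while data := await reader.readline():
        writer.write(data)
        await writer.drain()
    writer.close()
    await writer.wait_closed()


async def main():
    server = await asyncio.start_server(echo, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    async with server:
        # Client side: connect, send one line, and read the echo.
        reader, writer = await asyncio.open_connection("127.0.0.1", port)
        writer.write(b"hello\n")
        await writer.drain()
        reply = await reader.readline()
        writer.close()
        await writer.wait_closed()
    return reply


reply = asyncio.run(main())
print(reply)  # b'hello\n'
```

Server and client share one event loop here, which is convenient for experiments; in practice they would usually be separate processes.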

Overall, asyncio is a powerful tool for building concurrent applications in Python, making it easier to handle multiple tasks simultaneously without sacrificing performance or stability.
