GAN code Python

I'd be happy to help you with a Python implementation of Generative Adversarial Networks (GANs)!

Before we dive into the code, let's quickly review what GANs are:

What is a GAN?

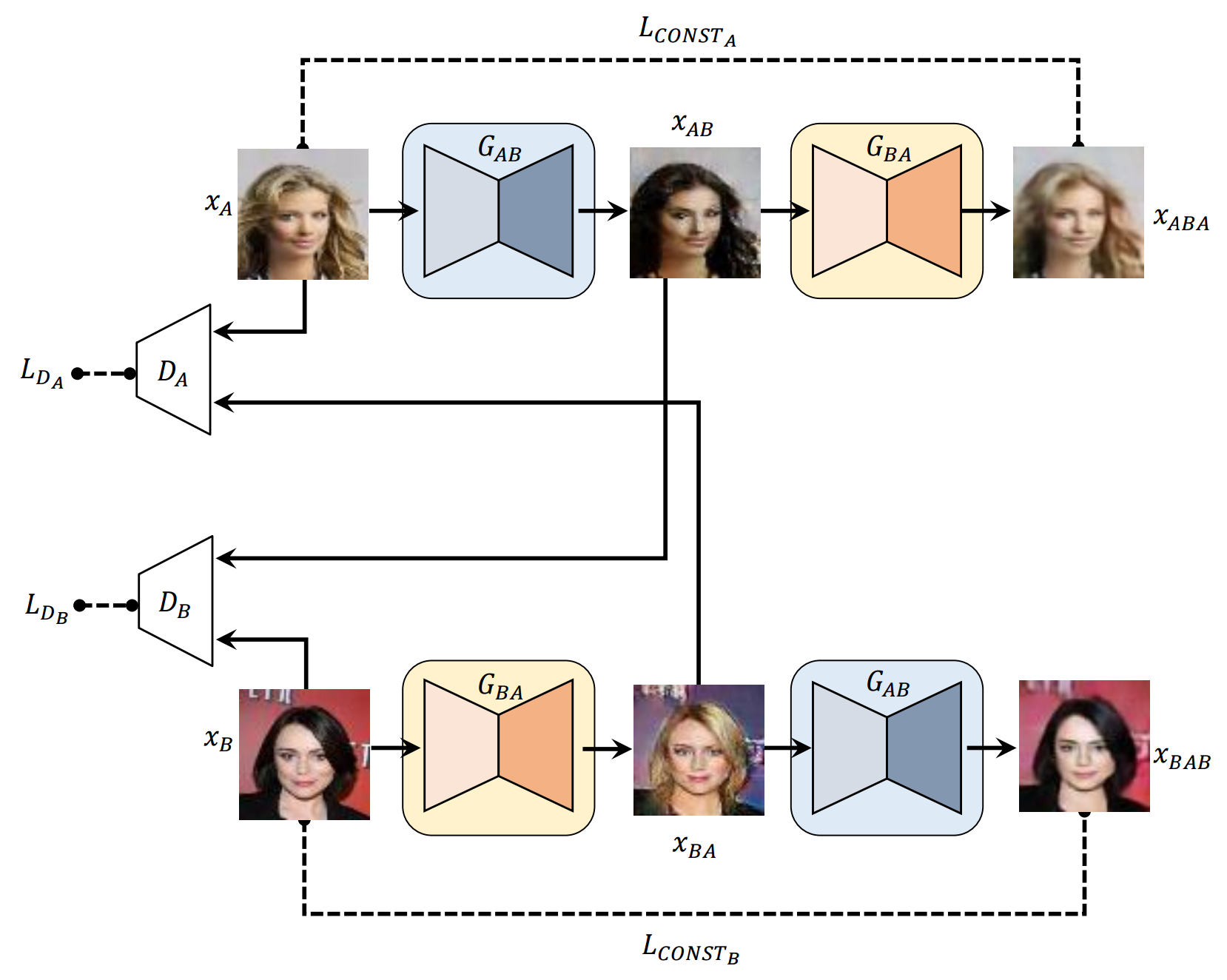

A GAN is a type of deep learning model that consists of two neural networks: a generator and a discriminator. The goal of the generator network is to generate new, synthetic data samples that resemble the real data distribution. Meanwhile, the discriminator network tries to distinguish between the generated fake data and the real data.

The training process is an adversarial game between the generator and the discriminator. At each iteration, the generator produces new data samples, and the discriminator updates its weights based on how well it classifies real data as "real" and generated data as "fake". The generator, in turn, updates its weights based on how often its samples are classified as "real".
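To make "how well it can classify" concrete, here is a minimal sketch that scores a discriminator with binary cross-entropy, which is one common choice rather than the only one. The function name `discriminator_bce` and the sample probabilities are illustrative assumptions, not part of any library.

```python
import math

def discriminator_bce(p_real, p_fake):
    """Binary cross-entropy of a discriminator on one batch.

    p_real: discriminator outputs D(x) for real samples (target label 1).
    p_fake: discriminator outputs D(G(z)) for generated samples (target label 0).
    """
    real_term = -sum(math.log(p) for p in p_real) / len(p_real)
    fake_term = -sum(math.log(1.0 - p) for p in p_fake) / len(p_fake)
    return real_term + fake_term

# Hypothetical discriminator outputs, chosen only for illustration.
undecided = discriminator_bce([0.5, 0.5], [0.5, 0.5])  # cannot tell real from fake
confident = discriminator_bce([0.9, 0.8], [0.1, 0.2])  # classifies most samples correctly

print(f"undecided: {undecided:.3f}")  # 2 * ln(2), about 1.386
print(f"confident: {confident:.3f}")
```

A lower value means the discriminator separates the two classes better, so the generator's job is to push this number back up.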

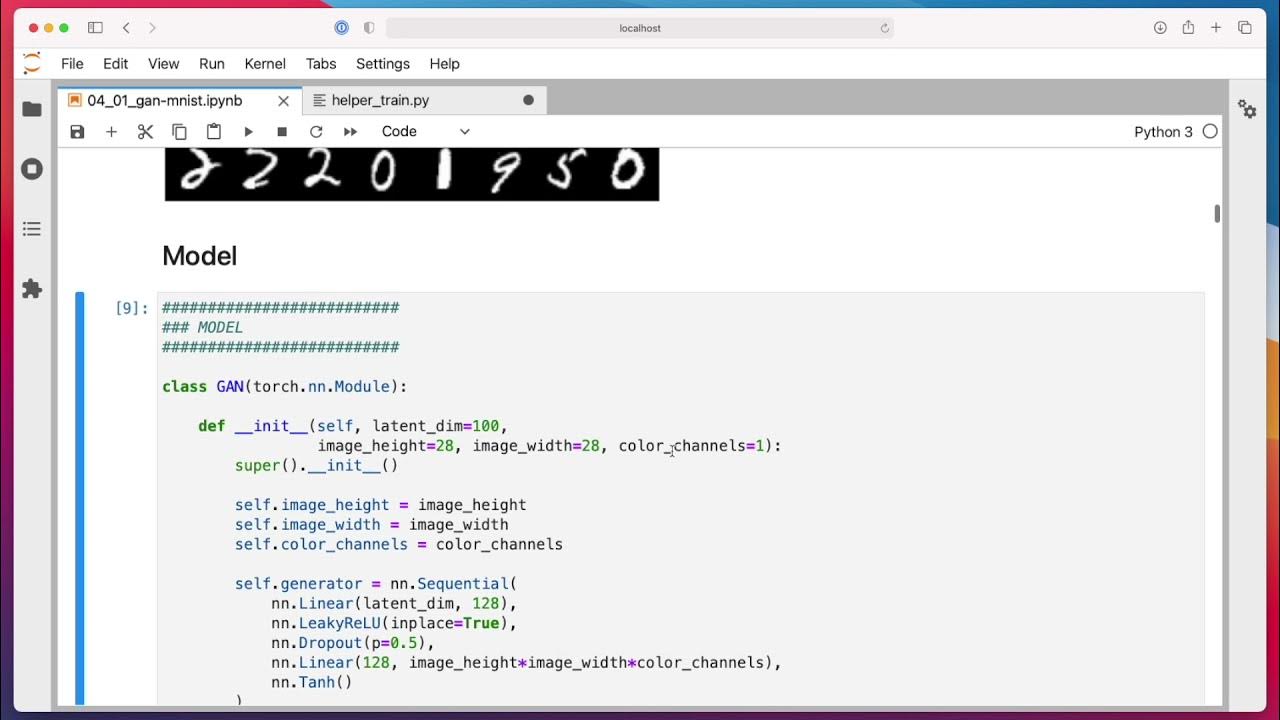

GAN Code in Python:

Here's a basic implementation of a GAN using PyTorch:

    import torch
    import torch.nn as nn
    import torch.optim as optim

    # Define the generator network (G)
    class Generator(nn.Module):
        def __init__(self, z_dim, img_size):
            super().__init__()
            self.z_dim = z_dim
            self.img_size = img_size
            self.fc1 = nn.Linear(z_dim, 128)
            self.fc2 = nn.Linear(128, img_size[0] * img_size[1] * 3)

        def forward(self, z):
            h = torch.relu(self.fc1(z))
            # Sigmoid keeps pixel values in [0, 1]; reshape to (N, 3, H, W)
            out = torch.sigmoid(self.fc2(h)).view(-1, 3, self.img_size[0], self.img_size[1])
            return out

    # Define the discriminator network (D)
    class Discriminator(nn.Module):
        def __init__(self, img_size):
            super().__init__()
            self.img_size = img_size
            self.conv1 = nn.Conv2d(3, 64, kernel_size=4, stride=2, padding=1)
            self.conv2 = nn.Conv2d(64, 128, kernel_size=4, stride=2, padding=1)
            # Each stride-2 convolution halves height and width, so two of them divide by 4
            self.flat_features = 128 * (img_size[0] // 4) * (img_size[1] // 4)
            self.fc1 = nn.Linear(self.flat_features, 1)

        def forward(self, img):
            # Call the layers directly so their stride and padding are applied
            h = torch.relu(self.conv1(img))
            h = torch.relu(self.conv2(h))
            # Output shape (N, 1): probability that each image is real
            out = torch.sigmoid(self.fc1(h.view(-1, self.flat_features)))
            return out

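    # Set hyperparameters
    z_dim = 100
    img_size = (64, 64)
    batch_size = 64   # assumed value; not specified in the original
    num_epochs = 5    # assumed value
    lr = 2e-4         # assumed value
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # Initialize generator and discriminator networks, plus one optimizer each
    G = Generator(z_dim, img_size).to(device)
    D = Discriminator(img_size).to(device)
    optimizer_G = optim.Adam(G.parameters(), lr=lr)
    optimizer_D = optim.Adam(D.parameters(), lr=lr)

    # Placeholder data: random tensors stand in for real images in [0, 1].
    # Replace this with a DataLoader over your own (image, label) dataset.
    placeholder = torch.utils.data.TensorDataset(torch.rand(256, 3, *img_size), torch.zeros(256))
    loader = torch.utils.data.DataLoader(placeholder, batch_size=batch_size, shuffle=True)

    # Define the loss functions
    def gan_loss(G, D, real_data, z):
        fake_data = G(z)

        # Loss for the generator (G): mean squared error against the "real" label 1
        d_fake = D(fake_data)
        loss_G = torch.mean((d_fake - torch.ones_like(d_fake)) ** 2)

        # Loss for the discriminator (D): binary cross-entropy.
        # detach() keeps the discriminator loss from sending gradients into G.
        eps = 1e-8  # avoids log(0)
        d_real = D(real_data)
        d_fake_detached = D(fake_data.detach())
        loss_D = -torch.mean(torch.log(d_real + eps)) - torch.mean(torch.log(1 - d_fake_detached + eps))
        return loss_G, loss_D

    # Train the GAN
    for epoch in range(num_epochs):
        for i, (real_data, _) in enumerate(loader):
            real_data = real_data.to(device)
            # Sample one latent vector per real image in the batch
            z = torch.randn(real_data.shape[0], z_dim, device=device)

            # Update the discriminator (D): minimize its cross-entropy loss
            optimizer_D.zero_grad()
            D_loss = gan_loss(G, D, real_data, z)[1]
            D_loss.backward()
            optimizer_D.step()

            # Update the generator (G): minimize its loss
            optimizer_G.zero_grad()
            G_loss = gan_loss(G, D, real_data, z)[0]
            G_loss.backward()
            optimizer_G.step()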

This code defines a basic GAN architecture with a generator and discriminator. You can customize the network architectures and hyperparameters to suit your specific needs.
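If you do customize the discriminator, the input width of its final linear layer has to match the flattened output of the last convolution. The sketch below works through that arithmetic with the standard output-size formula for a 2D convolution (dilation 1). The helper name `conv2d_out` is an illustrative assumption.

```python
def conv2d_out(size, kernel_size, stride=1, padding=0):
    """Output length along one spatial axis of a 2D convolution (dilation 1)."""
    return (size + 2 * padding - kernel_size) // stride + 1

height = width = 64   # input resolution used above
for _ in range(2):    # two conv layers: kernel 4, stride 2, padding 1
    height = conv2d_out(height, kernel_size=4, stride=2, padding=1)
    width = conv2d_out(width, kernel_size=4, stride=2, padding=1)

flat_features = 128 * height * width  # 128 output channels from the second conv
print(height, width, flat_features)   # 16 16 32768
```

Each stride-2 layer halves the resolution, so two of them shrink a 64x64 input to 16x16 before flattening.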

Notes:

PyTorch: This implementation uses PyTorch as the deep learning framework.

GAN components: The code includes two neural networks: Generator (G) and Discriminator (D).

Loss functions: The GAN loss function combines the mean squared error (MSE) for the generator and binary cross-entropy (BCE) for the discriminator.
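As a small worked example of the generator side of that note, the sketch below computes the mean squared error between the discriminator's outputs on generated samples and the target label 1. Training a generator against a squared-error target is the idea behind least-squares GANs. The function name `generator_mse` and the sample values are illustrative assumptions.

```python
def generator_mse(d_fake):
    """Mean squared error between D(G(z)) and the target label 1 ("real")."""
    return sum((p - 1.0) ** 2 for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs on generated samples.
not_fooled = generator_mse([0.1, 0.2])    # discriminator rejects the fakes
mostly_fooled = generator_mse([0.9, 0.8]) # discriminator accepts most fakes

print(f"not fooled:    {not_fooled:.3f}")     # 0.725
print(f"mostly fooled: {mostly_fooled:.3f}")  # 0.025
```

The loss falls toward zero as the discriminator's outputs on generated samples approach 1, which is the direction the generator is trained in.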

Now, you can train your GAN using this code!

Python generative adversarial networks geeksforgeeks

I'd be happy to help! Python Generative Adversarial Networks (GANs) are a fascinating topic, and I'll provide you with an in-depth explanation.

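Generative Adversarial Networks are a type of deep learning model that has gained immense popularity in recent years. In essence, a GAN consists of two neural networks: a generator, which produces synthetic samples, and a discriminator, which classifies samples as real or generated.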

The key idea behind GANs is to create a game-like scenario where these two networks compete with each other:

The generator tries to produce realistic synthetic data that can fool the discriminator. The discriminator, on the other hand, aims to correctly identify whether a given data sample is real or generated by the generator.

Through this adversarial process, both networks improve their performance iteratively. The generator learns to generate more realistic data, while the discriminator becomes better at distinguishing between real and synthetic data. This competition drives the training process and ultimately results in a well-trained GAN that can generate high-quality samples that are similar to the target distribution.
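One round of this competition can be traced by hand on a toy problem. The sketch below is a deliberately tiny, assumption-laden example: a one-parameter generator `G(z) = z + b`, a logistic discriminator `D(x) = sigmoid(w*x + c)`, hand-derived gradients, and made-up numbers for the data. It is meant to show the alternating updates, not a practical training recipe.

```python
import math

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def mean(values):
    return sum(values) / len(values)

# Toy setup (all names and numbers are illustrative assumptions).
real = [2.5, 3.0, 3.5, 3.0]    # stand-in "real" samples centred on 3
noise = [-0.5, 0.0, 0.5, 0.0]  # fixed noise batch so the run is repeatable
b, w, c = 0.0, 0.0, 0.0        # generator shift, discriminator weight and bias
lr = 0.1

def d_loss(w, c, b):
    """Binary cross-entropy of the discriminator on real and generated samples."""
    fake = [z + b for z in noise]
    return (-mean([math.log(sigmoid(w * x + c)) for x in real])
            - mean([math.log(1.0 - sigmoid(w * x + c)) for x in fake]))

def g_loss(w, c, b):
    """Generator loss: low when the discriminator labels generated samples as real."""
    return -mean([math.log(sigmoid(w * (z + b) + c)) for z in noise])

# Discriminator step: gradient descent on d_loss with the generator frozen.
fake = [z + b for z in noise]
grad_w = (mean([-(1.0 - sigmoid(w * x + c)) * x for x in real])
          + mean([sigmoid(w * x + c) * x for x in fake]))
grad_c = (mean([-(1.0 - sigmoid(w * x + c)) for x in real])
          + mean([sigmoid(w * x + c) for x in fake]))
d_before = d_loss(w, c, b)
w, c = w - lr * grad_w, c - lr * grad_c
d_after = d_loss(w, c, b)

# Generator step: gradient descent on g_loss with the discriminator frozen.
grad_b = mean([-(1.0 - sigmoid(w * (z + b) + c)) * w for z in noise])
g_before = g_loss(w, c, b)
b = b - lr * grad_b
g_after = g_loss(w, c, b)

print(f"discriminator loss: {d_before:.4f} -> {d_after:.4f}")
print(f"generator loss:     {g_before:.4f} -> {g_after:.4f}")
print(f"generator shift b:  {b:.4f}")
```

After the discriminator step its loss drops, and after the generator step the shift `b` moves from 0 toward the real data. Repeating the two steps is the iterative improvement described above, although on real problems the process is not guaranteed to converge.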

In Python, we can implement GANs using popular libraries like TensorFlow or PyTorch. Let's consider a simple example where we want to generate images of handwritten digits (MNIST dataset) using a GAN.

Here's some sample code:

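    import numpy as np
    import tensorflow as tf

    # Load MNIST dataset
    (X_train, _), (X_test, _) = tf.keras.datasets.mnist.load_data()

    # Normalize pixel values to [0, 1] and add a channel axis: (N, 28, 28, 1)
    X_train = (X_train / 255.0).astype("float32")[..., np.newaxis]
    X_test = (X_test / 255.0).astype("float32")[..., np.newaxis]

    # Define generator and discriminator networks
    generator = tf.keras.Sequential([
        tf.keras.Input(shape=(100,)),
        tf.keras.layers.Dense(7 * 7 * 64),
        tf.keras.layers.ReLU(),
        tf.keras.layers.Dropout(0.3),
        # Conv2DTranspose needs a 4D (N, H, W, C) input, so reshape first
        tf.keras.layers.Reshape((7, 7, 64)),
        tf.keras.layers.Conv2DTranspose(64, (5, 5), strides=(1, 1), padding="same"),  # 7x7
        tf.keras.layers.ReLU(),
        tf.keras.layers.Dropout(0.3),
        tf.keras.layers.Conv2DTranspose(32, (5, 5), strides=(2, 2), padding="same"),  # 14x14
        tf.keras.layers.ReLU(),
        # Sigmoid keeps pixels in [0, 1], matching the normalized training data
        tf.keras.layers.Conv2DTranspose(1, (5, 5), strides=(2, 2), padding="same", activation="sigmoid"),  # 28x28
    ])
    discriminator = tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28, 1)),
        tf.keras.layers.Conv2D(64, (5, 5)),
        tf.keras.layers.LeakyReLU(),
        tf.keras.layers.MaxPooling2D((2, 2)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128),
        tf.keras.layers.LeakyReLU(),
        # Single output: probability that the input image is real
        tf.keras.layers.Dense(1, activation="sigmoid"),
    ])

    # Define loss functions and optimizers
    generator_loss_fn = tf.keras.losses.MeanSquaredError()
    discriminator_loss_fn = tf.keras.losses.BinaryCrossentropy()
    generator_optimizer = tf.keras.optimizers.Adam(0.001)
    discriminator_optimizer = tf.keras.optimizers.Adam(0.001)

    # Train GAN
    for epoch in range(100):
        for x_batch in np.array_split(X_train, 600):  # 600 batches of 100 images
            # Generate one latent vector per real image
            z = np.random.normal(size=(x_batch.shape[0], 100)).astype("float32")

            # Update discriminator: real images get label 1, generated images label 0
            with tf.GradientTape() as tape:
                d_real = discriminator(x_batch, training=True)
                d_fake = discriminator(generator(z, training=True), training=True)
                d_loss = (discriminator_loss_fn(tf.ones_like(d_real), d_real)
                          + discriminator_loss_fn(tf.zeros_like(d_fake), d_fake))
            grads = tape.gradient(d_loss, discriminator.trainable_variables)
            discriminator_optimizer.apply_gradients(zip(grads, discriminator.trainable_variables))

            # Update generator: push the discriminator's output on fakes toward 1
            with tf.GradientTape() as tape:
                d_fake = discriminator(generator(z, training=True), training=True)
                g_loss = generator_loss_fn(tf.ones_like(d_fake), d_fake)
            grads = tape.gradient(g_loss, generator.trainable_variables)
            generator_optimizer.apply_gradients(zip(grads, generator.trainable_variables))

        print(f"Epoch {epoch+1}: Generator Loss={float(g_loss):.4f}, Discriminator Loss={float(d_loss):.4f}")

    # Generate synthetic images, shape (100, 28, 28, 1)
    z = np.random.normal(size=(100, 100)).astype("float32")
    generated_images = generator(z, training=False).numpy()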

This is a basic example to get you started. You can experiment with different architectures, hyperparameters, and training procedures to achieve better results.
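When experimenting with the generator architecture, it helps to check that the upsampling layers actually end at the 28x28 MNIST resolution. The sketch below reproduces the output-length rule Keras applies to `Conv2DTranspose` (assuming dilation 1 and no explicit `output_padding`). The helper name `conv_transpose_out` and the 7x7 starting size are illustrative assumptions.

```python
def conv_transpose_out(size, kernel_size, stride, padding):
    """Output length along one axis of a Keras-style Conv2DTranspose.

    Assumes dilation 1 and no explicit output_padding.
    """
    if padding == "same":
        return size * stride
    if padding == "valid":
        return size * stride + max(kernel_size - stride, 0)
    raise ValueError(f"unknown padding: {padding!r}")

# Hypothetical generator plan: start from a 7x7 feature map and upsample twice.
size = 7
for stride in (2, 2):
    size = conv_transpose_out(size, kernel_size=5, stride=stride, padding="same")
print(size)  # 28, the MNIST image side length

# With "valid" padding the first of those layers already overshoots 14.
print(conv_transpose_out(7, kernel_size=5, stride=2, padding="valid"))  # 17
```

With `"same"` padding each stride-2 layer exactly doubles the resolution, which is why 7 is a convenient starting size for 28x28 images.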

Remember, GANs are highly dependent on the choice of architecture, loss functions, and optimization algorithms. It's essential to carefully tune these components to achieve satisfactory performance.

I hope this helps! Do you have any specific questions or topics you'd like me to expand upon?