How to perform XGBoost in Python?

Here's a detailed guide on how to perform XGBoost in Python:

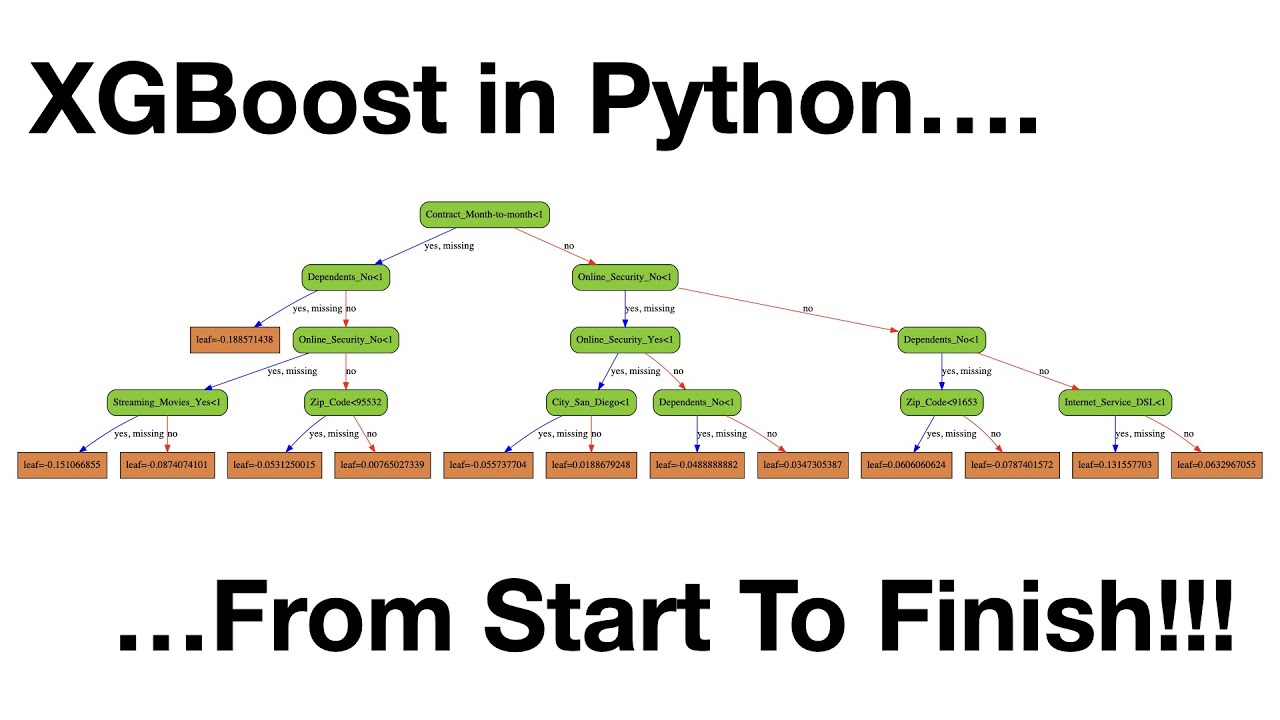

What is XGBoost?

XGBoost (Extreme Gradient Boosting) is an open-source, high-performance gradient boosting library originally created by Tianqi Chen and maintained under the DMLC (Distributed Machine Learning Community) project. It builds ensembles of decision trees with parallelized, scalable training, which makes it particularly useful for large-scale machine learning tasks.
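To make "gradient boosting" concrete, here is a minimal sketch of the core idea in NumPy: each new depth-1 tree (a "stump") is fitted to the residuals of the current ensemble and added with a small learning rate. This is not how XGBoost is implemented (XGBoost adds second-order gradients, regularization, and optimized split finding); the helper names fit_stump, predict_stump, and boost are hypothetical and exist only for this illustration.

```python
import numpy as np

def fit_stump(x, residual):
    """Find the single threshold on 1-D x that minimizes squared error."""
    best = None
    # Skip the largest value so the right-hand side is never empty.
    for threshold in np.unique(x)[:-1]:
        left = residual[x <= threshold]
        right = residual[x > threshold]
        error = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or error < best[0]:
            best = (error, threshold, left.mean(), right.mean())
    _, threshold, left_value, right_value = best
    return threshold, left_value, right_value

def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return np.where(x <= threshold, left_value, right_value)

def boost(x, y, n_rounds=50, learning_rate=0.1):
    base = y.mean()                      # initial prediction: the mean target
    prediction = np.full_like(y, base, dtype=float)
    stumps = []
    for _ in range(n_rounds):
        residual = y - prediction        # negative gradient of squared error
        stump = fit_stump(x, residual)
        prediction += learning_rate * predict_stump(stump, x)
        stumps.append(stump)
    return base, stumps, prediction

# Synthetic 1-D regression problem, used only for illustration.
rng = np.random.default_rng(0)
x = np.linspace(0, 6, 200)
y = np.sin(x) + rng.normal(scale=0.1, size=x.size)

base, stumps, fitted = boost(x, y)
mse_start = np.mean((y - base) ** 2)
mse_end = np.mean((y - fitted) ** 2)
print("Training MSE before boosting:", mse_start)
print("Training MSE after boosting: ", mse_end)
```

The training error drops as rounds are added, which is the behavior that n_estimators and learning_rate control in XGBoost.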

Why use XGBoost in Python?

Python is a popular language for data science due to its ease of use, flexibility, and extensive libraries. Combining XGBoost with Python offers the following advantages:

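Scalability: XGBoost parallelizes tree construction across CPU cores natively, so no extra Python multiprocessing code is needed. In the scikit-learn wrapper, the number of threads is set with the n_jobs parameter, which enables efficient training on large datasets.

Interoperability: XGBoost's estimators follow the scikit-learn API, so they work with scikit-learn pipelines, cross-validation, and grid search, and they accept pandas DataFrames and NumPy arrays directly. This makes it easy to use XGBoost within larger workflows alongside other libraries such as TensorFlow or Keras.

How to perform XGBoost in Python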

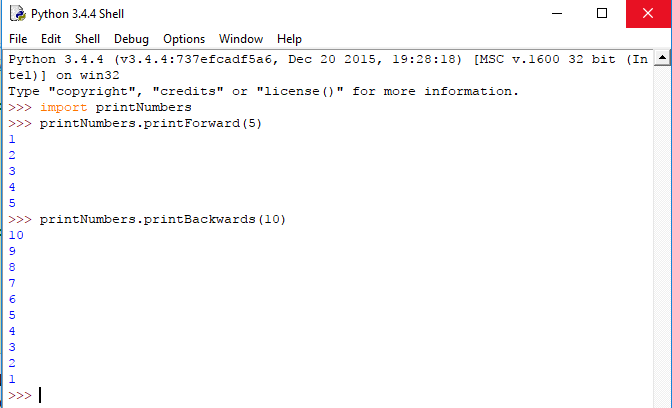

Here's a step-by-step guide on how to use XGBoost in Python:

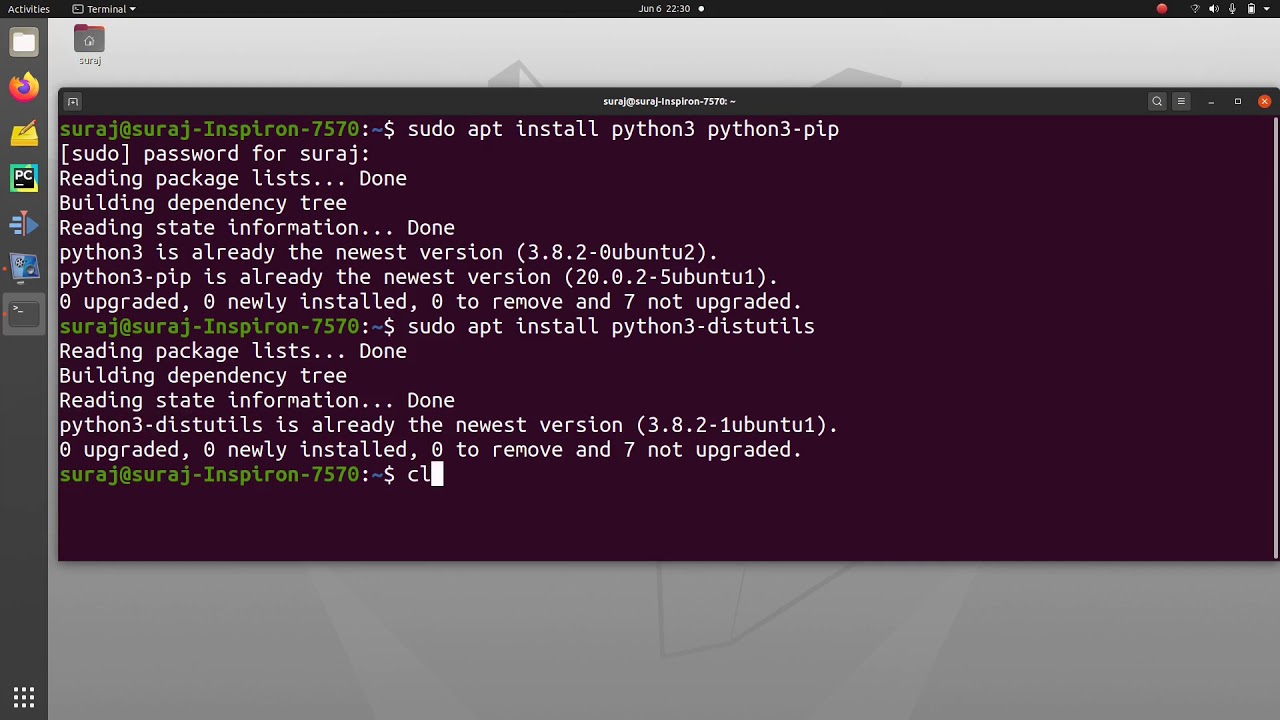

1. Installation

You can install XGBoost using pip:

pip install xgboost

2. Importing the library

In your Python script, import the XGBoost library:

import xgboost as xgb

3. Loading data

Load your dataset (e.g., a CSV file) into a Pandas DataFrame or NumPy array:

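import pandas as pd

from sklearn.model_selection import train_test_split

# Load CSV file

df = pd.read_csv('data.csv')

# Separate features and labels as NumPy arrays, which XGBoost accepts directly

features = df.drop('target', axis=1).values

labels = df['target'].values

# Hold out 20% of the rows as a test set for the prediction and evaluation steps

train_data, test_data, train_labels, test_labels = train_test_split(features, labels, test_size=0.2, random_state=42)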

4. Creating the XGBoost model

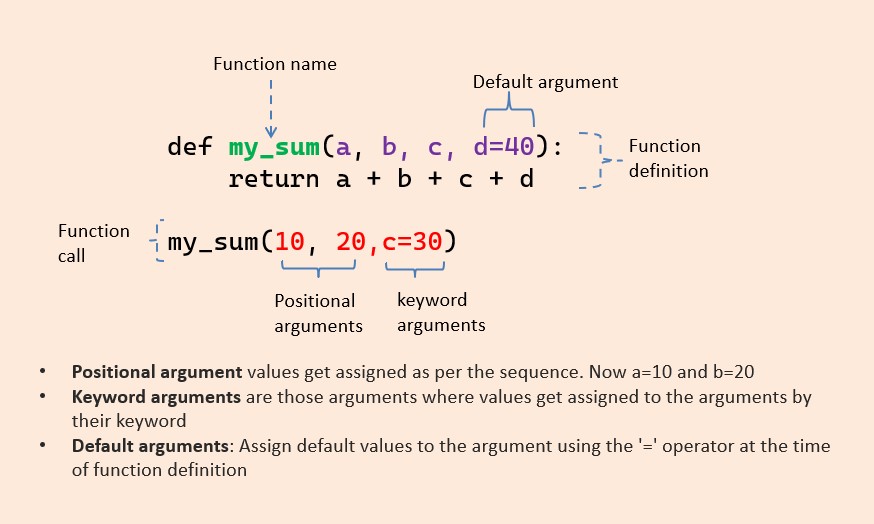

Create an XGBoost model instance, specifying hyperparameters such as learning rate, maximum depth of trees, and number of boosting rounds:

# Create the XGBoost model

xgb_model = xgb.XGBClassifier(

objective='binary:logistic',

max_depth=6,

learning_rate=0.1,

n_estimators=100,

gamma=0,

min_child_weight=3,

subsample=0.8,

colsample_bytree=0.9,

reg_lambda=1e-4,  # write 1e-4; in Python, 10^-4 is a bitwise XOR, not a power

random_state=42

)

5. Training the model

Train the XGBoost model using the loaded data:

# Train the model

xgb_model.fit(train_data, train_labels)

6. Making predictions

Use the trained model to make predictions on new, unseen data:

# Make predictions on test set

test_pred = xgb_model.predict(test_data)

7. Evaluating the model

Evaluate your XGBoost model using metrics like accuracy, precision, recall, F1 score, or AUC-ROC:

from sklearn.metrics import accuracy_score

# Evaluate the model

accuracy = accuracy_score(test_labels, test_pred)

print("Accuracy:", accuracy)

Conclusion

This guide walked through the basic XGBoost workflow in Python: installing the library, loading data, configuring a model, training it, making predictions, and evaluating the results. With its scalability and ease of use, XGBoost has become a popular choice for large-scale machine learning tasks, and these steps are a starting point for building accurate predictive models on your own data.

Python xgboost sklearn github

XGBoost is an open-source machine learning library that provides a fast and efficient way to train gradient boosting models. Its boosters are built from decision trees (the default) or linear models, and it is widely used in industry for classification, regression, and ranking tasks.

In Python, you can use XGBoost along with Scikit-learn (sklearn) library to build machine learning models. Here's a high-level overview of how you can use these libraries together:

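First, install the necessary libraries. Both XGBoost and scikit-learn are available from pip:

pip install xgboost scikit-learn

Next, import the libraries and load a dataset. This example uses the Iris dataset that ships with scikit-learn: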

import xgboost as xgb

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

iris = load_iris()

X = iris.data[:, :2] # we only take the first two features.

y = iris.target

Then, split your data into training and testing sets using train_test_split:

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

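Create the classifier. Iris has three classes, so use a multi-class objective and evaluation metric, then train the model and measure accuracy on the test set:

xgb_model = xgb.XGBClassifier(max_depth=6, learning_rate=0.1, n_estimators=100, objective='multi:softprob', eval_metric='mlogloss')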

xgb_model.fit(X_train, y_train)

y_pred = xgb_model.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)

print('Accuracy:', accuracy)

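Finally, predict classes for new samples. Each row must have the same two features as the training data:

import numpy as np

new_data = np.array([[1, 2], [3, 4]])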

predictions = xgb_model.predict(new_data)

The Iris target has three classes, so the example above is a multi-class problem and needs a multi-class objective such as multi:softprob (which outputs class probabilities) or multi:softmax (which outputs class labels). Other objectives cover other tasks: binary:logistic for two-class classification, reg:squarederror for regression, and rank:pairwise for pairwise ranking.

GitHub is a web-based platform that allows developers to share and collaborate on software projects. The official XGBoost source code, including the Python package, is hosted there in the dmlc/xgboost repository, and many open-source projects that build on XGBoost can be found on GitHub as well.

Remember to explore more about these libraries and their functionalities by checking out their documentation and examples!

Here's some additional information you might find useful:

For a detailed comparison between XGBoost and other popular machine learning libraries like LightGBM and CatBoost, check out this blog post: https://github.com/dmlc/xgboost/blob/master/doc/compare.md

For more advanced topics on using XGBoost with Scikit-learn, such as handling categorical data or optimizing hyperparameters, explore these resources:

XGBoost documentation (https://xgboost.readthedocs.io/en/latest/tutorials/index.html)

Scikit-learn documentation (https://scikit-learn.org/stable/)

A blog post on using XGBoost for text classification: https://www.analyticsvidhya.com/blog/2020/06/xgboost-for-text-classification/