Python multithreading GIL

Understanding the Global Interpreter Lock (GIL) in Python Multithreading

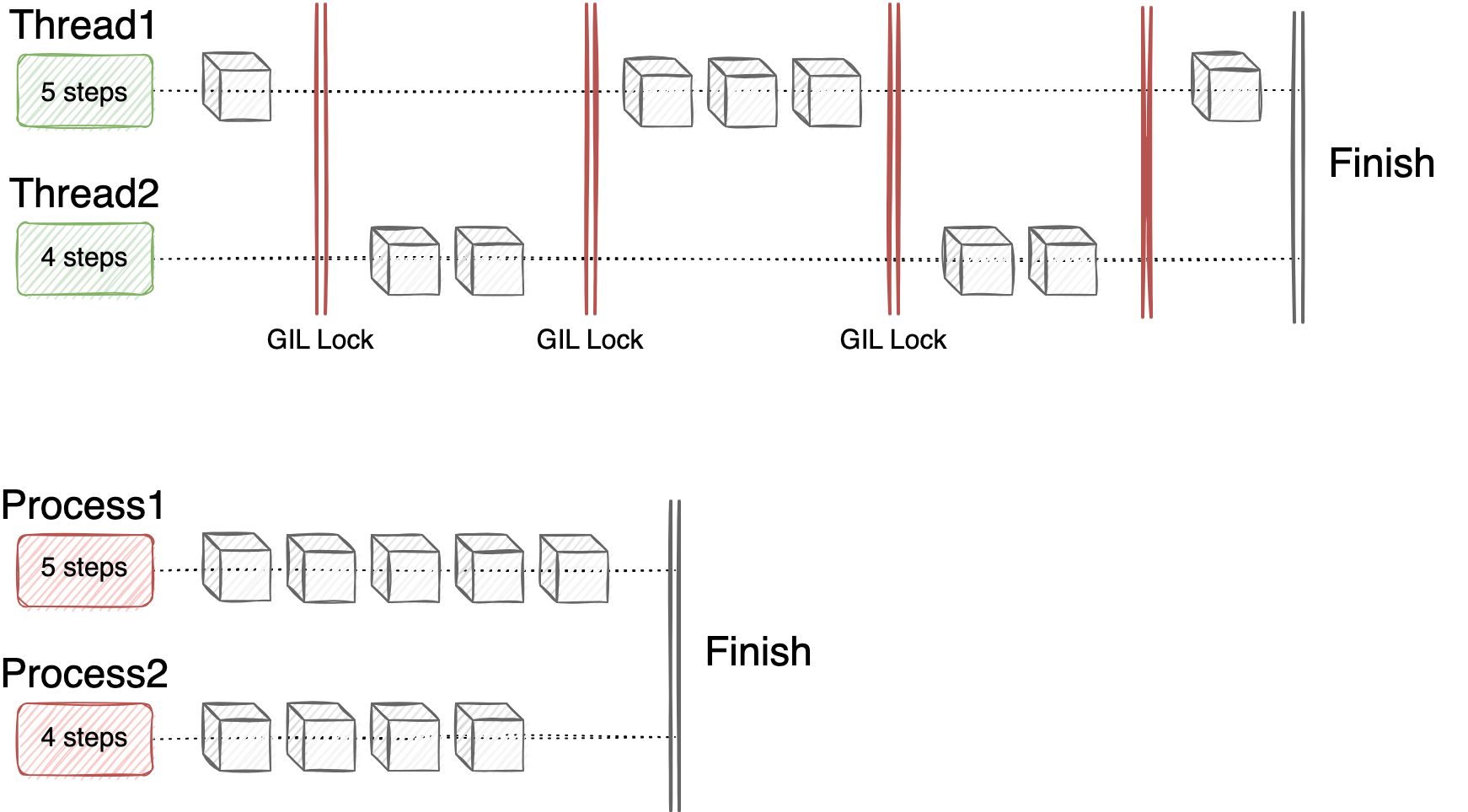

When it comes to multithreading in Python, one crucial concept is the Global Interpreter Lock (GIL). In CPython, the reference implementation of Python, the GIL is a mechanism that prevents multiple threads from executing Python bytecode at the same time. This might seem counterintuitive for a language touted as being capable of concurrent execution.

Let's dive deeper into what this means and why it exists in the first place.

What is the Global Interpreter Lock (GIL)?

The GIL is a lock implemented by the CPython interpreter that prevents multiple threads from executing Python bytecode simultaneously. Its job is to protect the interpreter's own internal state, most notably object reference counts, from concurrent modification. It does not make your own code thread-safe: a thread can still be switched out between bytecode instructions, so data that several threads modify still needs explicit locking.

In simpler terms, think of the GIL like a virtual "thread execution token." Only one thread can hold this token at any given moment, allowing only one thread to execute Python code at a time.
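A point worth making concrete: holding the "token" only guarantees that one thread runs bytecode at a time, not that a multi-step update finishes before another thread gets its turn. The sketch below is a minimal illustration with hypothetical names (`counter`, `counter_lock`, `add_many`, and `run` are not from any library); it guards a shared counter with `threading.Lock` so the total is correct regardless of how threads are scheduled.

```python
import threading

counter = 0
counter_lock = threading.Lock()


def add_many(n):
    """Increment the shared counter n times, guarding each update with a lock."""
    global counter
    for _ in range(n):
        # `counter += 1` is a read-modify-write spanning several bytecodes;
        # the GIL alone does not make it atomic, so we take an explicit lock.
        with counter_lock:
            counter += 1


def run(num_threads=4, n=10_000):
    """Reset the counter, run the threads to completion, and return the total."""
    global counter
    counter = 0
    threads = [
        threading.Thread(target=add_many, args=(n,)) for _ in range(num_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


if __name__ == "__main__":
    print(run())  # 4 threads x 10,000 increments each
```

Without the lock, the result may or may not come out right depending on the interpreter version and thread scheduling, which is exactly why relying on the GIL for correctness is fragile.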

Why is the GIL present in Python?

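The GIL dates back to the early days of Python. The language was first designed to provide an efficient way to write single-threaded applications with a high-level syntax, not to be a multi-threaded environment. CPython manages memory with reference counting, and once threads entered the picture, a single global lock was the simplest way to keep those counts consistent. It also kept single-threaded code fast and made C extensions easier to write, since extension authors could assume that only one thread runs Python code at a time.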

However, as Python's popularity grew and developers began using it for more complex tasks, the need for concurrency became increasingly important. By then CPython, the most popular implementation of the language, and a large ecosystem of C extensions already depended on the GIL, which made removing it difficult.

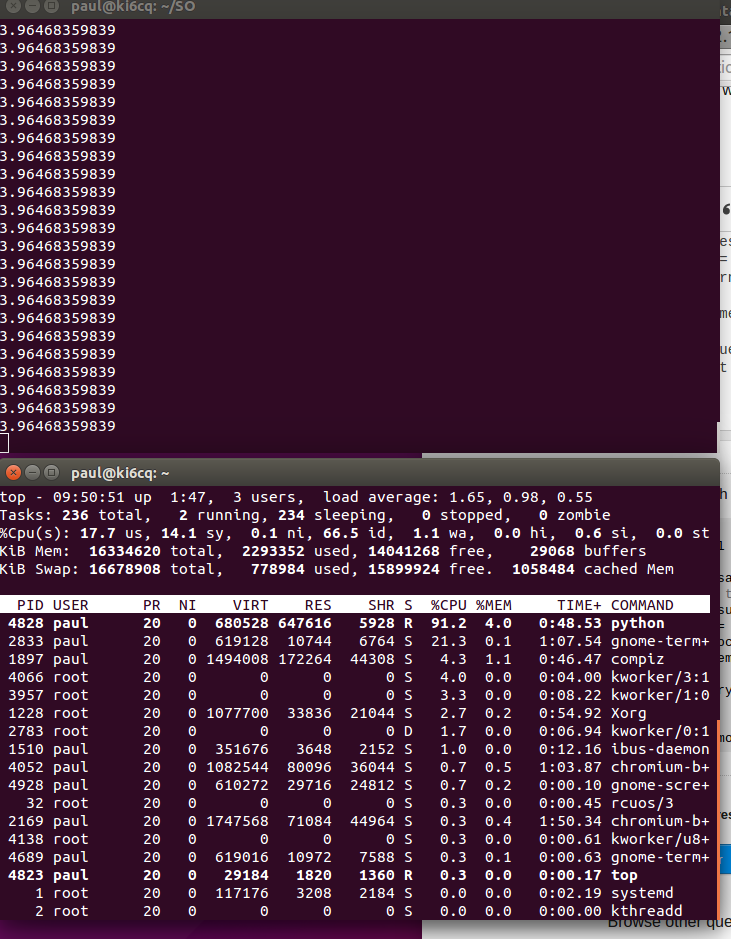

Consequences of the Global Interpreter Lock (GIL)

The presence of the GIL in Python has some significant implications:

Limited parallel execution: With only one thread able to execute Python code at a time, computationally intensive tasks cannot take full advantage of multi-core processors by using threads alone.

Performance bottlenecks: Because the GIL limits how much Python code can run simultaneously, adding threads to CPU-bound work rarely speeds it up, and contention for the lock can even slow it down.

Smaller impact on I/O operations: CPython releases the GIL while a thread waits on blocking I/O (e.g., disk or network access), so I/O-bound threads can still overlap their waiting time. The GIL mainly hurts CPU-bound code.

Alternatives and Workarounds

If you need to take full advantage of multi-core processors or overcome the limitations imposed by the GIL, consider using:

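Jython: a Java-based implementation of Python that doesn't have a GIL.

PyPy: another implementation, often faster than CPython thanks to its JIT compiler. Note that standard PyPy still has a GIL, so it does not by itself give you multi-core threading.

The multiprocessing module: Python's built-in multiprocessing module uses processes instead of threads. Each process has its own interpreter and its own GIL, which effectively bypasses the limitation.

Conclusion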

The Global Interpreter Lock (GIL) keeps the CPython interpreter's internals thread-safe, but it limits the parallel execution capabilities of your code. If you need to overcome these limitations or take full advantage of multi-core processors, consider using alternative implementations, modules, or libraries designed specifically for concurrency.

Remember that even with the GIL, Python's ease of use and rapid development cycle make it an excellent choice for many projects. Just be aware of its limitations when working on computationally intensive tasks!
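The caveat about computationally intensive tasks has a flip side: threads remain useful when the work is mostly waiting. The following is a minimal sketch, assuming `time.sleep` as a stand-in for a blocking I/O call (the names `fake_io` and `timed_threads` are hypothetical). Because a sleeping thread releases the GIL, the four waits overlap instead of running back to back.

```python
import threading
import time


def fake_io(delay):
    """Stand-in for a blocking I/O call; time.sleep releases the GIL."""
    time.sleep(delay)


def timed_threads(num_threads=4, delay=0.2):
    """Run num_threads blocking calls in threads and return elapsed seconds."""
    start = time.perf_counter()
    threads = [
        threading.Thread(target=fake_io, args=(delay,)) for _ in range(num_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    elapsed = timed_threads()
    print(f"4 threads, 0.2 s each: {elapsed:.2f} s total")
```

Run serially, the same four calls would take roughly 0.8 seconds; with threads the total should be close to a single 0.2-second wait. Swapping `fake_io` for a CPU-heavy loop would not show the same benefit under the GIL.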

Is multiprocessing possible in Python?

Yes, multiprocessing is indeed possible in Python! In fact, Python has a built-in module called multiprocessing that allows you to create multiple processes and execute tasks concurrently.

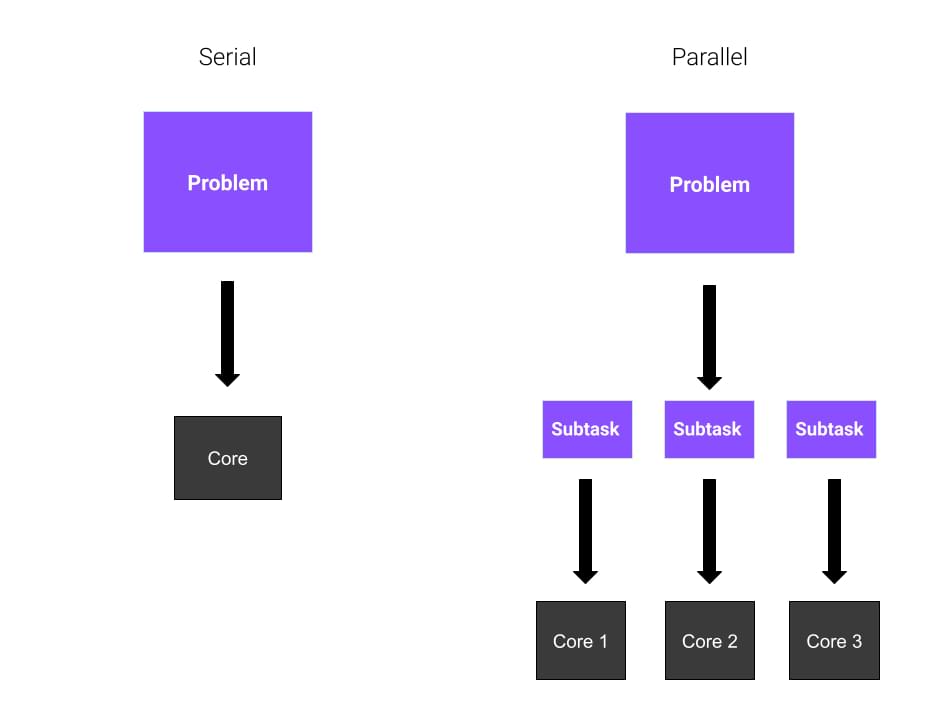

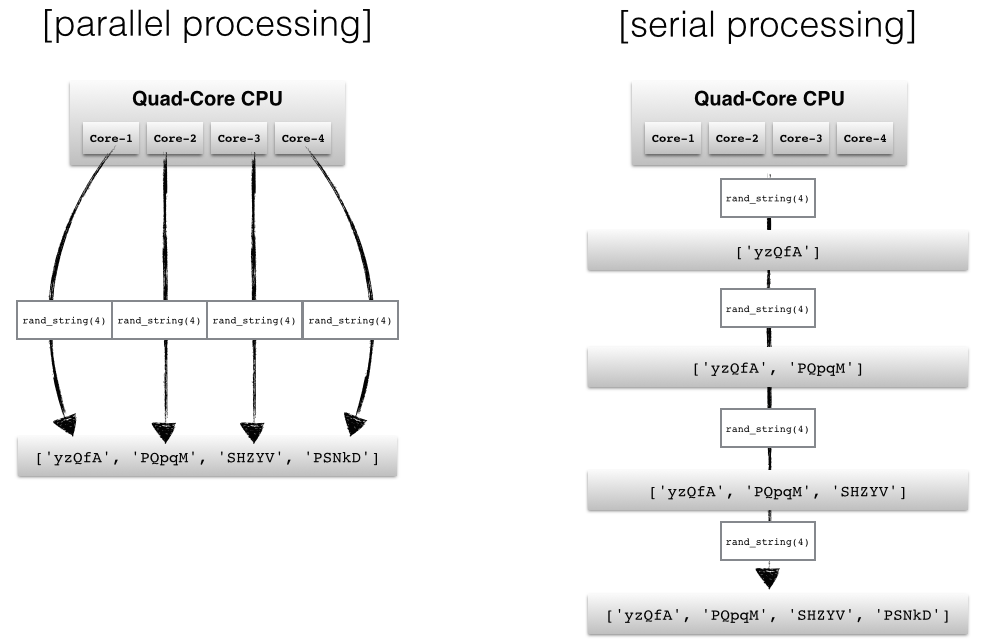

To understand the basics of multiprocessing in Python, let's first explore what parallel processing is all about. Parallel processing refers to the execution of multiple tasks simultaneously using multiple CPU cores or processors. This approach can significantly improve the performance and efficiency of your program, especially for computationally intensive tasks that are well-suited for parallelization.

Now, back to Python! The multiprocessing module provides a way to create processes, which are essentially independent programs that run concurrently with each other. Each process has its own Python interpreter and memory space, and therefore its own GIL. You can use these processes to execute tasks in parallel, thus taking advantage of the available CPU cores.

Here's a simple example of how you might use the multiprocessing module:

    import multiprocessing


    def worker(num):
        """Worker function"""
        print('Worker:', num)
        return num * 2


    if __name__ == '__main__':
        jobs = []
        for i in range(5):
            p = multiprocessing.Process(target=worker, args=(i,))
            jobs.append(p)
            p.start()

        # wait for every process to finish
        for job in jobs:
            job.join()

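In this example, we define a worker function that takes an integer as input, prints it, and returns its double. We then create five processes, each running the same worker function with a different input (0 through 4). Note that Process discards the target's return value, so the doubled number is computed in the child but never reaches the parent.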

When you run this code, you'll see that the output is not necessarily in order, because the processes are executing concurrently. This demonstrates how multiprocessing can be used to perform tasks in parallel.
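If you actually want the results back in the parent process, one common option is `multiprocessing.Pool`. The sketch below is a small variation on the example above, not a replacement for it; the function name `double` and the pool size of 4 are arbitrary choices for illustration.

```python
import multiprocessing


def double(num):
    """Return twice the input; runs in a worker process when mapped by a Pool."""
    return num * 2


if __name__ == "__main__":
    # Pool.map sends each input to a worker process and gathers the
    # return values in the same order as the inputs.
    with multiprocessing.Pool(processes=4) as pool:
        results = pool.map(double, range(5))
    print(results)
```

Unlike the printed lines from separate processes, the list returned by `pool.map` preserves input order, so this prints `[0, 2, 4, 6, 8]`.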

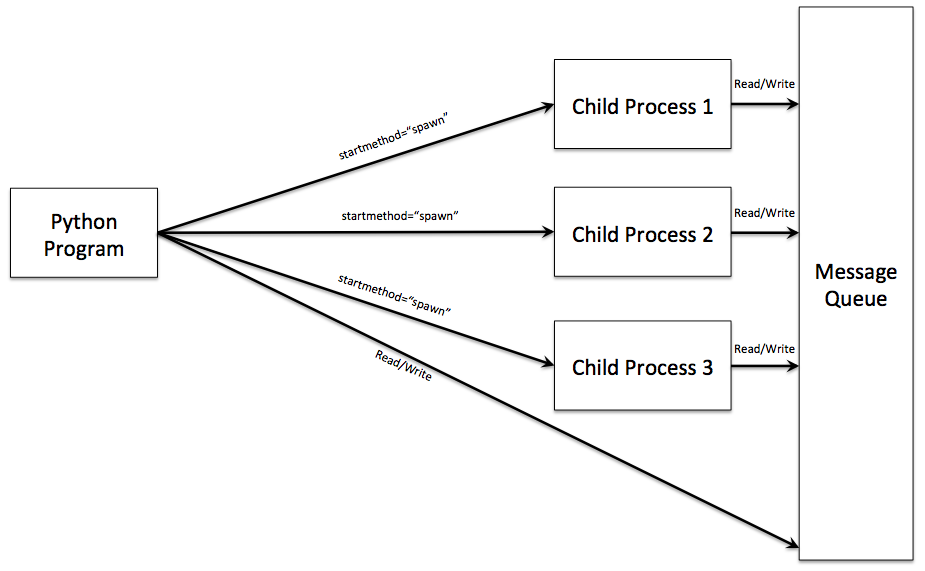

But wait! There's more! The multiprocessing module also provides a way to share data between processes using pipes, queues, and other mechanisms. For instance, you can use a queue to send and receive data between processes:

    import multiprocessing


    def worker(num, job_queue):
        """Worker function: consume items until the sentinel (None) arrives."""
        while True:
            item = job_queue.get()
            try:
                if item is None:  # sentinel value
                    break
                print('Worker:', num, 'processing', item)
                # do some work with the item
            except Exception as e:
                print('Exception in worker:', num, e)
            finally:
                # mark the item as handled so job_queue.join() can return
                job_queue.task_done()


    if __name__ == '__main__':
        job_queue = multiprocessing.JoinableQueue()
        processes = []
        for i in range(5):
            p = multiprocessing.Process(target=worker, args=(i, job_queue))
            processes.append(p)
            p.start()

        # send some work items
        for i in range(10):
            job_queue.put(i)

        # done sending; propagate the sentinel value, one per worker
        for _ in range(5):
            job_queue.put(None)

        # wait until every item is processed, then join all processes
        job_queue.join()
        for p in processes:
            p.join()

In this example, we create a queue and five processes that will consume items from the queue. We then send ten work items to the queue (numbers 0 through 9). Once we've sent all the work items, we propagate a sentinel value (None) to each process, indicating that there's no more work.

The multiprocessing module also supports inter-process communication (IPC) using shared memory segments, which can be used for sharing large amounts of data between processes.
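As a small illustration of shared state between processes, here is a minimal sketch using `multiprocessing.Value`, which places a single C-typed value in shared memory. The function name `add_many` and the counts are hypothetical. For large buffers, `multiprocessing.Array` or the `multiprocessing.shared_memory` module may be a better fit.

```python
import multiprocessing


def add_many(shared_counter, n):
    """Add 1 to the shared integer n times, holding its lock for each update."""
    for _ in range(n):
        # Value objects carry their own lock; += on .value is not atomic
        # across processes without it.
        with shared_counter.get_lock():
            shared_counter.value += 1


if __name__ == "__main__":
    # "i" is the typecode for a signed C int stored in shared memory.
    shared_counter = multiprocessing.Value("i", 0)
    workers = [
        multiprocessing.Process(target=add_many, args=(shared_counter, 1000))
        for _ in range(4)
    ]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    print(shared_counter.value)
```

All four processes update the same integer, so locking matters here for the same reason it does with threads: the increment is a read followed by a write.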

In conclusion, Python's multiprocessing module provides a powerful toolset for creating and managing multiple processes in parallel. By leveraging the available CPU resources, you can significantly improve the performance of your program and tackle complex computational tasks that require concurrency. So go ahead and give multiprocessing a try!