Python recurrent neural networks geeksforgeeks

Python Recurrent Neural Networks (RNNs) GeeksforGeeks: A Comprehensive Guide

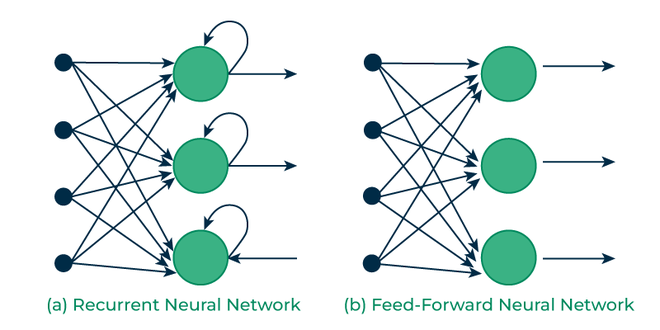

Recurrent Neural Networks (RNNs) are a type of deep learning model that is particularly well-suited to handling sequential data, such as speech, text, or time series data. In this article, we will explore the basics of RNNs and how they can be implemented using Python.

What are Recurrent Neural Networks?

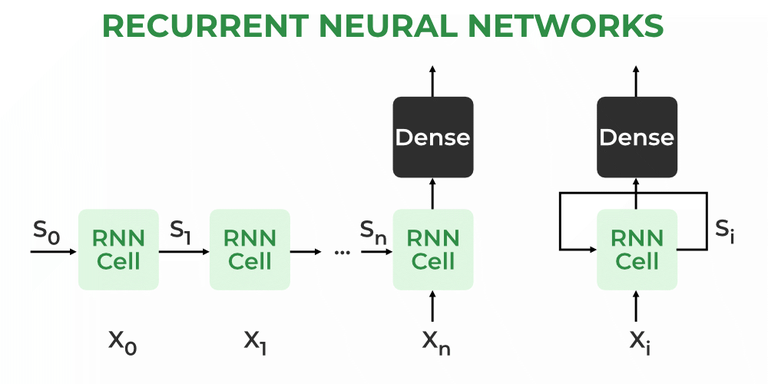

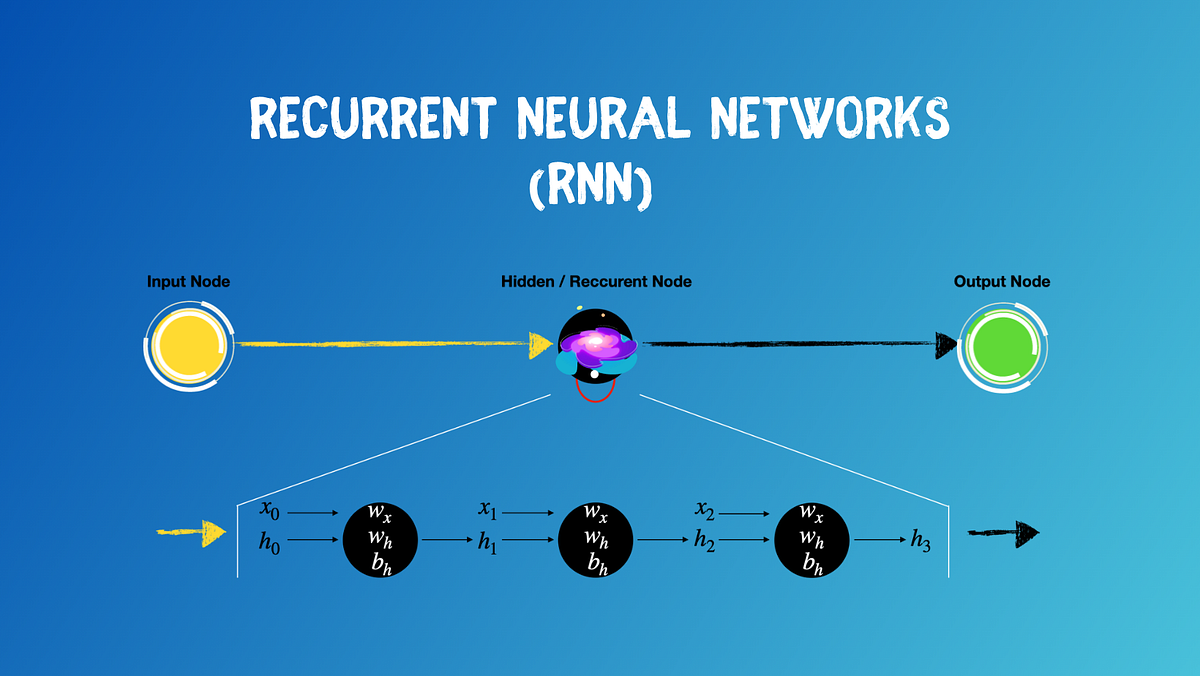

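RNNs are artificial neural networks with recurrent connections. These connections feed the hidden state from one time step into the next, so information from earlier elements of a sequence can influence later outputs. In principle this lets the model learn long-term dependencies in sequential data, although simple RNNs struggle to do so in practice because of the vanishing gradient problem.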
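The recurrence can be sketched in a few lines of NumPy. This is a minimal, untrained forward pass that assumes the common simple-RNN update h_t = tanh(x_t W_xh + h_{t-1} W_hh + b); the function name `rnn_forward` and the random weights are illustrative, not taken from any library.

```python
import numpy as np


def rnn_forward(inputs, W_xh, W_hh, b):
    """Run a simple RNN over a sequence and return every hidden state.

    inputs: array of shape (timesteps, input_dim)
    W_xh:   input-to-hidden weights, shape (input_dim, hidden_dim)
    W_hh:   hidden-to-hidden (recurrent) weights, shape (hidden_dim, hidden_dim)
    b:      bias, shape (hidden_dim,)
    """
    hidden_dim = W_hh.shape[0]
    h = np.zeros(hidden_dim)  # initial hidden state h_0
    states = []
    for x_t in inputs:
        # The recurrent term h @ W_hh carries information from earlier steps
        h = np.tanh(x_t @ W_xh + h @ W_hh + b)
        states.append(h)
    return np.stack(states)


# Illustrative sizes and random (untrained) weights
rng = np.random.default_rng(0)
timesteps, input_dim, hidden_dim = 5, 3, 4
W_xh = rng.normal(scale=0.5, size=(input_dim, hidden_dim))
W_hh = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b = np.zeros(hidden_dim)

x = rng.normal(size=(timesteps, input_dim))
states = rnn_forward(x, W_xh, W_hh, b)
print(states.shape)  # (5, 4)

# Changing only the first input also changes the final hidden state,
# because its effect is passed forward through the recurrent connection
x_changed = x.copy()
x_changed[0] += 1.0
print(np.allclose(states[-1], rnn_forward(x_changed, W_xh, W_hh, b)[-1]))
```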

The Basics of RNNs

The key components of an RNN are listed below. Only the hidden state is common to all RNNs; the three gates belong to gated variants such as the LSTM.

Hidden State: A vector that captures the state, or memory, of the network at each time step.

Input Gate: Controls how much information from the current input is written into the network's memory (the cell state in an LSTM).

Output Gate: Determines how much of that memory is exposed as the hidden state, which is used to generate the output for the current time step.

Forget Gate: Allows the network to discard some of the information stored in its memory.

RNN Types

There are several types of RNNs, including:

Simple RNNs: Basic RNNs with a single hidden state and no gating mechanism.

LSTM (Long Short-Term Memory) networks: LSTMs were designed to mitigate the vanishing gradient problem of simple RNNs by adding a cell state controlled by input, forget, and output gates.

GRU (Gated Recurrent Unit): GRUs are similar to LSTMs, but use a simpler architecture with two gates (update and reset) and fewer parameters.

Python Implementation of RNNs
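Before turning to libraries, the gates described earlier can be made concrete with a from-scratch sketch of a single LSTM time step in NumPy. It assumes the standard LSTM equations with the four gate blocks stacked in one weight matrix; the function `lstm_step` and the stacking order (input, forget, candidate, output) are illustrative choices, not any particular library's internal layout.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step (illustrative layout).

    W: input weights, shape (input_dim, 4 * hidden_dim)
    U: recurrent weights, shape (hidden_dim, 4 * hidden_dim)
    b: bias, shape (4 * hidden_dim,)
    Gate blocks are stacked in the order: input, forget, candidate, output.
    """
    z = x_t @ W + h_prev @ U + b
    i, f, g, o = np.split(z, 4)
    i = sigmoid(i)  # input gate: how much new information to write
    f = sigmoid(f)  # forget gate: how much of the old cell state to keep
    g = np.tanh(g)  # candidate values to write into the cell state
    o = sigmoid(o)  # output gate: how much of the cell state to expose
    c_t = f * c_prev + i * g  # updated cell state (the memory)
    h_t = o * np.tanh(c_t)    # new hidden state
    return h_t, c_t


# Illustrative sizes and random (untrained) weights
rng = np.random.default_rng(0)
input_dim, hidden_dim = 3, 4
W = rng.normal(scale=0.5, size=(input_dim, 4 * hidden_dim))
U = rng.normal(scale=0.5, size=(hidden_dim, 4 * hidden_dim))
b = np.zeros(4 * hidden_dim)

# Run the cell over a short random sequence, carrying h and c forward
h, c = np.zeros(hidden_dim), np.zeros(hidden_dim)
for x_t in rng.normal(size=(6, input_dim)):
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h.shape, c.shape)  # (4,) (4,)
```

A GRU follows the same pattern with two gates and no separate cell state, which is where its parameter savings come from.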

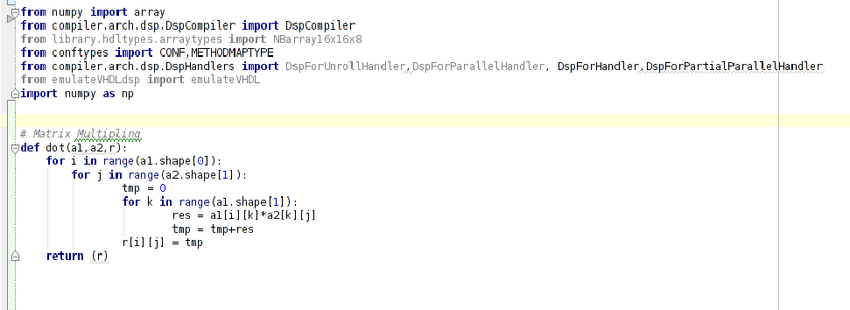

There are several Python libraries that provide implementations of RNNs, including:

TensorFlow: TensorFlow provides an easy-to-use interface for building and training RNNs through its Keras API.

Keras: Keras is a high-level deep learning API, bundled with TensorFlow as tf.keras, that includes pre-built recurrent layers such as SimpleRNN, LSTM, and GRU.

PyTorch: PyTorch also has built-in recurrent modules, such as torch.nn.RNN, torch.nn.LSTM, and torch.nn.GRU.

Let's look at a simple example using TensorFlow:

```python
import tensorflow as tf

# Define the number of time steps
timesteps = 28

# Define the size of the input vector
input_dim = 1

# Define the number of hidden units
hidden_units = 128

# Create an RNN model with one LSTM layer
model = tf.keras.models.Sequential([
    # Each sample is a sequence of `timesteps` vectors of size `input_dim`
    tf.keras.Input(shape=(timesteps, input_dim)),
    # return_sequences=True outputs the hidden state at every time step
    tf.keras.layers.LSTM(units=hidden_units, return_sequences=True),
])

# Compile the model
model.compile(optimizer='adam', loss='mean_squared_error')
```

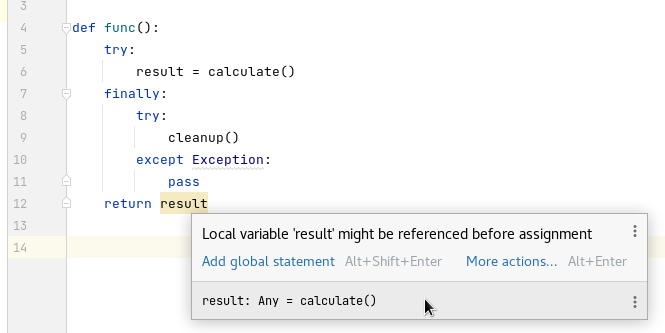

In this example, we define an RNN model using the Sequential API from Keras (tf.keras). We specify the number of time steps (timesteps), the input vector size (input_dim), and the number of hidden units (hidden_units). The LSTM layer creates a single-layer RNN; because return_sequences=True, it outputs a hidden state at every time step rather than only at the last one.
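Keras recurrent layers expect input arrays of shape (batch_size, timesteps, input_dim). One common way to get there from a single time series is a sliding window. The helper `make_windows` below is a hypothetical NumPy sketch, assuming a univariate series (so input_dim = 1) and the 28-step window length used above.

```python
import numpy as np


def make_windows(series, timesteps):
    """Split a 1-D series into overlapping windows for an RNN.

    Returns an array of shape (num_windows, timesteps, 1): one window per
    starting position, plus a trailing feature axis of size input_dim = 1.
    """
    series = np.asarray(series, dtype=np.float32)
    if len(series) < timesteps:
        raise ValueError("series is shorter than one window")
    num_windows = len(series) - timesteps + 1
    windows = np.stack([series[i:i + timesteps] for i in range(num_windows)])
    return windows[..., np.newaxis]  # add the input_dim axis


# Illustrative data: 100 samples of a sine wave
series = np.sin(np.linspace(0, 10, 100))
X = make_windows(series, timesteps=28)
print(X.shape)  # (73, 28, 1)
```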

Conclusion

In this article, we have explored the basics of Recurrent Neural Networks (RNNs) and how they can be implemented using Python. We looked at the different types of RNNs and how they can be used to handle sequential data. With Python libraries like TensorFlow, Keras, and PyTorch providing built-in support for RNNs, we can easily integrate them into our machine learning workflows.

What are Recurrent Neural Networks (RNNs) for Language Modeling in Python?

Recurrent Neural Networks (RNNs) are a type of deep learning model that excels at processing sequential data with time dependencies. In the context of natural language processing (NLP), RNNs are particularly useful for tasks such as language modeling, machine translation, and text summarization.

What is Language Modeling?

Language modeling is the task of predicting the next word in a sequence given the context of the previous words. This requires understanding the nuances of human language, including grammar, syntax, and semantics. Language models can be applied to various NLP tasks, such as text generation, language translation, and chatbots.
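Formally, a language model assigns a probability to a whole word sequence by factoring it into next-word predictions with the chain rule of probability:

$$
P(w_1, w_2, \dots, w_T) = \prod_{t=1}^{T} P(w_t \mid w_1, \dots, w_{t-1})
$$

Each factor is a next-word prediction of the kind described above. An RNN approximates it by conditioning on its hidden state $h_{t-1}$, which summarizes $w_1, \dots, w_{t-1}$.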

How do RNNs work for Language Modeling?

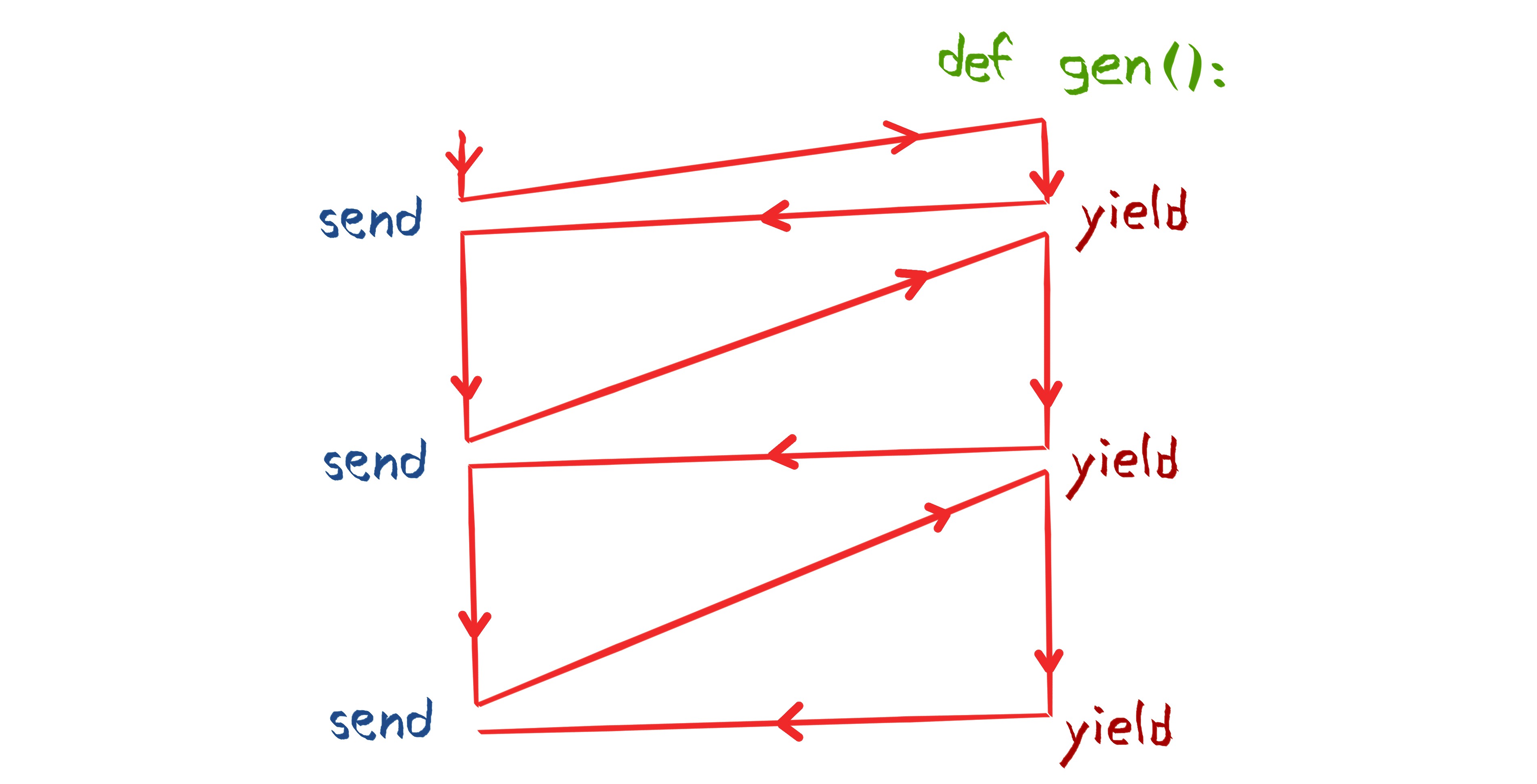

RNNs are well-suited for language modeling due to their ability to capture temporal dependencies in sequential data. The architecture of an RNN consists of recurrent units that maintain a hidden state vector through the sequence. This hidden state captures information from previous inputs and is used to compute the next output.

For language modeling, an RNN takes a sequence of words as input (e.g., "The quick brown fox") and predicts a probability distribution over the vocabulary for the next word (e.g., ideally assigning high probability to "jumps"). The RNN reads one word per time step and updates its hidden state with each new word, so the prediction at every step is conditioned on all the words seen so far. Gated variants such as LSTMs are better than simple RNNs at retaining this context over long spans of text.
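The next-word step can be sketched with a tiny, untrained RNN in NumPy. The five-word vocabulary, the layer sizes, and the random weights are all illustrative assumptions; the point is the data flow: each word updates the hidden state, and the final hidden state is projected to a softmax over the vocabulary.

```python
import numpy as np

vocab = ["the", "quick", "brown", "fox", "jumps"]  # toy vocabulary (illustrative)
word_to_index = {w: i for i, w in enumerate(vocab)}

# Illustrative sizes and random (untrained) weights
rng = np.random.default_rng(42)
embed_dim, hidden_dim, V = 8, 16, len(vocab)
E = rng.normal(scale=0.1, size=(V, embed_dim))              # word embeddings
W_xh = rng.normal(scale=0.1, size=(embed_dim, hidden_dim))  # input -> hidden
W_hh = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim)) # hidden -> hidden
W_hy = rng.normal(scale=0.1, size=(hidden_dim, V))          # hidden -> vocab scores


def softmax(z):
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()


def next_word_distribution(words):
    """Read the words one at a time and return P(next word | words)."""
    h = np.zeros(hidden_dim)
    for w in words:
        x = E[word_to_index[w]]
        h = np.tanh(x @ W_xh + h @ W_hh)  # update the hidden state with each word
    return softmax(h @ W_hy)


probs = next_word_distribution(["the", "quick", "brown", "fox"])
for word, p in zip(vocab, probs):
    print(f"{word}: {p:.3f}")
```

Because the weights are random, the probabilities come out close to uniform; training adjusts them so that a word like "jumps" receives more of the probability mass after this context.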

Types of RNNs

Two commonly used types of RNNs are:

Simple RNN: This type of RNN processes the input sequence one step at a time, using the hidden state from the previous time step.

Long Short-Term Memory (LSTM): LSTMs are a variant of RNNs that use memory cells to selectively forget or remember information from previous time steps.

Python Implementation
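One practical consequence of the LSTM's memory cells is size. The arithmetic below assumes the standard formulation with one bias vector per block (the layout Keras uses for its SimpleRNN and LSTM layers): each of the LSTM's four blocks (input, forget, and output gates plus the candidate cell values) has the same weight shapes as a simple RNN layer, so an LSTM has four times as many parameters for the same input and hidden sizes. The function names are hypothetical.

```python
def simple_rnn_params(input_dim, units):
    """Weights W_xh (input_dim x units), W_hh (units x units), and a bias (units)."""
    return input_dim * units + units * units + units


def lstm_params(input_dim, units):
    """Four blocks (three gates plus the candidate), each sized like a simple RNN."""
    return 4 * simple_rnn_params(input_dim, units)


# Example sizes: 64-dimensional word vectors feeding 128 hidden units
print(simple_rnn_params(64, 128))  # 24704
print(lstm_params(64, 128))        # 98816

# A 128-unit LSTM on 1-dimensional inputs
print(lstm_params(1, 128))         # 66560
```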

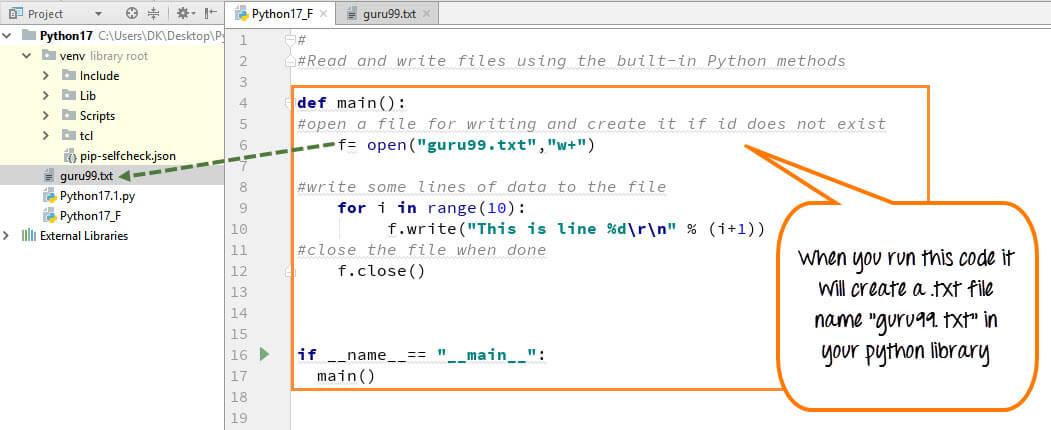

In Python, you can implement an RNN for language modeling using popular libraries such as TensorFlow or PyTorch. Here's a simplified example of an LSTM-based language model using Keras:

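```python
import numpy as np
from keras.layers import Input, Embedding, LSTM, Dense
from keras.models import Sequential

# Define the input sequence length and vocabulary size
input_length = 100
vocabulary_size = 5000

# Create an RNN model with one hidden LSTM layer.
# The Embedding layer maps integer word indices to dense vectors
# (64 dimensions here, an arbitrary choice) that the LSTM can consume.
model = Sequential([
    Input(shape=(input_length,)),
    Embedding(vocabulary_size, 64),
    LSTM(128),
    Dense(vocabulary_size, activation='softmax'),
])

# Compile the model (categorical_crossentropy expects one-hot targets)
model.compile(loss='categorical_crossentropy', optimizer='adam')

# Train the model on your dataset. X_train and y_train are placeholders:
# X_train holds word indices, shape (num_samples, input_length);
# y_train holds the one-hot next word, shape (num_samples, vocabulary_size).
model.fit(X_train, y_train, epochs=10)

# Use the trained model to generate text with greedy decoding.
# word_to_index and index_to_word are placeholder vocabulary mappings from
# your own tokenizer; index 0 is assumed to be reserved for padding.
generated = [word_to_index['The']]
for _ in range(100):
    # Use the most recent input_length words, left-padded with index 0
    context = generated[-input_length:]
    context = [0] * (input_length - len(context)) + context
    output_probs = model.predict(np.array([context]), verbose=0)[0]
    next_word_idx = int(np.argmax(output_probs))
    generated.append(next_word_idx)

print(' '.join(index_to_word[i] for i in generated))
```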

This example demonstrates the basic concept of using RNNs for language modeling. You can modify this code to suit your specific requirements and dataset.
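With categorical_crossentropy, the training targets are one-hot vectors over the vocabulary. The sketch below shows one way to build fixed-length inputs and one-hot next-word targets from a list of token indices; the function `build_training_data` is hypothetical, and it assumes index 0 is reserved for padding so that short contexts can be left-padded.

```python
import numpy as np


def build_training_data(token_ids, input_length, vocabulary_size):
    """Turn a token-index sequence into (context, next word) training pairs.

    X[k] holds up to input_length preceding indices, left-padded with 0.
    y[k] is the one-hot encoding of the word that follows that context.
    Assumes index 0 is reserved for padding.
    """
    X, y = [], []
    for t in range(1, len(token_ids)):
        context = token_ids[max(0, t - input_length):t]
        X.append([0] * (input_length - len(context)) + list(context))
        target = np.zeros(vocabulary_size, dtype=np.float32)
        target[token_ids[t]] = 1.0
        y.append(target)
    return np.array(X, dtype=np.int64), np.stack(y)


# Toy example: token indices for a short sentence (illustrative values)
tokens = [5, 12, 7, 3, 9]
X_train, y_train = build_training_data(tokens, input_length=3, vocabulary_size=20)
print(X_train)
# [[ 0  0  5]
#  [ 0  5 12]
#  [ 5 12  7]
#  [12  7  3]]
print(y_train.shape)  # (4, 20)
```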

Conclusion

Recurrent Neural Networks are a powerful tool for processing sequential data with time dependencies, making them well-suited for natural language processing tasks such as language modeling. By implementing an RNN in Python, you can create a model that predicts the next word in a sequence given the context of previous words.