How to run an ONNX file in Python?


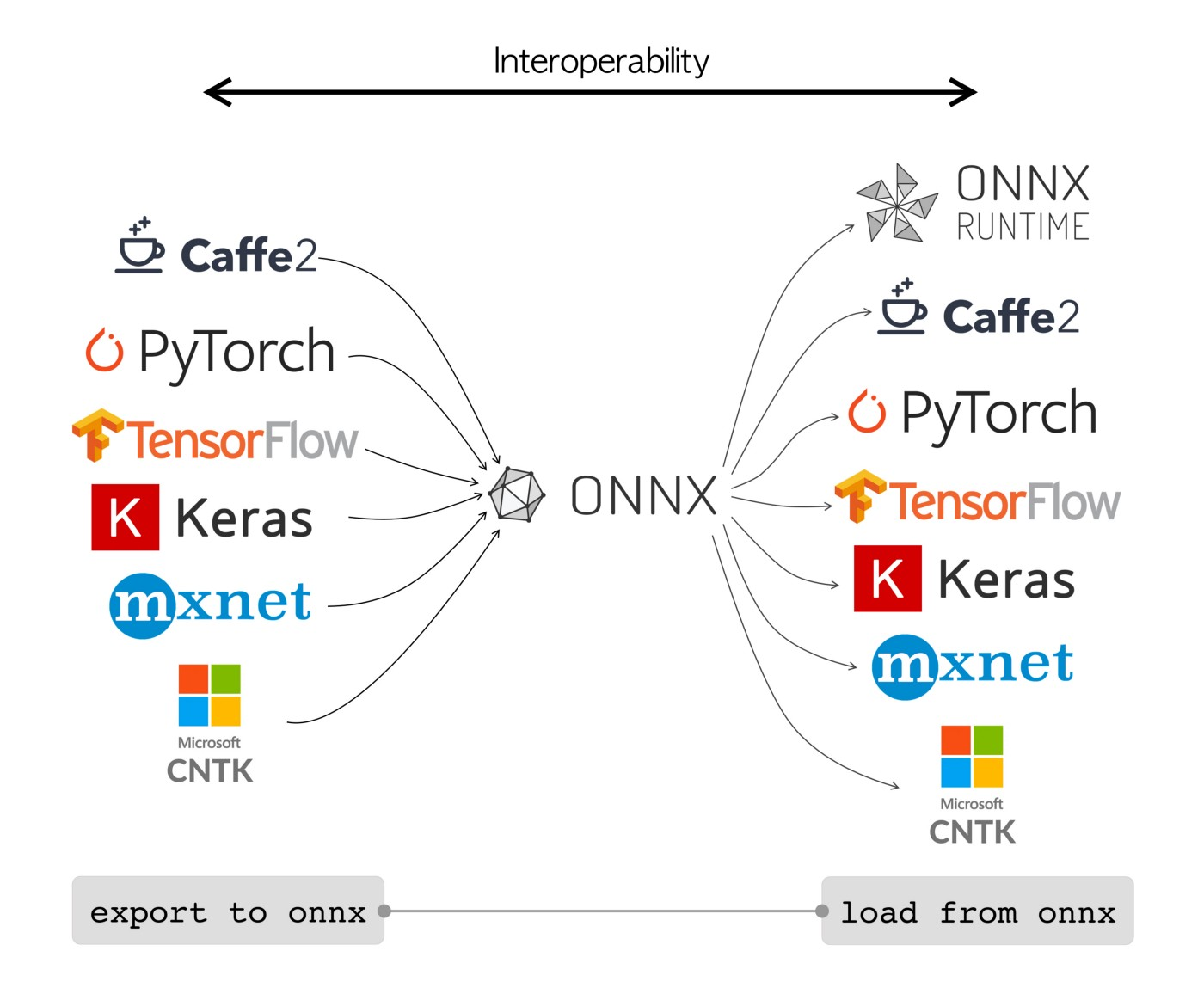

To run an ONNX (Open Neural Network Exchange) file in Python, you can use the onnxruntime library, which executes the model, together with the onnx library, which loads, inspects, and validates model files. Here's a step-by-step guide:

Install Required Libraries

First, make sure you have installed the required libraries. You can install them using pip:

```shell
pip install onnx onnxruntime numpy
```

Load the ONNX Model

Now that you have the libraries installed, let's load the ONNX model:

```python
import onnx

# Load the ONNX file
onnx_model = onnx.load("path/to/your/model.onnx")
```

Replace "path/to/your/model.onnx" with the actual path to your ONNX file.

Create an ONNX Runtime Session

Next, create an ONNX Runtime inference session:

```python
import onnxruntime as ort

# Create a new session from the serialized model
session = ort.InferenceSession(
    onnx_model.SerializeToString(), providers=["CPUExecutionProvider"]
)
```

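The SerializeToString() method serializes the loaded model into a protobuf byte string (bytes). InferenceSession accepts these bytes in place of a file path. If you don't need to inspect the model with onnx first, you can pass the path directly: ort.InferenceSession("path/to/your/model.onnx", providers=["CPUExecutionProvider"]). Since ONNX Runtime 1.9, builds that include more than one execution provider (for example, onnxruntime-gpu) require the providers argument to be set explicitly.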

Run the Model

Now you're ready to run the model:

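```python
import numpy as np

# Get the input name and output names from the session. session.get_inputs()
# is safer than onnx_model.graph.input, which in older models can also list
# initializers (weights) rather than only the real inputs.
input_name = session.get_inputs()[0].name
output_names = [out.name for out in session.get_outputs()]

# Create a random input tensor. (1, 28, 28) is only an example shape:
# check session.get_inputs()[0].shape for the shape your model expects.
input_data = np.random.rand(1, 28, 28).astype(np.float32)

# Run the model
result = session.run(output_names, {input_name: input_data})

# Get the output tensors from the result (a list of NumPy arrays, one per output)
output_tensors = list(result)
```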

In this example, we're assuming that your ONNX model takes a single float32 tensor of shape (1, 28, 28) as input. The code collects every output, so it also works for models with several outputs. You may need to adjust the input shape, dtype, and number of inputs to match your model.

Postprocessing

Finally, you can perform any post-processing tasks you like:

```python
# session.run already returns NumPy arrays, so no conversion is needed
output_arrays = list(output_tensors)

# Do something with the output arrays...
print(output_arrays)
```
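What post-processing looks like depends entirely on the model. Take one common case: assume a classifier whose first output holds raw scores (logits) with shape (batch, num_classes). You could turn those scores into probabilities and predicted class indices with NumPy alone. The logits below are made-up values standing in for `output_arrays[0]`.

```python
import numpy as np


def softmax(logits, axis=-1):
    """Numerically stable softmax: subtract the row max before exponentiating."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


# Made-up stand-in for output_arrays[0]: 2 samples, 3 classes
logits = np.array([[2.0, 1.0, 0.1],
                   [0.5, 3.0, 0.2]], dtype=np.float32)

probs = softmax(logits)
predicted = probs.argmax(axis=1)
print(predicted)  # [0 1]
print(probs.sum(axis=1))  # each row sums to (approximately) 1
```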

And that's it! You've successfully run an ONNX file in Python using the onnx and onnxruntime libraries.

Inference ONNX model Python

I'm happy to respond in English!

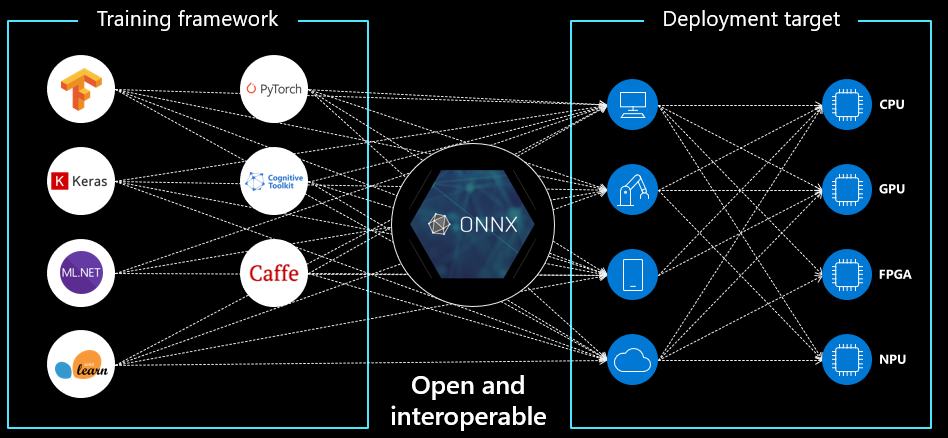

Inference with ONNX models using Python is a popular task, especially for deep learning applications. To get started, you'll need to install the necessary libraries:

- onnx: loads, inspects, validates, and saves ONNX model files. It does not convert TensorFlow or PyTorch models by itself. Install it with pip: pip install onnx
- numpy: provides numerical arrays, which ONNX Runtime uses for model inputs and outputs. Install it with pip: pip install numpy
- onnxruntime: provides the execution engine that runs ONNX models. Install it with pip: pip install onnxruntime

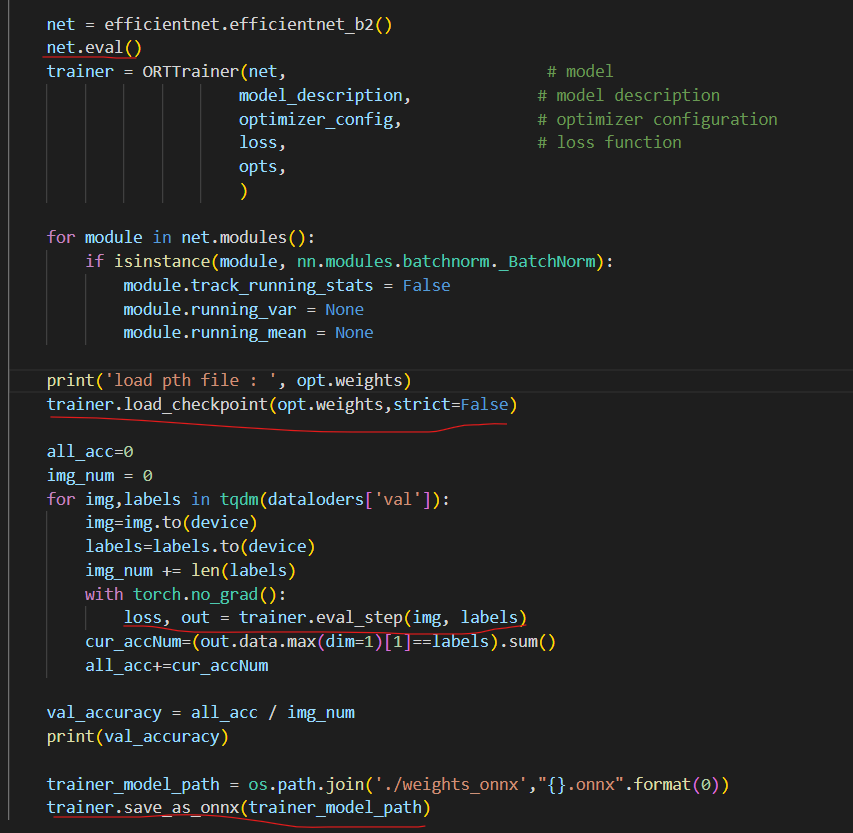

Now, let's walk through running inference with an ONNX model in Python:

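Convert your model to ONNX format: the onnx package has no TensorFlow or PyTorch converter of its own, so use a framework-specific exporter. For a TensorFlow/Keras model, the tf2onnx package (pip install tf2onnx) can do the conversion. For PyTorch, use the built-in torch.onnx.export instead.

```python
import tensorflow as tf
import tf2onnx

# Load your trained model
model_path = 'path/to/model'
model = tf.keras.models.load_model(model_path)

# Convert the model to ONNX format; depending on the model, you may need to
# pass input_signature (a list of tf.TensorSpec) describing its inputs
onnx_model, _ = tf2onnx.convert.from_keras(model)

with open('output.onnx', 'wb') as f:
    f.write(onnx_model.SerializeToString())
```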

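Then load the converted model with ONNX Runtime and run inference:

```python
import numpy as np
import onnxruntime as ort

# Load the ONNX model
ort_session = ort.InferenceSession('output.onnx', providers=['CPUExecutionProvider'])

# Create some sample input data. Its shape must match the model's input, and
# most models expect float32, while NumPy defaults to float64
input_data = np.array([[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]], dtype=np.float32)

# Look up the input name instead of assuming it is 'input'
input_name = ort_session.get_inputs()[0].name

# Perform inference on the input data (None returns every output)
output = ort_session.run(None, {input_name: input_data})

print(output)
```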

This prints a list of NumPy arrays, one per model output, containing the model's predictions for the input data.

Tips and Tricks:

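- Make sure you're using compatible library versions. An onnxruntime release that is older than your onnx package, or than the opset your model was exported with, may refuse to load the model.
- If your model comes from a framework without a built-in or well-maintained ONNX exporter (unlike TensorFlow or PyTorch), you might need additional conversion tools.
- Inference time can often be reduced by choosing a suitable execution provider (for example, CUDA on an NVIDIA GPU), tuning session options such as the graph optimization level and thread count, batching inputs, and adjusting input shapes and hardware configuration.

That's it! With these steps, you should be able to perform inference with an ONNX model using Python. Happy inferencing!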