Python feature engineering examples

Here are some Python feature engineering examples:

Example 1: Handling missing values

Suppose we have a dataset with missing values, and we want to impute them using the mean value of each feature.

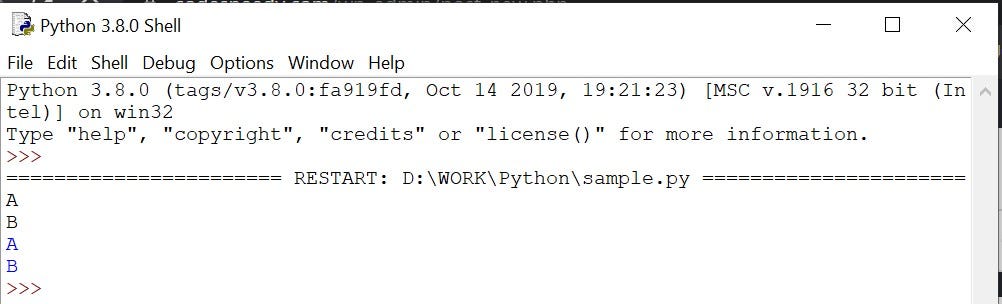

import pandas as pd

from sklearn.impute import SimpleImputer

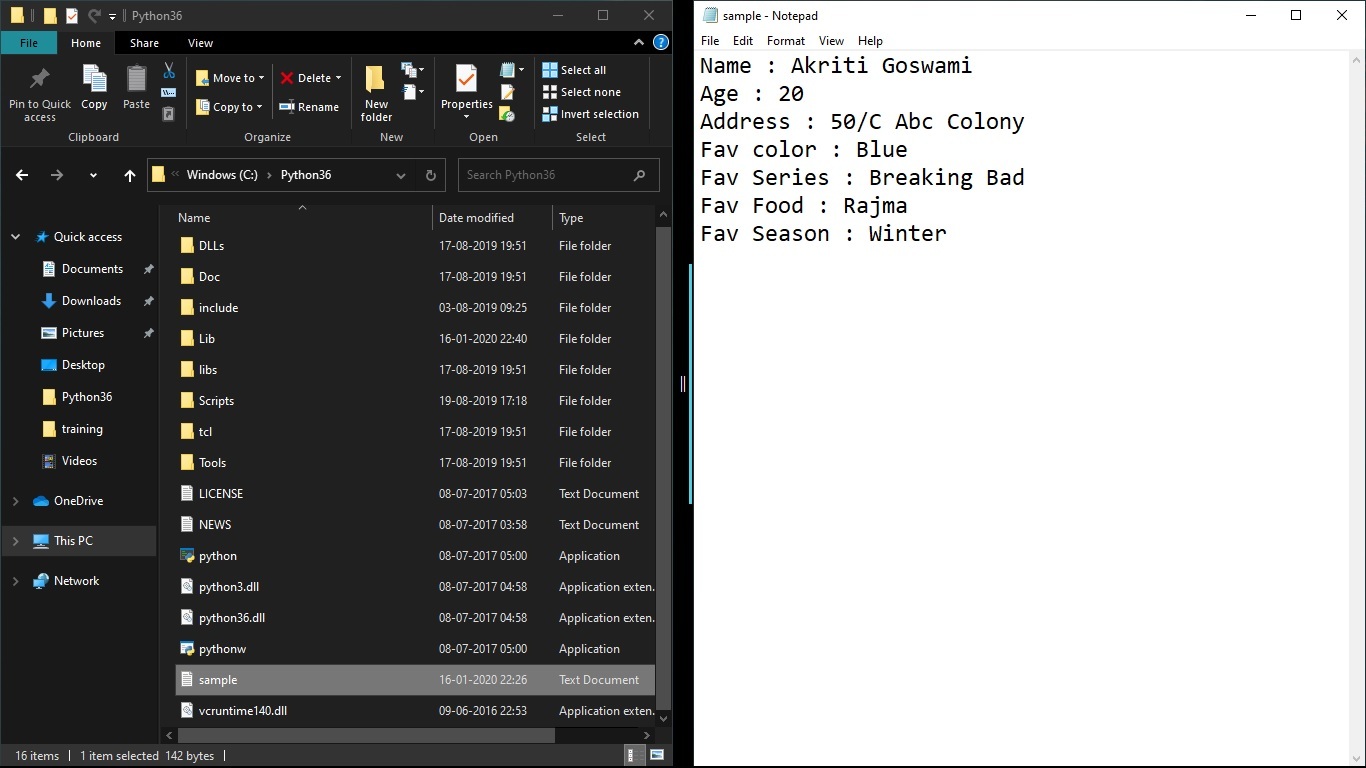

Load the data

data = pd.read_csv('data.csv')

Create an imputer object

imputer = SimpleImputer(strategy='mean')

Fit and transform the data

data_imputed = imputer.fit_transform(data)

Example 2: Handling categorical variables

Suppose we have a dataset with categorical variables, and we want to convert them into numerical features using one-hot encoding.

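```python
import pandas as pd
from sklearn.preprocessing import OneHotEncoder

# Load the data
data = pd.read_csv('data.csv')

# Create a one-hot encoder object
ohe = OneHotEncoder()

# Fit and transform the data; the encoder returns a sparse matrix by default,
# so .toarray() converts it into a dense NumPy array (one column per category)
data_onehot = ohe.fit_transform(data[['categorical_var']]).toarray()
```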

Example 3: Handling date features

Suppose we have a dataset with date features, and we want to convert them into numerical features using datetime functions.

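```python
import pandas as pd

# Load the data
data = pd.read_csv('data.csv')

# Parse the date strings (format YYYY-MM-DD) into pandas datetimes
dates = pd.to_datetime(data['date_feature'], format='%Y-%m-%d')

# Convert each date into a numerical feature: seconds since 1970-01-01 (Unix time).
# Subtracting a fixed epoch avoids datetime.timestamp(), which interprets
# naive dates in the local time zone of whatever machine runs the code.
data['date_feature'] = (dates - pd.Timestamp('1970-01-01')) // pd.Timedelta(seconds=1)
```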
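A single timestamp is rarely the only useful encoding of a date. As a sketch (the dates below are hypothetical), pandas' `.dt` accessor can also extract calendar components such as year, month, and day of week, which let a model pick up seasonal or weekly patterns; the `is_weekend` flag is an illustrative derived feature, not something the example above requires.

```python
import pandas as pd

# Hypothetical date strings in the same YYYY-MM-DD format as above
data = pd.DataFrame({'date_feature': ['2023-01-15', '2023-06-30', '2024-02-29']})
dates = pd.to_datetime(data['date_feature'], format='%Y-%m-%d')

# Calendar components often carry more signal than a raw timestamp
data['year'] = dates.dt.year
data['month'] = dates.dt.month
data['day_of_week'] = dates.dt.dayofweek  # Monday=0, Sunday=6
data['is_weekend'] = (data['day_of_week'] >= 5).astype(int)
print(data)
```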

Example 4: Handling text features

Suppose we have a dataset with text features, and we want to convert them into numerical features using TF-IDF (Term Frequency-Inverse Document Frequency) transformations.

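```python
import pandas as pd
from sklearn.feature_extraction.text import TfidfVectorizer

# Load the data
data = pd.read_csv('data.csv')

# Create a TF-IDF vectorizer object
vectorizer = TfidfVectorizer()

# Fit and transform the text features. Missing text is replaced with an empty
# string because the vectorizer cannot process NaN. The result is a sparse matrix
# with one row per document and one column per vocabulary term.
text_features_tfidf = vectorizer.fit_transform(data['text_feature'].fillna(''))
```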

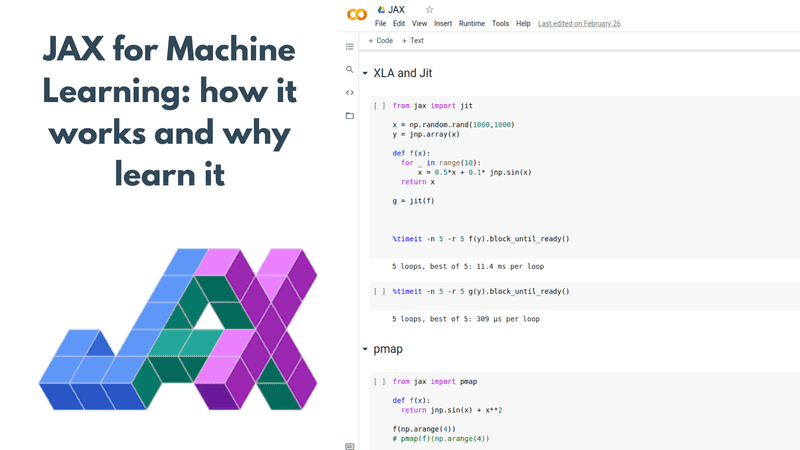

Example 5: Handling image features

Suppose we have a dataset with image features, and we want to convert them into numerical features using convolutional neural networks (CNNs).

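```python
import numpy as np
import pandas as pd
import tensorflow as tf

# Load the data; assumes 'image_feature' holds file paths to image files
data = pd.read_csv('data.csv')

# A pretrained CNN (MobileNetV2 here, one choice among many) without its classification
# head; global average pooling turns each image into a single feature vector.
# The ImageNet weights are downloaded on first use.
cnn = tf.keras.applications.MobileNetV2(include_top=False, pooling='avg', input_shape=(224, 224, 3))

image_features_cnn = []
for path in data['image_feature']:
    # Load and resize the image, then convert it to a float array of shape (224, 224, 3)
    img = tf.keras.utils.load_img(path, target_size=(224, 224))
    img_array = tf.keras.utils.img_to_array(img)
    # Scale pixels the way MobileNetV2 expects and add a batch dimension
    batch = tf.keras.applications.mobilenet_v2.preprocess_input(img_array[np.newaxis, ...])
    # Extract the feature vector for this image
    image_features_cnn.append(cnn(batch, training=False).numpy()[0])

# Stack into an array of shape (n_images, n_features)
image_features_cnn = np.stack(image_features_cnn)
```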

Example 6: Handling audio features

Suppose we have a dataset with audio features, and we want to convert them into numerical features using mel-frequency cepstral coefficients (MFCCs).

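```python
import numpy as np
import pandas as pd
import librosa
from librosa.feature import mfcc

# Load the data; assumes 'audio_feature' holds file paths to audio files
data = pd.read_csv('data.csv')

# Extract MFCC features
mfcc_features = []
for path in data['audio_feature']:
    # Load the waveform, resampled to 22,050 Hz
    y, sr = librosa.load(path, sr=22050)
    # Compute 13 MFCCs per frame; the result has shape (13, n_frames)
    mfccs = mfcc(y=y, sr=sr, n_mfcc=13)
    # Average over frames (one simple summary) so every clip yields 13 values
    mfcc_features.append(mfccs.mean(axis=1))

mfcc_features = np.array(mfcc_features)
```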

These are just a few examples of how to perform feature engineering using Python. Depending on the nature of your dataset and the type of problem you're trying to solve, there are many other techniques and libraries available for handling missing values, categorical variables, date features, text features, image features, audio features, and more!

Feature engineering for machine learning in Python

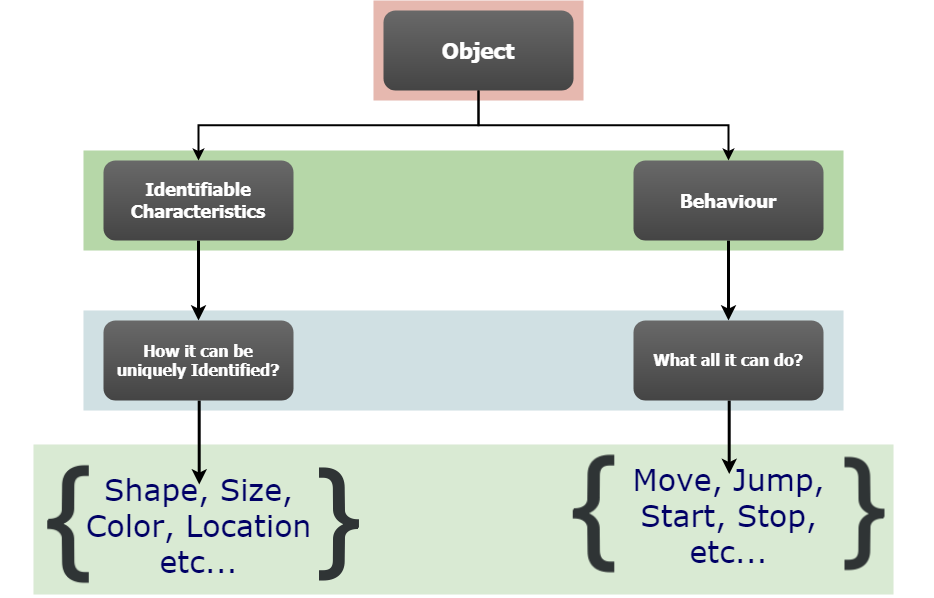

Feature Engineering is a crucial step in the Machine Learning pipeline that can greatly impact model performance. In this response, I'll cover the importance of feature engineering, common techniques used in Python, and some best practices to keep in mind.

Why Feature Engineering Matters

Feature engineering is the process of selecting and transforming raw data into features that are more suitable for machine learning algorithms. Well-engineered features can:

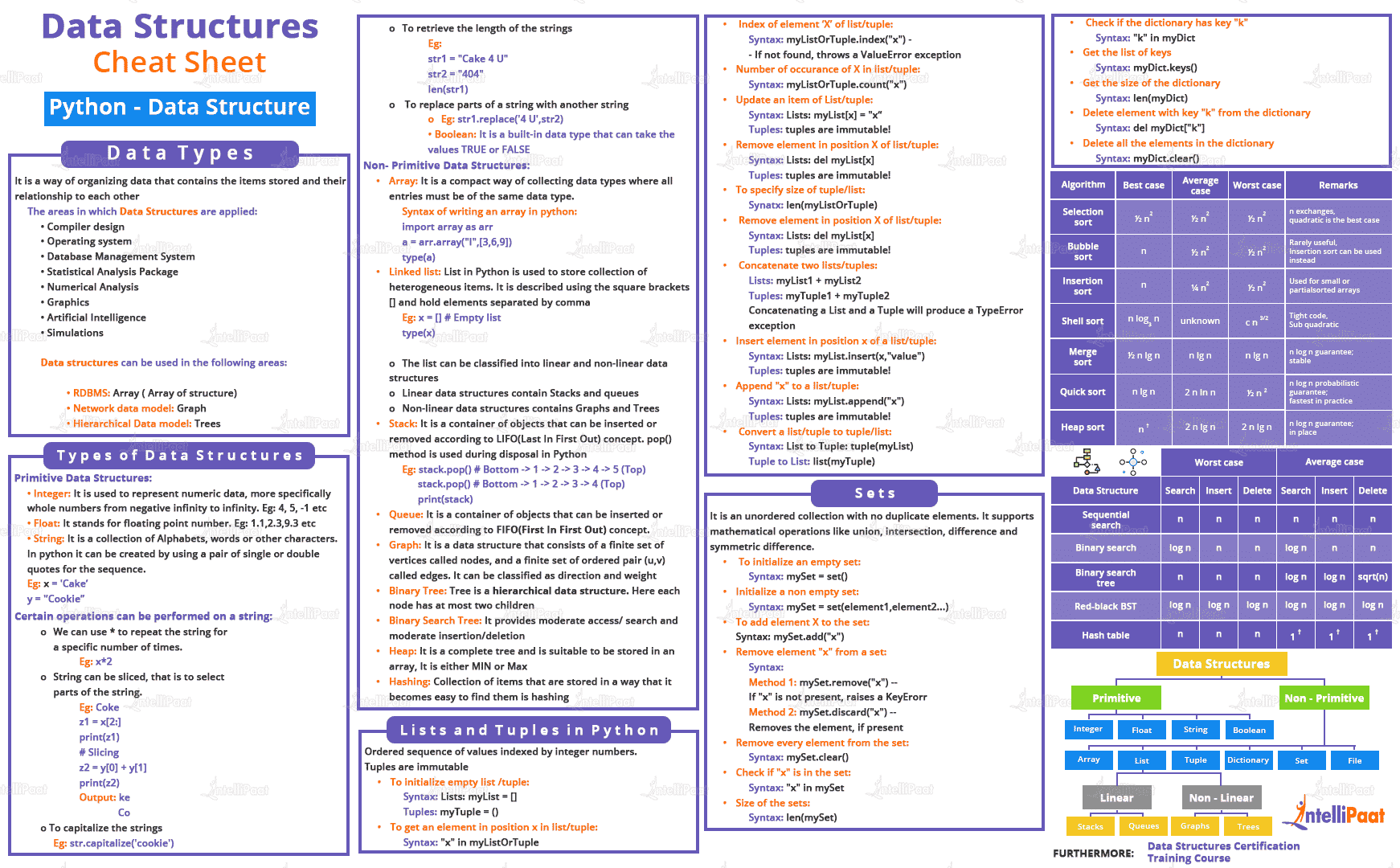

- Improve model performance: By capturing relevant information, you can create better predictors, leading to more accurate models.
- Reduce dimensionality: Removing irrelevant or redundant features reduces noise and can improve model robustness.
- Enhance interpretability: Well-designed features make it easier to understand how the model is making predictions.

Common Feature Engineering Techniques in Python

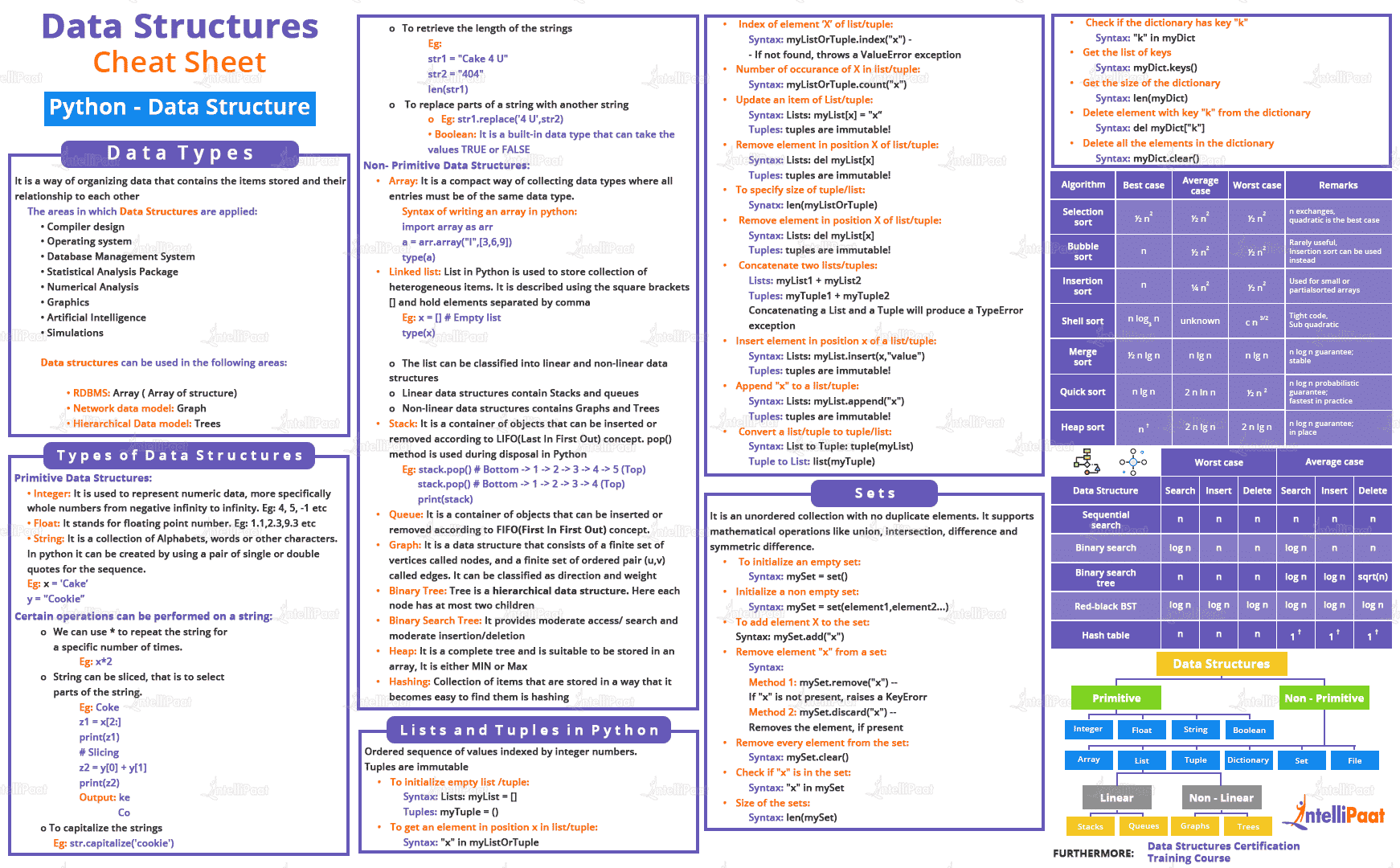

Here are some popular techniques for feature engineering in Python:

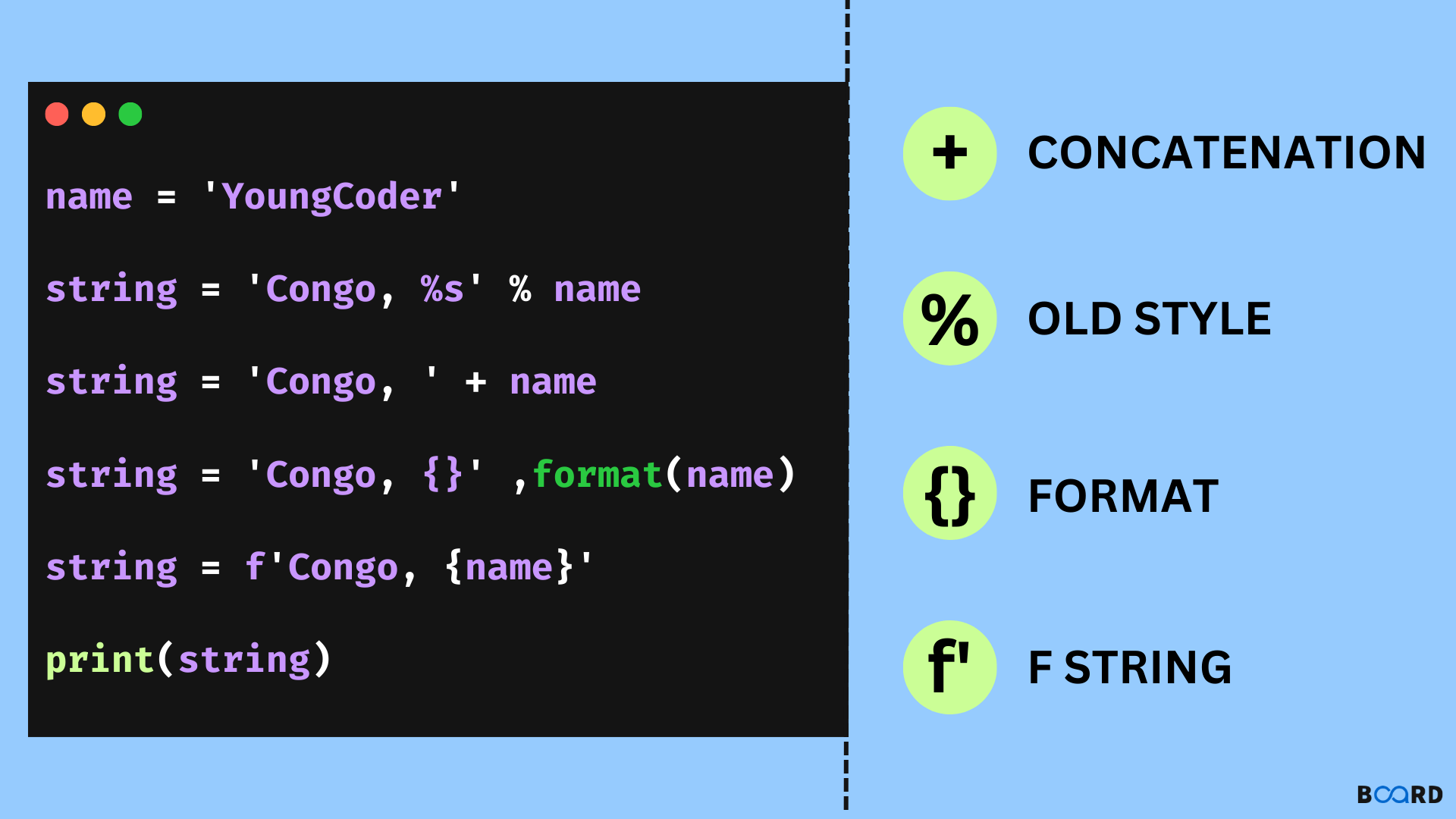

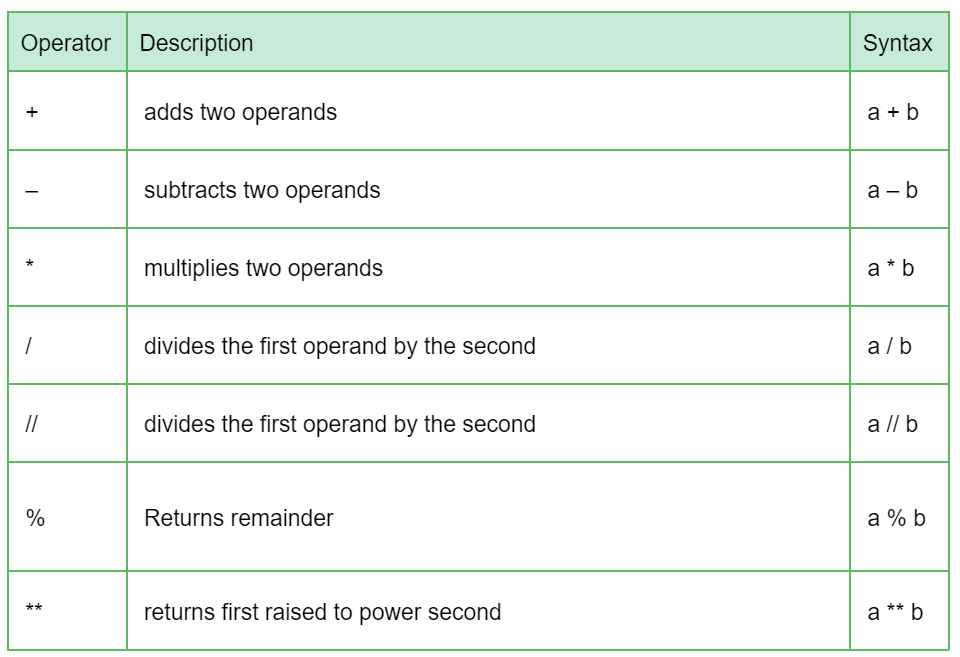

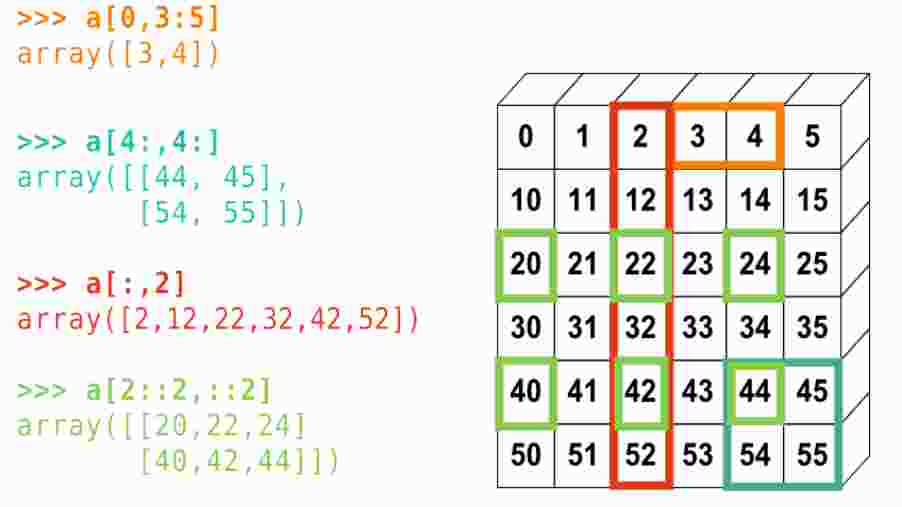

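- Data Transformation:
  - Scaling: Standardize numeric values to zero mean and unit variance with StandardScaler, or rescale them to a fixed range such as [0, 1] with MinMaxScaler (both from scikit-learn).
  - Encoding: Convert categorical variables into numerical representations using one-hot encoding (pandas get_dummies or scikit-learn's OneHotEncoder) or integer encoding (OrdinalEncoder; scikit-learn's LabelEncoder is intended for target labels rather than input features).
- Feature Generation:
  - Time-series features: Extract relevant information from time-stamped data, such as rolling (windowed) mean, median, and standard deviation.
  - Text-based features: Compute word frequencies, bag-of-words counts, or TF-IDF weights (for example with scikit-learn's CountVectorizer and TfidfVectorizer), using libraries like NLTK or spaCy for tokenization and other text preprocessing.
- Dimensionality Reduction:
  - PCA (Principal Component Analysis): Reduce the number of features while retaining most of the variance using PCA from scikit-learn.
  - t-SNE (t-Distributed Stochastic Neighbor Embedding): Visualize high-dimensional data in two or three dimensions using TSNE from scikit-learn. It is mainly a visualization tool rather than a preprocessing step, since scikit-learn's TSNE cannot transform new data.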
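Several of the techniques above can be sketched together on a small hypothetical DataFrame (`sales` and `visits` are made-up columns). The sketch standardizes and min-max scales the numeric columns, adds 3-day rolling statistics as time-series features, and uses PCA to compress the two standardized columns into one component; the window size and the number of components are arbitrary illustrative choices.

```python
import numpy as np
import pandas as pd
from sklearn.decomposition import PCA
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical daily data with two numeric columns
df = pd.DataFrame({
    'sales': [10.0, 12.0, 9.0, 15.0, 14.0, 18.0],
    'visits': [100.0, 120.0, 95.0, 150.0, 140.0, 170.0],
}, index=pd.date_range('2024-01-01', periods=6, freq='D'))

# Scaling: zero mean / unit variance versus a fixed [0, 1] range
standardized = StandardScaler().fit_transform(df)
normalized = MinMaxScaler().fit_transform(df)

# Time-series features: 3-day rolling statistics (the first two rows are NaN)
df['sales_roll_mean_3'] = df['sales'].rolling(window=3).mean()
df['sales_roll_std_3'] = df['sales'].rolling(window=3).std()

# Dimensionality reduction: project the two standardized columns onto one component
pca = PCA(n_components=1)
sales_visits_pc = pca.fit_transform(standardized)

# Share of the total variance kept by that single component
print(np.round(pca.explained_variance_ratio_, 3))
```

Because `sales` and `visits` move together in this toy data, one principal component keeps almost all of their variance; with less correlated features, PCA would need more components to retain the same share.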

Best Practices for Feature Engineering in Python

To ensure effective feature engineering:

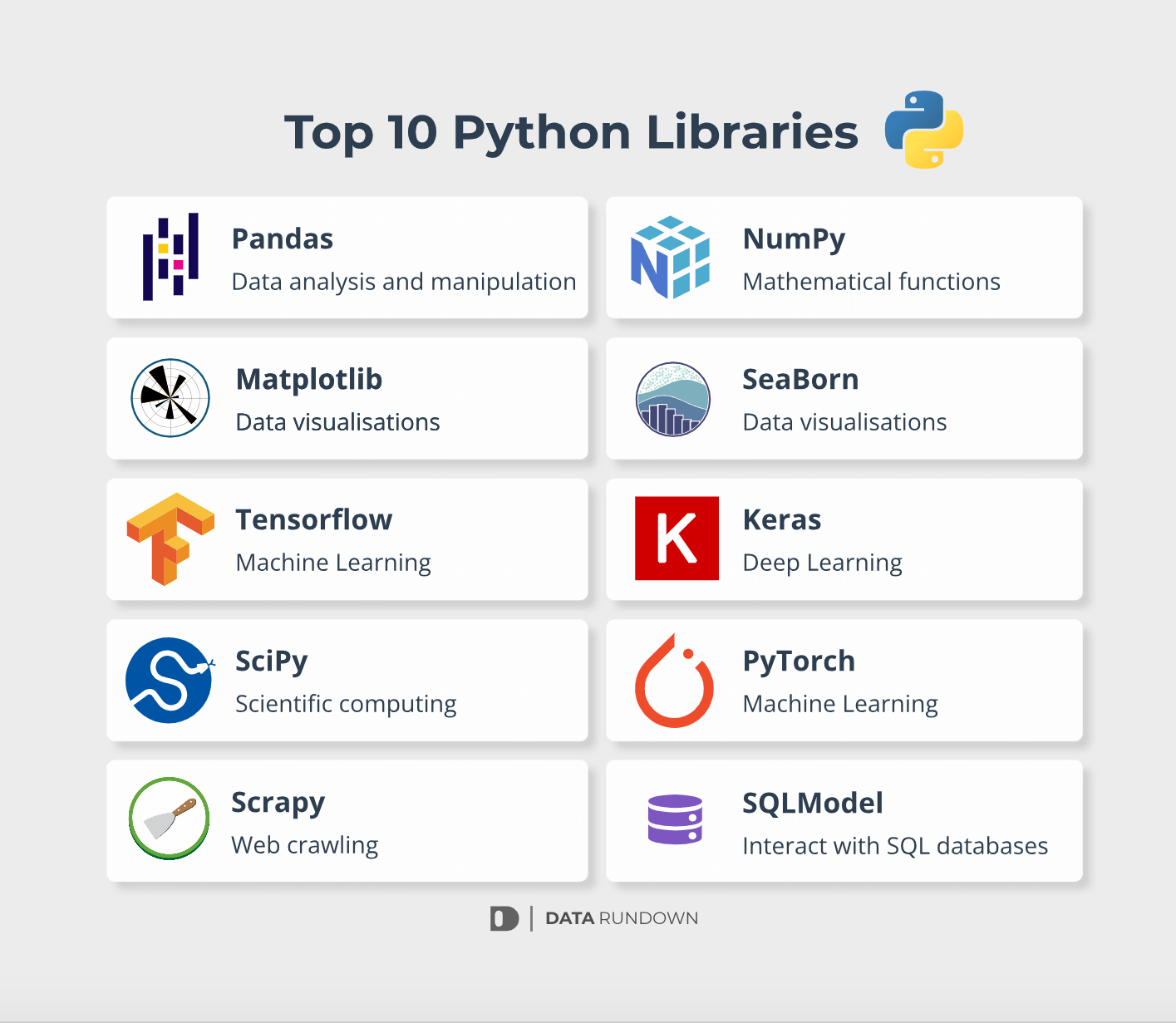

- Understand your data: Familiarize yourself with the distribution, relationships, and missing values in your dataset.
- Choose the right libraries: Use libraries like pandas, NumPy, scikit-learn, and statsmodels to perform various transformations and analysis.
- Experiment and iterate: Try different techniques and evaluate their impact on model performance on held-out data, using metrics like accuracy, precision, recall, or F1-score.
- Document your process: Keep track of the transformations you apply, as this helps with model interpretability and reproducibility.

Some popular Python libraries for feature engineering include:

- scikit-learn (machine learning)
- pandas (data manipulation)
- NumPy (numerical computation)
- statsmodels (statistical analysis)
- NLTK or spaCy (natural language processing)

By following these best practices and leveraging the power of Python, you can effectively engineer features that improve your machine learning models' performance and help you make better predictions.
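The "experiment and iterate" advice can be made concrete with scikit-learn pipelines and cross-validation. The sketch below uses scikit-learn's bundled wine dataset and a k-nearest-neighbors classifier purely as illustrative choices, and compares the same model with and without feature standardization. Wrapping each transformation in a pipeline also records exactly which steps were applied and ensures the scaler is fitted only on the training portion of each fold.

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# scikit-learn's bundled wine dataset: 13 numeric features on very different scales
X, y = load_wine(return_X_y=True)

# Two candidate feature-engineering choices, each wrapped in a pipeline
candidates = {
    'raw features': make_pipeline(KNeighborsClassifier()),
    'standardized features': make_pipeline(StandardScaler(), KNeighborsClassifier()),
}

# 5-fold cross-validation; within each fold, the scaler sees only the training split
results = {}
for name, model in candidates.items():
    scores = cross_val_score(model, X, y, cv=5, scoring='accuracy')
    results[name] = scores.mean()
    print(f'{name}: mean accuracy {scores.mean():.3f}')
```

Because k-nearest neighbors relies on distances, features with large numeric ranges dominate when left unscaled; comparing cross-validated scores like this is one way to check whether a transformation actually helps.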