K fold cross validation python w3schools


K-fold cross-validation in Python with linear regression


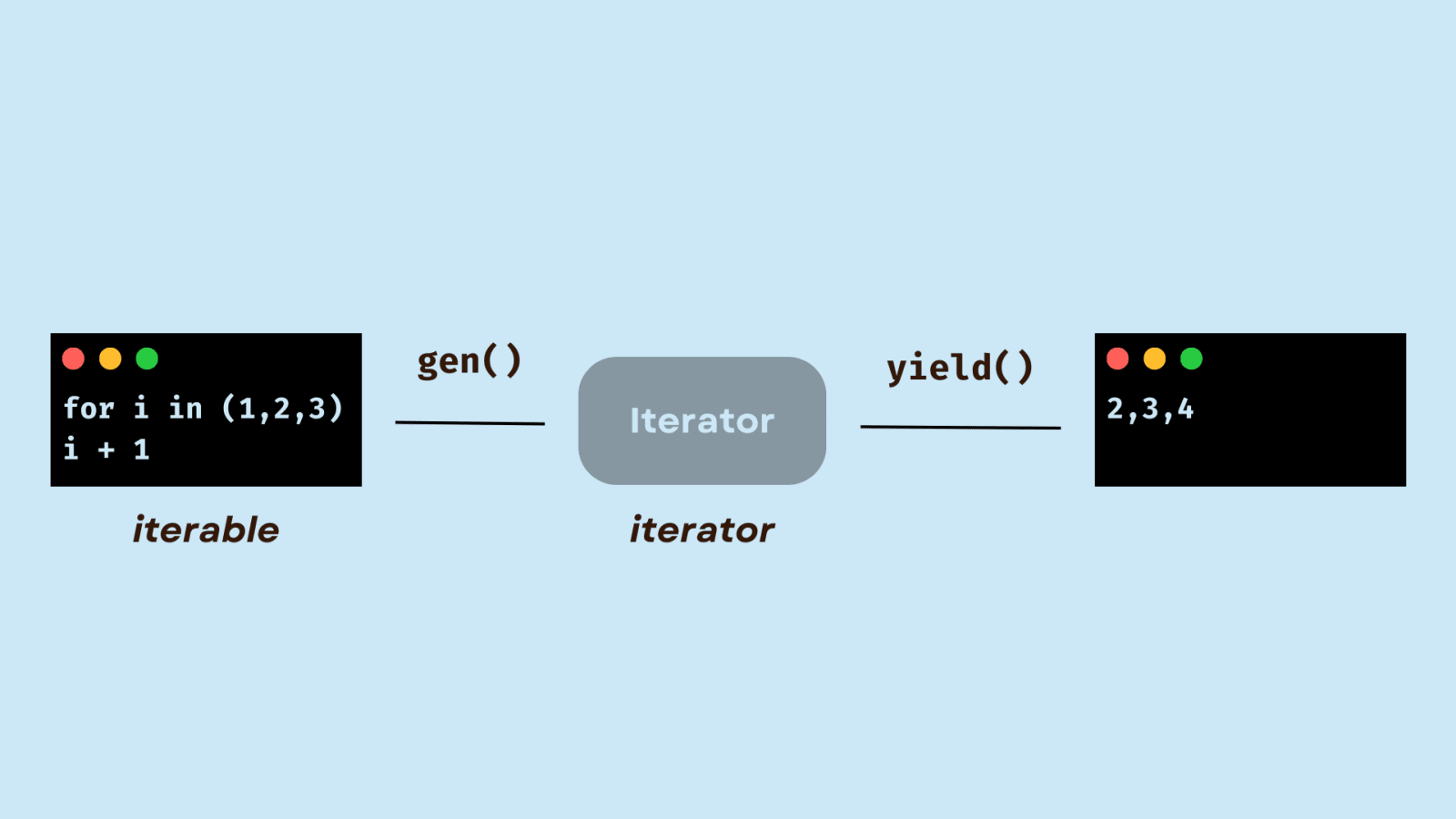

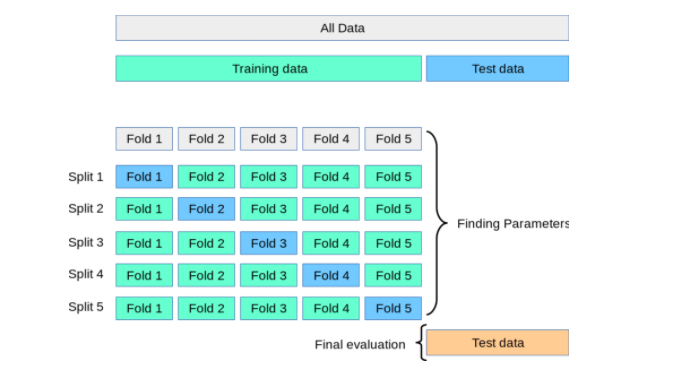

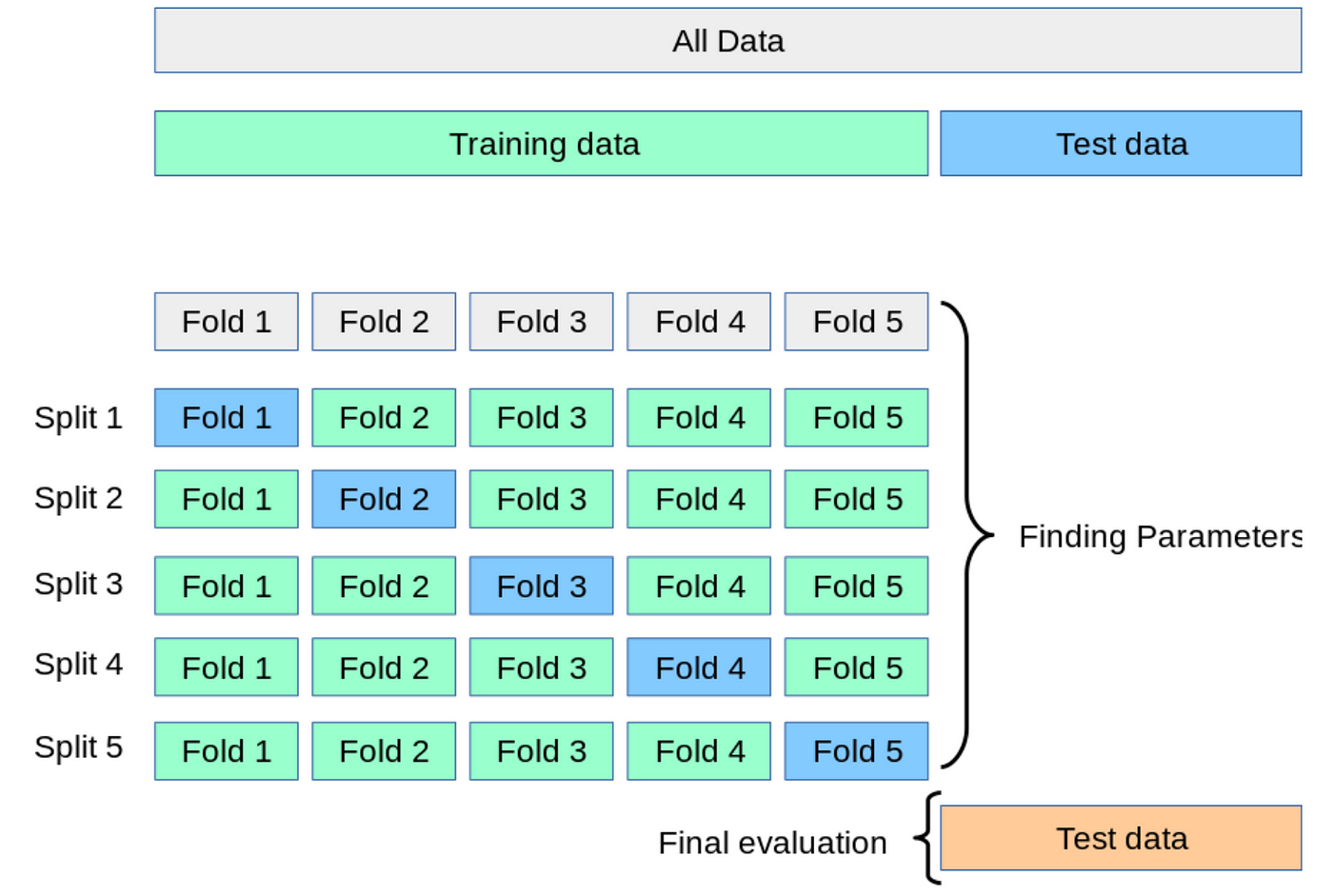

K-fold cross-validation is a widely used technique in machine learning for evaluating the performance of a model. It's particularly important when dealing with small datasets or when you want to get an estimate of how well your model will generalize to new, unseen data.
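To make the splitting idea concrete, here is a minimal sketch in plain NumPy of how a dataset's row indices might be partitioned into k folds, with each fold serving once as the validation set. The helper name `manual_kfold_indices` and its `seed` parameter are hypothetical and exist only for illustration; this is not how any particular library implements it.

```python
import numpy as np

def manual_kfold_indices(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) pairs for k roughly equal folds.

    Hypothetical helper for illustration only.
    """
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_samples)   # shuffle the row indices once
    folds = np.array_split(indices, k)     # k chunks of near-equal size
    for i in range(k):
        val_idx = folds[i]                 # fold i is held out for validation
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx

splits = list(manual_kfold_indices(n_samples=10, k=5))
for fold, (train_idx, val_idx) in enumerate(splits):
    print(f"fold {fold}: train={len(train_idx)} samples, val={len(val_idx)} samples")
```

Every sample lands in exactly one validation fold, so each observation is used for validation once and for training k - 1 times.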

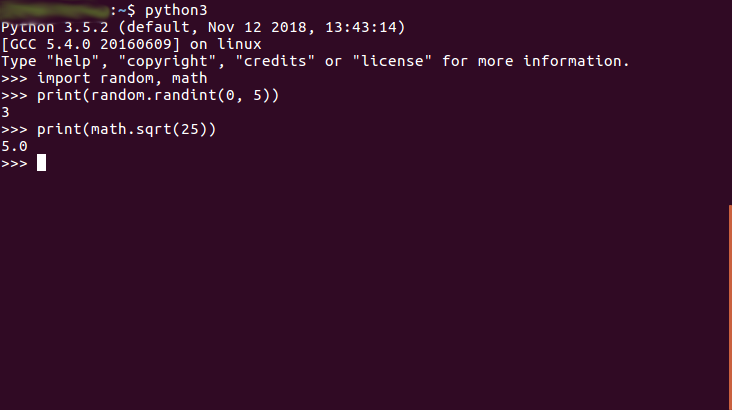

In Python, you can implement k-fold cross-validation with scikit-learn's `KFold` splitter. Here is a basic example that uses linear regression on synthetic data:

```python
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold
import numpy as np

# Generate some sample data (this could be your actual dataset)
np.random.seed(0)
X = 10 * np.random.rand(100, 1)
y = 3 + 2 * X.flatten() + np.random.randn(100)

# Initialize the model and the k-fold cross-validation object
model = LinearRegression()
kf = KFold(n_splits=5, shuffle=True, random_state=42)

# Train the model on each fold in the k-fold cross-validation
train_scores = []
test_scores = []

for train_index, val_index in kf.split(X):
    X_train, X_val = X[train_index], X[val_index]
    y_train, y_val = y[train_index], y[val_index]

    # Train the model on the training data for this fold
    model.fit(X_train, y_train)

    # Calculate the score (R-squared) for both the training and validation sets
    train_score = model.score(X_train, y_train)
    test_score = model.score(X_val, y_val)

    # Store these scores for later
    train_scores.append(train_score)
    test_scores.append(test_score)

# Print the mean and standard deviation of the training and testing scores
print("Training score: {:.4f} (+/- {:.4f})".format(np.mean(train_scores), np.std(train_scores)))
print("Testing score: {:.4f} (+/- {:.4f})".format(np.mean(test_scores), np.std(test_scores)))
```

In this code, we're using k-fold cross-validation to train and evaluate a linear regression model. In each of the 5 iterations, the model is fit on the 4 folds that make up the training set and then scored on both that training set and the held-out validation fold.

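We then calculate the mean and standard deviation of the scores (in this case, R-squared) across all folds. The mean validation score estimates how well the model generalizes to new data, the standard deviation shows how much that estimate varies from fold to fold, and a training score far above the validation score is a sign of overfitting.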
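The same per-fold training and validation scores can also be collected without writing the loop by hand. The sketch below uses scikit-learn's `cross_validate` with `return_train_score=True`; the synthetic data and the variable names `train_r2` and `val_r2` are assumptions made for illustration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_validate

# Synthetic data for illustration: y is roughly 3 + 2x plus Gaussian noise
rng = np.random.RandomState(0)
X = 10 * rng.rand(100, 1)
y = 3 + 2 * X.ravel() + rng.randn(100)

kf = KFold(n_splits=5, shuffle=True, random_state=42)

# cross_validate fits a fresh clone of the estimator on each training split
results = cross_validate(
    LinearRegression(), X, y,
    cv=kf, scoring="r2", return_train_score=True,
)

train_r2 = results["train_score"]  # one R-squared per fold, on the training folds
val_r2 = results["test_score"]     # one R-squared per fold, on the held-out fold

print("Training R^2:   {:.4f} (+/- {:.4f})".format(train_r2.mean(), train_r2.std()))
print("Validation R^2: {:.4f} (+/- {:.4f})".format(val_r2.mean(), val_r2.std()))
```

Passing the `KFold` object as `cv` keeps the shuffling and random seed explicit, so the folds are reproducible between runs.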

In real-world scenarios, you would work with your own dataset and often a more complex model, but this should give you a basic idea of how k-fold cross-validation works in Python.
