Can Python do multithreading?

I'm happy to help!

Python does support multithreading to some extent, but it's a bit more complicated than you might think.

In Python, you can use the threading module to create multiple threads that run concurrently. However, in standard builds of CPython, the Global Interpreter Lock (GIL) prevents multiple threads from executing Python bytecode in parallel.

The GIL is a mutex built into the CPython interpreter that protects the interpreter's internal state, such as object reference counts, from concurrent modification. It keeps access to Python objects thread-safe, but it also means that only one thread can execute Python bytecode at any given time, even if multiple CPU cores are available.

This doesn't mean you can't write multi-threaded programs in Python; you just won't be able to truly parallelize CPU-bound operations across multiple threads.

That being said, Python does provide some alternatives for achieving concurrency and parallelism:

Multiprocessing: You can use the multiprocessing module to create multiple processes that run concurrently, without the limitations imposed by the GIL. Each process has its own Python interpreter instance (and its own GIL), so you can truly parallelize CPU-bound operations.

Asyncio: The asyncio library provides a way to write asynchronous code using coroutines. This allows for single-threaded, event-driven programming, which can be used to create concurrent programs that don't rely on threading or multiprocessing.
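As a rough illustration of the asyncio approach, here is a minimal sketch. The coroutine name fetch and the delays are made up for this example, and asyncio.sleep stands in for a real non-blocking I/O call.

```python
import asyncio

async def fetch(name, delay):
    # asyncio.sleep is a placeholder for non-blocking I/O (e.g. a network request)
    await asyncio.sleep(delay)
    return f"{name} done"

async def main():
    # gather() runs the coroutines concurrently on a single thread and
    # returns their results in the order they were passed in
    return await asyncio.gather(
        fetch("a", 0.2),
        fetch("b", 0.1),
        fetch("c", 0.3),
    )

results = asyncio.run(main())
print(results)  # ['a done', 'b done', 'c done']
```

All three coroutines wait at the same time, so the whole run takes about as long as the slowest one rather than the sum of the delays.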

When to use multithreading in Python:

I/O-bound operations: If your program involves I/O operations (e.g., reading/writing files, network communication), you can still benefit from using multiple threads. A thread releases the GIL while it waits on I/O, so the GIL won't be a significant bottleneck, and your program will likely see performance improvements.

CPU-bound operations inside GIL-releasing extensions: If the CPU-intensive work (e.g., image processing, scientific computations) happens inside C extension code that releases the GIL, as many NumPy operations do, you can still use Python's threading module and the threads can run in parallel during that work. CPU-bound code written in pure Python will not speed up with threads.
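The I/O-bound case can be sketched as follows. This is only an illustration: fake_download and run_threaded are hypothetical names, and time.sleep stands in for a blocking I/O call (like real blocking I/O, it releases the GIL while waiting).

```python
import threading
import time

def fake_download(index, results):
    # time.sleep stands in for blocking I/O; the GIL is released while waiting
    time.sleep(0.2)
    results[index] = f"payload-{index}"

def run_threaded(count):
    results = [None] * count
    threads = [
        threading.Thread(target=fake_download, args=(i, results))
        for i in range(count)
    ]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every thread to finish
    elapsed = time.perf_counter() - start
    return results, elapsed

results, elapsed = run_threaded(5)
print(results)
print(f"elapsed: {elapsed:.2f}s")
```

Because the five waits overlap, the elapsed time should be close to a single 0.2-second wait rather than the 1 second a sequential loop would need.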

In conclusion, while Python's threading module is not suitable for parallelizing pure-Python CPU-bound operations due to the GIL, it still offers a way to create concurrent programs, and it works well for I/O-bound workloads.

Happy coding!

Python concurrent futures

Here's an overview of Python concurrent futures:

Python provides a convenient way to handle concurrency using the concurrent.futures module, which was introduced in Python 3.2. This module lets you run callables asynchronously in a pool of threads or a pool of processes.

What is concurrent.futures?

The concurrent.futures module is a high-level interface for asynchronously executing callables. It provides a way to schedule the execution of multiple tasks concurrently and collect their results.

When should I use concurrent.futures?

You should consider using concurrent.futures in situations where you have:

Multiple independent tasks: If you have several tasks that do not depend on one another, concurrent.futures can help you execute them concurrently.

I/O-bound operations: If your tasks involve I/O operations (e.g., reading or writing files, making network requests), concurrent.futures can help you use the system's resources more effectively by executing multiple tasks at once with a thread pool.

CPU-bound operations: If your tasks are CPU-bound and you want to take advantage of multi-core processors, concurrent.futures can help you parallelize your code with a process pool, which is not limited by the GIL.

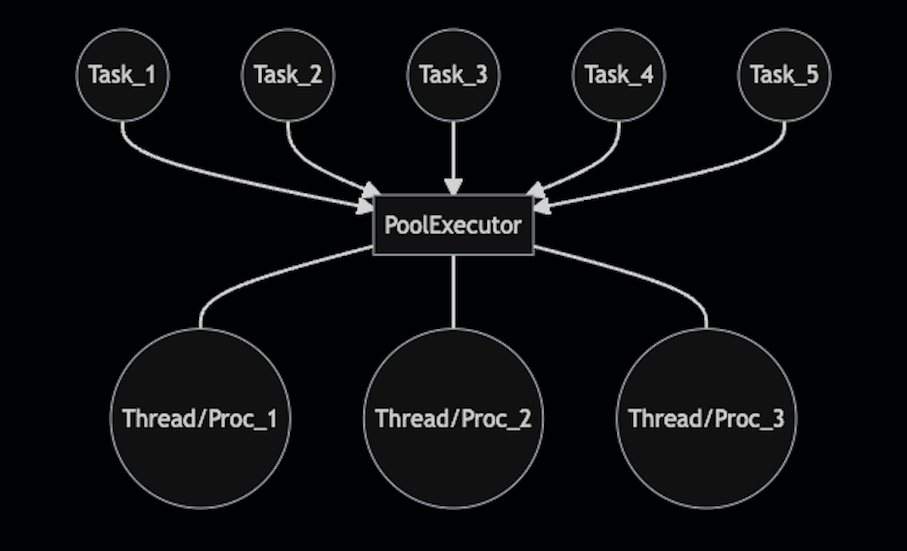

How does concurrent.futures work?

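The concurrent.futures module provides two main classes:

ThreadPoolExecutor: runs submitted callables in a pool of worker threads.

ProcessPoolExecutor: offers the same interface as ThreadPoolExecutor, but it uses processes instead of threads.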

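To use concurrent.futures, you create an instance of one of these classes and then submit tasks (functions) for execution using its submit() method. Each call to submit() returns a Future object immediately while the task runs asynchronously. You can check whether a task has finished with the Future's done() method and retrieve its return value with result(), which blocks until the task completes.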
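A minimal sketch of that workflow, assuming a trivial square function invented for the example:

```python
import concurrent.futures

def square(x):
    return x * x

with concurrent.futures.ThreadPoolExecutor(max_workers=2) as executor:
    # submit() returns a Future right away; the call itself runs in a worker thread
    futures = {executor.submit(square, n): n for n in range(4)}

    squares = {}
    # as_completed() yields each Future as soon as it finishes
    for future in concurrent.futures.as_completed(futures):
        n = futures[future]
        squares[n] = future.result()  # the task's return value

# Once the with block has exited, every Future is finished
all_done = all(f.done() for f in futures)
print(squares, all_done)
```

The dictionary maps each Future back to its input, which is a common way to tell results apart when they arrive out of order.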

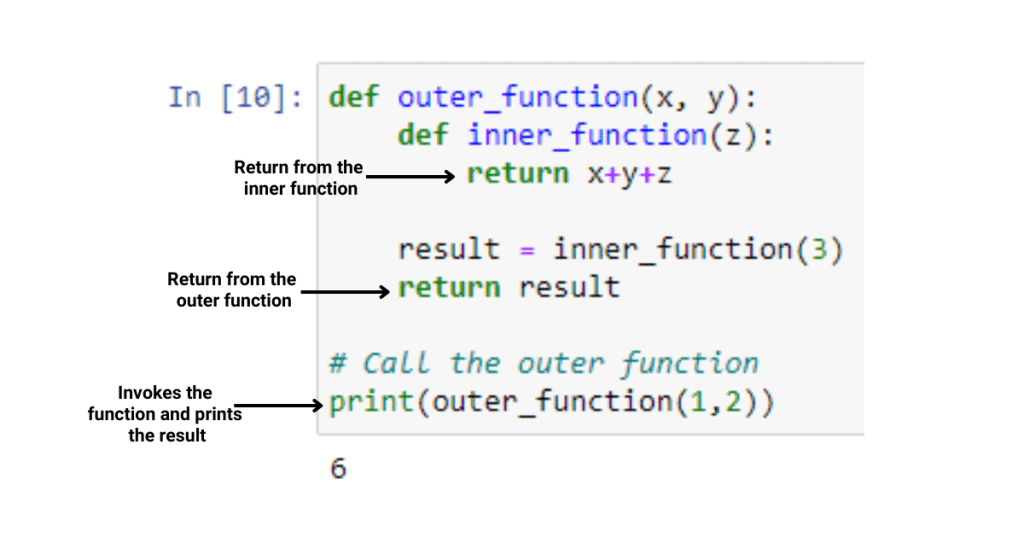

Here's a simple example:

    import concurrent.futures
    import time

    def task(x):
        print(f"Task {x} started")
        time.sleep(2)  # simulate a slow, blocking operation
        print(f"Task {x} finished")

    # Leaving the with block waits for all submitted tasks to finish
    with concurrent.futures.ThreadPoolExecutor(max_workers=3) as executor:
        for i in range(5):
            executor.submit(task, i)

    print("Main thread finished")

In this example, we create a ThreadPoolExecutor with 3 worker threads and submit 5 tasks (functions) to be executed concurrently. The tasks print a message indicating when they start and finish.
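The example above discards whatever the tasks return. When the return values matter, the executor's map() method is one convenient option. The sketch below uses a variant of task that returns a value; the multiplication and the short sleep are placeholders for real work.

```python
import concurrent.futures
import time

def task(x):
    time.sleep(0.1)  # stand-in for real work
    return x * 10

with concurrent.futures.ThreadPoolExecutor(max_workers=3) as executor:
    # map() schedules task(i) for each input and yields results in input order
    results = list(executor.map(task, range(5)))

print(results)  # [0, 10, 20, 30, 40]
```

Unlike as_completed(), map() preserves the order of the inputs, even if later tasks finish first.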

Benefits of using concurrent.futures

Using concurrent.futures can bring several benefits:

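Simplified concurrency: concurrent.futures provides a high-level interface that simplifies the process of managing concurrency in your code. You do not have to create, start, and join threads or processes yourself.

Easy debugging: With concurrent.futures, an exception raised inside a task is stored on its Future and re-raised when you call result(), so failures surface in the calling code. You can also debug your concurrent code by printing log messages or using logging libraries.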
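One behavior that helps with debugging is that an exception raised inside a task is captured by its Future and re-raised when result() is called. A small sketch, using a hypothetical parse_port function and made-up inputs:

```python
import concurrent.futures

def parse_port(text):
    return int(text)  # raises ValueError on non-numeric input

inputs = ["8080", "not-a-port", "443"]
parsed, errors = {}, {}

with concurrent.futures.ThreadPoolExecutor(max_workers=2) as executor:
    # Pair each input with its Future so failures can be traced back to it
    pending = [(text, executor.submit(parse_port, text)) for text in inputs]
    for text, future in pending:
        try:
            parsed[text] = future.result()
        except ValueError as exc:
            # The worker's exception is re-raised here, in the calling thread
            errors[text] = str(exc)

print(parsed)
print(errors)
```

If result() is never called, the exception stays stored on the Future and is not reported, so it is worth collecting results even from tasks that return nothing.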

Overall, concurrent.futures is a powerful and convenient way to handle concurrency in Python, making it easier for developers to write efficient and scalable programs.