Python gensim word2vec

Python gensim word2vec

I'm glad you're interested in learning about word embeddings! Word2Vec is a powerful technique used in natural language processing (NLP) to convert text data into numerical vectors that capture the meaning and context of individual words.

What are word embeddings?

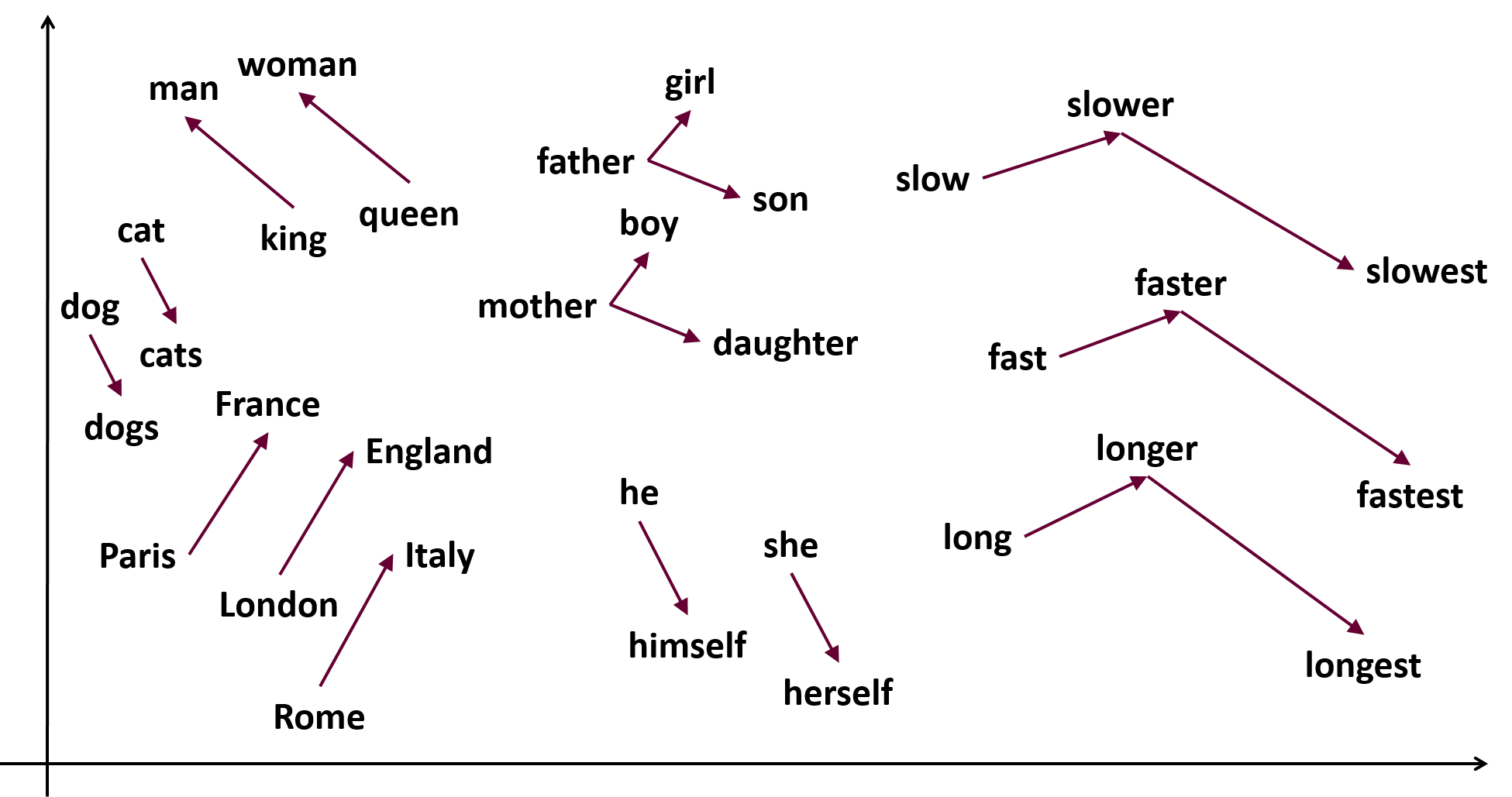

Word2Vec is a method for creating these word embeddings by analyzing large amounts of text data. The idea is to create a vector representation of each unique word in your corpus, where similar words have similar vectors. These vectors can be used as input features for various NLP tasks such as language modeling, sentiment analysis, and text classification.

How does Word2Vec work?

Word2Vec is based on the concept of predicting a target word given its context. This means that you feed the algorithm with a dataset of sentence pairs (e.g., "I am going to..." , "the park"), where one sentence contains the target word and another sentence contains its context.

The algorithm then calculates the similarity between these sentences based on how well they capture the meaning of the target word. To do this, it uses two neural network models:

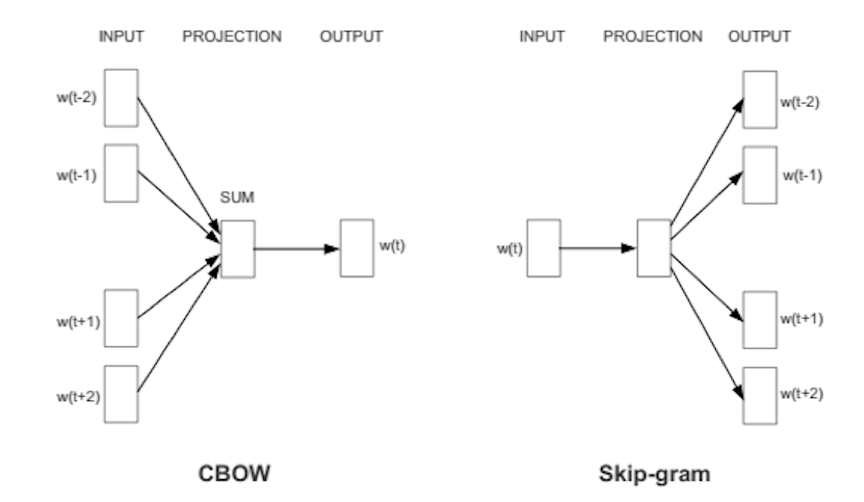

Continuous Bag-of-Words (CBOW): This model predicts a target word given its context by averaging the vectors of words in that context. Skip-Gram: This model predicts a set of context words given a target word.How to implement Word2Vec with Python and Gensim

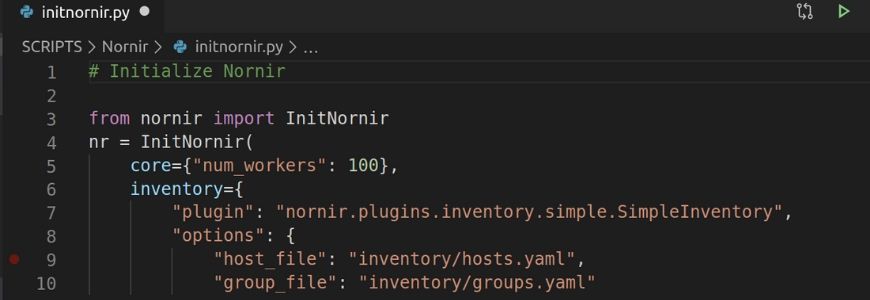

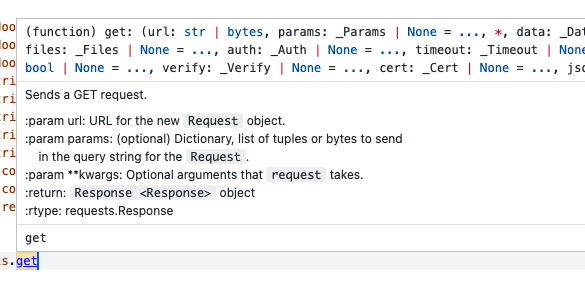

Gensim is an open-source Python library for topic modeling, document similarity analysis, and text manipulation. It has built-in support for the Word2Vec algorithm. Here's a basic example of how to use Gensim to create word embeddings:

from gensim.models import Word2Vec

from gensim.summarization.keyphrases import keywords

Load your dataset (e.g., sentences, documents)

sentences = [[word for word in document.split()] for document in your_dataset]

Create a Word2Vec model with default parameters (you can adjust these)

model = Word2Vec(sentences, size=100, window=5, min_count=1, workers=4)

Train the model

model.train(sentences, total_examples=len(your_dataset), epochs=10)

Get word embeddings

word_embeddings = {}

for word in model.wv.vocab:

word_embeddings[word] = model.wv[word]

print(word_embeddings)

What can you do with word embeddings?

Now that you have your word embeddings, you can use them as input features for various NLP tasks. Here are some examples:

Similarity search: Use cosine or euclidean distance to find the most similar words to a given target word. Language modeling: Train a language model (e.g., RNN, LSTM) on your word embeddings and use it for generation, classification, or regression tasks. Sentiment analysis: Train a classifier using word embeddings as input features and predict sentiment scores for new text data. Text classification: Use word embeddings to classify text into predefined categories (e.g., spam vs. ham emails).These are just a few examples of the many things you can do with word embeddings. Remember that word2vec is just one technique among many for representing text data as numerical vectors.

I hope this helps! Let me know if you have any further questions.

Python gensim tutorial

I'd be happy to provide a detailed tutorial on using Gensim for natural language processing (NLP) tasks in Python!

What is Gensim?

Gensim (Generative Syntax-based Information Model) is an open-source Python library for topic modeling and document similarity analysis. It allows you to perform various NLP tasks, such as:

Topic Modeling: Identify the underlying topics or themes in a large corpus of text data. Document Similarity Analysis: Measure the similarity between two documents based on their content.Prerequisites

Before we dive into the tutorial, make sure you have Python 3.x installed and familiar with basic Python concepts.

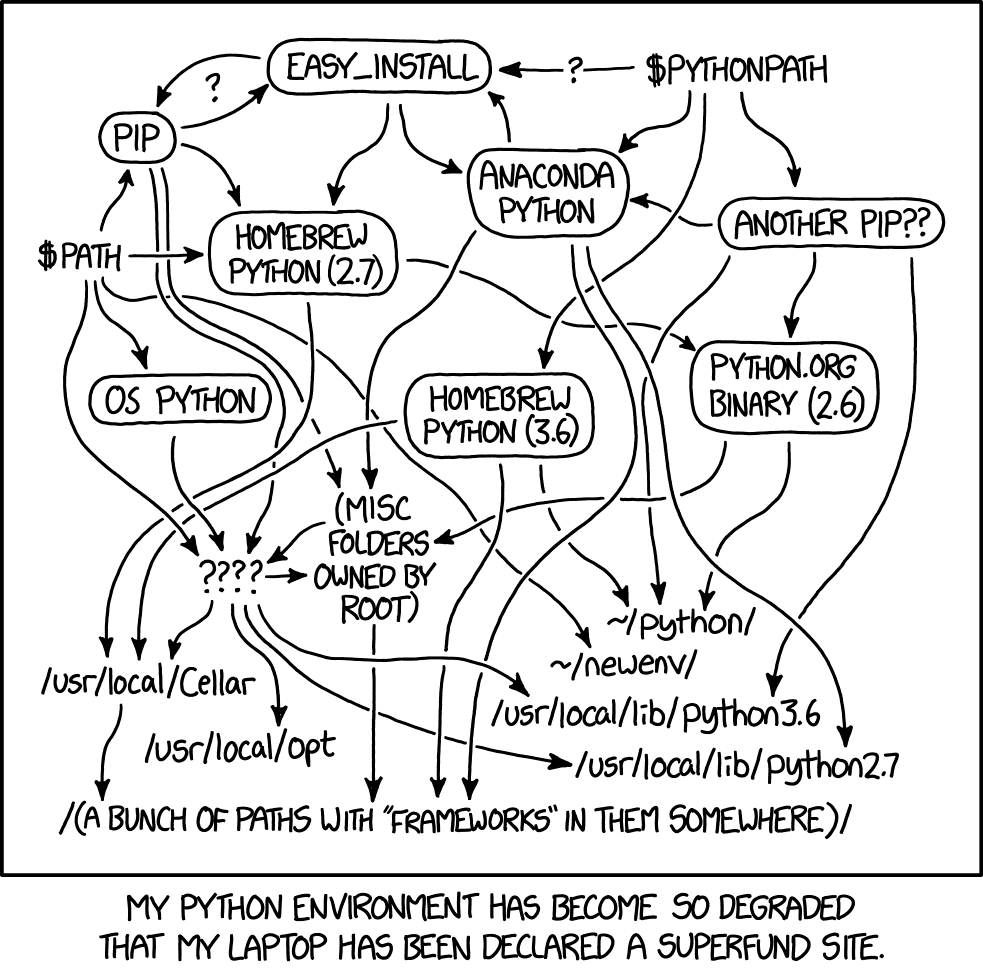

Step 1: Installing Gensim

To install Gensim, use pip:

pip install gensim

Step 2: Preparing the Data

For this tutorial, we'll use a sample corpus of text files (e.g., .txt or .md) stored in a directory. Create a new directory for your project and add your text files to it.

Next, create a Python script (e.g., gensim_tutorial.py) with the following code:

import osfrom gensim import corpora

Set the path to your corpus directorycorpus_dir = 'path/to/your/corpus/directory'

Create a list of file namesfile_names = [os.path.join(corpus_dir, f) for f in os.listdir(corpus_dir)]

print("Files found:", len(file_names))

Step 3: Preprocessing the Data

Modify the script to preprocess your text data. For example:

Tokenization: Split each text file into individual words (tokens). Stopword removal: Remove common stopwords like "the", "and", etc. Stemming or Lemmatizing: Reduce words to their base form.Here's an updated script:

import osfrom gensim import corpora, utils

from nltk.tokenize import word_tokenize

Set the path to your corpus directorycorpus_dir = 'path/to/your/corpus/directory'

Create a list of file namesfile_names = [os.path.join(corpus_dir, f) for f in os.listdir(corpus_dir)]

print("Files found:", len(file_names))

Initialize an empty list to store preprocessed documentsdocs = []

for filename in file_names:

with open(filename, 'r') as file:

text = file.read()

Tokenize the texttokens = word_tokenize(text.lower())

Remove stop words and punctuationtokens = [t for t in tokens if not utils.is_stop(t) and not t.isalpha()]

Add the preprocessed document to the listdocs.append(tokens)

print("Preprocessed documents:", len(docs))

Step 4: Creating a Corpus

Now that you have your preprocessed data, create a Gensim corpus object:

from gensim import corpora Create a dictionary from the preprocessed documentsdict = corpora.Dictionary(docs)

Convert the list of documents into a Gensim corpuscorpus = [dict.doc2bow(doc) for doc in docs]

Step 5: Training a Topic Model

Choose a topic modeling algorithm (e.g., Latent Dirichlet Allocation (LDA)) and train it on your corpus:

from gensim.models import TfidfModel, LdaModel Create a TF-IDF model to transform the datatfidf_model = TfidfModel(corpus)

Convert the corpus into TF-IDF representationcorpus_tfidf = [tfidf_model[doc] for doc in corpus]

Train an LDA topic model on the TF-IDF corpuslda_model = LdaModel(corpus_tfidf, id2word=dict, passes=15)

Step 6: Analyzing the Results

Use the trained topic model to:

Identify topics: Get a list of the top words for each topic. Compute document similarities: Calculate the similarity between two documents based on their topic distributions.Here's an example code snippet:

# Get the top words for each topictopic_words = [(topic_id, [word for word, score in topics[topic_id]] )

for topic_id in range(lda_model.num_topics)]

print("Topic words:", topic_words)

Compute document similaritiesdocument_similarities = []

for i in range(len(corpus)):

for j in range(i + 1, len(corpus)):

similarity = lda_model.doc_similarity(corpus[i], corpus[j])

document_similarities.append((i, j, similarity))

print("Document similarities:", document_similarities)

That's it! This tutorial has covered the basic steps of using Gensim for topic modeling and document similarity analysis. You can now explore more advanced topics (pun intended!), such as:

Topic evolution: Track changes in topic distributions over time. Document clustering: Group documents based on their topic similarities.Remember to keep your data well-preprocessed, and you'll be amazed at the insights Gensim can uncover!