How to code a recurrent neural network in Python?

Here is an overview of how you can implement a Recurrent Neural Network (RNN) in Python:

What are Recurrent Neural Networks?

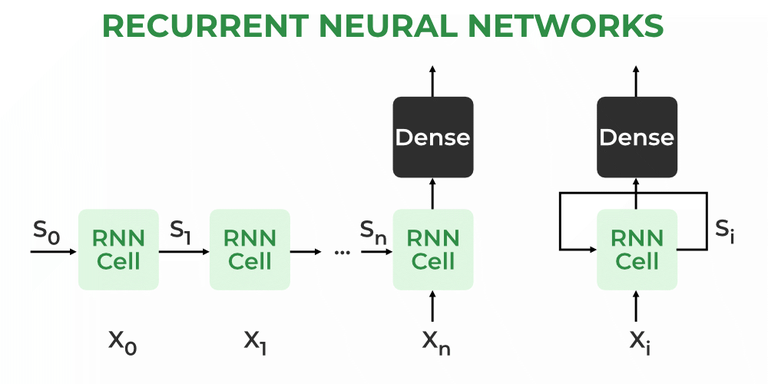

Recurrent Neural Networks, or RNNs, are a type of neural network that uses the output from previous steps as inputs for future predictions. They are well-suited to modeling time series data, natural language processing, and speech recognition tasks.
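To make the idea of reusing earlier output concrete, here is a minimal NumPy sketch of the recurrence inside a plain RNN. The function name `rnn_forward`, the weight names `W_xh`, `W_hh`, `b_h`, and the sizes are illustrative assumptions, and the weights are random rather than trained.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over one sequence and return every hidden state.

    inputs: array of shape (timesteps, n_features)
    """
    hidden_size = W_hh.shape[0]
    h = np.zeros(hidden_size)  # initial hidden state
    states = []
    for x_t in inputs:
        # The new state mixes the current input with the previous state.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

# Hypothetical sizes and random (untrained) weights, for illustration only
rng = np.random.default_rng(0)
timesteps, n_features, hidden_size = 10, 2, 4
W_xh = rng.normal(scale=0.5, size=(hidden_size, n_features))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)

sequence = rng.random((timesteps, n_features))
hidden_states = rnn_forward(sequence, W_xh, W_hh, b_h)
print(hidden_states.shape)  # (10, 4)
```

Each row of `hidden_states` depends on all inputs seen so far, which is what lets the network use earlier steps when making later predictions.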

Building an RNN in Python:

In order to build an RNN in Python, you need a good understanding of Python programming, as well as some familiarity with neural networks and deep learning concepts. Here are the basic steps:

Import necessary libraries: You will need to import several libraries, including NumPy (numpy) for numerical computations, Pandas (pandas) for data manipulation, and TensorFlow or PyTorch for building the neural network. The example below uses TensorFlow's Keras API.

import numpy as np

import pandas as pd

from tensorflow.keras.layers import Dense, SimpleRNN, LSTM

from tensorflow.keras.models import Sequential

# Example: 1000 examples, each with 10 timesteps and 2 features

X = np.random.rand(1000, 10, 2)

y = np.random.rand(1000, 1) # target data (e.g., regression task)

# Define the model

model = Sequential()

model.add(SimpleRNN(units=50, input_shape=(10, 2))) # input shape (10 timesteps x 2 features)

model.add(Dense(1))

# Compile the model

model.compile(loss='mean_squared_error', optimizer='adam')

# Train the model

model.fit(X, y, epochs=100)
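The random arrays above stand in for real data. As a rough sketch of how a real one-dimensional time series could be reshaped into the (samples, timesteps, features) layout the model expects, the helper below cuts a series into overlapping windows and uses the value after each window as the target. The helper name `make_windows` and the sine-wave signal are assumptions made for illustration.

```python
import numpy as np

def make_windows(series, timesteps):
    """Split a 1-D series into overlapping windows and next-step targets."""
    series = np.asarray(series, dtype=float)
    n_samples = len(series) - timesteps
    X = np.stack([series[i:i + timesteps] for i in range(n_samples)])
    y = series[timesteps:]
    # Add a trailing feature axis: (samples, timesteps, 1 feature)
    return X[..., np.newaxis], y.reshape(-1, 1)

series = np.sin(np.linspace(0, 20, 200))  # toy signal, stands in for real data
X, y = make_windows(series, timesteps=10)
print(X.shape, y.shape)  # (190, 10, 1) (190, 1)
```

With a single feature per timestep, the matching input shape for the recurrent layer would be (10, 1) rather than (10, 2).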

Tips and Variations:

If your sequences are long, consider using Long Short-Term Memory (LSTM) or Gated Recurrent Unit (GRU) layers instead of SimpleRNN.

For more complex tasks like text classification or language modeling, you might need to use word embeddings like Word2Vec or GloVe.

Experiment with different RNN architectures, hidden sizes, and optimizer parameters.

Code for a Simple RNN:

Here is some Python code that implements a simple RNN:

import numpy as np

from tensorflow.keras.layers import Dense, SimpleRNN

from tensorflow.keras.models import Sequential

# Example data (input shape: 10 timesteps x 2 features)

X = np.random.rand(1000, 10, 2)

y = np.random.rand(1000, 1) # target data, one value per sequence

# Define the model

model = Sequential()

model.add(SimpleRNN(units=50, input_shape=(10, 2))) # input shape (10 timesteps x 2 features)

model.add(Dense(1))

# Compile and train the model

model.compile(loss='mean_squared_error', optimizer='adam')

model.fit(X, y, epochs=100)

# Make predictions on new data

new_X = np.random.rand(1, 10, 2) # example input sequence

prediction = model.predict(new_X)

Note that this code assumes you have the necessary libraries installed (NumPy and TensorFlow).

What are recurrent neural networks (RNNs) for language modeling in Python?

Recurrent Neural Networks (RNNs) are a type of deep learning model that excels at processing sequential data with time dependencies. In the context of natural language processing (NLP), RNNs are particularly useful for tasks such as language modeling, machine translation, and text summarization.

What is Language Modeling?

Language modeling is the task of predicting the next word in a sequence given the context of the previous words. This requires understanding the nuances of human language, including grammar, syntax, and semantics. Language models can be applied to various NLP tasks, such as text generation, language translation, and chatbots.
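As a small illustration of what "predicting the next word given the previous words" means as training data, the sketch below turns one sentence into (context, target) pairs. The function name `next_word_pairs` and the whitespace-based tokenization are simplifying assumptions; real pipelines usually use a proper tokenizer.

```python
def next_word_pairs(sentence):
    """Return (context, target) pairs for next-word prediction."""
    words = sentence.lower().split()
    return [(words[:i], words[i]) for i in range(1, len(words))]

pairs = next_word_pairs("The quick brown fox jumps")
for context, target in pairs:
    print(context, "->", target)
```

Each pair asks the model to predict one word from everything that came before it, so a sentence of five words yields four training examples.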

How do RNNs work for Language Modeling?

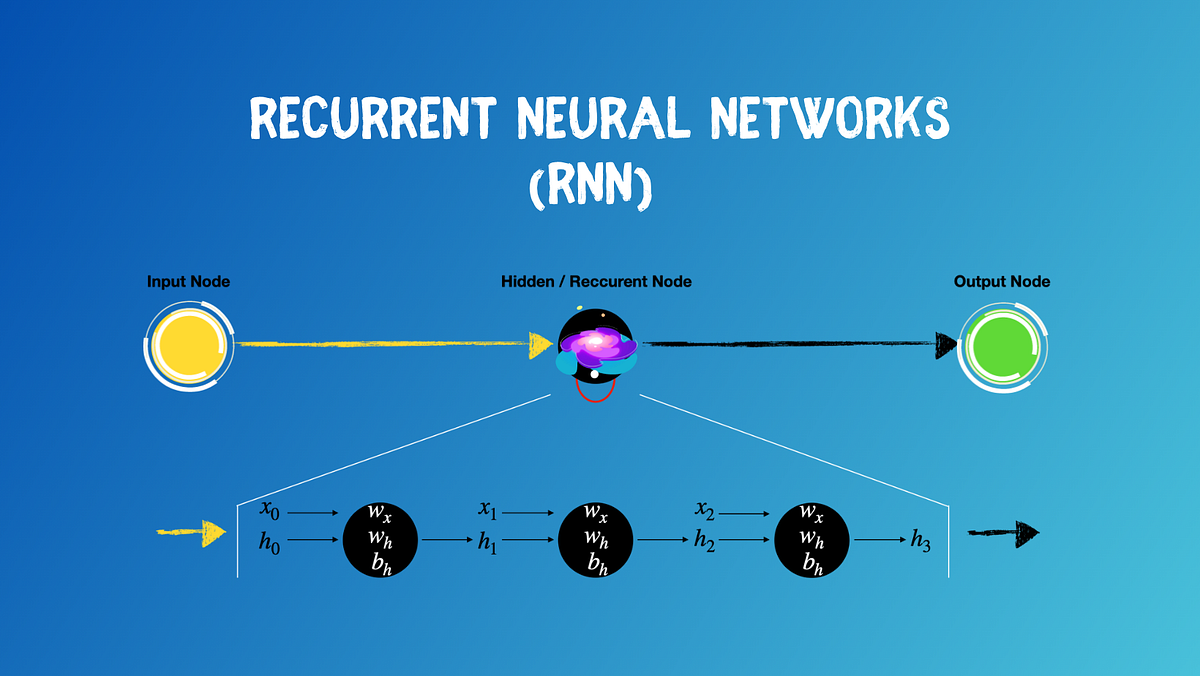

RNNs are well-suited for language modeling due to their ability to capture temporal dependencies in sequential data. The architecture of an RNN consists of recurrent units that maintain a hidden state vector through the sequence. This hidden state captures information from previous inputs and is used to compute the next output.

For language modeling, an RNN takes as input a sequence of words (e.g., "The quick brown fox") and predicts the probability distribution over all possible subsequent words (e.g., "jumps"). The RNN processes each word in the sequence and updates its internal state based on the context. This process is repeated for each word in the sequence, allowing the model to carry context from earlier words forward. In practice, simple RNNs struggle with very long-range dependencies, which is one motivation for LSTMs.
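The "probability distribution over all possible subsequent words" is typically produced by projecting the hidden state onto the vocabulary and applying a softmax. The sketch below shows that last step in NumPy, assuming a four-word toy vocabulary, a random vector standing in for the hidden state, and hypothetical output weights `W_hy` and `b_y`.

```python
import numpy as np

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shifted = logits - np.max(logits)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()

vocab = ["jumps", "sleeps", "runs", "the"]  # toy vocabulary (hypothetical)
rng = np.random.default_rng(0)
hidden_size = 8
h = rng.normal(size=hidden_size)  # stands in for the final hidden state
W_hy = rng.normal(size=(len(vocab), hidden_size))  # hypothetical output weights
b_y = np.zeros(len(vocab))

probs = softmax(W_hy @ h + b_y)
for word, p in zip(vocab, probs):
    print(f"{word}: {p:.3f}")
```

Because the weights here are random, the printed probabilities are meaningless; in a trained model, the word that best fits the context would tend to receive the highest probability.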

Types of RNNs

There are two primary types of RNNs:

Simple RNN: This type of RNN processes the input sequence one step at a time, using the hidden state from the previous time step.

Long Short-Term Memory (LSTM): LSTMs are a variant of RNNs that use memory cells to selectively forget or remember information from previous time steps.

Python Implementation

In Python, you can implement an RNN for language modeling using popular libraries such as TensorFlow or PyTorch. Here's a simplified example of how you might implement an LSTM-based language model using Keras:

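import numpy as np

from keras.layers import Embedding, LSTM, Dense

from keras.models import Sequential

# Define the input sequence length and vocabulary size

input_length = 100

vocabulary_size = 5000

# Placeholder training data (random word indices); replace with your tokenized dataset

X_train = np.random.randint(0, vocabulary_size, size=(1000, input_length))

y_train = np.random.randint(0, vocabulary_size, size=(1000,))

# Create an RNN model with one hidden layer

model = Sequential()

model.add(Embedding(vocabulary_size, 64)) # maps word indices to dense vectors

model.add(LSTM(128))

model.add(Dense(vocabulary_size, activation='softmax'))

# Compile the model (sparse loss because the targets are integer word indices)

model.compile(loss='sparse_categorical_crossentropy', optimizer='adam')

# Train the model on your dataset

model.fit(X_train, y_train, epochs=10)

# Use the trained model to generate text, starting from a seed sequence of word indices

input_sequence = list(X_train[0])

generated_indices = []

for i in range(100):

    window = np.array([input_sequence[-input_length:]]) # most recent words, shape (1, input_length)

    output_probs = model.predict(window, verbose=0)

    next_word_idx = int(np.argmax(output_probs[0])) # greedy choice of the most likely word

    generated_indices.append(next_word_idx)

    input_sequence.append(next_word_idx)

print(generated_indices) # map indices back to words with your tokenizer's vocabulary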

This example demonstrates the basic concept of using RNNs for language modeling. You can modify this code to suit your specific requirements and dataset.
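The generation loop works with word indices rather than strings, so text has to be encoded before prediction and decoded afterwards. Here is a minimal pure-Python sketch of that mapping; the helper names `build_vocab`, `encode`, and `decode` are hypothetical, and the whitespace tokenization is a simplification.

```python
def build_vocab(texts):
    """Assign an integer index to each distinct word, in order of first appearance."""
    word_to_idx = {}
    for text in texts:
        for word in text.lower().split():
            if word not in word_to_idx:
                word_to_idx[word] = len(word_to_idx)
    idx_to_word = {idx: word for word, idx in word_to_idx.items()}
    return word_to_idx, idx_to_word

def encode(text, word_to_idx):
    """Turn a string into a list of word indices."""
    return [word_to_idx[word] for word in text.lower().split()]

def decode(indices, idx_to_word):
    """Turn a list of word indices back into a string."""
    return " ".join(idx_to_word[i] for i in indices)

word_to_idx, idx_to_word = build_vocab(["The quick brown fox jumps"])
ids = encode("the fox jumps", word_to_idx)
print(ids)                       # [0, 3, 4]
print(decode(ids, idx_to_word))  # the fox jumps
```

A real vocabulary would usually also reserve indices for padding and unknown words, which this sketch leaves out.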

Conclusion

Recurrent Neural Networks are a powerful tool for processing sequential data with time dependencies, making them well-suited for natural language processing tasks such as language modeling. By implementing an RNN in Python, you can create a model that predicts the next word in a sequence given the context of previous words.