Python data cleaning w3schools example

Here is an example of basic data cleaning in Python, using a CSV file named w3schools.csv.

The following code snippet is a step-by-step guide to cleaning and preprocessing a CSV file with pandas and scikit-learn. The file name and the column names 'Age' and 'Fare' are examples; substitute the ones in your own dataset.

import pandas as pd

# Read in the CSV file

df = pd.read_csv('w3schools.csv')

print("Before cleaning:")

print(df.head())

# Remove duplicates: a dataset may contain duplicate rows; drop_duplicates removes them

df.drop_duplicates(inplace=True)

print("\nAfter removing duplicates:")

print(df.head())

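# Drop any columns that contain missing values. Note: this removes a column even if only one value is missing, so skip it for columns you intend to impute later

df.dropna(axis=1, how='any', inplace=True)

print("\nAfter dropping columns with missing values:")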

print(df.head())

# Fill in missing values (e.g., imputing the mean or median)

from sklearn.impute import SimpleImputer

imputer = SimpleImputer(strategy='mean')  # you can change 'mean' to 'median'

df[['Age', 'Fare']] = imputer.fit_transform(df[['Age', 'Fare']])

print("\nAfter filling in missing values:")

print(df.head())

# Remove rows that still contain missing values

df.dropna(inplace=True)

print("\nAfter removing rows with missing values:")

print(df.head())

# Handle categorical data: replace text strings with integer codes

from sklearn.preprocessing import LabelEncoder

label_encoder = LabelEncoder()

# Encode every object-typed (string) column; the encoder is refit for each column

for column in df.columns:
    if df[column].dtype == 'object':
        df[column] = label_encoder.fit_transform(df[column])

print("\nAfter encoding categorical data:")

print(df.head())
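For the encoding step, it can help to keep the mapping from integer codes back to the original strings. The following is a minimal sketch that uses pandas' factorize in place of LabelEncoder (a substitution made here for illustration); the city column and its values are hypothetical.

```python
import pandas as pd

# Hypothetical data; the column name and values are for illustration only.
df = pd.DataFrame({"city": ["Oslo", "Lima", "Oslo", "Cairo"]})

# factorize returns integer codes plus the unique values,
# numbered in order of first appearance.
codes, uniques = pd.factorize(df["city"])
df["city_code"] = codes

# Build a lookup so the codes can be translated back later.
code_to_city = dict(enumerate(uniques))

print(df)
print(code_to_city)
```

Note that factorize numbers categories in order of first appearance, whereas LabelEncoder numbers them in sorted order, so the two can assign different codes to the same column.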

In this example:

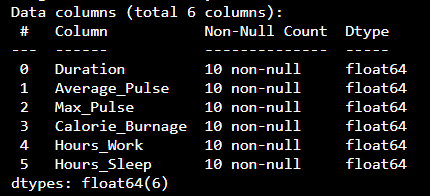

We load the CSV file using pd.read_csv and print its first few rows.

To remove duplicates, we use the drop_duplicates function with the inplace=True argument, which modifies the original DataFrame.

Next, we drop any columns that contain missing values using dropna with axis=1 (column-wise) and how='any', which drops a column if any of its values is missing. Note that a column removed here, such as 'Age' or 'Fare', is no longer available for the imputation step that follows, so in practice you would choose between dropping and imputing for a given column.

To impute missing values with the mean or median, we create a SimpleImputer object from scikit-learn and apply it to the 'Age' and 'Fare' columns. These two strategies apply only to numeric columns; for text columns, strategy='most_frequent' is an option.

We then remove any rows that still contain missing values, using dropna with its default axis=0.

Finally, to handle categorical data (text strings), we use a LabelEncoder from scikit-learn to replace each distinct string with an integer code. We iterate through the DataFrame's columns and encode any object-type columns.
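The difference between the dropna variants is easiest to see on a tiny DataFrame. The following is a minimal sketch with hypothetical columns a, b, and c.

```python
import numpy as np
import pandas as pd

# Hypothetical data: column "b" has one missing value,
# column "c" is entirely missing.
df = pd.DataFrame({
    "a": [1, 2, 3],
    "b": [4.0, np.nan, 6.0],
    "c": [np.nan, np.nan, np.nan],
})

# axis=1, how="any": drop every column with at least one missing value.
cols_any = df.dropna(axis=1, how="any")

# axis=1, how="all": drop only columns in which every value is missing.
cols_all = df.dropna(axis=1, how="all")

# axis=0 (the default): drop every row with at least one missing value.
rows_any = df.dropna(axis=0, how="any")

print(list(cols_any.columns))
print(list(cols_all.columns))
print(len(rows_any))
```

Here the row-wise drop removes every row, because the empty column c puts a missing value in each one. Checking for entirely empty columns first can avoid discarding the whole dataset by accident.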

Remember that this is just an example of basic data cleaning steps and you may need to add more depending on your specific dataset's characteristics and needs!
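One way to package these steps is a small function that imputes before it drops, so that columns with only a few gaps are not discarded. The following is a minimal sketch using pandas only (fillna with the column median stands in for SimpleImputer); the function name clean and the sample columns are assumptions for illustration.

```python
import numpy as np
import pandas as pd


def clean(df: pd.DataFrame) -> pd.DataFrame:
    """Return a cleaned copy: dedupe, impute numeric gaps, drop remaining gaps."""
    out = df.drop_duplicates().copy()

    # Fill missing numeric values with each column's median.
    numeric_cols = out.select_dtypes(include="number").columns
    out[numeric_cols] = out[numeric_cols].fillna(out[numeric_cols].median())

    # Any gaps left are in non-numeric columns; drop those rows.
    return out.dropna().reset_index(drop=True)


# Hypothetical sample data.
raw = pd.DataFrame({
    "name": ["Ann", "Bob", "Bob", None, "Eve"],
    "age": [30.0, np.nan, np.nan, 40.0, 50.0],
})

cleaned = clean(raw)
print(cleaned)
```

Working on a copy rather than using inplace=True leaves the raw data untouched, which makes it easier to compare the result with the input.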

What are the Python data wrangling libraries?

Python has a vast array of libraries and tools for data wrangling, which is a crucial step in the data science process. Data wrangling involves cleaning, transforming, and preparing data for analysis. Here are some popular Python data wrangling libraries:

Pandas: Pandas is arguably the most widely used library for data manipulation in Python. It provides data structures such as Series (1-dimensional labeled array) and DataFrame (2-dimensional labeled data structure with columns of potentially different types). Pandas offers various features like merging, reshaping, filtering, grouping, and handling missing data.

NumPy: NumPy is a library for efficient numerical computation in Python. It provides support for large, multi-dimensional arrays and matrices, and is the foundation of most scientific computing in Python. While not specifically designed for data wrangling, NumPy's array structures make it useful for many data manipulation tasks.

Openpyxl: Openpyxl is an open-source library that allows you to read, create, and modify Excel files (.xlsx) using Python. This can be particularly helpful when working with datasets stored in this format.

Statsmodels: Statsmodels is a statistical modeling library for Python. While not strictly a data wrangling tool, it offers a range of useful functions for performing statistical analyses on your data, such as hypothesis testing, confidence intervals, and regression models.

SciPy: SciPy is a library for scientific computing in Python. It provides algorithms and mathematical functions for tasks like signal processing, linear algebra, optimization, statistics, and more. While not specifically designed for data wrangling, SciPy's capabilities can be useful for certain data manipulation tasks.

Dask: Dask is a parallel computing library whose DataFrame mirrors much of the pandas API. This allows you to scale your data analysis by running computations on multiple CPU cores or on distributed systems like clusters or cloud environments.

Vaex: Vaex is a fast library for processing large tabular datasets. It uses lazy, out-of-core evaluation to filter, sort, group, aggregate, and visualize data that may not fit in memory, making use of multiple CPU cores.

Modin: Modin is a drop-in replacement for pandas that parallelizes DataFrame operations across CPU cores, using an execution engine such as Ray or Dask.

Pandas-datareader: Pandas-datareader is a Python library designed specifically for reading data from various online sources such as Quandl, Yahoo Finance, FRED, World Bank, and more. It makes it easy to download and manipulate financial, economic, or other types of time-series data.

Missingno: Missingno is a Python library that helps visualize missing data in your datasets. It provides matrix, bar, heatmap, and dendrogram plots that show the distribution and patterns of missing data across different columns.

These libraries can significantly streamline the process of preparing your data for analysis, making it easier to extract insights from your dataset.
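As a brief illustration of the pandas features listed above (merging, filtering, and grouping), here is a minimal sketch; the table and column names are hypothetical.

```python
import pandas as pd

# Hypothetical tables for illustration.
orders = pd.DataFrame({
    "customer_id": [1, 2, 1, 3],
    "amount": [20.0, 35.0, 15.0, 50.0],
})
customers = pd.DataFrame({
    "customer_id": [1, 2, 3],
    "region": ["north", "south", "north"],
})

# Merging: attach each order's region via the shared key.
merged = orders.merge(customers, on="customer_id", how="left")

# Filtering: keep orders of at least 20.
large = merged[merged["amount"] >= 20]

# Grouping: total amount per region.
totals = large.groupby("region")["amount"].sum()

print(totals)
```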