How to build a Transformer in Python

Here's an overview of how to build a Transformer model in Python using the popular Hugging Face library, Transformers:

What is a Transformer?

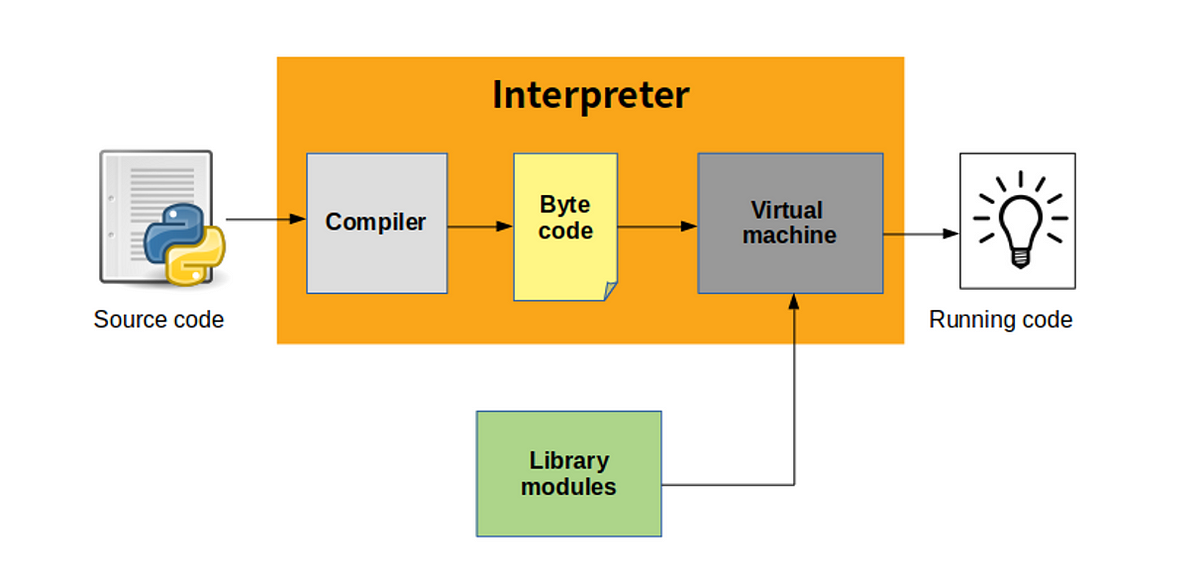

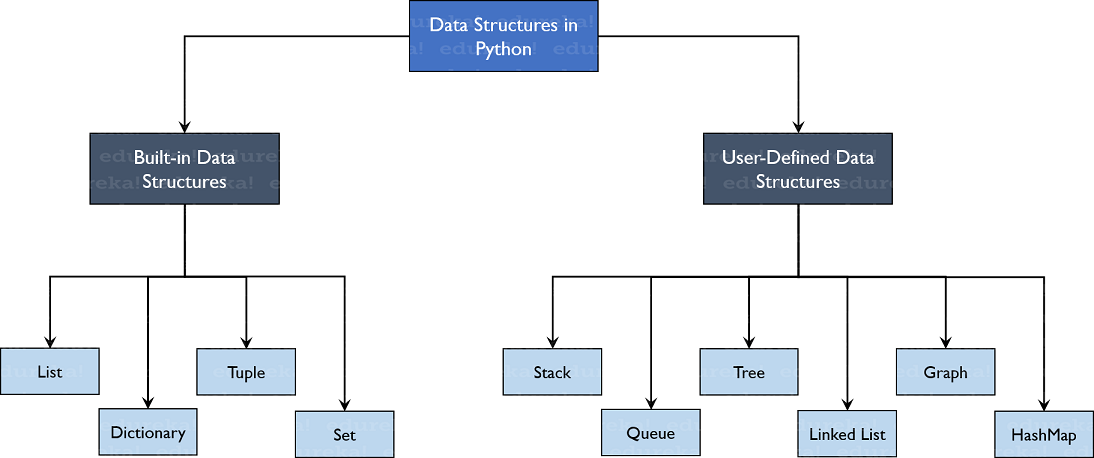

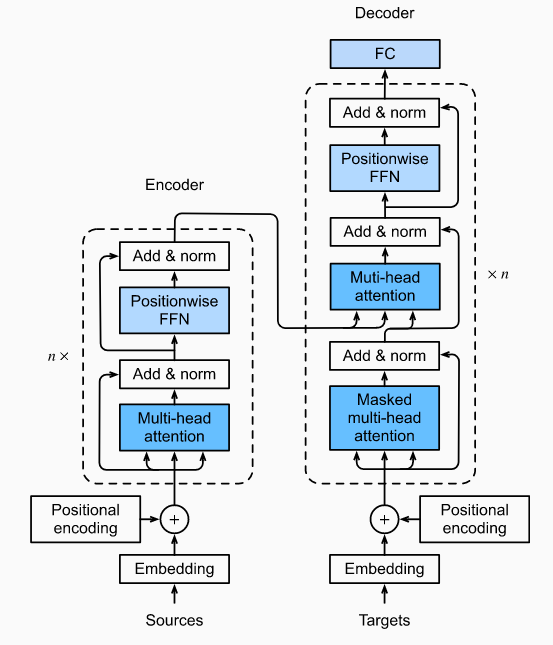

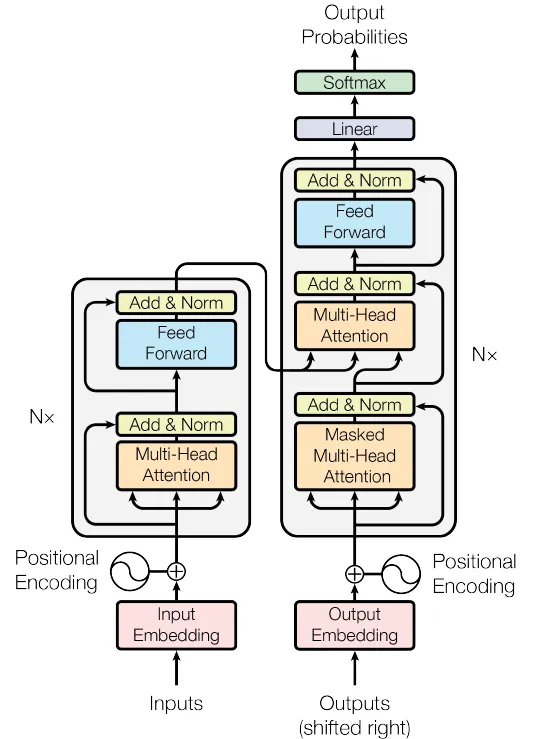

Before we dive into building one, let's briefly explain what a Transformer is. A Transformer is a neural network architecture that uses self-attention to process input sequences: each position computes a weighted combination of all positions in the sequence, so the model can learn relationships between any two parts of it. Recurrent Neural Networks (RNNs) process tokens one at a time. Transformers do not, so a whole sequence can be handled in parallel. Convolutional Neural Networks (CNNs) only see a local window in each layer, whereas a single attention layer connects distant positions directly. This makes Transformers well suited to tasks involving long-range dependencies.
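The self-attention step can be sketched in a few lines. This NumPy version computes scaled dot-product attention, `softmax(Q @ K.T / sqrt(d_k)) @ V`, for a single head. It is illustrative only. The function name and the boolean `mask` convention are choices made here, and the learned query/key/value projections of a real Transformer layer are omitted.

```python
import numpy as np


def scaled_dot_product_attention(q, k, v, mask=None):
    """Single-head attention: softmax(q @ k.T / sqrt(d_k)) @ v.

    q: (n_queries, d_k), k: (n_keys, d_k), v: (n_keys, d_v).
    mask: optional boolean (n_queries, n_keys); False entries are blocked.
    Returns (output, weights).
    """
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)
    if mask is not None:
        scores = np.where(mask, scores, -1e9)  # blocked positions get ~zero weight
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


# Self-attention: queries, keys and values all come from the same 4-token sequence.
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))
out, weights = scaled_dot_product_attention(x, x, x)
print(out.shape)             # (4, 8)
print(weights.sum(axis=-1))  # every row of attention weights sums to 1

# A causal (lower-triangular) mask lets position i attend only to positions <= i.
causal = np.tril(np.ones((4, 4), dtype=bool))
_, causal_weights = scaled_dot_product_attention(x, x, x, mask=causal)
print(np.round(causal_weights[0], 3))  # the first token can only attend to itself
```

All four positions are computed in one matrix product, which is the parallelism described above. The causal variant is what a decoder uses so that a position cannot look at later tokens.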

Building a Transformer in Python using Hugging Face:

To build a Transformer model in Python, we'll use the Hugging Face Transformers library. This library provides pre-trained models and an easy-to-use API to create your own custom models.

Here's a step-by-step guide:

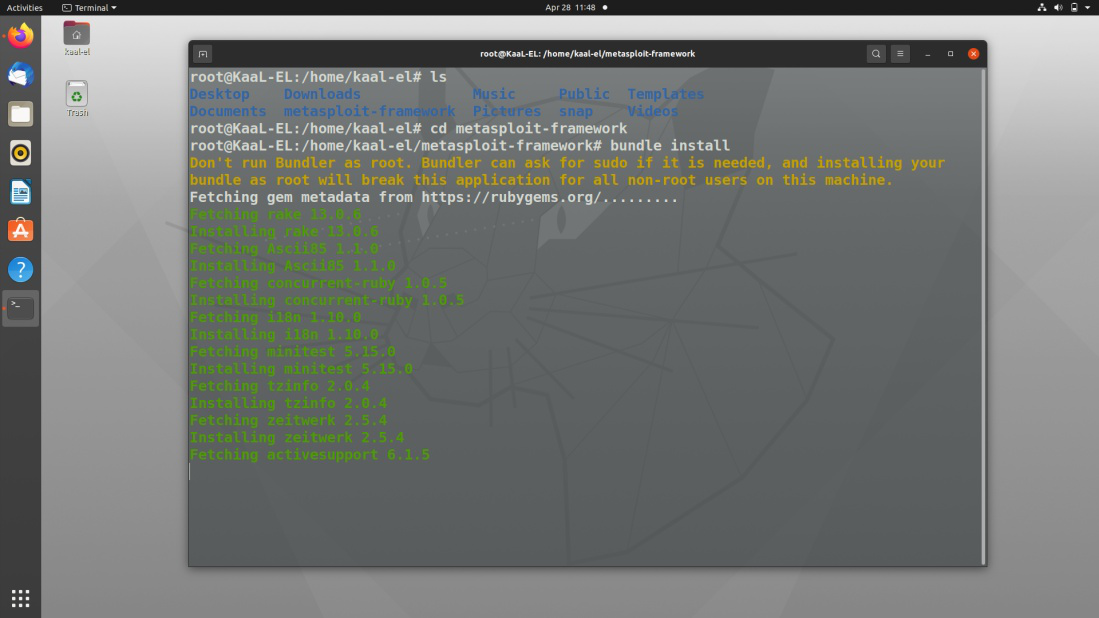

Install Hugging Face Transformers (the code below also uses PyTorch):

```
pip install transformers torch
```

```python
import torch
from transformers import AutoModelForSequenceClassification, AutoTokenizer

model_name = 'bert-base-uncased'
tokenizer = AutoTokenizer.from_pretrained(model_name)

# Loads pretrained BERT and adds a newly initialized classification head
model = AutoModelForSequenceClassification.from_pretrained(model_name, num_labels=8)  # Change num_labels based on your classification problem
```
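To see what the classification head adds, here is a NumPy sketch of the forward pass that `BertForSequenceClassification` performs on top of the encoder. This is the class `AutoModelForSequenceClassification` resolves to for `bert-base-uncased`. BERT's pooler (a dense layer followed by tanh) is applied to the final hidden state of the `[CLS]` token, then dropout and a linear layer with `num_labels` outputs. Random weights stand in for the real ones, and the encoder output is simulated. Dropout is omitted because it is inactive at inference time. The hidden size of 768 matches `bert-base-uncased`.

```python
import numpy as np

hidden_size, num_labels, batch_size, seq_len = 768, 8, 2, 16
rng = np.random.default_rng(0)

# Simulated encoder output (last hidden states): (batch, seq_len, hidden_size)
last_hidden_state = rng.normal(size=(batch_size, seq_len, hidden_size))

# Pooler: dense layer + tanh on the [CLS] token, which sits at position 0
W_pool = rng.normal(scale=0.02, size=(hidden_size, hidden_size))
b_pool = np.zeros(hidden_size)
pooled = np.tanh(last_hidden_state[:, 0, :] @ W_pool + b_pool)

# Classification head: one linear layer mapping to num_labels logits
W_cls = rng.normal(scale=0.02, size=(hidden_size, num_labels))
b_cls = np.zeros(num_labels)
logits = pooled @ W_cls + b_cls
print(logits.shape)  # (2, 8): one score per label for each sentence
```

In the real model, the pooler weights come from pretraining and only the final linear layer starts from scratch. This is why fine-tuning with a small learning rate works well.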

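Let's say we have a list of sentences and corresponding integer labels (the two examples below are placeholders). We tokenize each sentence, padding or truncating it to a fixed length:

```python
# Placeholder data: replace with your own sentences and labels in [0, num_labels)
sentences = ["I loved this film.", "The service was terribly slow."]
labels = [3, 5]

input_ids = []
attention_masks = []

for sentence in sentences:
    inputs = tokenizer(
        sentence,
        add_special_tokens=True,   # add [CLS] and [SEP]
        max_length=512,
        padding='max_length',      # replaces the deprecated pad_to_max_length=True
        truncation=True,
        return_attention_mask=True,
    )
    input_ids.append(inputs['input_ids'])
    attention_masks.append(inputs['attention_mask'])
```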
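The effect of `padding='max_length'`, `truncation=True`, and the attention mask can be illustrated without the library. Below is a plain-Python sketch in which `pad_and_mask` is a hypothetical helper. The pad ID is assumed to be 0 (it is `[PAD]` in the `bert-base-uncased` vocabulary). Unlike the real tokenizer, this naive truncation does not preserve the trailing `[SEP]` token.

```python
def pad_and_mask(token_ids, max_length, pad_id=0):
    """Truncate or right-pad a list of token IDs to max_length.

    Returns (input_ids, attention_mask): the mask is 1 for real tokens and
    0 for padding, so attention layers ignore the padded positions.
    """
    ids = token_ids[:max_length]          # truncation
    mask = [1] * len(ids)
    padding = max_length - len(ids)
    return ids + [pad_id] * padding, mask + [0] * padding


# Illustrative IDs for "[CLS] hello world [SEP]" (101 = [CLS], 102 = [SEP] in BERT)
ids, mask = pad_and_mask([101, 7592, 2088, 102], max_length=8)
print(ids)   # [101, 7592, 2088, 102, 0, 0, 0, 0]
print(mask)  # [1, 1, 1, 1, 0, 0, 0, 0]
```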

We'll create a custom dataset class to load our data:

```python
class CustomDataset(torch.utils.data.Dataset):
    """Serves one tokenized example (token IDs, attention mask, label) per index."""

    def __init__(self, input_ids, attention_masks, labels):
        self.input_ids = input_ids
        self.attention_masks = attention_masks
        self.labels = labels

    def __len__(self):
        return len(self.labels)

    def __getitem__(self, idx):
        return {
            'input_ids': torch.tensor(self.input_ids[idx]),
            'attention_mask': torch.tensor(self.attention_masks[idx]),
            'labels': torch.tensor(self.labels[idx]),  # Python int -> int64, as CrossEntropyLoss expects
        }


dataset = CustomDataset(input_ids, attention_masks, labels)
data_loader = torch.utils.data.DataLoader(dataset, batch_size=32, shuffle=True)
```

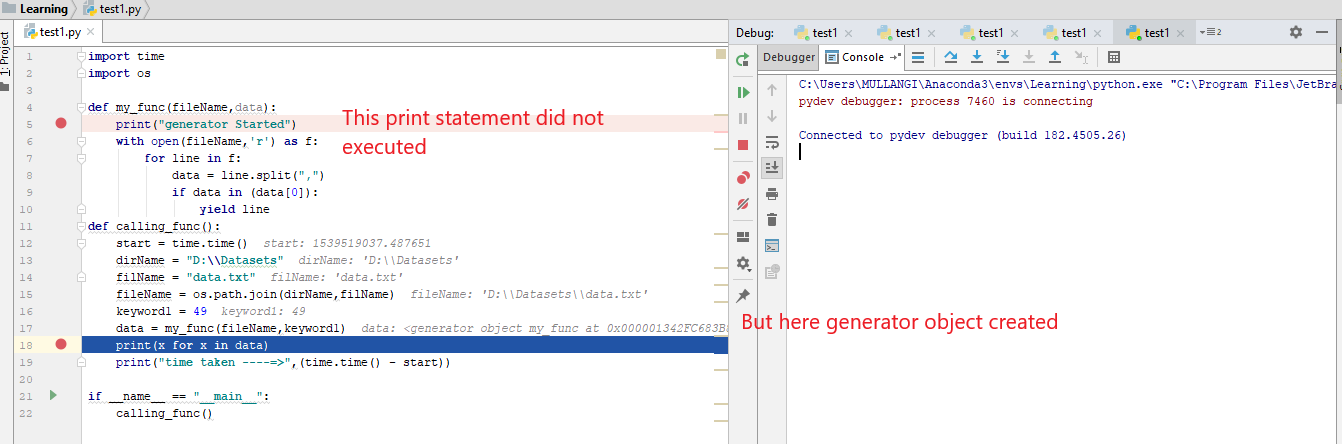

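We'll define a thin wrapper module whose forward pass takes the token IDs and attention mask and returns the classification logits (one score per label for each sentence). We then move it to the GPU if one is available and train it with cross-entropy loss:

```python
class TransformerClassifier(torch.nn.Module):
    """Wraps the pretrained sequence-classification model and returns its logits."""

    def __init__(self, model):
        super().__init__()
        self.model = model

    def forward(self, input_ids, attention_mask):
        outputs = self.model(input_ids=input_ids, attention_mask=attention_mask)
        # AutoModelForSequenceClassification already has a classification head,
        # so its logits have shape (batch_size, num_labels)
        return outputs.logits


device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
classifier = TransformerClassifier(model).to(device)

loss_fn = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(classifier.parameters(), lr=1e-5)

classifier.train()  # from_pretrained returns the model in eval mode; enable dropout for training
for epoch in range(5):
    for batch in data_loader:
        input_ids = batch['input_ids'].to(device)
        attention_mask = batch['attention_mask'].to(device)
        labels = batch['labels'].to(device)

        optimizer.zero_grad()
        logits = classifier(input_ids, attention_mask)
        loss = loss_fn(logits, labels)
        loss.backward()
        optimizer.step()

    print(f"Epoch {epoch + 1}: last batch loss = {loss.item():.4f}")

print("Training complete!")
```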
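`torch.nn.CrossEntropyLoss` expects raw logits and integer class labels. It applies log-softmax internally and averages the negative log-probability of the correct class over the batch. Below is the same computation in NumPy, as a sketch for building intuition. The function name is illustrative and not part of PyTorch.

```python
import numpy as np


def cross_entropy_from_logits(logits, labels):
    """Mean of -log softmax(logits)[i, labels[i]] over the batch.

    logits: (batch_size, num_classes) raw scores; labels: (batch_size,) ints.
    """
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


logits = np.array([[2.0, 0.5, -1.0],
                   [0.0, 0.0, 0.0]])
labels = np.array([0, 2])
print(cross_entropy_from_logits(logits, labels))
```

A useful sanity check: with all-equal logits over C classes, the loss is log(C). An untrained 8-label classifier should therefore start near log(8) ≈ 2.08.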

That's it! You now have a pretrained Transformer (BERT) fine-tuned for sequence classification with the Hugging Face Transformers library. This is just a starting point, and you'll likely need to adapt the data pipeline, model choice, and training setup to your specific task and dataset.

Remember to experiment with different hyperparameters, architectures, and techniques to achieve better results.

How to build a Transformer in Python using a

Building a Transformer model in Python! Let's dive into the details.

The Transformer is a neural network architecture, originally designed for sequence-to-sequence (seq2seq) tasks, that has revolutionized natural language processing (NLP). It was introduced by Vaswani et al. in 2017 ("Attention Is All You Need") and has since become widely used in applications such as machine translation, text summarization, and question answering.

To build a Transformer model in Python, you'll need to follow these steps:

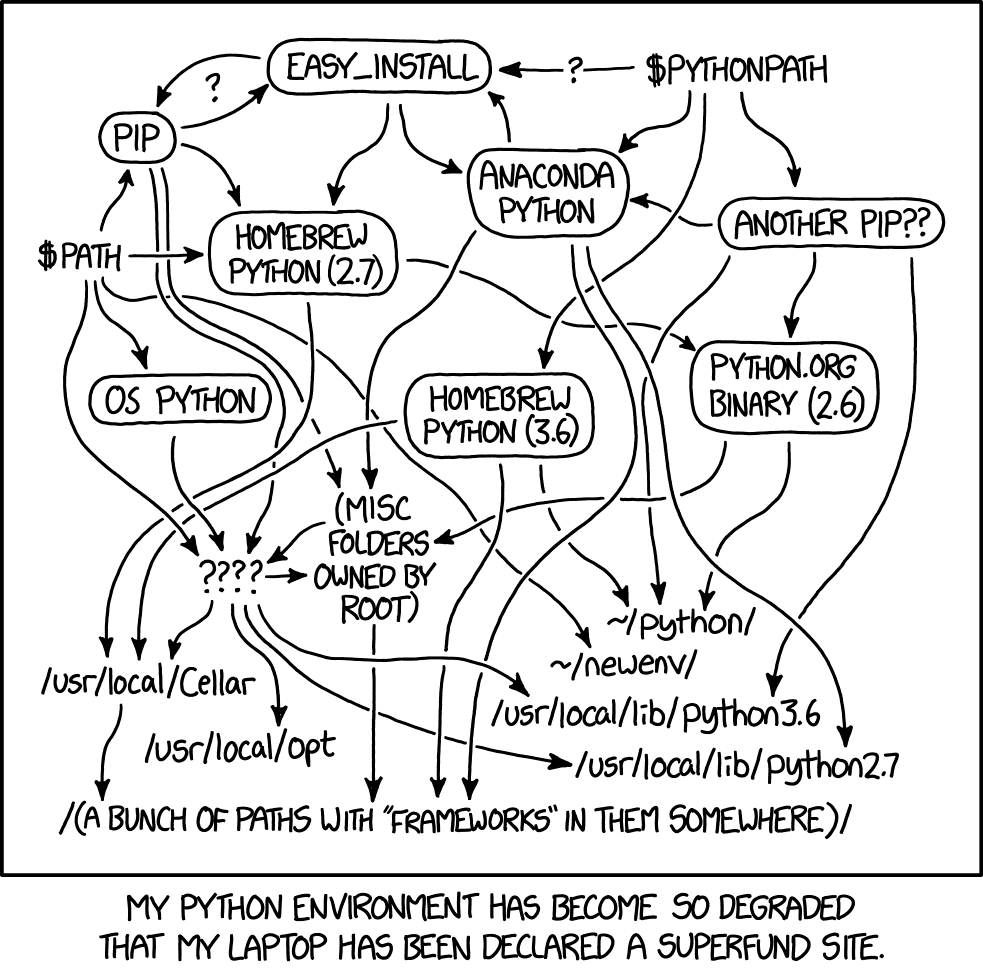

Step 1: Install the necessary libraries

You'll need the following libraries:

- TensorFlow (TF) or PyTorch for building the model (the code below uses TensorFlow)
- NumPy and Pandas for data manipulation
- OpenNMT-py (optional), a PyTorch-based toolkit with ready-made sequence-to-sequence models

Install these libraries using pip:

```
pip install tensorflow numpy pandas opennmt-py
```

Step 2: Prepare your dataset

Transformers work best with large datasets. For this example, let's assume you have a simple seq2seq task like English-French translation. You can use the OpenSubtitles corpus or any other dataset of your choice.

Preprocess your data by converting everything to lowercase, tokenizing the text into subwords (for example with WordPiece), and building a vocabulary (a token-to-ID dictionary) for each language.
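WordPiece-style tokenizers split each word greedily into the longest subwords found in the vocabulary and mark word-internal pieces with `##`. Below is a toy sketch with a hand-written vocabulary. The vocabulary, the `[UNK]` handling, and the helper names are illustrative. Real projects learn the vocabulary from the corpus, for example with the Hugging Face `tokenizers` library or SentencePiece, and also split off punctuation.

```python
def wordpiece_tokenize(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first split of one word into vocabulary subwords."""
    pieces, start = [], 0
    while start < len(word):
        end, piece = len(word), None
        while start < end:
            # Pieces after the first one in a word carry the "##" continuation prefix
            candidate = word[start:end] if start == 0 else "##" + word[start:end]
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:  # no subword matches: treat the whole word as unknown
            return [unk]
        pieces.append(piece)
        start = end
    return pieces


def encode(sentence, vocab):
    """Lowercase, split on whitespace, tokenize, and map tokens to integer IDs."""
    tokens = [p for w in sentence.lower().split() for p in wordpiece_tokenize(w, vocab)]
    return tokens, [vocab[t] for t in tokens]


# One dictionary per language; this toy English one maps token -> ID.
en_vocab = {tok: i for i, tok in enumerate(
    ["[PAD]", "[UNK]", "the", "cat", "play", "##ing", "##s", "un", "##believ", "##able"])}
tokens, ids = encode("The cat playing unbelievable", en_vocab)
print(tokens)  # ['the', 'cat', 'play', '##ing', 'un', '##believ', '##able']
print(ids)     # [2, 3, 4, 5, 7, 8, 9]
```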

Step 3: Define your Transformer model architecture

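For this example, we'll build a basic encoder-decoder Transformer with one encoder layer and one decoder layer, using Keras's built-in `MultiHeadAttention` layer. To keep it short, it uses learned position embeddings (so `max_len` must cover your longest sequence) and omits padding masks, dropout, and stacked layers. You can modify it as needed:

```python
import tensorflow as tf


class TransformerModel(tf.keras.Model):
    """One encoder layer + one decoder layer; all sizes are illustrative."""

    def __init__(self, src_vocab, tgt_vocab, d_model=256, num_heads=8, ff_dim=1024, max_len=128):
        super().__init__()
        key_dim = d_model // num_heads
        # Token embeddings for each language, plus learned position embeddings
        self.src_embed = tf.keras.layers.Embedding(src_vocab, d_model)
        self.tgt_embed = tf.keras.layers.Embedding(tgt_vocab, d_model)
        self.pos_embed = tf.keras.layers.Embedding(max_len, d_model)
        # Encoder: self-attention, then a position-wise feed-forward network
        self.enc_attn = tf.keras.layers.MultiHeadAttention(num_heads, key_dim)
        self.enc_ffn = tf.keras.Sequential([tf.keras.layers.Dense(ff_dim, activation='relu'),
                                            tf.keras.layers.Dense(d_model)])
        # Decoder: masked self-attention, cross-attention to the encoder, feed-forward
        self.dec_self_attn = tf.keras.layers.MultiHeadAttention(num_heads, key_dim)
        self.dec_cross_attn = tf.keras.layers.MultiHeadAttention(num_heads, key_dim)
        self.dec_ffn = tf.keras.Sequential([tf.keras.layers.Dense(ff_dim, activation='relu'),
                                            tf.keras.layers.Dense(d_model)])
        self.norms = [tf.keras.layers.LayerNormalization() for _ in range(5)]
        self.to_logits = tf.keras.layers.Dense(tgt_vocab)  # scores over the target vocabulary

    def add_positions(self, token_embeddings):
        positions = tf.range(tf.shape(token_embeddings)[1])
        return token_embeddings + self.pos_embed(positions)

    def call(self, inputs):
        src_ids, tgt_ids = inputs  # integer token IDs, each of shape (batch, seq_len)
        # Encoder: residual connection + layer norm around each sub-layer
        x = self.add_positions(self.src_embed(src_ids))
        x = self.norms[0](x + self.enc_attn(x, x))
        x = self.norms[1](x + self.enc_ffn(x))
        # Decoder: the causal mask stops each position from attending to later ones
        y = self.add_positions(self.tgt_embed(tgt_ids))
        y = self.norms[2](y + self.dec_self_attn(y, y, use_causal_mask=True))
        y = self.norms[3](y + self.dec_cross_attn(y, x))  # queries: decoder; keys/values: encoder
        y = self.norms[4](y + self.dec_ffn(y))
        return self.to_logits(y)


# Vocabulary sizes are placeholders; use the sizes of your dictionaries from Step 2
model = TransformerModel(src_vocab=8000, tgt_vocab=8000)
```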
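Attention by itself ignores word order, so Transformers add position information to the token embeddings. The original paper uses fixed sinusoids: PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). The NumPy sketch below implements these formulas and assumes an even `d_model`. The function name is illustrative. Its output is added element-wise to the token embeddings before the first encoder or decoder layer.

```python
import numpy as np


def sinusoidal_positional_encoding(max_len, d_model):
    """Return a (max_len, d_model) array of fixed sinusoidal position encodings."""
    positions = np.arange(max_len)[:, np.newaxis]                # (max_len, 1)
    even_dims = np.arange(0, d_model, 2)[np.newaxis, :]          # values of 2i
    angles = positions / np.power(10000.0, even_dims / d_model)  # (max_len, d_model / 2)
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angles)  # even dimensions
    pe[:, 1::2] = np.cos(angles)  # odd dimensions
    return pe


pe = sinusoidal_positional_encoding(max_len=50, d_model=16)
print(pe.shape)   # (50, 16)
print(pe[0, :4])  # position 0 -> [0. 1. 0. 1.]
```

Unlike learned position embeddings, these encodings have no trainable parameters and can be computed for any position.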

Step 4: Compile your model

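Compile the model with the Adam optimizer and sparse categorical cross-entropy. This is the standard loss when each target position is an integer token ID, whereas binary cross-entropy only suits two-class or multi-label outputs. An accuracy metric is added so the evaluation step can report it:

```python
model.compile(
    optimizer='adam',
    # from_logits=True assumes the model's final layer applies no softmax
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=['accuracy'],
)
```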

Step 5: Train your model

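Train the model on your preprocessed dataset using the `fit` method. Here `train_data` and `test_data` stand for the batched datasets from Step 2 (ideally, validate on a separate split rather than the test set). An `EarlyStopping` callback halts training once the validation loss stops improving, which helps prevent overfitting:

```python
early_stopping = tf.keras.callbacks.EarlyStopping(
    monitor='val_loss', patience=2, restore_best_weights=True)

history = model.fit(train_data, epochs=10, validation_data=test_data,
                    callbacks=[early_stopping])
```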
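A seq2seq decoder is usually trained with teacher forcing. It receives the target sentence shifted right by one position, starting with a start token, and must predict the unshifted sentence, ending with an end token. The sketch below builds one such pair. The helper `make_decoder_pair` is hypothetical. So are the start, end, and pad IDs (1, 2 and 0) and the fixed length.

```python
def make_decoder_pair(target_ids, max_len, start_id=1, end_id=2, pad_id=0):
    """Build (decoder_input, decoder_target) for teacher forcing.

    decoder_input  = [start] + target, right-padded to max_len
    decoder_target = target + [end],  right-padded to max_len
    With a causal mask, position t sees decoder_input[:t + 1] and must predict decoder_target[t].
    """
    target_ids = target_ids[: max_len - 1]  # leave room for the start/end token
    dec_in = [start_id] + target_ids
    dec_out = target_ids + [end_id]
    pad = [pad_id] * (max_len - len(dec_in))
    return dec_in + pad, dec_out + pad


# Hypothetical French token IDs for a three-token target sentence
dec_in, dec_out = make_decoder_pair([17, 42, 9], max_len=6)
print(dec_in)   # [1, 17, 42, 9, 0, 0]
print(dec_out)  # [17, 42, 9, 2, 0, 0]
```

Positions where the target is the pad ID carry no information, so they are normally excluded from the loss and the accuracy.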

Step 6: Evaluate your model

Evaluate your trained model on the held-out test set. For this example, we report the loss and the token-level accuracy (the share of target tokens predicted correctly):

```python
test_loss, test_acc = model.evaluate(test_data)
print(f"Test Accuracy: {test_acc:.2f}")
```
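Keras's `'accuracy'` here is token-level. Unless padding is masked (for example with sample weights or an `Embedding(mask_zero=True)` mask), it may also count padding positions, which inflates the number. The NumPy sketch below computes token accuracy over real tokens only. The pad ID of 0 and the function name are assumptions.

```python
import numpy as np


def masked_token_accuracy(logits, targets, pad_id=0):
    """Fraction of non-padding target tokens whose argmax prediction is correct.

    logits: (batch, seq_len, vocab_size); targets: (batch, seq_len) integer IDs.
    """
    predictions = logits.argmax(axis=-1)
    keep = targets != pad_id
    return float((predictions[keep] == targets[keep]).mean())


targets = np.array([[3, 2, 0],
                    [1, 3, 2]])    # 0 = padding
predicted = np.array([[3, 2, 0],
                      [1, 3, 1]])  # padding "predicted", last real token wrong
logits = np.eye(4)[predicted]      # one-hot stand-in for logits, shape (2, 3, 4)
print(masked_token_accuracy(logits, targets))  # 0.8 (4 of 5 real tokens)
print((predicted == targets).mean())           # counting padding gives 5/6
```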

That's it! You've successfully built a basic Transformer model in Python using TensorFlow.

Remember to adjust hyperparameters and fine-tune your model for better performance on your specific task. Happy learning!

Please note that this is a simplified example, and you may need to modify the code or add more layers/parameters depending on your specific use case.