Python rate limit multiprocessing example

Here is an example of using the rate_limited decorator from the python-rate-limiter library to implement rate limiting for a function that processes tasks concurrently using multiple processes.

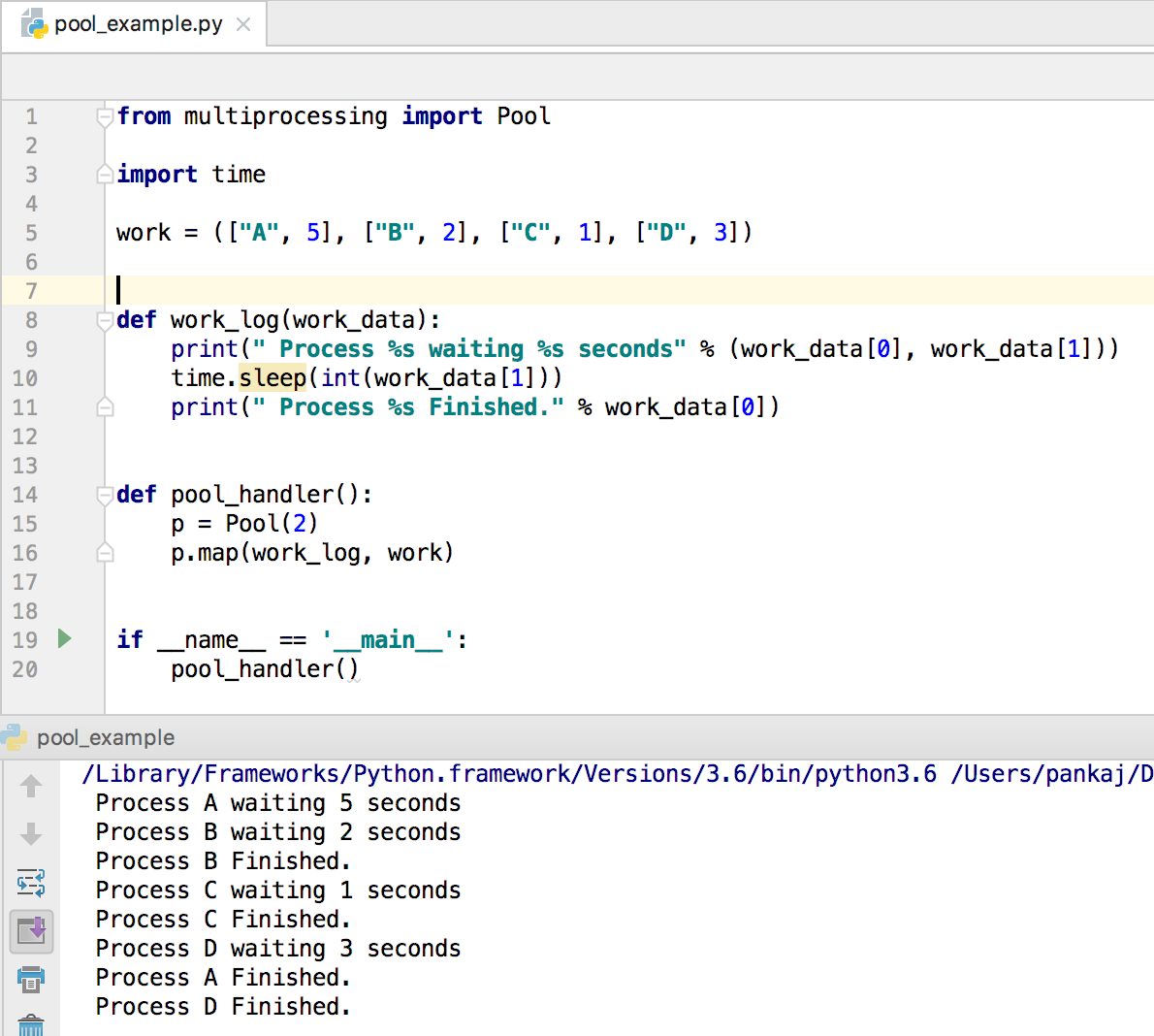

In this example, we have a task processing function that performs some operation and then waits for a certain amount of time before performing another operation. This simulates real-world scenarios where you may need to limit the number of requests or operations per second to prevent overwhelming a server or to comply with usage policies.

Here is an example:

    from rate_limited import rate_limited
    import multiprocessing
    import time

    @rate_limited(60, 1)  # Limit to 60 requests per minute
    def process_task():
        print("Processing task...")
        time.sleep(2)  # Simulate some processing time
        print("Task processed!")

    if __name__ == "__main__":
        processes = []
        # Start all 10 worker processes
        for i in range(10):
            p = multiprocessing.Process(target=process_task)
            processes.append(p)
            p.start()

        # Wait for every worker to finish
        for p in processes:
            p.join()

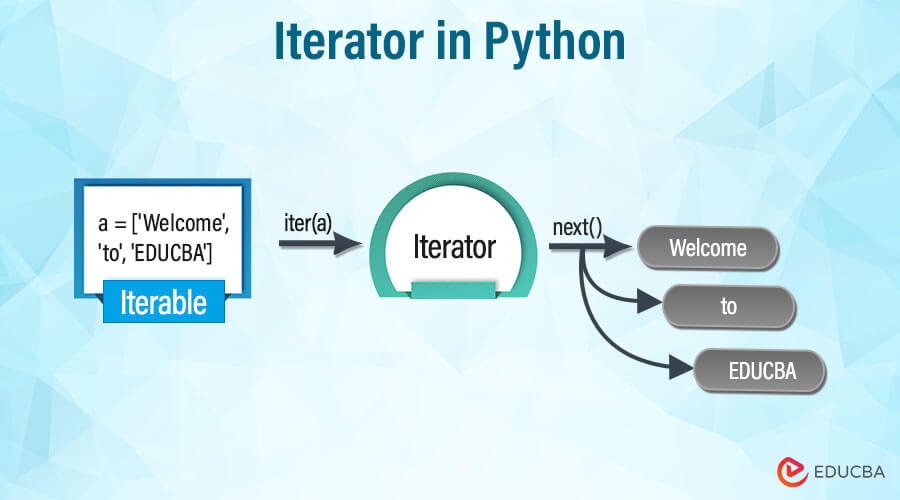

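In this example, the process_task function is decorated with @rate_limited(60, 1), which is meant to cap calls at 60 per minute. Note that each of the 10 processes calls the function only once, and a decorator that keeps its counters in ordinary Python memory tracks calls separately in every process. The cap is therefore only enforced across processes if the limiter stores its state somewhere shared.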
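The internals of the decorator are not shown in this article. As a rough illustration of how such a decorator could work, here is a minimal sliding-window sketch. The name `rate_limited`, the meaning of its arguments (maximum calls, period in seconds), and the `clock` and `sleep` parameters are assumptions made for this example, not the library's actual API.

```python
import functools
import time
from collections import deque


def rate_limited(max_calls, period, clock=time.monotonic, sleep=time.sleep):
    """Hypothetical decorator: allow at most max_calls per period (seconds)."""
    def decorator(func):
        calls = deque()  # start times of recent calls, oldest first

        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            while True:
                now = clock()
                # Forget calls that have left the time window.
                while calls and now - calls[0] >= period:
                    calls.popleft()
                if len(calls) < max_calls:
                    break
                # Window is full: wait until the oldest call expires.
                sleep(period - (now - calls[0]))
            calls.append(now)
            return func(*args, **kwargs)

        return wrapper
    return decorator


@rate_limited(60, 60)  # assumed meaning: 60 calls per 60 seconds
def process_task():
    return "Task processed!"
```

The `calls` deque lives in the memory of whichever process runs the wrapper, and the sketch is not thread-safe, so it only limits calls made from a single thread of a single process.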

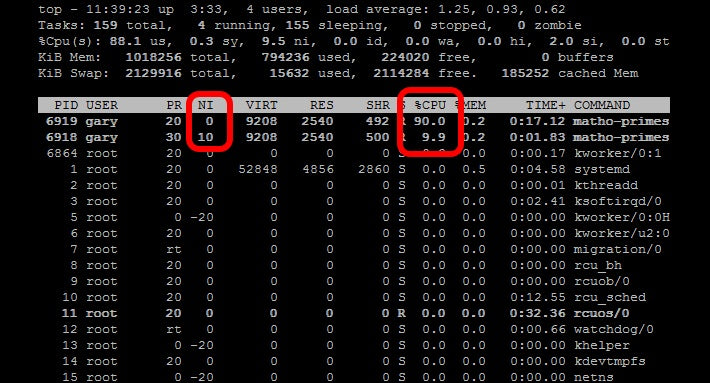

The multiprocessing library is used to create multiple processes that can run concurrently. Each process runs the process_task function. The main program starts all 10 processes first and only then calls join() on each one to wait for them to finish.

This example demonstrates how rate limiting can be used in a concurrent processing scenario, where you want to prevent overwhelming a server or system by making too many requests at once.
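Separate processes do not share ordinary Python objects, so a single limit across all workers needs state that every process can see. The following is a minimal sketch of one way to do that with `multiprocessing.Value`, assuming evenly spaced calls are acceptable. `SharedRateLimiter` and its `wait` method are hypothetical names introduced for illustration.

```python
import multiprocessing
import time


class SharedRateLimiter:
    """Hypothetical helper: spaces calls evenly across all processes."""

    def __init__(self, max_calls, period):
        self.interval = period / max_calls  # seconds between two calls
        # Shared double: earliest wall-clock time the next call may start.
        self.next_slot = multiprocessing.Value("d", 0.0)

    def wait(self):
        """Block until this caller's reserved slot, then return that slot."""
        with self.next_slot.get_lock():
            slot = max(time.time(), self.next_slot.value)
            self.next_slot.value = slot + self.interval
        # Sleep outside the lock so other processes can reserve their slots.
        time.sleep(max(0.0, slot - time.time()))
        return slot


def process_task(limiter, task_id):
    limiter.wait()  # every process draws from the same schedule
    print(f"Processing task {task_id} at {time.time():.2f}")


if __name__ == "__main__":
    limiter = SharedRateLimiter(max_calls=5, period=1.0)  # 5 calls per second
    processes = [
        multiprocessing.Process(target=process_task, args=(limiter, i))
        for i in range(10)
    ]
    for p in processes:
        p.start()
    for p in processes:
        p.join()
```

The limiter is passed to each worker as a `Process` argument, which is how shared `multiprocessing` objects are normally handed to child processes. The sketch uses wall-clock time, so a system clock adjustment during a run would shift the schedule.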

Here are some key benefits of using rate limiting in multiprocessing scenarios:

- Prevents overloading of servers or systems: By limiting the number of operations per second, you can prevent your application from overwhelming a server or system.
- Complies with usage policies: Many APIs and services have usage limits that must be respected. Rate limiting ensures that your application does not exceed these limits.
- Improves system stability: By reducing the load on a system, rate limiting can help improve overall system stability and performance.

In this example, we used the rate_limited decorator to limit the number of times the process_task function is called.


Python rate limit multiprocessing github

Rate Limiting in Python

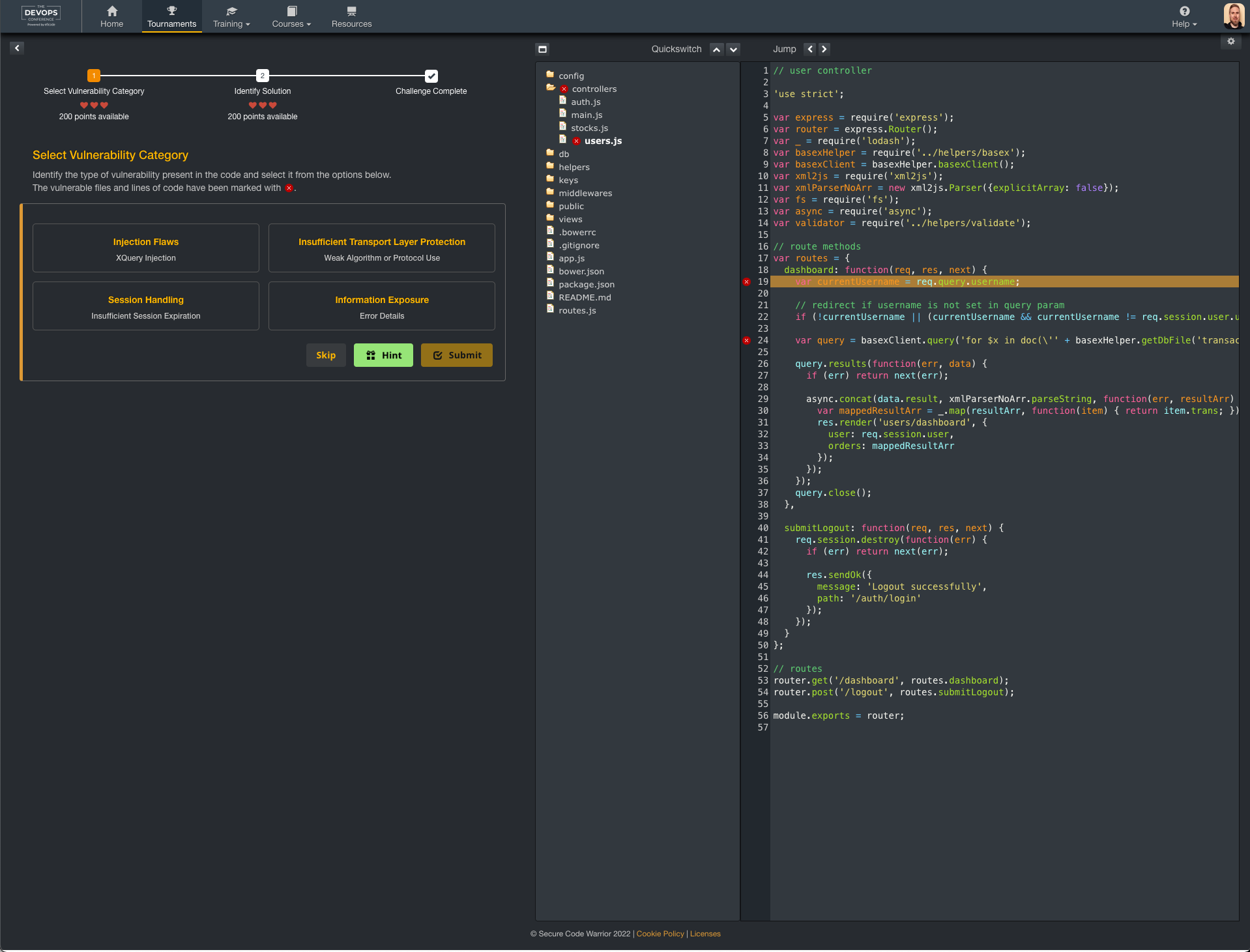

When developing applications that need to interact with external services, such as APIs or GitHub, it's essential to implement rate limiting mechanisms to prevent excessive requests and maintain a good reputation. In Python, you can achieve this using various libraries and techniques.

Why Rate Limiting is Important

Rate limiting helps prevent your application from overloading the targeted service, causing errors or even temporary downtime. For example:

- API calls: When making repeated requests to an API, rate limiting ensures that you don't exceed the allowed number of requests per minute (or hour), which can lead to account suspension.
- GitHub interactions: On GitHub, excessive requests for data or actions can trigger their rate limiting mechanisms, resulting in temporary bans.

Implementing Rate Limiting in Python

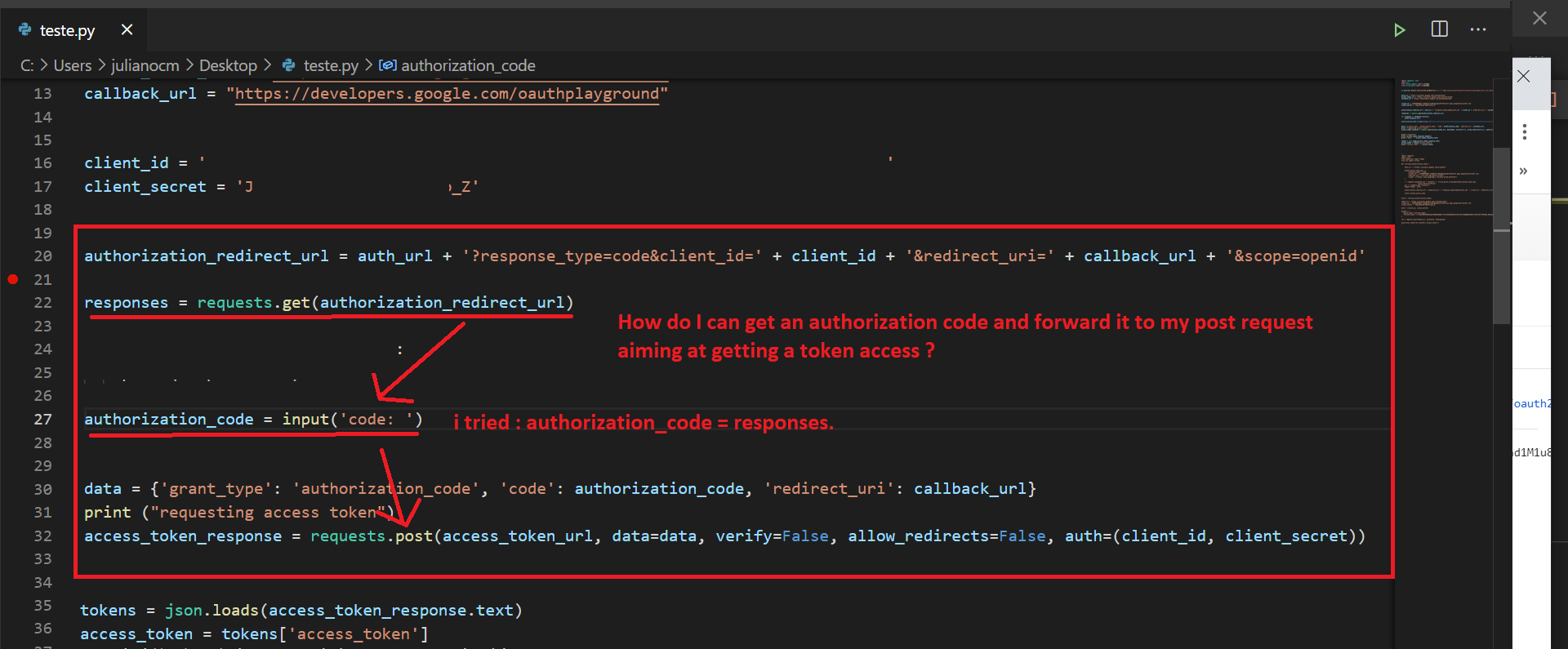

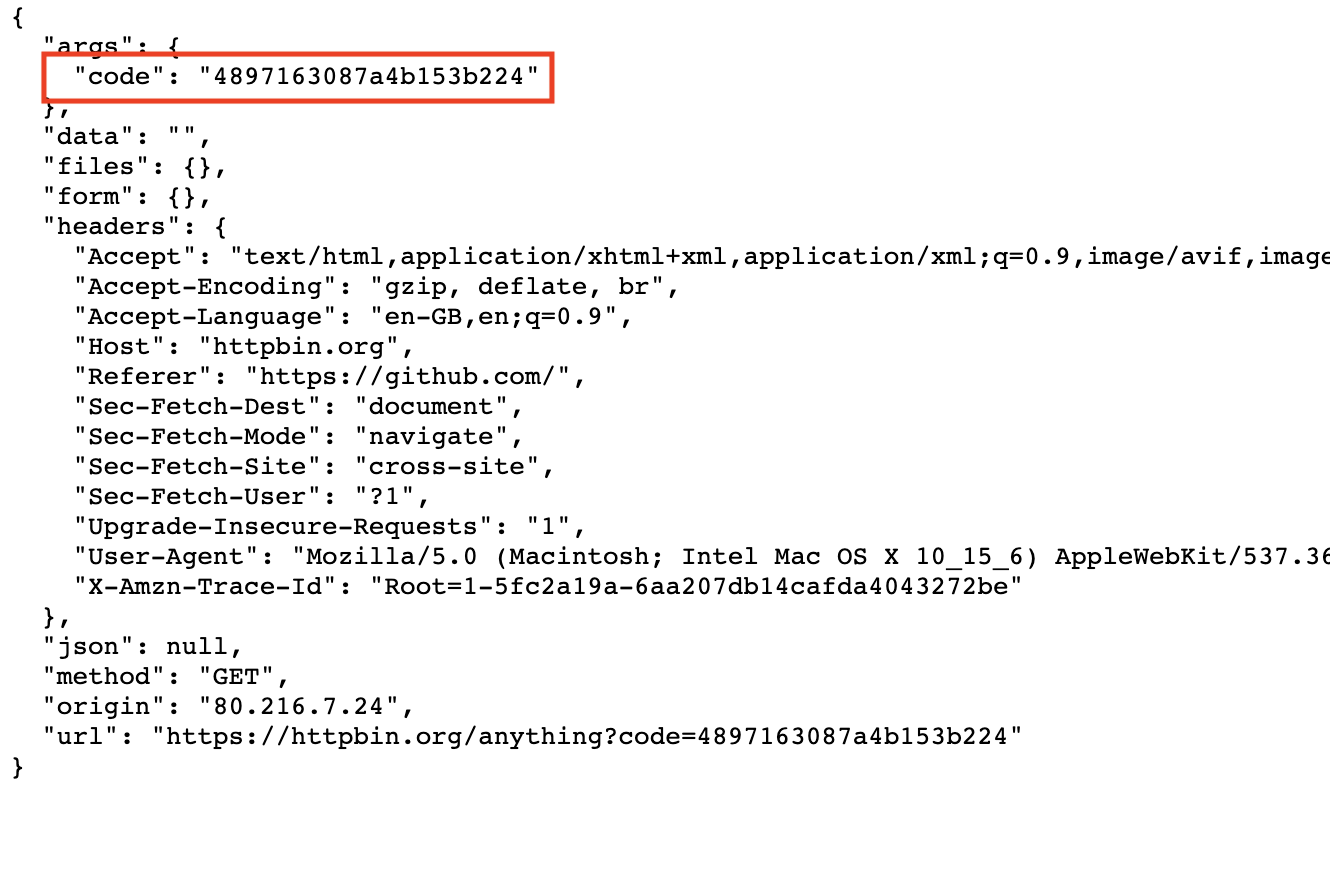

Using the requests library with rate limit headers: Many APIs report your current quota in response headers. These headers are set by the server, so sending them in a request has no effect; read them from the response instead. For example:

    import requests

    response = requests.get('https://api.example.com/data')

    # Header names vary between APIs; these two follow a common convention.
    limit = response.headers.get('X-RateLimit-Limit')          # e.g. '100' requests per interval
    remaining = response.headers.get('X-RateLimit-Remaining')  # e.g. '99' left in this interval
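Rate limit headers are normally sent by the server in its response, and a client can use them to decide how long to pause. The sketch below is one possible way to do that. The function name `seconds_to_wait` is hypothetical, and it assumes that `Retry-After` carries a number of seconds and that `X-RateLimit-Reset` is a Unix timestamp (the convention GitHub uses); other APIs may differ.

```python
import time


def seconds_to_wait(headers, now=None):
    """Return how long to pause before the next request, from response headers."""
    now = time.time() if now is None else now

    # Retry-After may also be an HTTP date; only the seconds form is handled here.
    retry_after = headers.get("Retry-After")
    if retry_after is not None and retry_after.isdigit():
        return float(retry_after)

    remaining = headers.get("X-RateLimit-Remaining")
    reset = headers.get("X-RateLimit-Reset")  # assumed: Unix timestamp in seconds
    if remaining is not None and reset is not None and int(remaining) == 0:
        return max(0.0, float(reset) - now)

    return 0.0  # quota left, or the server sent no usable headers
```

With requests, this could be called as `time.sleep(seconds_to_wait(response.headers))` before sending the next request.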

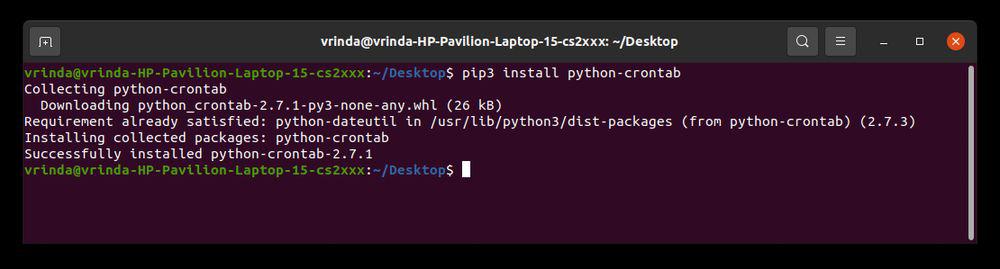

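pyrate library: pyrate is a Python library specifically designed for rate limiting and throttling. You can use it to set custom rates and intervals, and combine it with exponential backoff. The limiter has to be consulted before each request, because requests.get() does not accept a rate_limiter argument. The Limiter(100, 60) signature shown here is unverified and may differ between releases:

    import pyrate
    import requests

    rate_limiter = pyrate.Limiter(100, 60)  # 100 requests per minute (signature unverified)

    # Acquire from rate_limiter here before sending; the method name depends on the library version.
    response = requests.get('https://api.example.com/data')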

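asyncio and aiohttp: For asynchronous programming, you can use asyncio and aiohttp to send requests concurrently. aiohttp does not throttle requests by itself, so each worker has to pace its own calls, for example with asyncio.sleep():

    import asyncio
    import aiohttp

    async def fetch_data(session):
        for i in range(100):  # simulate 100 requests
            async with session.get('https://api.example.com/data') as response:
                await response.read()
            await asyncio.sleep(1)  # pace each worker to about one request per second

    async def main():
        # One shared session, 5 concurrent workers
        async with aiohttp.ClientSession() as session:
            await asyncio.gather(*[fetch_data(session) for _ in range(5)])

    asyncio.run(main())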
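When several coroutines share one quota, pacing each worker separately is not enough; they need a common limiter. The following is a minimal sketch of that idea using only asyncio. `AsyncIntervalLimiter`, `worker`, and the chosen rate are hypothetical, and the HTTP call is replaced by a list append so the example runs without a network.

```python
import asyncio


class AsyncIntervalLimiter:
    """Hypothetical helper: spaces calls evenly across all coroutines."""

    def __init__(self, max_calls, period):
        self.interval = period / max_calls  # seconds between two calls
        self._lock = asyncio.Lock()
        self._next_slot = 0.0

    async def wait(self):
        loop = asyncio.get_running_loop()
        # Reserve the next free slot, then sleep until it arrives.
        async with self._lock:
            slot = max(loop.time(), self._next_slot)
            self._next_slot = slot + self.interval
        await asyncio.sleep(max(0.0, slot - loop.time()))


async def worker(limiter, name, results):
    for i in range(3):
        await limiter.wait()
        results.append((name, i))  # a real worker would await session.get(...) here


async def main():
    limiter = AsyncIntervalLimiter(max_calls=50, period=1.0)  # 50 calls per second
    results = []
    await asyncio.gather(*(worker(limiter, n, results) for n in range(5)))
    return results


if __name__ == "__main__":
    print(len(asyncio.run(main())))
```

The limiter is created inside `main()` so that its lock belongs to the running event loop. All five workers draw from the same schedule, so the total rate stays at the configured value no matter how many workers are started.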

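Manual timing with the time module: Without any library, you can track the time of the last request yourself and only send a new one once the interval has elapsed:

    import time

    def make_request():
        pass  # placeholder: call the API or interact with GitHub here

    last_request_time = time.time()
    while True:
        current_time = time.time()
        if current_time - last_request_time > 60:  # 1 minute interval
            last_request_time = current_time
            make_request()
        time.sleep(1)  # avoid spinning the CPU between checks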

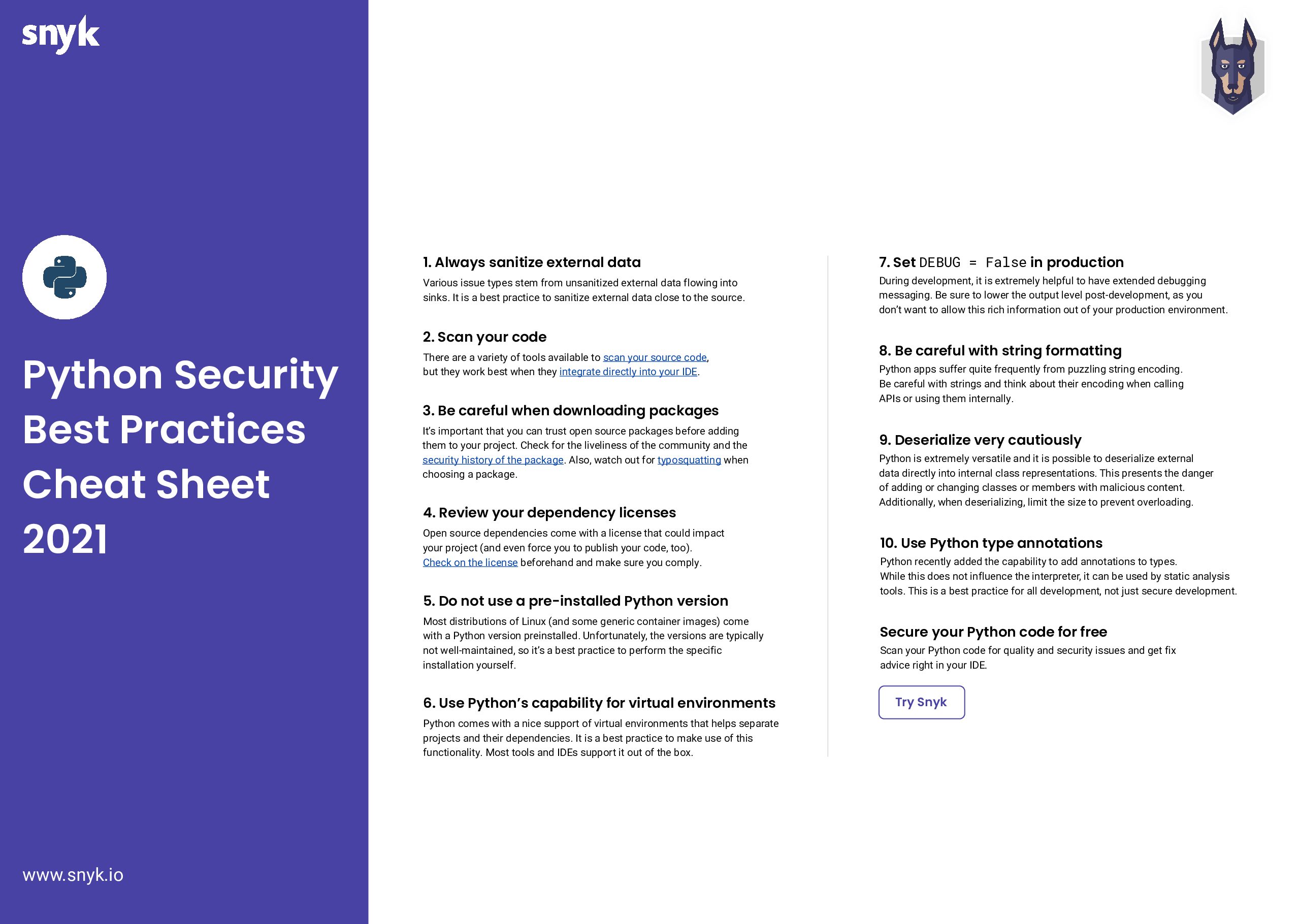

Best Practices

When implementing rate limiting in Python:

- Set realistic and reasonable rate limits to avoid unnecessary restrictions.
- Use a dedicated rate limiting library such as pyrate rather than ad hoc timers; aiohttp is an HTTP client and does not throttle requests on its own.
- Implement exponential backoff to handle temporary errors and rate limit responses.
- Monitor your application's performance and adjust rate limits as needed.
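Exponential backoff means waiting longer after each failed attempt, usually up to a cap. A minimal sketch follows, assuming the caller supplies the function to retry and a test for whether a result is retryable. `backoff_delays`, `call_with_backoff`, and all default values are hypothetical choices for illustration.

```python
import random
import time


def backoff_delays(base=1.0, factor=2.0, cap=60.0, retries=5):
    """Yield capped exponential delays: base, base*factor, base*factor**2, ..."""
    for attempt in range(retries):
        yield min(cap, base * factor ** attempt)


def call_with_backoff(func, is_retryable, sleep=time.sleep, **kwargs):
    """Call func until its result is not retryable or the delays run out."""
    for delay in backoff_delays(**kwargs):
        result = func()
        if not is_retryable(result):
            return result
        # Add a little random jitter so many clients do not retry in lockstep.
        sleep(delay + random.uniform(0, delay / 10))
    return func()  # final attempt after the last delay
```

A typical use would pass a function that sends one request and a check such as `lambda response: response.status_code == 429`.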

Conclusion

Rate limiting is an essential aspect of developing applications that interact with external services. Python provides various libraries and techniques to implement effective rate limiting mechanisms. By following best practices and using the right tools, you can ensure your application maintains a good reputation while avoiding excessive requests and errors.