recurrent neural network python code

I'd be happy to provide a Python implementation of a Recurrent Neural Network (RNN)!

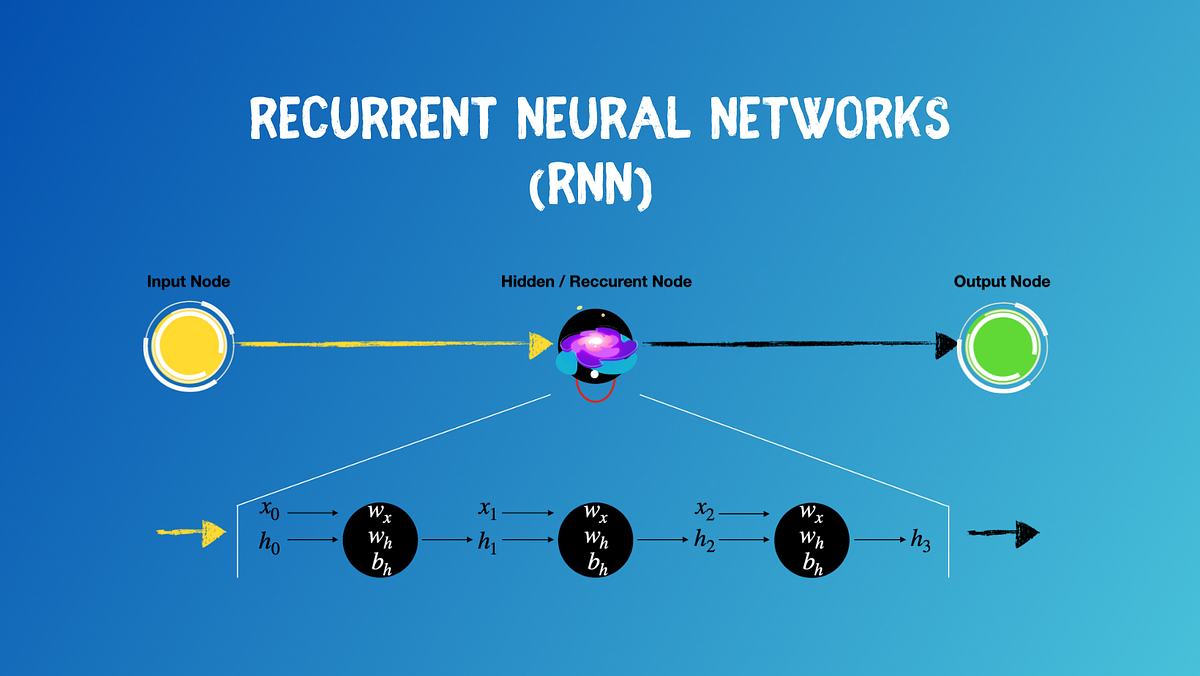

Before we dive into the code, let's briefly discuss what RNNs are and why they're important.

What is an RNN?

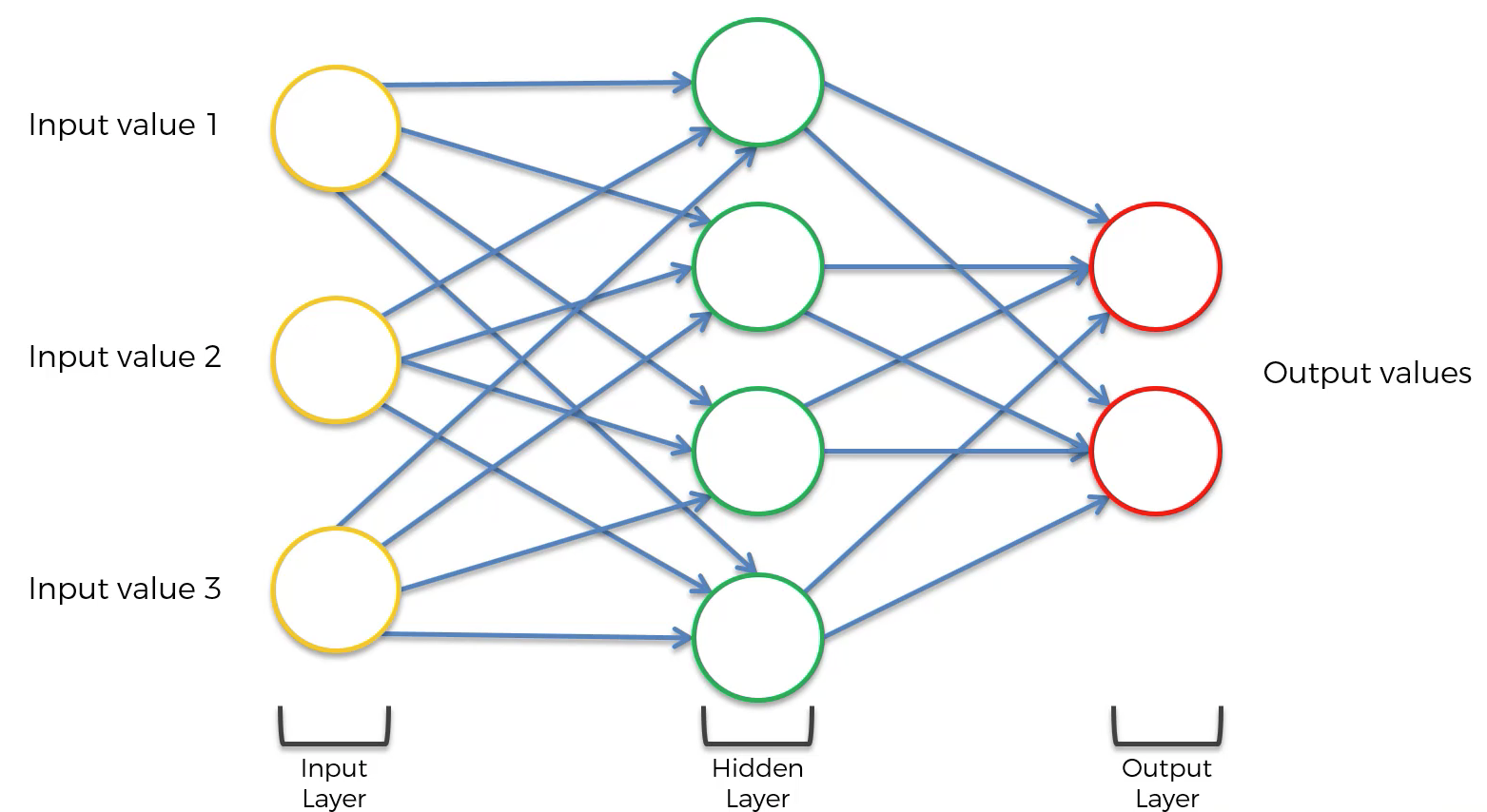

A Recurrent Neural Network is a type of neural network designed to handle sequential data, such as time series or text. Unlike feedforward networks (e.g., convolutional neural networks), which map each input to an output with no memory of earlier inputs, RNNs have feedback connections: the hidden state computed at one timestep is fed back in at the next, so information from past timesteps can influence later ones.
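To make the feedback connection concrete, here is a minimal NumPy sketch of a plain tanh RNN. The weight names (W_x, W_h, b), the sizes, and the random values are assumptions chosen for illustration; this is not how Keras implements its layers internally.

```python
import numpy as np

def rnn_forward(inputs, W_x, W_h, b):
    """Run a plain tanh RNN over one sequence.

    inputs: array of shape (timesteps, input_size)
    Returns the hidden state at every timestep, shape (timesteps, hidden_size).
    """
    hidden_size = W_h.shape[0]
    h = np.zeros(hidden_size)  # initial hidden state
    states = []
    for x_t in inputs:
        # Feedback connection: the new state depends on the current input
        # and on the previous hidden state.
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.stack(states)

# Illustrative sizes and random weights (assumptions, not trained values)
rng = np.random.default_rng(0)
input_size, hidden_size, timesteps = 3, 4, 5
W_x = rng.normal(scale=0.5, size=(hidden_size, input_size))
W_h = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b = np.zeros(hidden_size)

sequence = rng.normal(size=(timesteps, input_size))
states = rnn_forward(sequence, W_x, W_h, b)
print(states.shape)  # (5, 4)
```

The same weights are reused at every timestep; only the hidden state changes as the sequence is read.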

Why use RNNs?

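RNNs are useful for modeling sequential data because their hidden state carries context forward from earlier timesteps. Plain RNNs tend to lose information over long sequences because gradients vanish during training; gated variants such as the LSTM used in the code below were designed to capture longer-term dependencies.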
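How far back a plain RNN can "remember" is limited in practice. A rough NumPy sketch of why: the sensitivity of the current hidden state to the initial one is a product of per-step Jacobians, and that product tends to shrink as the sequence grows. The recurrent weights here are hypothetical and deliberately scaled to a spectral norm of 0.9, so the shrinkage is guaranteed in this toy setup.

```python
import numpy as np

rng = np.random.default_rng(0)
hidden_size = 8

# Hypothetical recurrent weights, rescaled so their spectral norm is 0.9
W_h = rng.normal(size=(hidden_size, hidden_size))
W_h *= 0.9 / np.linalg.norm(W_h, 2)

h = np.zeros(hidden_size)
jacobian = np.eye(hidden_size)  # d h_t / d h_0, starts as the identity
norms = []
for t in range(50):
    x_t = rng.normal(size=hidden_size)  # stand-in for the input contribution
    h = np.tanh(W_h @ h + x_t)
    # Chain rule: d h_t / d h_{t-1} = diag(1 - h_t^2) @ W_h
    jacobian = np.diag(1.0 - h ** 2) @ W_h @ jacobian
    norms.append(np.linalg.norm(jacobian, 2))

print(f"after 1 step: {norms[0]:.3f}, after 50 steps: {norms[-1]:.2e}")
```

With weights whose norm exceeds 1 the product can grow instead, which is the exploding-gradient side of the same problem.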

Now, let's get to the code!

Python Implementation of an RNN

We'll use the popular TensorFlow library and the Keras API to implement a simple RNN.

    import numpy as np
    from tensorflow.keras import Model
    from tensorflow.keras.layers import LSTM, Dense, Embedding

    # Define the RNN model
    class RNN(Model):
        def __init__(self, input_dim, hidden_dim):
            super().__init__()
            self.input_dim = input_dim
            self.hidden_dim = hidden_dim
            # Define the RNN layers
            self.embedding_layer = Embedding(input_dim=input_dim, output_dim=hidden_dim)
            self.lstm_layer = LSTM(units=hidden_dim, return_sequences=True)
            self.fc_layer = Dense(units=input_dim)

        def call(self, x):
            # Embed the input data
            embedded_x = self.embedding_layer(x)
            # Pass the embedded data through the RNN layers
            lstm_output = self.lstm_layer(embedded_x)
            # Apply a fully connected (dense) layer to the LSTM output
            fc_output = self.fc_layer(lstm_output)
            return fc_output

    # Define some example input and expected output data.
    # The Embedding layer expects integer IDs, so the input has shape (batch_size, sequence_length).
    input_data = np.random.randint(0, 128, size=(10, 50))
    output_data = np.random.rand(10, 50, 128)  # (batch_size, sequence_length, input_dim)

    # Create an instance of the RNN model
    model = RNN(input_dim=128, hidden_dim=256)

    # Compile the model with a loss function and optimizer
    model.compile(loss='mean_squared_error', optimizer='adam')

    # Train the model using the input and output data
    history = model.fit(input_data, output_data, epochs=10)

    # Use the trained model to make predictions on new input data
    new_input_data = np.random.randint(0, 128, size=(5, 50))
    predictions = model.predict(new_input_data)

This code defines an RNN model that takes in a batch of sequences of integer IDs (each ID in the range 0 to input_dim - 1, as the Embedding layer requires) and outputs, for every timestep, a vector of size input_dim. The model consists of three main layers:

Embedding layer: Maps each integer ID to a dense vector. Here input_dim is the vocabulary size and hidden_dim is the embedding dimension.

LSTM layer: Applies the Long Short-Term Memory algorithm to the embedded data, which allows it to capture long-term dependencies and context. Because return_sequences=True, it emits one output per timestep.

FC (Fully Connected) layer: Applies a fully connected transformation to each LSTM output, projecting it back to input_dim values.

The call method defines the forward pass through the RNN model. The example code trains the model using the input_data and output_data, and then uses the trained model to make predictions on new input data.
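For readers curious what the LSTM layer computes at each timestep, the following is a minimal NumPy sketch of a single LSTM step. The parameter names (W, U, b), the gate ordering inside the stacked matrices, and the random weights are assumptions made for this illustration; it is a sketch of the standard LSTM equations, not the Keras implementation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM timestep.

    W: (4 * units, input_size), U: (4 * units, units), b: (4 * units,)
    The four stacked blocks are, in this sketch's ordering:
    input gate, forget gate, candidate values, output gate.
    """
    units = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0 * units:1 * units])  # input gate: how much new content to write
    f = sigmoid(z[1 * units:2 * units])  # forget gate: how much old cell state to keep
    g = np.tanh(z[2 * units:3 * units])  # candidate cell values
    o = sigmoid(z[3 * units:4 * units])  # output gate: how much of the cell to expose
    c_t = f * c_prev + i * g             # updated cell state
    h_t = o * np.tanh(c_t)               # updated hidden state
    return h_t, c_t

# Illustrative sizes and random weights (assumptions, not trained values)
rng = np.random.default_rng(0)
input_size, units, timesteps = 6, 4, 10
W = rng.normal(scale=0.3, size=(4 * units, input_size))
U = rng.normal(scale=0.3, size=(4 * units, units))
b = np.zeros(4 * units)

h, c = np.zeros(units), np.zeros(units)
outputs = []
for x_t in rng.normal(size=(timesteps, input_size)):
    h, c = lstm_step(x_t, h, c, W, U, b)
    outputs.append(h)  # keeping every h mirrors return_sequences=True
outputs = np.stack(outputs)
print(outputs.shape)  # (10, 4)
```

The additive update of the cell state is what lets an LSTM carry information across more timesteps than a plain RNN.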

Note: This is just a basic implementation of an RNN in Python. There are many ways to improve this code, such as using batch normalization, adding more layers, or using more advanced optimization techniques.

I hope this helps! Let me know if you have any questions.

How to build a recurrent neural network in Python?

Building a Recurrent Neural Network (RNN) in Python can be achieved using various deep learning frameworks such as Keras, TensorFlow, and PyTorch. Here's an example of how you can create an RNN model using Keras:

Step 1: Install necessary libraries

Install TensorFlow (which installs Keras as a dependency) and the other packages the steps below import:

    pip install tensorflow numpy pandas scikit-learn matplotlib

Step 2: Load your dataset

Assuming you have a text dataset with one sentence per row in a text column, load the data into memory. The later steps train a binary classifier, so each row also needs a 0/1 label. For example, let's say you're working with movie subtitles:

    import pandas as pd

    # Load the dataset
    train_data = pd.read_csv('movie_train.csv')['text']
    test_data = pd.read_csv('movie_test.csv')['text']

Step 3: Preprocess your data

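Tokenize the text data. By default, the Keras Tokenizer lowercases the text and strips punctuation. It must be fitted on the training text before it can convert sentences to integer sequences. Note that Tokenizer is a legacy utility that newer Keras releases deprecate in favor of the TextVectorization layer, so this step assumes a Keras version that still ships it:

    from keras.preprocessing.text import Tokenizer
    from keras.preprocessing.sequence import pad_sequences

    tokenizer = Tokenizer(num_words=5000)
    # Build the vocabulary from the training text only
    tokenizer.fit_on_texts(train_data)

    train_sequences = tokenizer.texts_to_sequences(train_data)
    test_sequences = tokenizer.texts_to_sequences(test_data)

    # Pad with zeros (or truncate) so every sequence has exactly max_length tokens
    max_length = 200
    padded_train = pad_sequences(train_sequences, maxlen=max_length)
    padded_test = pad_sequences(test_sequences, maxlen=max_length)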
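To show what this preprocessing does to the text, here is a simplified pure-Python sketch of the same idea: build a frequency-ranked word index, map words to IDs, then left-pad or left-truncate to a fixed length. The helper names (build_word_index, texts_to_ids, pad_left) are hypothetical and this is not the Keras implementation; it skips punctuation filtering and the num_words cutoff.

```python
from collections import Counter

def build_word_index(texts):
    """Map words to integer IDs, most frequent first. ID 0 is reserved for padding."""
    counts = Counter(word for text in texts for word in text.lower().split())
    ranked = [word for word, _ in counts.most_common()]
    return {word: i + 1 for i, word in enumerate(ranked)}

def texts_to_ids(texts, word_index):
    """Replace each known word by its ID; unknown words are dropped."""
    return [[word_index[w] for w in text.lower().split() if w in word_index]
            for text in texts]

def pad_left(sequences, maxlen, value=0):
    """Left-pad short sequences with `value`; keep only the last `maxlen` IDs of long ones."""
    padded = []
    for seq in sequences:
        seq = seq[-maxlen:]
        padded.append([value] * (maxlen - len(seq)) + seq)
    return padded

texts = ["the movie was great", "the plot was thin", "great cast"]
word_index = build_word_index(texts)
ids = texts_to_ids(texts, word_index)
padded = pad_left(ids, maxlen=3)
print(padded)  # [[4, 2, 3], [5, 2, 6], [0, 3, 7]]
```

Padding and truncating on the left matches the default behavior of pad_sequences, which keeps the end of each sentence closest to the final timestep.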

Step 4: Define your RNN model

Use Keras' Sequential API to create a simple model with one recurrent (LSTM) layer. The LSTM returns only its final state, so the model produces one prediction per sentence:

    from keras.models import Sequential
    from keras.layers import LSTM, Dense, Embedding, Dropout

    # Define the model
    model = Sequential()
    model.add(Embedding(input_dim=len(tokenizer.word_index) + 1, output_dim=128))
    model.add(Dropout(0.2))  # Add some dropout for regularization
    model.add(LSTM(units=64))  # Recurrent layer with LSTM cells
    model.add(Dense(64, activation='relu'))  # Feedforward layer with ReLU activation
    model.add(Dense(1, activation='sigmoid'))  # Final output layer with sigmoid activation

    # Compile the model with a binary classification loss function
    model.compile(loss='binary_crossentropy',
                  optimizer='adam', metrics=['accuracy'])
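As a sanity check on model size, the number of trainable parameters in each layer can be worked out by hand. The sketch below uses the standard formulas for Embedding, LSTM (with biases), and Dense layers; the vocabulary size of 5000 is a hypothetical placeholder, since the real value depends on len(tokenizer.word_index) + 1 for your data.

```python
def embedding_params(vocab_size, output_dim):
    # One output_dim-sized vector per vocabulary entry
    return vocab_size * output_dim

def lstm_params(input_dim, units):
    # Four gate blocks, each with input weights, recurrent weights, and a bias
    return 4 * (input_dim * units + units * units + units)

def dense_params(input_dim, units):
    # Weight matrix plus bias vector
    return input_dim * units + units

vocab_size = 5000  # hypothetical; use len(tokenizer.word_index) + 1 in practice

layers = {
    "embedding": embedding_params(vocab_size, 128),
    "lstm": lstm_params(128, 64),
    "dense_relu": dense_params(64, 64),
    "dense_sigmoid": dense_params(64, 1),
}
for name, count in layers.items():
    print(f"{name}: {count}")
print("total:", sum(layers.values()))  # total: 693633
```

Dropout adds no parameters. With a vocabulary this size, the embedding table accounts for most of the model, which is one reason to cap the vocabulary.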

Step 5: Train your RNN

Split your dataset into training and validation sets:

    from sklearn.model_selection import train_test_split

    # Load the 0/1 labels. The 'label' column name is an assumption;
    # use whichever column holds the labels in your CSV.
    labels = pd.read_csv('movie_train.csv')['label'].values

    # Split sequences and labels together so they stay aligned
    train_sequences, val_sequences, train_labels, val_labels = train_test_split(
        padded_train, labels, test_size=0.2, random_state=42)

Train your model using the training data. With a single sigmoid output and binary cross-entropy, the labels stay as plain 0/1 values and need no one-hot encoding:

    model.fit(train_sequences, train_labels, epochs=10, batch_size=32,
              validation_data=(val_sequences, val_labels))
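The important detail when holding out validation data is that sequences and labels are shuffled with the same permutation. A minimal NumPy sketch of that idea follows; split_train_val is a hypothetical helper written for illustration and is not how scikit-learn implements train_test_split.

```python
import numpy as np

def split_train_val(sequences, labels, val_fraction=0.2, seed=42):
    """Shuffle once, then cut, so each sequence keeps its own label."""
    assert len(sequences) == len(labels)
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(sequences))
    n_val = int(round(len(sequences) * val_fraction))
    val_idx, train_idx = order[:n_val], order[n_val:]
    return (sequences[train_idx], sequences[val_idx],
            labels[train_idx], labels[val_idx])

# Toy data: row i starts with 4 * i, and its label is i % 2
sequences = np.arange(40).reshape(10, 4)
labels = np.arange(10) % 2

x_train, x_val, y_train, y_val = split_train_val(sequences, labels)
print(x_train.shape, x_val.shape)  # (8, 4) (2, 4)
```

Because the same index arrays select both the rows and the labels, the pairing survives the shuffle.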

Step 6: Evaluate and predict

Use the test dataset for evaluation and prediction:

    # Load the 0/1 test labels. The 'label' column name is an assumption; adjust it to your CSV.
    test_labels = pd.read_csv('movie_test.csv')['label'].values

    loss, accuracy = model.evaluate(padded_test, test_labels)
    print(f'Test loss: {loss:.3f}, Accuracy: {accuracy:.2%}')

    # Predict on a new sample
    new_sample = ['This is an example sentence']
    new_sequence = tokenizer.texts_to_sequences(new_sample)
    padded_new = pad_sequences(new_sequence, maxlen=max_length)
    prediction = model.predict(padded_new)[0][0]
    print(f'Prediction: {"positive" if prediction > 0.5 else "negative"}')
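The loss and accuracy reported during evaluation can be reproduced by hand from the sigmoid outputs. The following NumPy sketch computes binary cross-entropy and thresholded accuracy on made-up probabilities; the values in y_true and y_prob are invented for illustration and the function names are hypothetical.

```python
import numpy as np

def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean negative log-likelihood of the true labels under the predicted probabilities."""
    y_prob = np.clip(y_prob, eps, 1.0 - eps)  # avoid log(0)
    losses = -(y_true * np.log(y_prob) + (1 - y_true) * np.log(1 - y_prob))
    return float(np.mean(losses))

def accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of samples whose thresholded prediction matches the label."""
    y_pred = (y_prob > threshold).astype(int)
    return float(np.mean(y_pred == y_true))

y_true = np.array([1, 0, 1, 0])
y_prob = np.array([0.9, 0.2, 0.4, 0.6])  # made-up sigmoid outputs

print(f"loss: {binary_cross_entropy(y_true, y_prob):.3f}")
print(f"accuracy: {accuracy(y_true, y_prob):.2%}")  # accuracy: 50.00%
```

Accuracy only sees which side of the threshold a prediction falls on, while the loss also penalizes how confident a wrong prediction was.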

Note that this is a simplified example to illustrate the basics of building an RNN in Python using Keras. You may need to adjust hyperparameters, experiment with different architectures, and fine-tune your model for better performance.