How to make generative AI in Python?

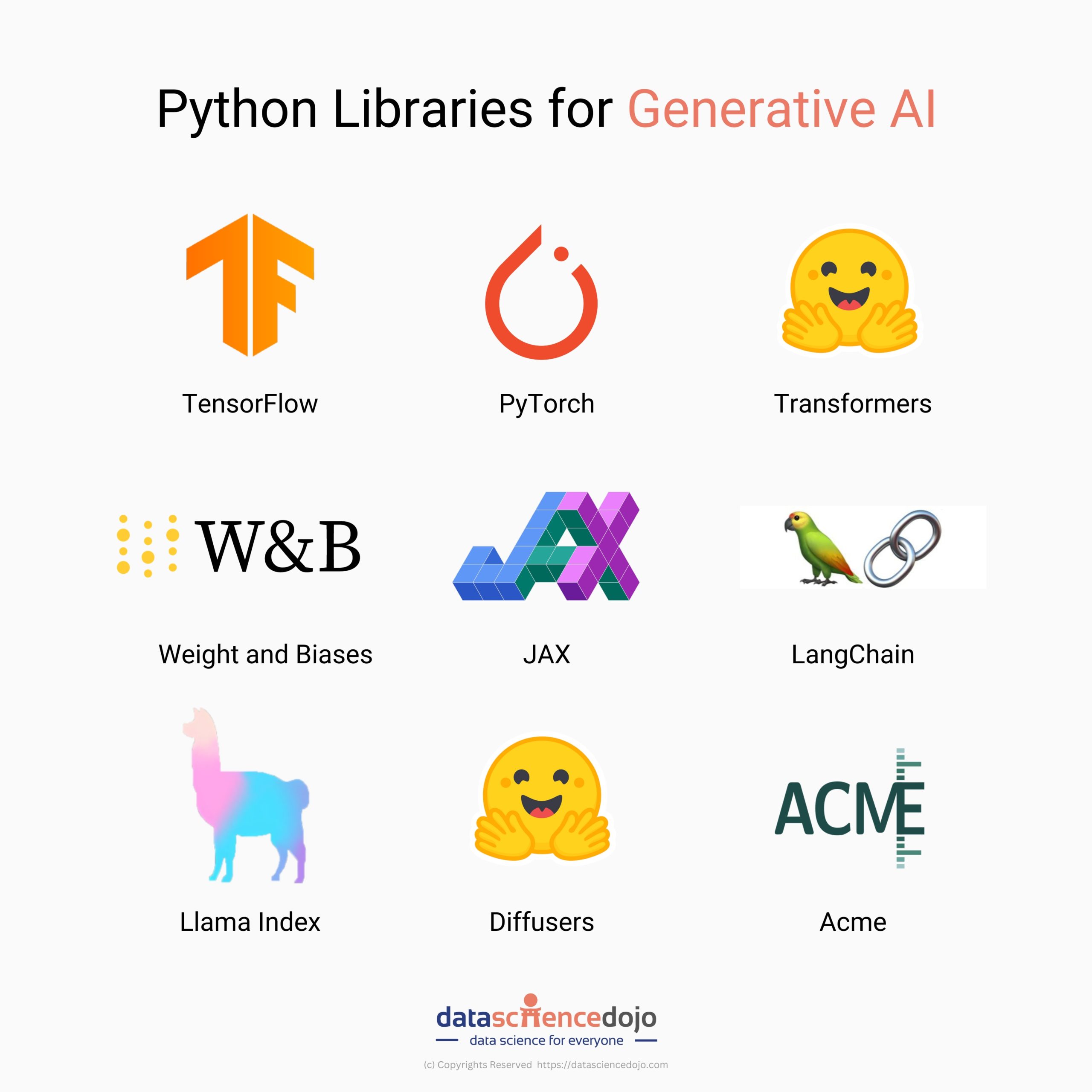

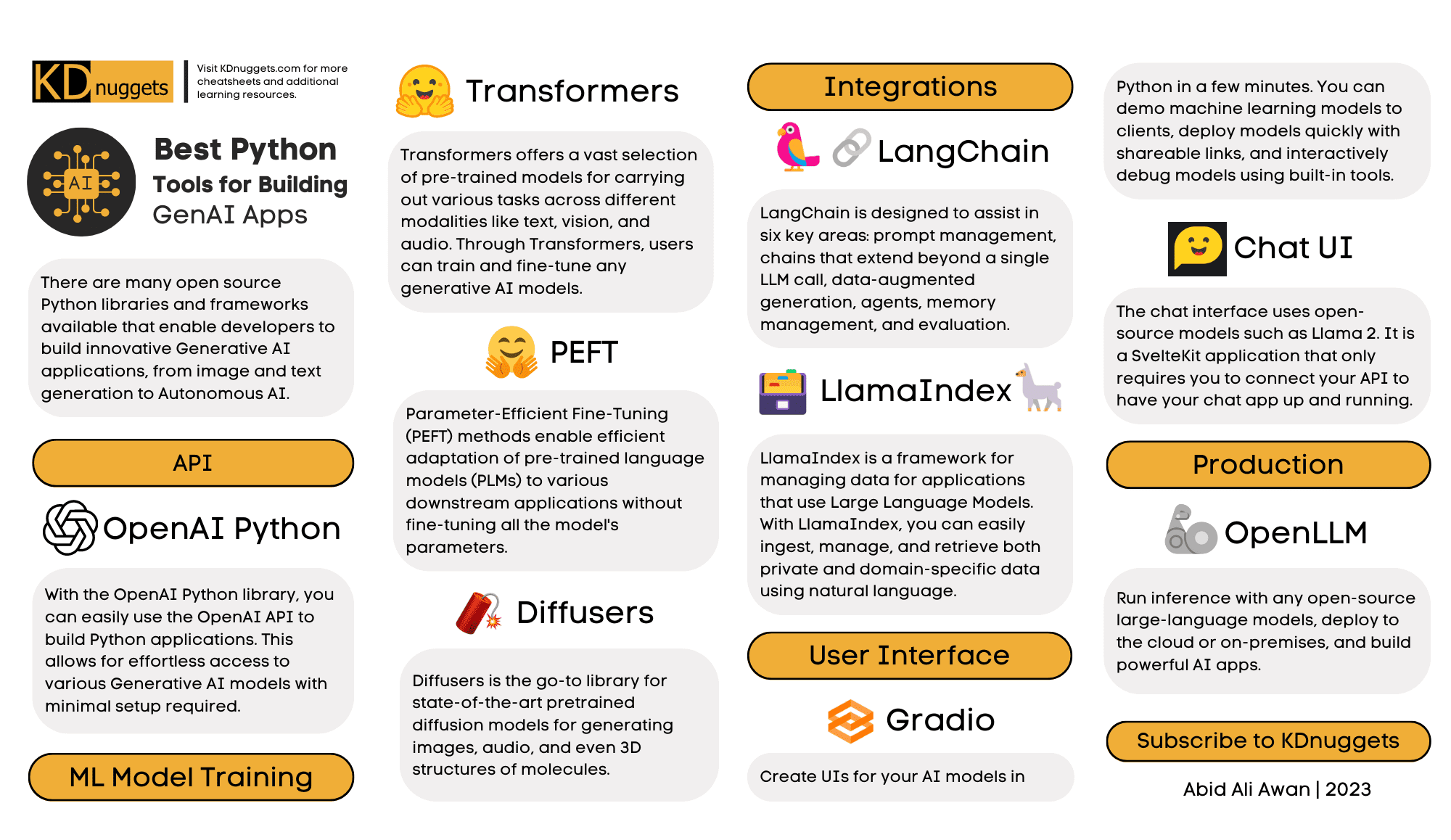

To create a generative AI model using Python, you'll need a deep learning library such as TensorFlow, PyTorch, or Keras. Here's an overview of how to build one:

Step 1: Choose a Task

Generative models are trained on a specific task, like generating text, images, or music. For this example, let's focus on text generation.

Step 2: Collect Data

Gather a dataset that contains the type of text you want your model to generate (e.g., articles, stories, or social media posts). Make sure the data is consistently formatted, then split it into training and testing sets (an 80/20 split is typical).
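As a minimal sketch of the 80/20 split mentioned above, the snippet below shuffles a list of documents and slices it. The helper name `train_test_split`, the `seed` parameter, and the toy corpus are assumptions made for illustration; libraries such as scikit-learn provide their own utilities for this.

```python
import random

def train_test_split(samples, test_fraction=0.2, seed=0):
    """Shuffle a copy of `samples` and split it into (train, test) lists."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical toy corpus standing in for real documents.
corpus = [f"document {i}" for i in range(10)]
train, test = train_test_split(corpus)
print(len(train), len(test))  # prints: 8 2
```

Shuffling before slicing matters when the raw data is ordered (for example by date or source), because otherwise the test set may not resemble the training set.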

Step 3: Preprocess Text Data

Clean and preprocess your text data. This includes:

- Tokenization: Break text into individual words (tokens) or subwords.
- Normalizing case: Convert all text to lowercase or uppercase for consistency.
- Removing punctuation and special characters: Use libraries like NLTK or spaCy for this step. Keep any punctuation you want the model to be able to generate.
- Vectorizing text: Represent each token as a vector using techniques like bag-of-words, TF-IDF, or word embeddings (e.g., Word2Vec, GloVe). For text generation, embeddings are the usual choice, because bag-of-words and TF-IDF discard word order.

Step 4: Choose an Architecture

Select a suitable generative model architecture. Common choices include:

- Recurrent Neural Networks (RNNs): Sequential models with LSTM or GRU cells are effective for text generation.
- Variational Autoencoders (VAEs): Encoder-decoder architectures that learn a latent space, from which new texts can be sampled and decoded.

Step 5: Implement the Model

Use your chosen library and implement the model architecture. For RNNs, this involves defining:

- An input layer (e.g., word embeddings)
- A recurrent cell (e.g., LSTM or GRU) to process sequential data
- An output layer for generating text

For VAEs, you'll need to define:

- An encoder network that maps input data to a latent space
- A decoder network that generates new texts based on the encoded representation
- A loss function to optimize (e.g., reconstruction loss, KL divergence)

Step 6: Train the Model

Train your model using the training set. You can use techniques like:

- Maximum Likelihood Estimation (MLE) as the training objective
- Gradient-based optimizers such as Stochastic Gradient Descent (SGD), Adam, or RMSProp
- Early stopping to prevent overfitting

Step 7: Evaluate and Refine

Evaluate your model's performance using metrics like perplexity, BLEU score, or ROUGE score. Refine the model by:

- Hyperparameter tuning
- Data augmentation (e.g., adding more text data)
- Model ensembling (combining multiple models' predictions)

Step 8: Use the Model

Once you're satisfied with your model's performance, use it to generate new texts! You can:

- Sample from the model's output distribution
- Use the model as a component in more complex systems (e.g., chatbots, content generation platforms)

Some popular Python libraries for generative AI include:

- TensorFlow: A widely used and well-established library with extensive support for RNNs and VAEs.
- PyTorch: A dynamic and easy-to-use library with strong support for RNNs and VAEs.
- Keras: A high-level neural networks API that runs on top of TensorFlow and, since Keras 3, can also use JAX or PyTorch as a backend. Older multi-backend releases also supported Theano.

Keep in mind the specific requirements of your project, such as computational resources, data quality, and desired output. With these steps and libraries, you'll be well on your way to creating a generative AI model using Python!
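To make the steps above concrete without any deep learning dependency, here is a deliberately tiny sketch: a character-level bigram model. It stands in for the RNN described earlier and is not a substitute for one, but it follows the same pipeline: tokenize the data, fit by maximum likelihood (here, by counting), and sample from the output distribution. The boundary markers, the `temperature` parameter, and the toy corpus are assumptions made for illustration.

```python
import random
from collections import Counter, defaultdict

START, END = "^", "$"  # hypothetical boundary markers, assumed absent from the data

def train_bigram(texts):
    """Count character bigrams; normalized counts are the MLE of P(next | current)."""
    counts = defaultdict(Counter)
    for text in texts:
        chars = [START] + list(text.lower()) + [END]
        for cur, nxt in zip(chars, chars[1:]):
            counts[cur][nxt] += 1
    return counts

def sample(counts, temperature=1.0, max_len=40, rng=random):
    """Sample one string; temperature < 1 sharpens the distribution, > 1 flattens it."""
    out, cur = [], START
    while len(out) < max_len:
        options = counts[cur]
        chars = list(options)
        # count ** (1 / T) is proportional to p ** (1 / T), i.e. temperature scaling.
        weights = [options[c] ** (1.0 / temperature) for c in chars]
        cur = rng.choices(chars, weights=weights)[0]
        if cur == END:
            break
        out.append(cur)
    return "".join(out)

corpus = ["hello world", "hello there", "help the world"]
model = train_bigram(corpus)
rng = random.Random(0)  # seeded so repeated runs give the same samples
for _ in range(3):
    print(sample(model, temperature=0.8, rng=rng))
```

A neural model replaces the count table with learned parameters and conditions on much longer context, but the sampling loop keeps the same shape: predict a distribution over the next token, draw from it, and feed the result back in.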

How to build generative AI with Python?

Building a generative AI model using Python can be achieved through various libraries and frameworks such as TensorFlow, Keras, PyTorch, or Scikit-learn. Here's an overview of how you can get started:

Step 1: Choose a Library

For building generative models, I recommend starting with TensorFlow or PyTorch. Both have excellent support for deep learning, which is crucial for most generative AI applications. If you're new to machine learning, Keras might be a more accessible option due to its user-friendly API. It runs on top of TensorFlow and, since Keras 3, can also use JAX or PyTorch as a backend.

Step 2: Choose an Algorithm

Some popular algorithms for generative AI include:

- Generative Adversarial Networks (GANs): Train two neural networks simultaneously: a generator that produces synthetic data and a discriminator that evaluates the generated data.
- Variational Autoencoders (VAEs): Use an encoder to map your input data into a latent space, then use a decoder to reconstruct the original data from the latent representation. VAEs are great for generative tasks where you want to preserve some structure in the input data.

Step 3: Prepare Your Data

For many generative AI applications, you'll need large datasets containing diverse and varied examples of what you want your model to generate (e.g., images, text, music). You may need to preprocess or augment your data to better suit your chosen algorithm.
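For text data, preprocessing usually means tokenizing and mapping each token to an integer ID that a model can consume. The following is a minimal sketch using only the standard library. The regex tokenizer, the `<unk>` placeholder, and the function names are assumptions made for illustration; real projects often use a subword tokenizer instead.

```python
import re
from collections import Counter

UNK = "<unk>"  # hypothetical placeholder for out-of-vocabulary tokens

def tokenize(text):
    """Lowercase the text and split it into word and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(texts, min_count=1):
    """Map each sufficiently frequent token to an integer ID (ID 0 is UNK)."""
    counts = Counter(tok for text in texts for tok in tokenize(text))
    vocab = {UNK: 0}
    for tok, n in sorted(counts.items()):  # sorted for a deterministic ID order
        if n >= min_count:
            vocab[tok] = len(vocab)
    return vocab

def encode(text, vocab):
    """Convert text to a list of token IDs, using UNK for unseen tokens."""
    return [vocab.get(tok, vocab[UNK]) for tok in tokenize(text)]

texts = ["The cat sat.", "The dog sat!"]
vocab = build_vocab(texts)
print(encode("The cat barked.", vocab))  # prints: [6, 3, 0, 2]
```

The word "barked" never appears in the training texts, so it maps to ID 0. Reserving an unknown-token ID keeps encoding from failing on unseen input.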

Step 4: Implement Your Model

Use the chosen library and algorithm to implement your generative AI model. This will typically involve defining neural network architectures, specifying loss functions, optimizing parameters with backpropagation, and possibly implementing additional techniques like data augmentation, regularization, or early stopping.
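To illustrate what "specifying loss functions" can look like, here is a framework-free sketch of a VAE loss for a single example: a reconstruction term plus the KL divergence between a diagonal Gaussian posterior and a standard normal prior. Using squared error for reconstruction and exposing a `kl_weight` parameter are assumptions of this sketch. In practice you would compute the same quantities on tensors inside TensorFlow or PyTorch so that gradients flow through them.

```python
import math

def kl_divergence(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ) for one latent vector."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))

def reconstruction_loss(x, x_hat):
    """Sum of squared errors between an input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

def vae_loss(x, x_hat, mu, log_var, kl_weight=1.0):
    """Reconstruction term plus a weighted KL term."""
    return reconstruction_loss(x, x_hat) + kl_weight * kl_divergence(mu, log_var)

# A posterior identical to the prior has zero KL divergence.
print(kl_divergence([0.0, 0.0], [0.0, 0.0]))  # prints: 0.0

# Reconstruction error 0.25 plus KL 0.5.
print(vae_loss([1.0, 0.0], [0.5, 0.0], mu=[1.0, 0.0], log_var=[0.0, 0.0]))  # prints: 0.75
```

The KL term pulls the encoder's output toward the prior, which is what makes sampling from the prior at generation time produce plausible decoder inputs.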

Step 5: Train and Evaluate Your Model

Train your model using a training dataset, then evaluate its performance on a separate validation set. You'll need to assess both the quality of generated samples and any metrics specific to your use case (e.g., log likelihood for VAEs).
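For text models, one common likelihood-based metric is perplexity: the exponential of the average negative log-probability the model assigns to held-out tokens. The sketch below assumes you already have per-token log-probabilities (natural log) from your model on the validation set. The probabilities shown are made up for illustration.

```python
import math

def perplexity(token_log_probs):
    """Perplexity = exp(-mean log-probability) over held-out tokens."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))

# Hypothetical probabilities a model assigned to each token of a validation sentence.
probs = [0.25, 0.25, 0.25, 0.25]
score = perplexity([math.log(p) for p in probs])
print(round(score, 6))
```

A model that assigns probability 0.25 to every token has a perplexity of about 4, which can be read as the model being as uncertain as if it were choosing uniformly among four options. Lower is better.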

Some popular Python libraries for generative AI include:

- TensorFlow: A popular open-source framework developed by Google, ideal for large-scale deep learning projects.
- PyTorch: Another popular open-source framework known for its simplicity and flexibility, making it a great choice for rapid prototyping and development.
- Keras: A high-level neural networks API that runs on top of TensorFlow and, since Keras 3, JAX or PyTorch, offering an easy-to-use interface and pre-built layers for deep learning models. Older multi-backend releases also supported Theano and Microsoft Cognitive Toolkit (CNTK).

To illustrate the process, let's consider an example of building a simple GAN using Keras:

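    import numpy as np
    from keras.models import Model
    from keras.layers import Input, Dense

    # Define dimensions (latent_dim and batch_size are assumed values)
    input_dim = 1000   # size of each data sample
    latent_dim = 100   # size of the noise vector fed to the generator
    batch_size = 32

    # Define generator architecture: noise vector -> synthetic sample
    generator_input = Input(shape=(latent_dim,))
    g = Dense(128, activation='relu')(generator_input)
    g = Dense(input_dim, activation='tanh')(g)
    generator = Model(generator_input, g)

    # Define discriminator architecture: sample -> probability of being real
    discriminator_input = Input(shape=(input_dim,))
    d = Dense(128, activation='relu')(discriminator_input)
    d = Dense(1, activation='sigmoid')(d)
    discriminator = Model(discriminator_input, d)
    discriminator.compile(optimizer='adam', loss='binary_crossentropy')

    # Combined model: the generator is trained through a frozen discriminator
    discriminator.trainable = False
    gan_input = Input(shape=(latent_dim,))
    gan = Model(gan_input, discriminator(generator(gan_input)))
    gan.compile(optimizer='adam', loss='binary_crossentropy')

    # Placeholder data in [-1, 1] to match the tanh output; replace with your dataset
    real_dataset = np.random.uniform(-1, 1, (1024, input_dim))
    real_labels = np.ones((batch_size, 1))
    fake_labels = np.zeros((batch_size, 1))

    # Train GAN
    for epoch in range(100):
        # Sample a real batch and generate a fake batch
        idx = np.random.randint(0, len(real_dataset), batch_size)
        real_data = real_dataset[idx]
        z = np.random.normal(0, 1, (batch_size, latent_dim))
        fake_data = generator.predict(z, verbose=0)

        # Train discriminator (toggle explicitly rather than relying on compile-time freezing)
        discriminator.trainable = True
        discriminator_loss = (discriminator.train_on_batch(real_data, real_labels)
                              + discriminator.train_on_batch(fake_data, fake_labels)) / 2
        print('Discriminator loss: ', discriminator_loss)

        # Train generator: reward it when the discriminator labels fakes as real
        discriminator.trainable = False
        generator_loss = gan.train_on_batch(z, real_labels)
        print('Generator loss: ', generator_loss)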

This code snippet demonstrates the basic structure of a GAN using Keras. Of course, you'll need to adapt this example to your specific use case and experiment with various hyperparameters to achieve optimal results.

Conclusion

Building generative AI models in Python involves selecting an algorithm, preparing data, implementing your model, training it, and evaluating its performance. By leveraging popular libraries like TensorFlow or PyTorch, you can create complex AI models that generate innovative content or augment existing datasets. Remember to stay up-to-date with the latest developments in deep learning research and experiment with different approaches to find what works best for your specific use case.