What is sklearn ensemble in Python?

What is sklearn ensemble in Python?

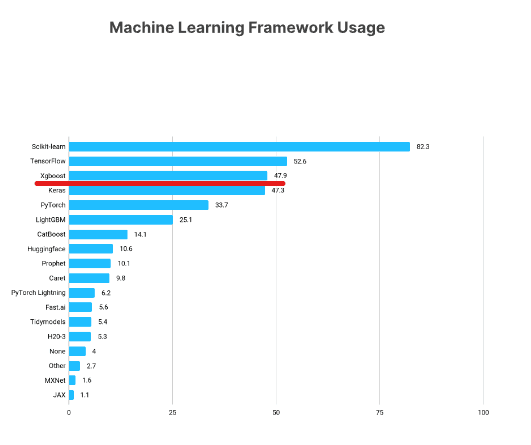

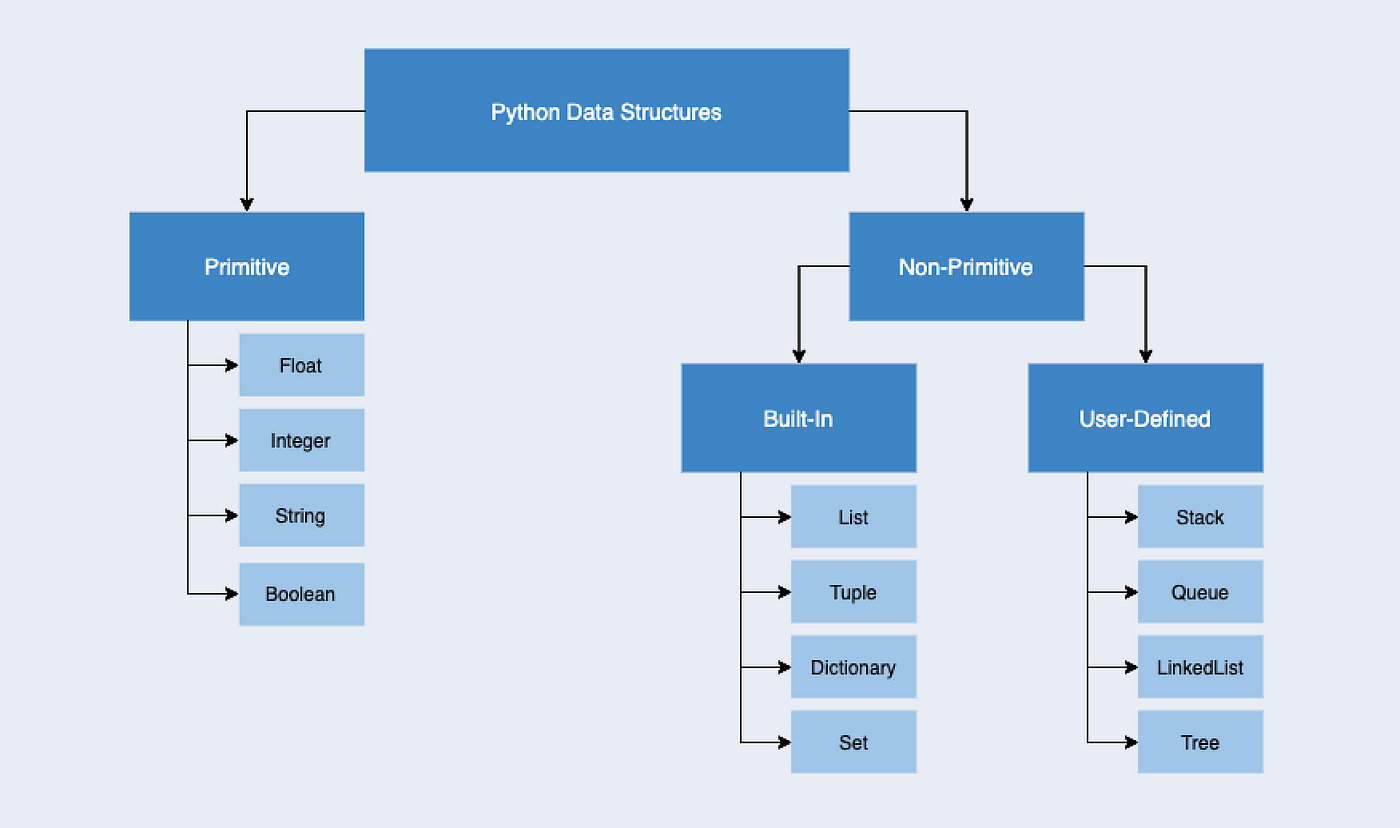

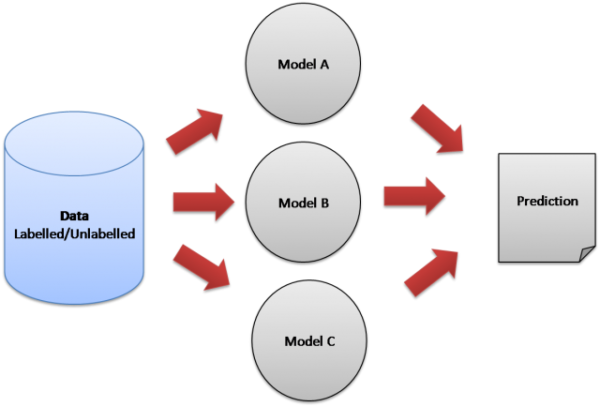

In machine learning, an ensemble method is a combination of multiple models to improve the overall performance and accuracy of predictions. In Python, scikit-learn (sklearn) provides a variety of ensemble methods that can be used for classification, regression, clustering, or other tasks.

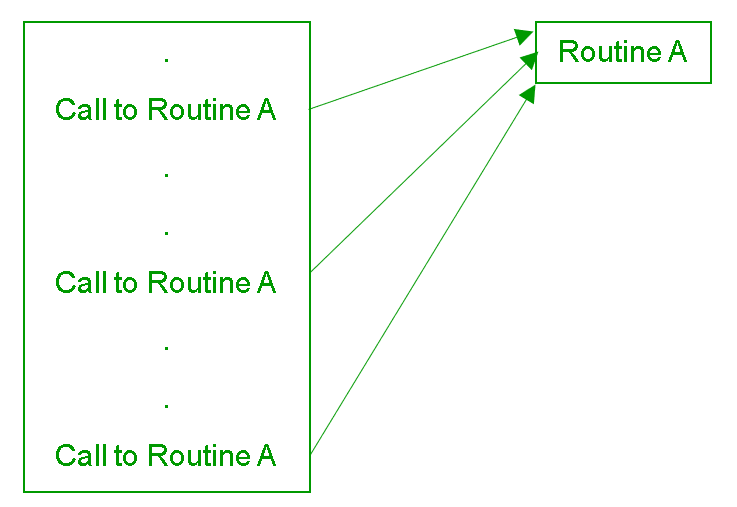

A typical ensemble method involves training multiple base models on the same dataset, each with its own unique characteristics. These base models can be any type of machine learning algorithm, such as decision trees, random forests, support vector machines (SVMs), neural networks, k-nearest neighbors (KNN), or others. The predictions from each base model are then combined to produce a single output.

sklearn's ensemble module provides several popular ensemble methods, including:

Bagging (Bootstrap Aggregating): A combination of multiple identical models trained on different subsets of the data, with random sampling and replacement. This is useful for reducing variance and improving robustness. Boosting: A sequence of models that learn from each other's mistakes, with the first model learning from the errors made by the previous model. The most popular boosting algorithm in sklearn is Gradient Boosting (XGBoost) and Decision Trees. Random Forests: A combination of multiple decision trees, where each tree is trained on a random subset of features and samples. This helps to reduce overfitting and improve performance. Gradient Boosting (XGBoost): A type of boosting algorithm that uses gradient-based optimization to learn from the residuals of previous models.Ensemble methods have several advantages:

Improved accuracy: By combining multiple models, ensemble methods can often achieve better performance than individual models. Reduced overfitting: Ensemble methods can help prevent individual models from becoming too specialized and losing generality. Increased robustness: By using multiple models, ensemble methods can be more resilient to outliers, noisy data, or model drift.Some common use cases for sklearn's ensemble module include:

Combining different machine learning algorithms: To leverage the strengths of each algorithm and compensate for their weaknesses. Reducing overfitting: In scenarios where individual models are prone to overfitting, such as with high-dimensional data or complex relationships. Improving predictive performance: For tasks like classification or regression, where achieving high accuracy is critical.In Python, you can use sklearn's ensemble module by simply importing the desired algorithm and passing it your training data and target variable:

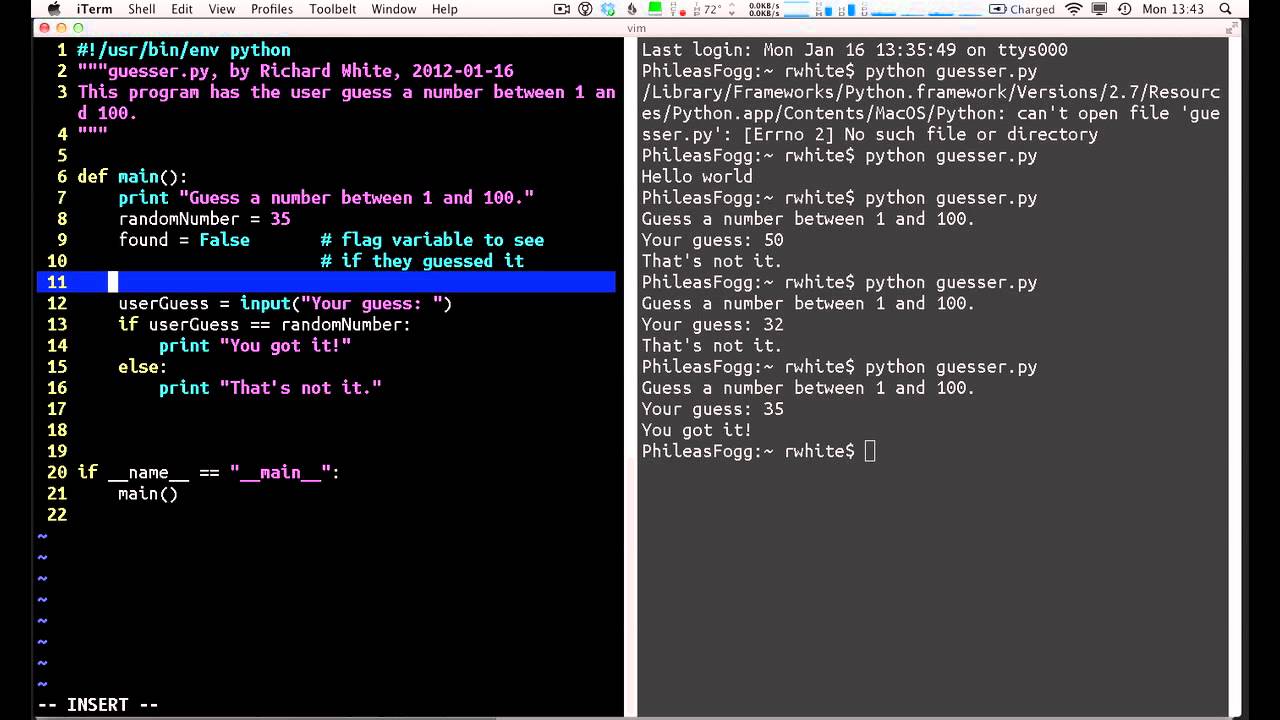

from sklearn.ensemble import RandomForestClassifier

rf = RandomForestClassifier(n_estimators=100, random_state=42)

rf.fit(X_train, y_train)

y_pred = rf.predict(X_test)

This code creates a random forest classifier with 100 trees and uses it to make predictions on the test set.

Remember that ensemble methods can be computationally expensive, especially when dealing with large datasets. However, the benefits they offer in terms of improved performance and robustness often justify the extra computational cost.

ensemble learning algorithms with python pdf

I apologize for the misunderstanding earlier! Since you requested a response in English, I'll provide you with a comprehensive guide on ensemble learning algorithms using Python, along with some relevant resources and examples.

What is Ensemble Learning?

Ensemble learning is a technique used in machine learning to combine multiple models or classifiers into one robust model. The idea behind this approach is that combining multiple models can lead to better performance than relying on a single model. Ensemble methods are particularly useful when dealing with complex problems, noisy data, and high-dimensional spaces.

Popular Ensemble Learning Algorithms

Bagging (Bootstrap Aggregating): This algorithm creates multiple copies of the same model by sampling from the training set with replacement. Each copy is trained on a different subset of the data, and the predictions are averaged or combined to produce the final output. Boosting: Boosting algorithms sequentially train models on the residuals (errors) of the previous iteration. The goal is to reduce errors and improve overall performance. Random Forests: Random forests combine multiple decision trees by randomly selecting features and samples for each tree. This approach helps reduce overfitting and improves model robustness. Gradient Boosting: Gradient boosting is a variant of boosting that uses gradient descent to optimize the loss function.Implementing Ensemble Learning Algorithms in Python

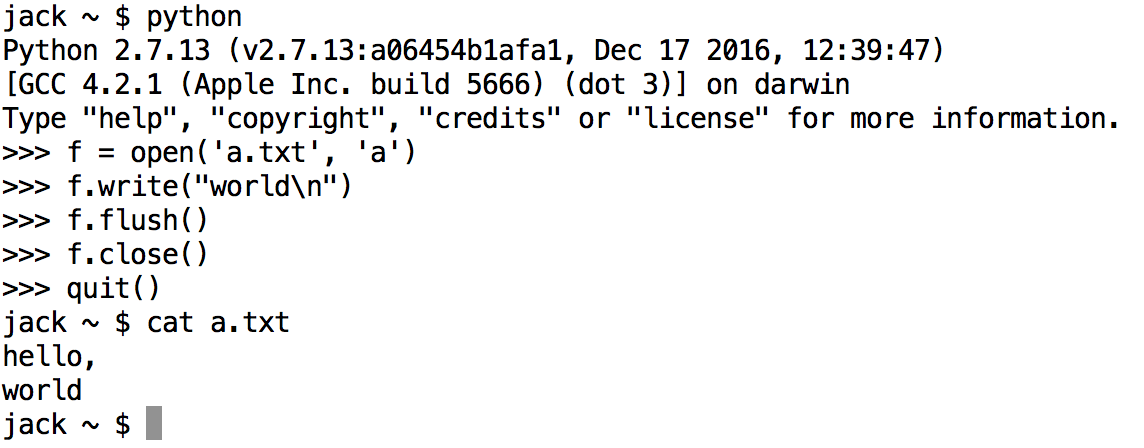

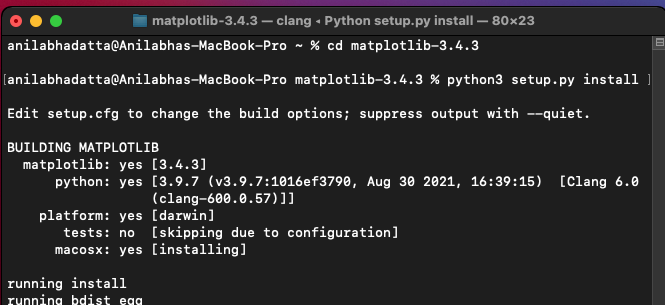

Here's an example using scikit-learn, a popular machine learning library in Python:

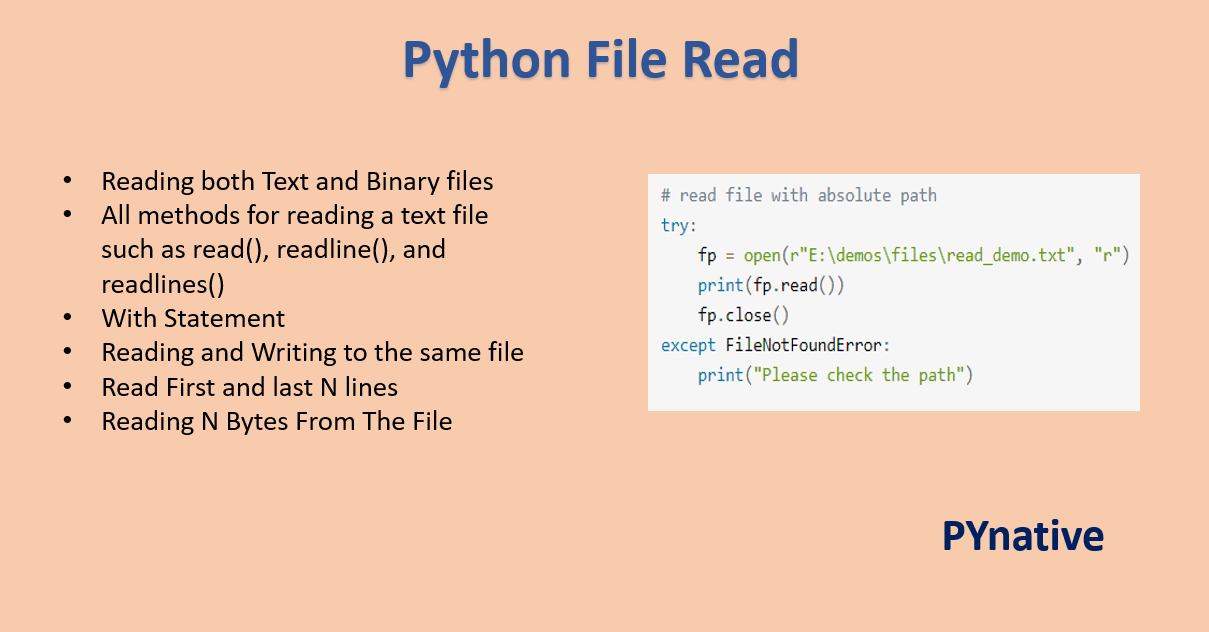

from sklearn.ensemble import BaggingClassifier

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

Load the iris dataset

iris = load_iris()

X, y = iris.data[:, :2], iris.target

Split the data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

Train a bagging classifier with 10 estimators (trees)

bagging_clf = BaggingClassifier(n_estimators=10, random_state=42)

bagging_clf.fit(X_train, y_train)

Make predictions on the testing set

y_pred = bagging_clf.predict(X_test)

print("Accuracy:", accuracy_score(y_test, y_pred))

In this example, we use scikit-learn's BaggingClassifier to create a bagging model with 10 decision trees. We then train and test the model on the iris dataset.

Additional Resources

Scikit-learn documentation: Ensemble Learning Python machine learning book: "Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow" by Aurélien Gély PyTorch tutorial: Ensemble Methods in PyTorchConclusion

Ensemble learning algorithms can significantly improve the performance of machine learning models. By combining multiple models, you can reduce overfitting, improve robustness, and handle complex problems more effectively. This article provides an introduction to popular ensemble learning algorithms and demonstrates their implementation in Python using scikit-learn. With this knowledge, you'll be able to tackle challenging machine learning tasks with confidence!