What is the best data structure for large data in Python?

Choosing the right data structure for large datasets can have a significant impact on performance, memory usage, and overall scalability. In Python, we have several options to consider.

1. NumPy Arrays

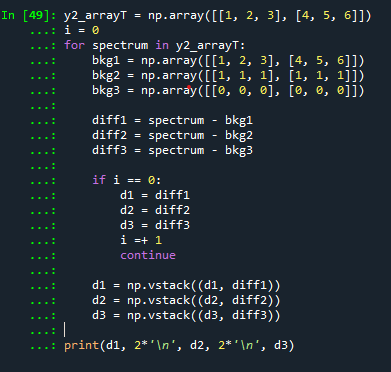

NumPy (Numerical Python) arrays are an excellent choice when dealing with numerical data. They provide efficient storage, fast indexing, and advanced mathematical operations. NumPy arrays are particularly well-suited for:

- Large datasets with fixed-size records
- Matrix operations and linear algebra calculations
- Statistical analysis and data manipulation

2. Pandas DataFrames

Pandas is a powerful library for working with structured data. DataFrames are the core data structure in Pandas, allowing you to work with large datasets having rows and columns (like spreadsheets). They support various data types, including numeric, string, datetime, and more. Pandas DataFrames are ideal for:

- Large-scale data processing and manipulation
- Handling missing values and data cleaning
- Grouping, sorting, and aggregating data

3. Dictionary-based structures

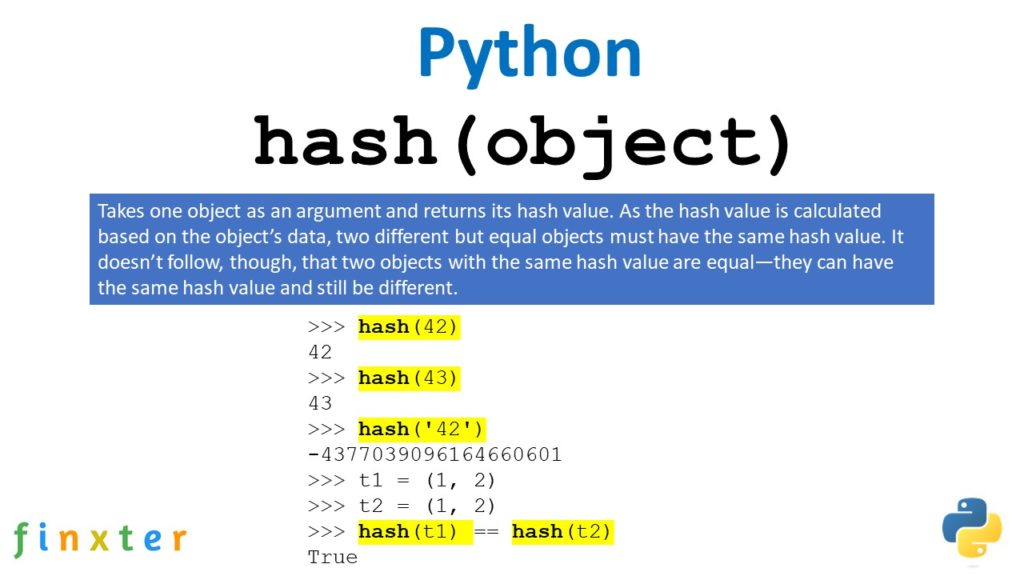

Dictionaries (hash tables or associative arrays) can be used to store large datasets where each record has a unique key. Python's built-in dict type is a good option for simple, flexible data storage with fast lookups by key.

However, keep in mind that dictionary-based structures might not be suitable for large-scale numerical computations or complex indexing.
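As a minimal sketch of the "one record per unique key" idea, the snippet below stores small records in a dict and looks them up by ID. The `users` data, its field names, and the `get_plan` helper are hypothetical and exist only for illustration.

```python
# Hypothetical records keyed by a unique user ID (illustrative data only).
users = {
    1001: {"name": "Ada", "plan": "pro"},
    1002: {"name": "Linus", "plan": "free"},
}


def get_plan(user_id, default="unknown"):
    """Return the plan stored for user_id, or default if the key is absent."""
    record = users.get(user_id)  # average-case O(1) hash lookup
    return record["plan"] if record is not None else default


# Inserting a new record is a single keyed assignment.
users[1003] = {"name": "Grace", "plan": "pro"}

print(get_plan(1001))
print(get_plan(9999))
```

Using `dict.get` rather than `users[user_id]` avoids a `KeyError` for unknown IDs, which tends to matter once the dataset comes from outside the program.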

4. Other options

Other Python libraries and data structures can also be useful depending on your specific requirements:

- HDF5 (Hierarchical Data Format 5): A cross-platform, binary format for storing and retrieving large datasets.
- Dask: A library providing parallel computing capabilities for larger-than-memory computations.
- Apache Arrow: A modern columnar data format designed for fast data processing and storage.

When selecting a data structure for large datasets in Python:

- Consider the specific characteristics of your dataset (e.g., numerical vs categorical, structured vs unstructured).
- Think about the operations you'll be performing on the data (e.g., filtering, grouping, aggregating).
- Evaluate memory usage and performance considerations.
- Weigh the trade-offs between simplicity, flexibility, and scalability.

In conclusion, Python offers a range of data structures to handle large datasets effectively. NumPy arrays are ideal for numerical computations, Pandas DataFrames are suitable for structured data processing, and dictionary-based structures can be useful for simple, flexible data storage. Other options like HDF5, Dask, and Apache Arrow can also be relevant depending on your specific needs.
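To make the memory-usage point a little more concrete, here is a rough sketch that compares a plain list of floats with a typed, contiguous buffer. It uses the standard-library `array` module only so that it runs without third-party packages; that substitution is an assumption of this example. A NumPy array stores numeric data in a similar packed layout. The element count `n` is arbitrary.

```python
import sys
from array import array

n = 100_000  # arbitrary size chosen for illustration
values = [float(i) for i in range(n)]

# A list holds pointers to separate float objects, so count the list itself
# plus every float object it references.
list_bytes = sys.getsizeof(values) + sum(sys.getsizeof(v) for v in values)

# array("d") packs raw C doubles into one contiguous buffer.
packed = array("d", values)
array_bytes = sys.getsizeof(packed)

print(f"list of floats: {list_bytes:,} bytes")
print(f"array('d'):     {array_bytes:,} bytes")
```

The exact numbers depend on the Python build and platform, so treat the output as an order-of-magnitude comparison and not as a benchmark.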

What is the most useful data structure in Python?

The most useful data structure in Python depends on factors such as your use case, your programming style, and the problem you're trying to solve. However, if I had to pick one that stands out from the rest, I would say it's the dictionary (Python's built-in hash table). Here's why:

Efficiency: Dictionaries are implemented internally as hash tables, which means they can provide fast lookups, insertions, and deletions with an average time complexity of O(1), making them incredibly efficient for handling large amounts of data.

Readability: Consider config = {'debug_mode': True, 'server_port': 8080}. This level of readability is hard to beat!

Use cases: Dictionaries can be used in various applications such as:

- Configuration files
- Data caching (e.g., storing user preferences)
- Handling HTTP requests and responses (e.g., storing query parameters or JSON data)

Extensibility: Python's built-in dictionary functionality is already quite powerful, and it works well with libraries such as pandas for handling large datasets and the standard-library json module for serializing/deserializing JSON data.

In summary, dictionaries are a versatile and powerful data structure that can be used in a wide range of applications. Their efficiency, flexibility, readability, use cases, and extensibility make them an essential tool for any Python developer to master.