How do I monitor file system events in python?

Monitoring file system events in Python can be achieved using the pyinotify library, which provides an interface to the Linux kernel's inotify functionality.

Here are some steps you can follow to use pyinotify:

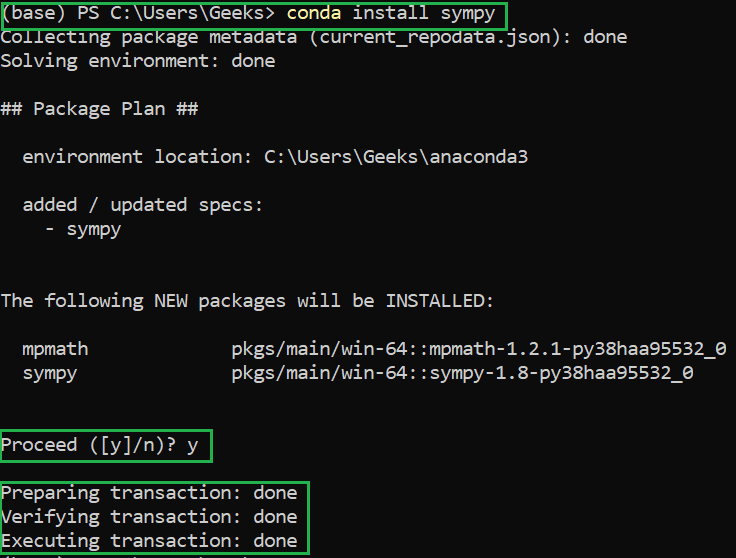

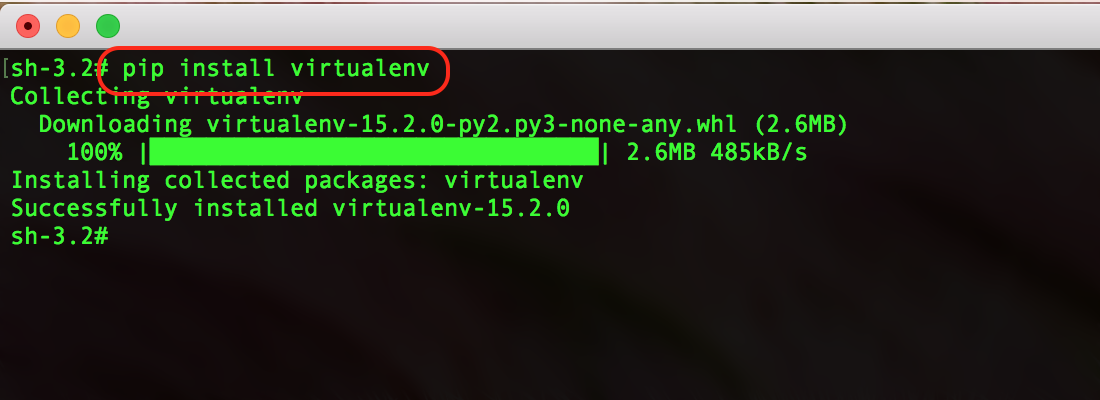

Install pyinotify using pip:

    pip install pyinotify

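Create an instance of the WatchManager class, then register a directory to watch by calling the add_watch method on the manager with a path and an event mask.

A notifier then reads events from the manager. ThreadedNotifier does this in a background thread.

For example:

    import pyinotify

    # Create the watch manager
    wm = pyinotify.WatchManager()

    # Add a watch with an event mask; flags are combined with |
    mask = pyinotify.IN_CREATE | pyinotify.IN_DELETE | pyinotify.IN_MODIFY
    wdn = wm.add_watch("/path/to/watch", mask, rec=True)

    # Start watching in a background thread; with no handler given,
    # the notifier prints every event it receives
    pynotifier = pyinotify.ThreadedNotifier(wm)
    pynotifier.start()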
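The event mask given when adding a watch is a bit field, so several event types can be combined with `|` and tested with `&`. The sketch below illustrates this without requiring pyinotify. The constants are copied by hand from the Linux inotify header (pyinotify exposes the same values under names such as `pyinotify.IN_CREATE`), and `describe_mask` is a hypothetical helper, not part of any library.

```python
# Bit values as defined by the Linux inotify API, reproduced here so the
# example runs without pyinotify installed.
IN_MODIFY = 0x00000002
IN_CLOSE_WRITE = 0x00000008
IN_CREATE = 0x00000100
IN_DELETE = 0x00000200
IN_ISDIR = 0x40000000  # set by the kernel when the subject is a directory

FLAG_NAMES = {
    IN_MODIFY: "IN_MODIFY",
    IN_CLOSE_WRITE: "IN_CLOSE_WRITE",
    IN_CREATE: "IN_CREATE",
    IN_DELETE: "IN_DELETE",
    IN_ISDIR: "IN_ISDIR",
}


def describe_mask(mask):
    """Return the names of all known flags set in mask (hypothetical helper)."""
    return [name for bit, name in FLAG_NAMES.items() if mask & bit]


# Combine flags with | to build a watch mask
watch_mask = IN_CREATE | IN_DELETE | IN_MODIFY
print(describe_mask(watch_mask))

# An event for a newly created directory carries both bits
print(describe_mask(IN_CREATE | IN_ISDIR))
```

Because an event's mask can carry extra bits such as `IN_ISDIR`, comparing it with `==` against a single flag can fail where a bitwise test with `&` succeeds.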

Watching and processing events:

To process each event as it occurs, pass a handler function to the notifier as its second argument, for example pyinotify.ThreadedNotifier(wm, watch_handler). The handler could log the event or perform some other action.

Here is a more complex example of handling events:

    def watch_handler(event):
        print(f"Event type: {event.maskname} Event name: {event.name}")

        # Ignore events whose subject is a directory
        if event.dir:
            return

        # event.mask is a bit field, so test flags with &
        if event.mask & pyinotify.IN_CREATE:
            print("File created: ", event.name)
        elif event.mask & pyinotify.IN_DELETE:
            print("File deleted: ", event.name)
        elif event.mask & pyinotify.IN_MODIFY:
            print("File modified: ", event.name)

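To stop watching for events, call the rm_watch method on the watch manager with the watch descriptors that add_watch returned, then stop the notifier.

Example:

    # wdn maps each watched path to its watch descriptor
    if wdn:
        wm.rm_watch(list(wdn.values()))

    # Stop the notifier thread
    pynotifier.stop()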

Here's an example of how this could all be put together:

    import pyinotify

    class FileMonitor:
        def __init__(self, path):
            self.wm = pyinotify.WatchManager()
            mask = pyinotify.IN_CREATE | pyinotify.IN_DELETE | pyinotify.IN_MODIFY
            # add_watch returns a dict mapping watched paths to watch descriptors
            self.watches = self.wm.add_watch(path, mask, rec=True)
            self.notifier = None

        def start(self):
            # Deliver events to watch_handler from a background thread
            self.notifier = pyinotify.ThreadedNotifier(self.wm, self.watch_handler)
            self.notifier.start()

        def stop(self):
            if self.watches:
                self.wm.rm_watch(list(self.watches.values()))
                self.watches = None
            if self.notifier is not None:
                self.notifier.stop()
                self.notifier = None

        def watch_handler(self, event):
            # Ignore events whose subject is a directory
            if event.dir:
                return
            if event.mask & pyinotify.IN_CREATE:
                print("File created: ", event.name)
            elif event.mask & pyinotify.IN_DELETE:
                print("File deleted: ", event.name)
            elif event.mask & pyinotify.IN_MODIFY:
                print("File modified: ", event.name)

    if __name__ == "__main__":
        monitor = FileMonitor("/path/to/watch")
        monitor.start()
        try:
            input("Press Enter to stop watching\n")
        finally:
            monitor.stop()

This class can be used to start and stop file watching, and handle the events that occur.
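pyinotify only works on Linux. Where inotify is unavailable, one fallback is to poll: take a snapshot of file modification times, take another one later, and compare the two. The sketch below uses only the standard library. `snapshot` and `diff_snapshots` are hypothetical names, and polling is a different technique from inotify: it can miss short-lived files and costs a full directory walk per check.

```python
import os


def snapshot(root):
    """Map every file path under root to its last-modification time (ns)."""
    state = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                state[path] = os.stat(path).st_mtime_ns
            except OSError:
                # The file vanished between listing and stat; skip it.
                continue
    return state


def diff_snapshots(old, new):
    """Return (created, deleted, modified) as sorted lists of paths."""
    created = sorted(new.keys() - old.keys())
    deleted = sorted(old.keys() - new.keys())
    modified = sorted(p for p in old.keys() & new.keys() if old[p] != new[p])
    return created, deleted, modified
```

A monitoring loop built on this would call `snapshot` every few seconds (for instance with `time.sleep` between calls) and report whatever `diff_snapshots` returns.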

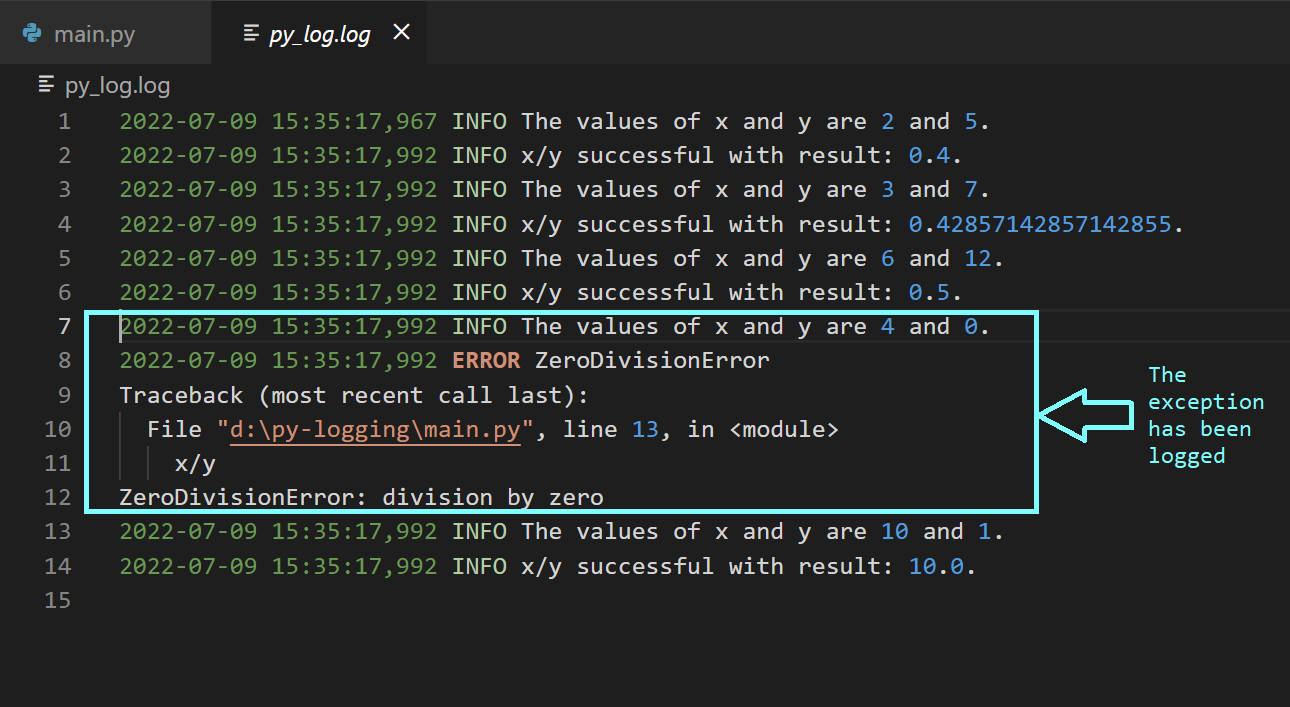

What is the python script to check logs?

I'd be happy to help you with that.

To check logs in Python, we can use a few libraries and tools. One option is logparser. However, since I'm not familiar with it, I'll provide an example using the pandas and os libraries.

Let's assume we have a log file named app.log containing logs in the following format:

    2022-01-01 14:30:00 INFO This is a log message.
    2022-01-01 14:31:00 ERROR Something went wrong.
    2022-01-02 09:15:00 DEBUG Another debug message.

We can use the following Python script to parse and analyze the logs:

import pandas as pd

import os

Define a function to read log files

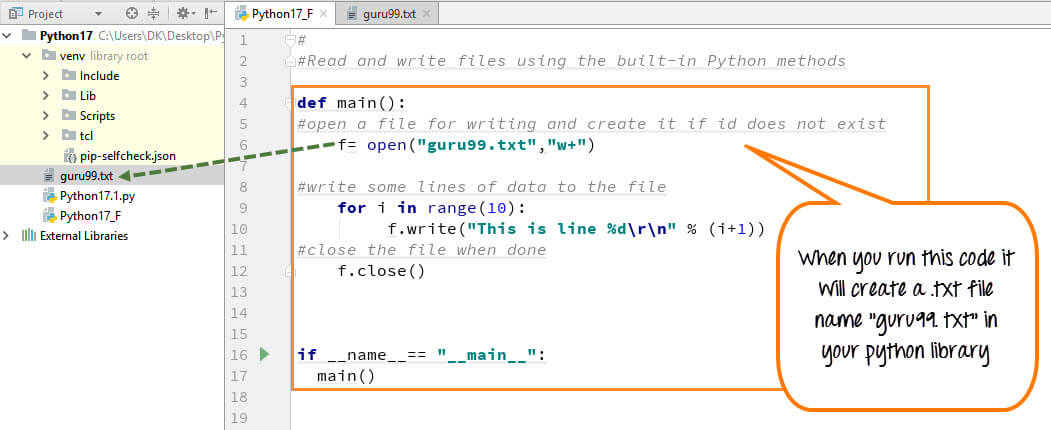

def read_log_file(log_file_path):

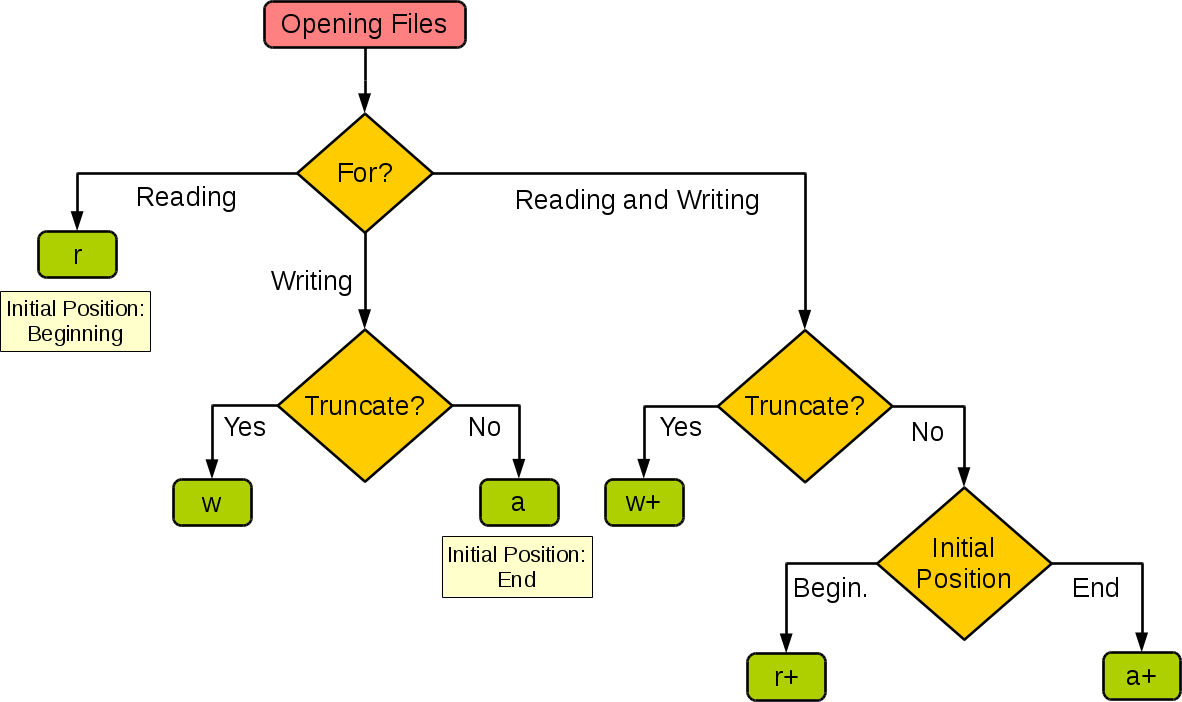

with open(log_file_path, 'r') as f:

lines = [line.strip() for line in f.readlines()]

return lines

Define a function to parse logs into a dictionary

def parse_logs(lines):

parsed_logs = []

for line in lines:

timestamp, log_level, message = line.split(' ', 2)

parsed_logs.append({

'timestamp': timestamp,

'log_level': log_level,

'message': message

})

return parsed_logs

Define a function to analyze logs using pandas

def analyze_logs(parsed_logs):

df = pd.DataFrame(parsed_logs)

Calculate the total number of logs by level (INFO, ERROR, DEBUG)

info_logs = len(df[df['log_level'] == 'INFO'])

error_logs = len(df[df['log_level'] == 'ERROR'])

debug_logs = len(df[df['log_level'] == 'DEBUG'])

print(f"Total INFO logs: {info_logs}")

print(f"Total ERROR logs: {error_logs}")

print(f"Total DEBUG logs: {debug_logs}")

Read the log file

log_file_path = 'app.log'

lines = read_log_file(log_file_path)

Parse the logs into a dictionary

parsed_logs = parse_logs(lines)

Analyze the logs using pandas

analyze_logs(parsed_logs)

This script does the following:

- Defines three functions: read_log_file, parse_logs, and analyze_logs.
- Reads the log file line by line, stripping any whitespace characters.
- Parses each log line into a dictionary containing the timestamp, log level (INFO, ERROR, or DEBUG), and message.
- Uses the pandas library to create a DataFrame from the parsed logs.
- Analyzes the logs by calculating the total number of logs by level.
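The per-level totals do not have to be hardcoded for INFO, ERROR, and DEBUG. As a minimal sketch using only the standard library, `collections.Counter` tallies whatever levels occur. `count_levels` is a hypothetical helper, and it assumes the same list-of-dictionaries shape that `parse_logs` produces; the sample entries are the three log lines shown earlier.

```python
from collections import Counter


def count_levels(parsed_logs):
    """Count entries per log level, whatever levels are present."""
    return Counter(entry["log_level"] for entry in parsed_logs)


sample = [
    {"timestamp": "2022-01-01 14:30:00", "log_level": "INFO",
     "message": "This is a log message."},
    {"timestamp": "2022-01-01 14:31:00", "log_level": "ERROR",
     "message": "Something went wrong."},
    {"timestamp": "2022-01-02 09:15:00", "log_level": "DEBUG",
     "message": "Another debug message."},
]

# Print one total per level, in alphabetical order
for level, total in sorted(count_levels(sample).items()):
    print(f"Total {level} logs: {total}")
```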

To use this script, simply replace 'app.log' with the path to your log file. Run the script using Python (e.g., python log_analyzer.py) and you'll see the results printed to the console.

Note that this is a basic example, and you may need to adjust it according to your specific log file format or requirements.
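One way to adapt the parser to a different format is a regular expression with named groups, which also lets malformed lines be skipped instead of raising an error. This is only a sketch for the sample format shown earlier: `LOG_PATTERN` and `parse_line` are hypothetical names, and the pattern would need changing for other layouts.

```python
import re
from datetime import datetime

# Date and time, then an upper-case level, then the rest of the line
LOG_PATTERN = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) "
    r"(?P<log_level>[A-Z]+) "
    r"(?P<message>.*)$"
)


def parse_line(line):
    """Return a dict for a well-formed line, or None if it does not parse."""
    match = LOG_PATTERN.match(line.strip())
    if match is None:
        return None
    entry = match.groupdict()
    try:
        # Convert the timestamp text into a datetime for sorting and filtering
        entry["timestamp"] = datetime.strptime(
            entry["timestamp"], "%Y-%m-%d %H:%M:%S"
        )
    except ValueError:
        # Digits matched the pattern but do not form a real date or time
        return None
    return entry


print(parse_line("2022-01-01 14:31:00 ERROR Something went wrong."))
print(parse_line("not a log line"))
```

Returning None for lines that do not match leaves it to the caller to decide whether to drop them, count them, or log them.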