Transformers Python


I'm happy to help!

Transformers are a neural network architecture that has gained immense popularity in the natural language processing (NLP) community. They can map variable-length input sequences to variable-length output sequences while relating every position to every other position directly, and they train efficiently in parallel. They were introduced by Vaswani et al. in 2017 in the paper "Attention Is All You Need" and have since been widely adopted for tasks such as machine translation, text summarization, and language modeling.

The key components of a Transformer are the self-attention mechanism, the encoder, and the decoder. Self-attention lets the model weigh how relevant each element of the input is to every other element, so the representation of each position is built from the whole sequence. This contrasts with traditional recurrent neural networks (RNNs), which process a sequence one element at a time.
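As an illustration of what "weighing" means here, the following is a minimal NumPy sketch of single-head scaled dot-product self-attention, the formulation used by Vaswani et al. The helper names are illustrative, and the random projection matrices `w_q`, `w_k` and `w_v` stand in for weights that a real model would learn.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max along the axis for numerical stability before exponentiating
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def self_attention(x: np.ndarray, w_q: np.ndarray, w_k: np.ndarray, w_v: np.ndarray):
    """Single-head scaled dot-product self-attention.

    x: (seq_len, d_model) token representations.
    w_q, w_k, w_v: (d_model, d_k) projection matrices (random here, learned in practice).
    Returns (output, weights) with shapes (seq_len, d_k) and (seq_len, seq_len).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    d_k = q.shape[-1]
    # scores[i, j]: how strongly position i attends to position j
    scores = q @ k.T / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
out, weights = self_attention(x, w_q, w_k, w_v)
print(out.shape, weights.shape)  # (4, 8) (4, 4)
```

Each row of `weights` sums to 1 and says how much every position contributes to that row's output; all rows are computed at once with matrix products rather than in a sequential loop.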

The Transformer encoder takes in a sequence of tokens and outputs a continuous representation of that sequence (one vector per token). The decoder then generates the output sequence one token at a time, predicting the next token from the previously generated tokens while attending to all of the encoder's output vectors. This differs from traditional RNN-based encoder-decoder models, where both the encoder and the decoder step through their sequences one element at a time and the decoder often relies on a single fixed-size summary of the input (the encoder's final hidden state).
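Predicting each token "given the previous tokens" is typically enforced in the decoder's self-attention with a causal (look-ahead) mask. A minimal NumPy sketch, assuming a single (seq_len, seq_len) matrix of raw attention scores and using illustrative helper names:

```python
import numpy as np


def causal_mask(seq_len: int) -> np.ndarray:
    # True strictly above the diagonal: position i must not see positions j > i
    return np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)


def masked_attention_weights(scores: np.ndarray) -> np.ndarray:
    """Turn raw (seq_len, seq_len) attention scores into causally masked weights."""
    masked = np.where(causal_mask(scores.shape[-1]), -np.inf, scores)
    # Row-wise softmax; the diagonal is never masked, so each row max is finite
    masked = masked - masked.max(axis=-1, keepdims=True)
    exp = np.exp(masked)  # exp(-inf) == 0, so future positions get zero weight
    return exp / exp.sum(axis=-1, keepdims=True)


scores = np.zeros((3, 3))  # equal raw scores, so only the mask shapes the result
print(masked_attention_weights(scores).round(2))
```

Row i spreads its weight only over positions 0 through i: the first row puts all of its weight on itself, while the last row spreads it evenly over all three positions.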

Transformers have several advantages over traditional RNN-based models:

- Parallelization: Because a Transformer processes all positions of a sequence at once rather than one step at a time, training takes full advantage of parallel hardware such as GPUs.
- Efficiency: Transformers do not require the recurrent, step-by-step computation that RNNs need, so they train faster on that hardware (although the cost of self-attention grows quadratically with sequence length).
- Improved performance: Transformers have been shown to outperform RNN-based models on many NLP tasks, especially those involving long-range dependencies.

There are several key techniques that contribute to the success of Transformers:

- Self-attention mechanisms: The Transformer's self-attention mechanism lets every position attend to every other position of the input sequence simultaneously, which is useful for capturing long-range dependencies.
- Layer normalization: Layer normalization helps stabilize training by normalizing each token's activations across the feature dimension within a layer.
- Multi-head attention: Multi-head attention runs several attention operations ("heads") in parallel, each with its own learned projections, so that different heads can capture different kinds of relationships between parts of the input sequence.

In Python, you can implement a simple Transformer using the following libraries:

- TensorFlow or PyTorch for deep learning
- NumPy for numerical computations

Here is a basic outline of how you might structure your code in TensorFlow:

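```python
import tensorflow as tf


# Define the architecture of the model
class Transformer(tf.keras.Model):
    def __init__(self, d_model, nhead, num_encoder_layers, num_decoder_layers):
        super().__init__()
        self.encoder = Encoder(d_model, nhead, num_encoder_layers)
        self.decoder = Decoder(d_model, nhead, num_decoder_layers)

    def call(self, inputs):
        # inputs: (source, target), each already embedded to (batch, seq_len, d_model)
        source, target = inputs
        return self.decoder(target, self.encoder(source))


# Define the encoder: self-attention with residual connections and layer normalization
class Encoder(tf.keras.layers.Layer):
    def __init__(self, d_model, nhead, num_layers):
        super().__init__()
        self.attention_layers = [MultiHeadAttention(d_model, nhead) for _ in range(num_layers)]
        self.norms = [tf.keras.layers.LayerNormalization(epsilon=1e-6) for _ in range(num_layers)]

    def call(self, inputs):
        outputs = inputs
        for attention, norm in zip(self.attention_layers, self.norms):
            outputs = norm(outputs + attention(outputs))
        return outputs


# Define the decoder: masked self-attention, then cross-attention to the encoder
class Decoder(tf.keras.layers.Layer):
    def __init__(self, d_model, nhead, num_layers):
        super().__init__()
        self.self_attention = [MultiHeadAttention(d_model, nhead) for _ in range(num_layers)]
        self.cross_attention = [MultiHeadAttention(d_model, nhead) for _ in range(num_layers)]
        self.norms = [tf.keras.layers.LayerNormalization(epsilon=1e-6) for _ in range(2 * num_layers)]

    def call(self, inputs, encoder_outputs):
        outputs = inputs
        for i, (self_attn, cross_attn) in enumerate(zip(self.self_attention, self.cross_attention)):
            outputs = self.norms[2 * i](outputs + self_attn(outputs, causal=True))
            outputs = self.norms[2 * i + 1](outputs + cross_attn(outputs, context=encoder_outputs))
        return outputs


# Define the multi-head attention mechanism
class MultiHeadAttention(tf.keras.layers.Layer):
    def __init__(self, d_model, nhead):
        super().__init__()
        if d_model % nhead != 0:
            raise ValueError("d_model must be divisible by nhead")
        self.nhead = nhead
        self.depth = d_model // nhead
        # Plain linear projections (no activation) for queries, keys, values and output
        self.query_linear_layer = tf.keras.layers.Dense(d_model)
        self.key_linear_layer = tf.keras.layers.Dense(d_model)
        self.value_linear_layer = tf.keras.layers.Dense(d_model)
        self.output_linear_layer = tf.keras.layers.Dense(d_model)

    def split_heads(self, x):
        # (batch, seq_len, d_model) -> (batch, nhead, seq_len, depth)
        batch_size = tf.shape(x)[0]
        x = tf.reshape(x, (batch_size, -1, self.nhead, self.depth))
        return tf.transpose(x, perm=[0, 2, 1, 3])

    def call(self, inputs, context=None, causal=False):
        # Self-attention by default; cross-attention when a context sequence is given
        context = inputs if context is None else context
        batch_size = tf.shape(inputs)[0]
        query = self.split_heads(self.query_linear_layer(inputs))
        key = self.split_heads(self.key_linear_layer(context))
        value = self.split_heads(self.value_linear_layer(context))
        # Scaled dot-product attention, computed separately for each head
        attention_scores = tf.matmul(query, key, transpose_b=True)
        attention_scores /= tf.math.sqrt(tf.cast(self.depth, attention_scores.dtype))
        if causal:
            # Mask out future positions so each token only attends to earlier ones
            seq_len = tf.shape(attention_scores)[-1]
            future = 1.0 - tf.linalg.band_part(tf.ones((seq_len, seq_len)), -1, 0)
            attention_scores += future * -1e9
        attention_weights = tf.nn.softmax(attention_scores, axis=-1)
        output = tf.matmul(attention_weights, value)  # weights @ values
        # Merge the heads back into (batch, seq_len, d_model)
        output = tf.transpose(output, perm=[0, 2, 1, 3])
        output = tf.reshape(output, (batch_size, -1, self.nhead * self.depth))
        return self.output_linear_layer(output)


# Initialize the model and run a forward pass on random stand-in embeddings
model = Transformer(d_model=256, nhead=8, num_encoder_layers=6, num_decoder_layers=6)
source = tf.random.normal((2, 10, 256))
target = tf.random.normal((2, 7, 256))
print(model((source, target)).shape)  # (2, 7, 256)
```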

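This is just a basic outline. To complete your Transformer model you will still need additional logic such as token embeddings, positional encodings, position-wise feed-forward sublayers, an output projection onto the vocabulary, and a training loop.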
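One piece of logic the outline leaves out is positional information: attention on its own is insensitive to token order, so Vaswani et al. add a positional encoding to the token embeddings. A minimal NumPy sketch of their fixed sinusoidal encoding follows; the function name is illustrative, it assumes an even `d_model`, and random vectors stand in for real token embeddings.

```python
import numpy as np


def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> np.ndarray:
    """Return a (seq_len, d_model) matrix of fixed sinusoidal position encodings.

    PE[pos, 2i]   = sin(pos / 10000^(2i / d_model))
    PE[pos, 2i+1] = cos(pos / 10000^(2i / d_model))
    """
    if d_model % 2 != 0:
        raise ValueError("this sketch assumes an even d_model")
    positions = np.arange(seq_len)[:, np.newaxis]        # (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[np.newaxis, :]  # (1, d_model / 2), the values 2i
    angles = positions / np.power(10000.0, even_dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)  # even feature indices
    pe[:, 1::2] = np.cos(angles)  # odd feature indices
    return pe


# The encoding is added (not concatenated) to embeddings of the same width
embeddings = np.random.default_rng(0).normal(size=(10, 256))
inputs_with_positions = embeddings + sinusoidal_positional_encoding(10, 256)
print(inputs_with_positions.shape)  # (10, 256)
```

Because the encoding is deterministic, it needs no training; learned position embeddings are a common alternative.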

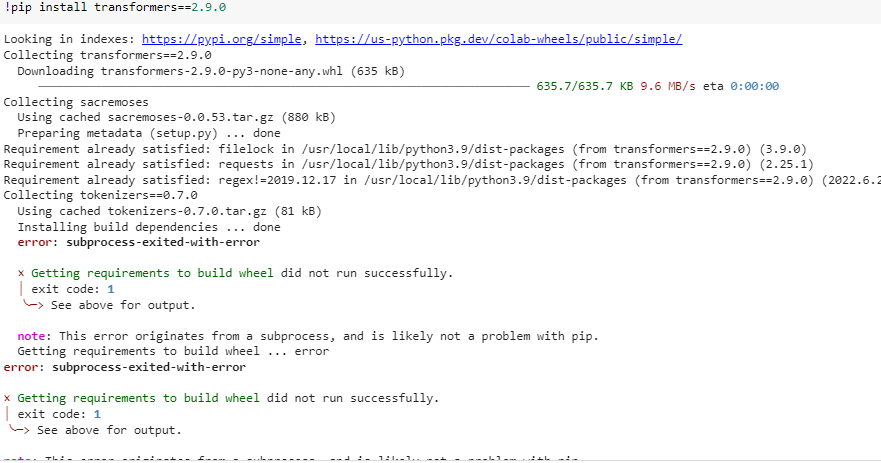

How to install Transformers in Python using pip

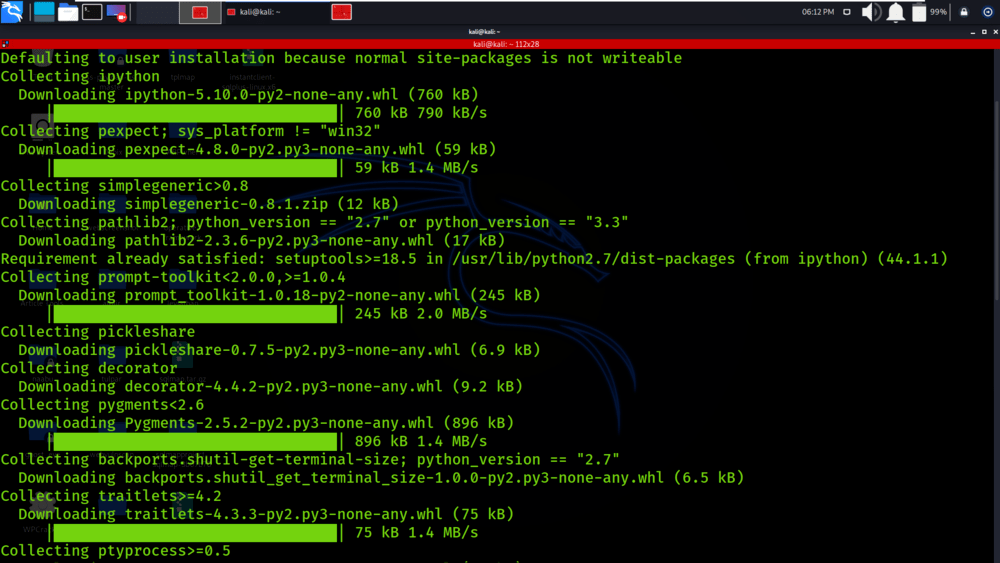

I'm happy to help you with that! Installing Transformers (Hugging Face's `transformers` library) in Python using pip is a straightforward process. Here's a step-by-step guide:

Step 1: Install Python (if not already installed)

Before installing the Transformers library, make sure Python 3 is installed on your system. If it isn't, follow these steps:

For Windows users: Download the installer from python.org and run it, making sure the option to add Python to PATH is checked. Once installed, open Command Prompt and run the command `python --version` to verify that Python is properly installed. You should see a response like "Python 3.x.y".

For macOS and Linux users: Install Python using your system's package manager (e.g., Homebrew on macOS or apt-get on Ubuntu-based systems). Once installed, open the terminal and run the command `python3 --version` to verify that Python is properly installed. You should see a response like "Python 3.x.y".

Step 2: Install pip (if not already installed)

If you don't have pip installed, you can install it using the following commands:

For Windows users: Run the command `python -m ensurepip --upgrade`. This installs the copy of pip that ships with Python.

For macOS and Linux users: Run `sudo apt-get install python3-pip` (on Ubuntu-based systems) or `brew install python` (with Homebrew on macOS, which installs pip together with Python).

Then verify that pip is properly installed by running `python -m pip --version` (or `python3 -m pip --version` on macOS and Linux). You should see a response that starts with "pip" followed by its version number.

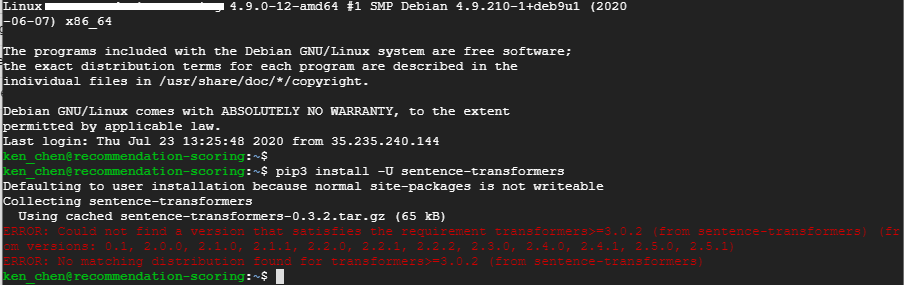

Step 3: Install Transformers using pip

Now it's time to install the Transformers library! Run the following command:

```shell
pip install transformers
```

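This might take a few minutes, depending on your internet connection and computer speed. Once installed, you should see a line like "Successfully installed ... transformers-" followed by the actual version number. Transformers also needs a deep learning framework to run models. The check in the next step uses PyTorch, so install it as well with `pip install torch`, or install both at once with `pip install "transformers[torch]"`.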

Step 4: Verify the installation

To verify that Transformers is properly installed, open a Python interpreter or create a new Python script and run:

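```python
import torch
import transformers
from transformers import AutoModelForCausalLM  # an ImportError here means the install is incomplete

print(f"Transformers {transformers.__version__} installed correctly!")
print("CUDA available:", torch.cuda.is_available())
```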

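This code snippet imports PyTorch and the AutoModelForCausalLM class from Transformers; if these imports succeed without errors, the installation is working and you're good to go! The `torch.cuda.is_available()` call only reports whether PyTorch can use a CUDA-capable GPU: a `False` result does not mean the installation failed, only that models will run on the CPU.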
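If you only want to confirm which versions pip installed, without importing the libraries themselves, the standard library's `importlib.metadata` module (Python 3.8+) can read the installed package metadata. A minimal sketch; the helper name `installed_version` is illustrative:

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a pip distribution, or None if it is missing."""
    try:
        return version(distribution)
    except PackageNotFoundError:
        return None


# Report the two packages used in the check above
for name in ("transformers", "torch"):
    found = installed_version(name)
    print(f"{name}: {found if found else 'not installed'}")
```

This only inspects package metadata, so it can confirm that pip installed a package but not that the package imports cleanly; the import check is still the more thorough test.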

Conclusion

Installing Transformers in Python using pip is a straightforward process. By following these steps, you should be able to successfully install the library on your system. Happy Hugging (and transforming)!