Python script to check url status and send email alerts

Here is a Python script that uses the requests library to check the status of URLs and send email alerts using Gmail:

import smtplib
import time
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText

import requests

# Set up your Gmail credentials
GMAIL_USERNAME = "[email protected]"
GMAIL_PASSWORD = "your_password"

# Set up the SMTP server
SMTP_SERVER = "smtp.gmail.com"
SMTP_PORT = 587

def check_url_status(url):
    """
    Check the status of a URL using the requests library.

    Return True if the URL responds with HTTP 200, False otherwise.
    """
    try:
        response = requests.get(url, timeout=5)
        return response.status_code == 200
    except requests.RequestException:
        # Covers connection errors, timeouts, and malformed URLs
        return False

def send_email_alert(subject, message):
    """
    Send an email alert using Gmail.
    """
    # Set up the email message
    msg = MIMEMultipart()
    msg['From'] = GMAIL_USERNAME
    msg['To'] = '[email protected]'
    msg['Subject'] = subject

    # Add the body of the email
    body = MIMEText(message, 'plain')
    msg.attach(body)

    # Connect to the SMTP server, upgrade to TLS, and send the message.
    # The with block closes the connection even if sending fails.
    with smtplib.SMTP(SMTP_SERVER, SMTP_PORT) as server:
        server.starttls()
        server.login(GMAIL_USERNAME, GMAIL_PASSWORD)
        server.sendmail(msg['From'], msg['To'], msg.as_string())

def monitor_urls(urls):
    """
    Monitor a list of URLs and send an email alert if any of them are down.
    """
    while True:
        for url in urls:
            if not check_url_status(url):
                subject = "URL DOWN: {}".format(url)
                message = "The URL {} is currently down.".format(url)
                send_email_alert(subject, message)
        time.sleep(60)  # Check every minute

# Example usage
urls_to_monitor = ['http://www.example.com', 'http://www.example2.com']
monitor_urls(urls_to_monitor)

This script monitors a list of URLs and sends an email alert whenever one of them is down. You can customize it by changing the GMAIL_USERNAME, GMAIL_PASSWORD, SMTP_SERVER, SMTP_PORT, and urls_to_monitor variables, along with the recipient address assigned to msg['To'] inside send_email_alert.

Here's how you can use this script:

1. Save this code in a file named url_monitor.py.
2. Install the requests library by running pip install requests.
3. Replace "[email protected]" with your actual Gmail email address.
4. Replace "your_password" with your Gmail password. Google accounts generally need an app password for SMTP logins rather than the regular account password.
5. Run the script using Python: python url_monitor.py
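Keeping the password in the source file makes it easy to leak, for example by committing the script to a repository. One common alternative is to read the credentials from environment variables. The following is a minimal sketch of that idea; the function name and the variable names GMAIL_USERNAME and GMAIL_APP_PASSWORD are assumptions made for illustration and are not part of the original script.

```python
import os


def load_gmail_credentials(env=None):
    """Return (username, password) read from environment variables.

    GMAIL_USERNAME and GMAIL_APP_PASSWORD are hypothetical variable names
    chosen for this sketch; use whatever names suit your setup.
    """
    # Allow a plain dict to be passed in, which keeps the function easy to test
    env = os.environ if env is None else env
    username = env.get("GMAIL_USERNAME")
    password = env.get("GMAIL_APP_PASSWORD")
    if not username or not password:
        raise RuntimeError(
            "Set GMAIL_USERNAME and GMAIL_APP_PASSWORD before running the monitor."
        )
    return username, password
```

With this in place, the two credential constants at the top of the script could be assigned from load_gmail_credentials() instead of string literals.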

This script will run indefinitely, checking each URL every minute and sending an email alert if any of them are down. You can stop it by pressing Ctrl+C.
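One consequence of this loop is that a URL that stays down produces a new email on every check, that is, one per minute. A possible refinement is to alert only when a URL's status changes. The sketch below shows one way to detect such changes; find_status_changes is a hypothetical helper, and treating a never-seen URL as previously up is an assumption of this sketch.

```python
def find_status_changes(previous, current):
    """Return {url: is_up} for URLs whose status differs from the last check.

    `previous` and `current` map each URL to True (up) or False (down).
    A URL missing from `previous` is assumed to have been up, so a site
    that is already down on the first check still counts as a change.
    """
    changes = {}
    for url, is_up in current.items():
        if previous.get(url, True) != is_up:
            changes[url] = is_up
    return changes


# Example: the first site has just gone down, the second is unchanged
previous = {"http://www.example.com": True, "http://www.example2.com": True}
current = {"http://www.example.com": False, "http://www.example2.com": True}
print(find_status_changes(previous, current))
# {'http://www.example.com': False}
```

Inside the monitoring loop, the current statuses would be collected with check_url_status, compared against the previous round, and then stored as the new previous state. A value of True in the result could be used to send a recovery notice.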

Remember to always handle exceptions and potential errors in a production environment!
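For instance, if the SMTP login fails or the network drops while an alert is being sent, the exception would end the monitoring loop. A minimal sketch of guarding against that follows; try_send_alert is a hypothetical wrapper, and the choice to print the error and carry on is an assumption rather than the only reasonable policy.

```python
import smtplib


def try_send_alert(send, subject, message):
    """Call send(subject, message); return True on success, False on failure.

    `send` is any callable with the same signature as send_email_alert.
    SMTP and network errors are reported and swallowed so that the
    monitoring loop can keep running.
    """
    try:
        send(subject, message)
    except (smtplib.SMTPException, OSError) as exc:
        print(f"Could not send alert '{subject}': {exc}")
        return False
    return True
```

In monitor_urls, the direct call to send_email_alert(subject, message) could then be replaced by try_send_alert(send_email_alert, subject, message).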

Feel free to modify or ask questions about this script!

How to extract text from a website using Python

Here are the steps to extract text from a website using Python:

Step 1: Choose a Library

Python has several libraries that can be used for web scraping, including Beautiful Soup and Scrapy. For this example, we will use Beautiful Soup.

Step 2: Install Beautiful Soup

You can install Beautiful Soup by running the following command in your terminal or command prompt:

pip install beautifulsoup4

Step 3: Send an HTTP Request

Next, you need to send an HTTP request to the website you want to scrape. You can use the requests library for this (install it with pip install requests if it is not already available).

import requests

url = "https://www.example.com"

response = requests.get(url)

Step 4: Parse the HTML Content

Once you have received the response, you need to parse the HTML content using Beautiful Soup.

from bs4 import BeautifulSoup

soup = BeautifulSoup(response.content, 'html.parser')

Step 5: Find and Extract the Text

Now that you have the parsed HTML content, you can use Beautiful Soup's methods to find the text you're interested in. For example, if you want to extract all the paragraphs from the webpage:

text = ''
for paragraph in soup.find_all('p'):
    text += paragraph.text + '\n'

Step 6: Remove Unwanted Characters

Sometimes, the extracted text may contain unwanted characters such as newline characters or extra spaces. You can use Python's string methods to remove these characters:

text = text.replace('\n', ' ').replace('  ', ' ')
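Extracted text often contains longer runs of spaces, tabs, and blank lines. A regular expression can collapse any run of whitespace in a single pass. The sketch below uses only the standard library; normalize_whitespace is a hypothetical helper name.

```python
import re


def normalize_whitespace(text):
    """Collapse every run of whitespace into one space and trim the ends."""
    # \s+ matches one or more spaces, tabs, or newlines
    return re.sub(r"\s+", " ", text).strip()


print(normalize_whitespace("First  paragraph.\n\nSecond\tparagraph. \n"))
# First paragraph. Second paragraph.
```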

Step 7: Handle Any Errors

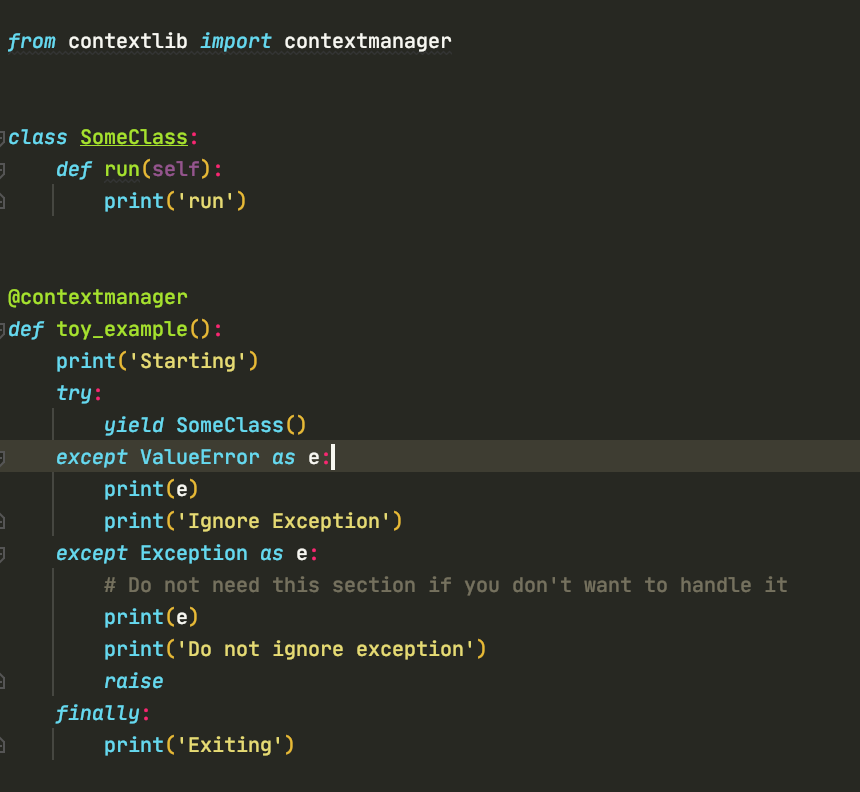

When scraping websites, you may encounter errors such as broken links or missing data. It's a good idea to handle these errors by adding error handling code.

Here is the complete Python script:

import requests
from bs4 import BeautifulSoup

url = "https://www.example.com"

try:
    # A timeout keeps the script from hanging on an unresponsive server
    response = requests.get(url, timeout=10)
    if response.status_code == 200:
        soup = BeautifulSoup(response.content, 'html.parser')

        # Collect the text of every paragraph, one per line
        text = ''
        for paragraph in soup.find_all('p'):
            text += paragraph.text + '\n'

        # Replace newlines with spaces and collapse double spaces
        text = text.replace('\n', ' ').replace('  ', ' ')
        print(text)
    else:
        print(f"Failed to get the webpage. Status code: {response.status_code}")
except Exception as e:
    print(f"An error occurred: {str(e)}")

Tips and Variations

Here are some tips and variations for web scraping:

Handle Cookies: Some websites use cookies to track user sessions. You may need to handle these cookies by sending the cookie data with your HTTP request.

Use Regular Expressions: You can use regular expressions to extract specific patterns from the webpage, such as phone numbers or email addresses.

Handle JavaScript-generated Content: Many websites use JavaScript to generate content dynamically. You may need to use a library like Selenium to render the JavaScript and then scrape the resulting HTML content.

Remember that web scraping should be done responsibly and in accordance with the website's terms of service and robots.txt file.
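Checking robots.txt can be automated with the standard library's urllib.robotparser module. The sketch below parses rules from a string so that it runs without network access; is_allowed, SAMPLE_ROBOTS, and the "my-scraper" user agent are names made up for this example. For a live site, RobotFileParser can instead be pointed at the site's robots.txt with set_url() and read().

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content used only for this illustration
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(SAMPLE_ROBOTS, "my-scraper", "https://www.example.com/index.html"))
print(is_allowed(SAMPLE_ROBOTS, "my-scraper", "https://www.example.com/private/data.html"))
```

The first call reports that the page may be fetched, while the second reports that it may not, because the path falls under the disallowed /private/ prefix.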