Does Python support parallel programming?


Python supports parallel programming through various libraries and frameworks that enable developers to take advantage of multiple CPU cores, GPUs, or distributed computing resources. Here are some ways to achieve parallelism in Python:

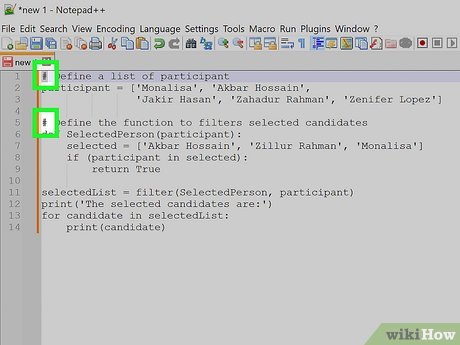

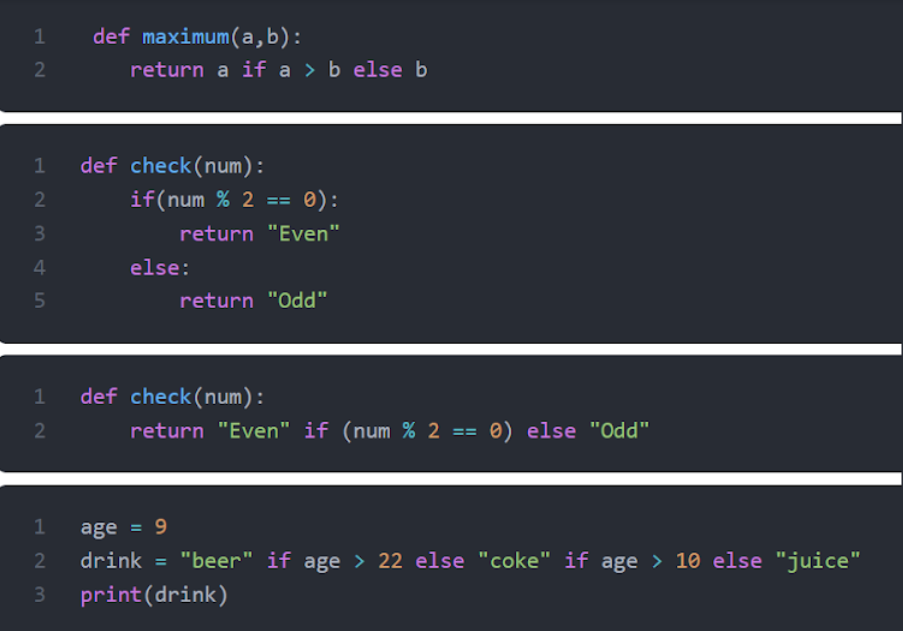

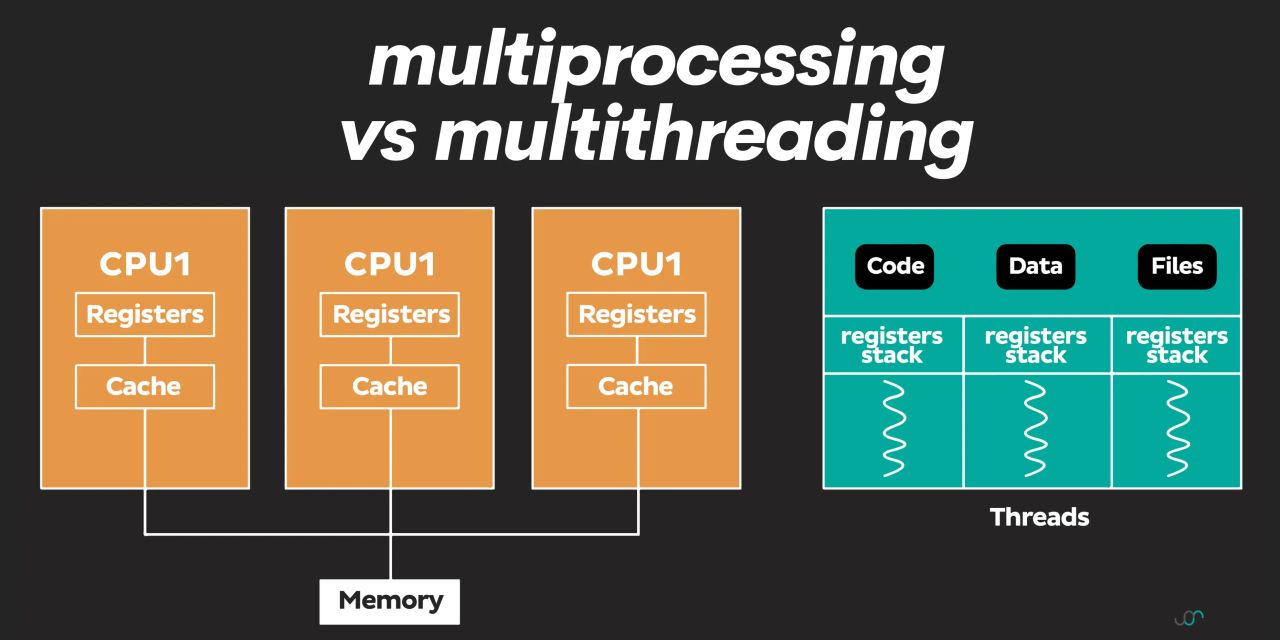

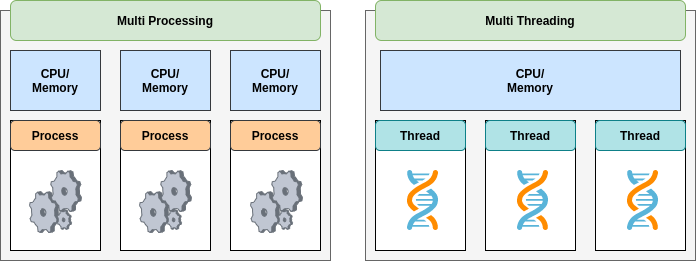

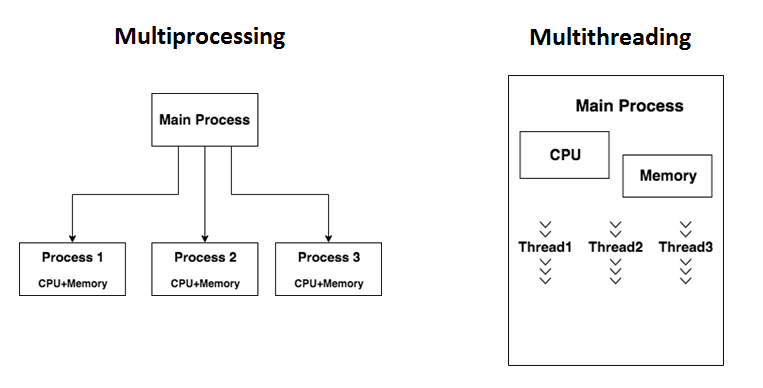

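Multi-processing (multiprocessing): Python's multiprocessing module lets you create multiple processes that run in parallel, each with its own memory space; data can be shared between them through a Manager process, queues, or pipes. Because each process has its own interpreter, this is useful for CPU-bound tasks, such as scientific simulations or heavy data processing.

Example:

    from multiprocessing import Pool

    def worker(x):
        return x * x

    # The __main__ guard stops child processes from re-running this code when
    # they re-import the module (needed with the spawn start method, e.g. Windows and macOS).
    if __name__ == '__main__':
        with Pool(processes=4) as pool:  # the pool is cleaned up when the block exits
            result = pool.map(worker, [1, 2, 3, 4])
        print(result)  # Output: [1, 4, 9, 16]

Multi-threading (threading): Python's threading module enables you to create multiple threads that run concurrently within the same process and share its memory. In standard CPython, the Global Interpreter Lock (GIL) lets only one thread execute Python bytecode at a time, so threads are best suited for I/O-bound tasks, such as web scraping or network requests.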

Example:

    import threading

    def worker(n):
        print(f"Thread {n} started")
        for i in range(5):
            print(f"Thread {n}: step {i}")

    threads = []
    for n in range(4):
        t = threading.Thread(target=worker, args=(n,))
        threads.append(t)
        t.start()

    # Wait for every thread to finish; output order varies between runs.
    for t in threads:
        t.join()

Concurrent.futures: The concurrent.futures module provides a high-level interface for running tasks asynchronously using a pool of threads (ThreadPoolExecutor) or a pool of processes (ProcessPoolExecutor).

Example:

    import concurrent.futures

    def worker(x):
        return x * x

    if __name__ == '__main__':
        # The executor starts worker processes and shuts them down when the block exits.
        with concurrent.futures.ProcessPoolExecutor() as executor:
            results = list(executor.map(worker, [1, 2, 3, 4]))
        print(results)  # Output: [1, 4, 9, 16]

Distributed computing: Python has several libraries and frameworks for distributed computing, such as:

- Dask: A flexible parallel computing library that allows you to scale up your computations.
- Ray: A high-performance distributed computing framework that can run tasks on multiple machines.

Example (using Dask):

    import dask.dataframe as dd

    # Read the CSV lazily into a partitioned Dask DataFrame.
    df = dd.read_csv("data.csv")

    # Perform some computation...
    result = df.groupby('column').sum().compute()  # compute() triggers the parallel execution

    print(result)

GPU-accelerated computing: Python has libraries like:

- TensorFlow: A popular open-source machine learning framework that can run computations on GPUs.
- PyTorch: Another widely used deep learning library with GPU support.

Example (using TensorFlow):

    import tensorflow as tf

    # Request the first GPU for these operations.
    with tf.device('/GPU:0'):
        x = tf.constant([1, 2, 3])
        y = tf.square(x)

    print(y.numpy())  # Output: [1 4 9]

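In summary, Python offers several routes to parallelism: processes for CPU-bound work, threads for I/O-bound work, Dask and Ray for scaling beyond one machine, and TensorFlow or PyTorch for GPU acceleration. Choosing the right tool for the workload is what makes Python a good choice for building scalable and efficient applications.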
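The multiprocessing paragraph above mentions sharing data through a Manager process. Because each process has its own memory, an ordinary dict modified in a child process is invisible to the parent; a dict created by a `Manager` is a proxy whose operations are forwarded to the manager's server process. A minimal sketch, where `record_square` and `squares_via_manager` are hypothetical helper names:

```python
from multiprocessing import Manager, Process


def record_square(shared, n):
    # Runs in a child process. `shared` is a proxy, so this assignment is
    # sent to the Manager's server process instead of local memory.
    shared[n] = n * n


def squares_via_manager(numbers):
    with Manager() as manager:
        shared = manager.dict()
        procs = [Process(target=record_square, args=(shared, n)) for n in numbers]
        for p in procs:
            p.start()
        for p in procs:
            p.join()
        # Copy into a plain dict before the Manager shuts down; sort by key
        # because the child processes may finish in any order.
        return dict(sorted(shared.items()))


if __name__ == "__main__":
    print(squares_via_manager([1, 2, 3, 4]))  # {1: 1, 2: 4, 3: 9, 4: 16}
```

Every access to a proxy is a round trip to another process, so this approach suits small pieces of shared state rather than tight loops.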

Python concurrent vs multiprocessing

The age-old debate: concurrency vs multiprocessing in Python! Let's dive into the details.

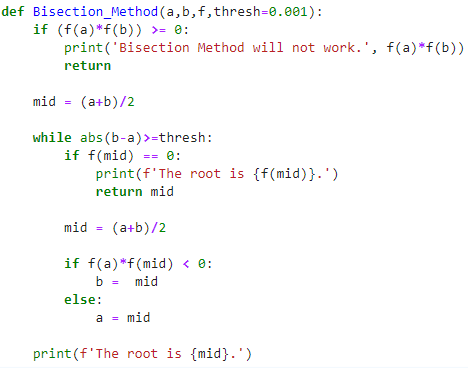

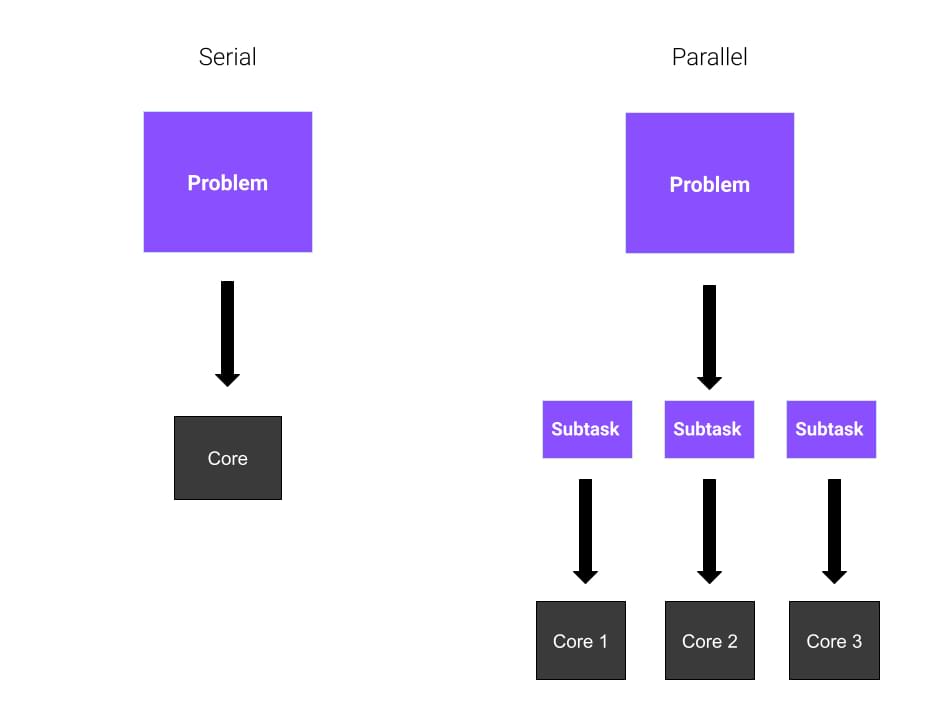

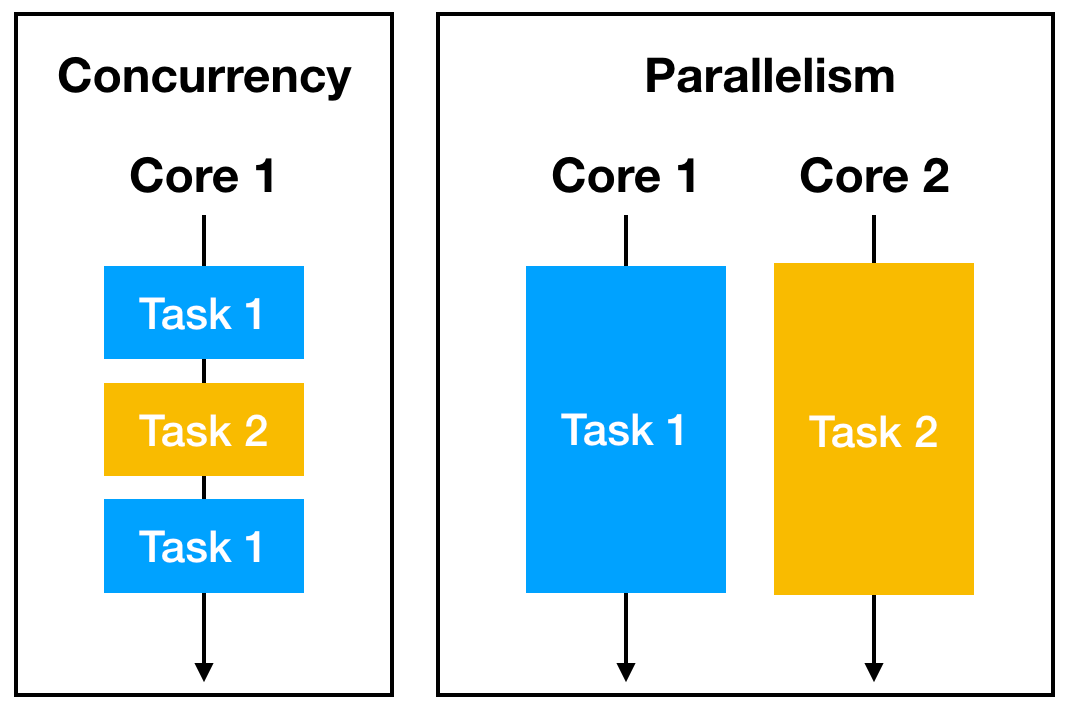

Concurrency vs Multiprocessing

When it comes to parallel processing, developers often face a crucial decision: should I use concurrency or multiprocessing? The answer depends on your specific needs and problem domain.
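One practical way to answer that question for your own workload is to measure it. Because `concurrent.futures` gives thread pools and process pools the same interface, the same CPU-bound function can be timed under both. A minimal sketch; `count_primes` and `run_with` are hypothetical names and the workload size is arbitrary. On the standard CPython build, expect little or no speedup from the thread pool because of the GIL (discussed in the Concurrency section), while the process pool can spread the work across cores; actual timings depend on your machine.

```python
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def count_primes(limit):
    # Deliberately CPU-bound: trial division with no I/O.
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def run_with(executor_cls, jobs):
    # Identical code path for threads and processes; only the executor class changes.
    start = time.perf_counter()
    with executor_cls(max_workers=4) as executor:
        results = list(executor.map(count_primes, jobs))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    jobs = [50_000] * 4
    for cls in (ThreadPoolExecutor, ProcessPoolExecutor):
        results, elapsed = run_with(cls, jobs)
        print(f"{cls.__name__}: {results[0]} primes per job, {elapsed:.2f}s")
```

For I/O-bound work the picture changes: threads usually keep up with processes while costing less to start.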

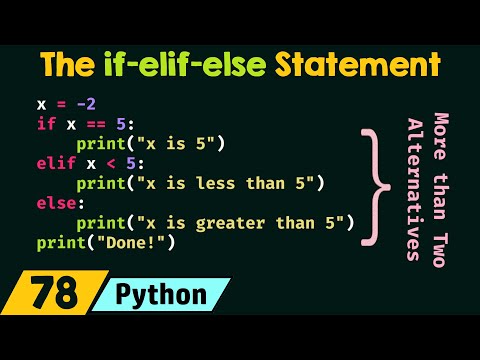

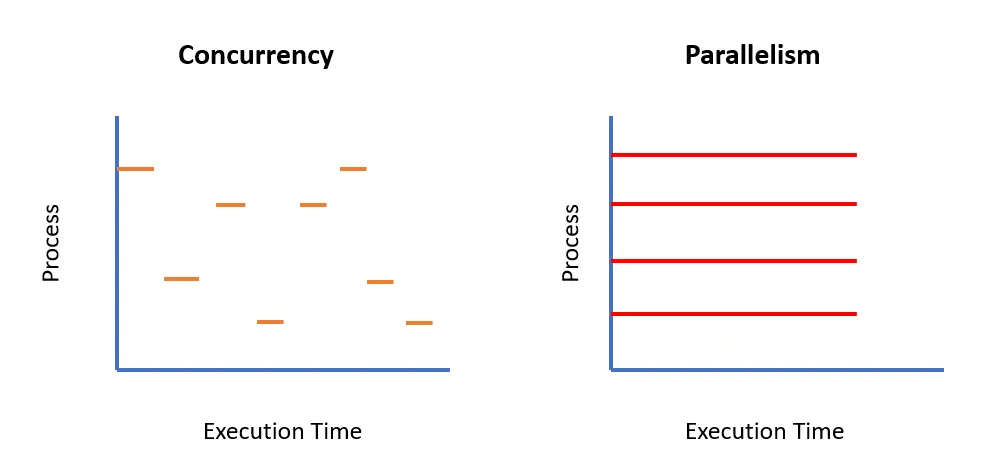

Concurrency

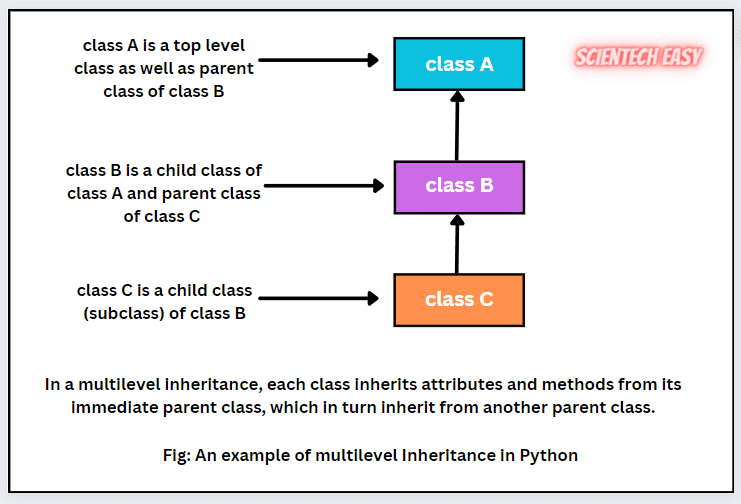

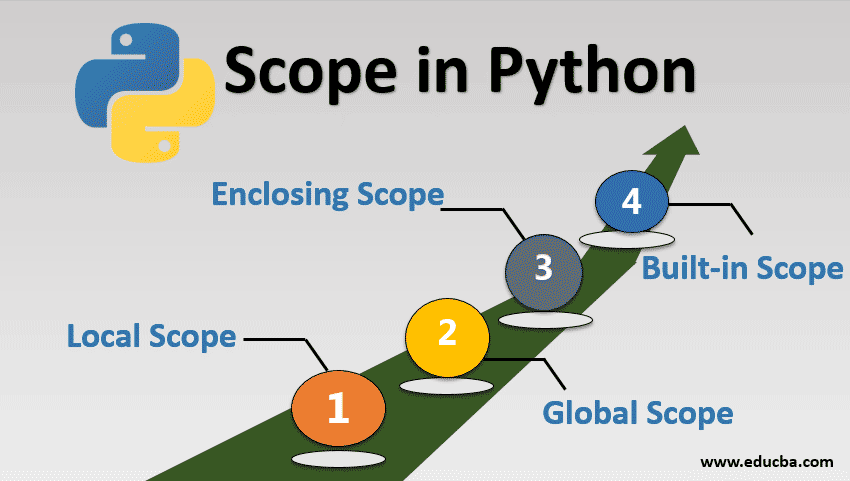

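Concurrency means that multiple tasks make progress during overlapping periods of time; they do not necessarily execute at the same instant. When those tasks are threads, they also share the same memory space. In Python, you can achieve concurrency using: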

- Threading: Create multiple threads using the threading module. Each thread runs a separate function, and they share the same memory.
- Async I/O: Use libraries like asyncio or trio to perform asynchronous input/output operations.
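To illustrate the async I/O option, the sketch below uses `asyncio.gather` to run several coroutines concurrently on a single thread. `fetch` is a hypothetical stand-in that simulates network latency with `asyncio.sleep`; a real program would await an async HTTP or database client instead.

```python
import asyncio
import time


async def fetch(name, delay):
    # Hypothetical I/O call: awaiting asyncio.sleep hands control back to the
    # event loop, so the other coroutines run while this one is "waiting".
    await asyncio.sleep(delay)
    return f"{name} done"


async def main():
    start = time.perf_counter()
    # gather() runs the coroutines concurrently and returns their results
    # in argument order, regardless of which finishes first.
    results = await asyncio.gather(fetch("a", 0.3), fetch("b", 0.3), fetch("c", 0.3))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = asyncio.run(main())
    print(results)  # ['a done', 'b done', 'c done']
    print(f"elapsed: {elapsed:.1f}s")  # about 0.3s rather than 0.9s, since the waits overlap
```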

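Concurrency is great for:

- I/O-bound tasks (e.g., web scraping or network requests).
- Handling many small tasks or cooperative multitasking.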
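The I/O-bound case is where threads pay off: while one thread is blocked waiting on the network or disk, the others keep running. A minimal sketch with `ThreadPoolExecutor` and `as_completed`, where `download` is a hypothetical function that simulates a blocking request with `time.sleep` (which, like real socket I/O, releases the GIL while waiting). The URLs are placeholders and nothing is actually fetched.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def download(url):
    # Hypothetical blocking request: time.sleep stands in for network latency
    # and releases the GIL while it waits, as real socket I/O does.
    time.sleep(0.2)
    return url, f"<body of {url}>"


def download_all(urls, max_workers=8):
    bodies = {}
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        futures = [executor.submit(download, url) for url in urls]
        # as_completed yields each future as soon as it finishes,
        # which is not necessarily submission order.
        for future in as_completed(futures):
            url, body = future.result()
            bodies[url] = body
    return bodies


if __name__ == "__main__":
    urls = [f"https://example.com/page{i}" for i in range(8)]
    start = time.perf_counter()
    bodies = download_all(urls)
    elapsed = time.perf_counter() - start
    # Eight 0.2 s waits overlap across eight threads instead of adding up to 1.6 s.
    print(f"fetched {len(bodies)} pages in {elapsed:.1f}s")
```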

However, concurrency has its limitations:

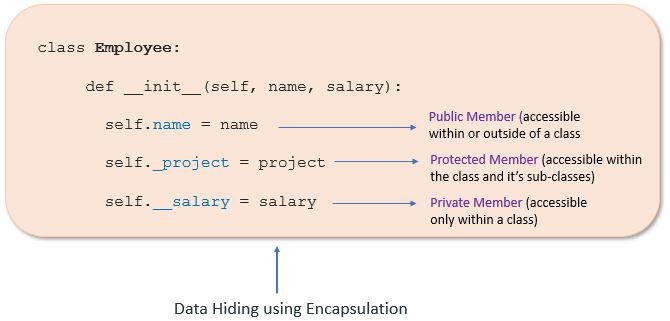

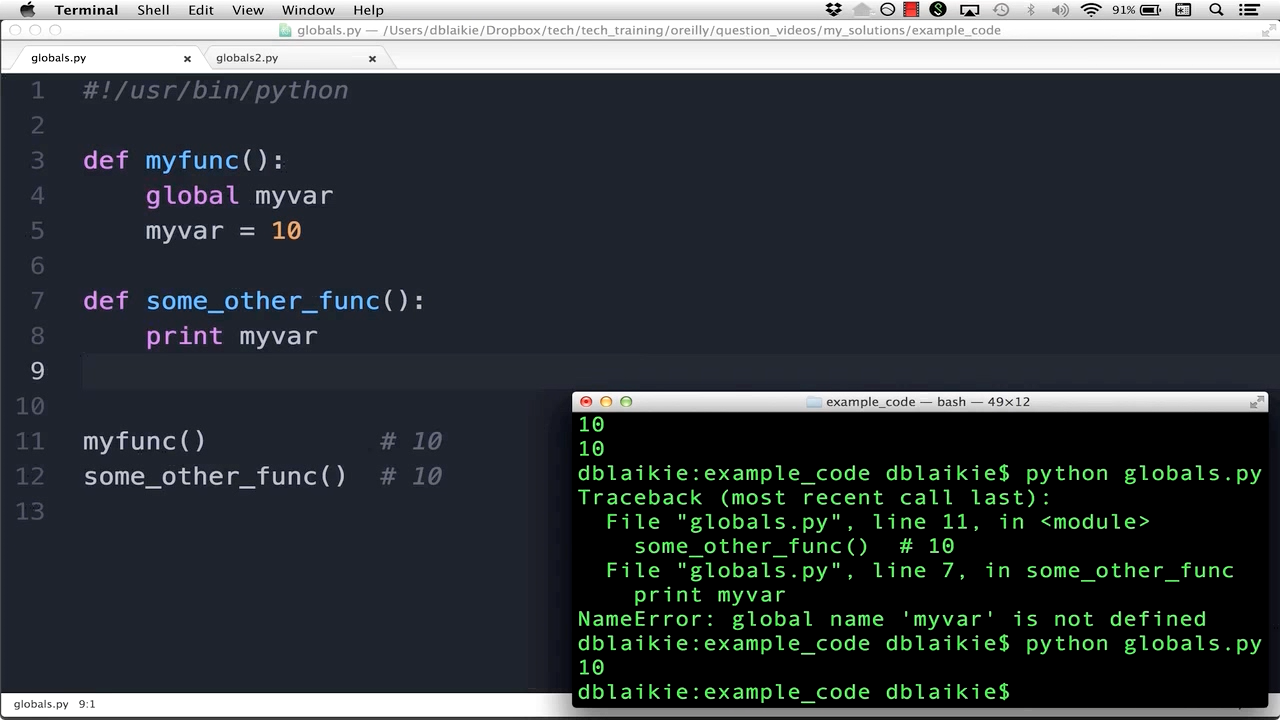

- Python's Global Interpreter Lock (GIL) prevents threads from executing Python bytecode in parallel in the standard CPython interpreter, so CPU-bound code gains little or nothing from extra threads.
- Context switching between threads can introduce additional overhead.

Multiprocessing

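Multiprocessing is when you create separate processes that run concurrently, each with its own memory space. In Python, you can use the multiprocessing module to spawn multiple processes. Because each process runs its own interpreter with its own GIL, processes can execute Python code in parallel on multiple CPU cores.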
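Because each process has its own memory space, a child process cannot simply append to a list owned by the parent; results have to be sent back explicitly. A minimal sketch using `multiprocessing.Process` and `multiprocessing.Queue`, where `square_into` and `parallel_squares` are hypothetical names:

```python
from multiprocessing import Process, Queue


def square_into(queue, n):
    # Runs in a child process with its own memory; the result is pickled
    # and sent back to the parent through the queue.
    queue.put((n, n * n))


def parallel_squares(numbers):
    queue = Queue()
    procs = [Process(target=square_into, args=(queue, n)) for n in numbers]
    for p in procs:
        p.start()
    # Drain the queue before joining: a child that has put data on a queue
    # may not exit until that data has been consumed.
    results = dict(queue.get() for _ in procs)
    for p in procs:
        p.join()
    return [results[n] for n in numbers]


if __name__ == "__main__":
    print(parallel_squares([1, 2, 3, 4]))  # [1, 4, 9, 16]
```

In practice a `Pool` or `ProcessPoolExecutor` handles this plumbing for you; the explicit version just makes the separate-memory model visible.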

Multiprocessing is excellent for:

- CPU-bound tasks (e.g., scientific simulations)
- Handling large datasets or computations

However, multiprocessing also has its drawbacks:

- Creating and managing processes incurs overhead.
- Data must be explicitly shared between processes using mechanisms like queues or pipes.

When to Choose Each

Consider the following factors when deciding between concurrency and multiprocessing:

- Task characteristics: CPU-bound tasks are better suited for multiprocessing, while I/O-intensive tasks (e.g., web scraping) usually benefit from concurrency.
- Memory constraints: Threads share one memory space, so concurrency is usually lighter on memory. Each process has its own interpreter and memory space, so multiprocessing can multiply memory usage, especially when large data must be copied to every worker.
- Communication requirements: If tasks need to share a lot of data, concurrency is simpler, because threads see the same objects (shared mutable state should be protected with locks). Processes have separate memory spaces, so data must be serialized and passed through queues, pipes, or a Manager.
- Performance expectations: Concurrency can provide faster response times for I/O-bound tasks, while multiprocessing is generally better suited for CPU-bound tasks.

Conclusion

In conclusion, concurrency and multiprocessing are both valuable tools in the Python developer's toolbox. Concurrency excels at I/O-bound work, such as handling many small tasks or cooperative multitasking, while multiprocessing shines when dealing with CPU-bound tasks or large datasets. By understanding the strengths and weaknesses of each approach, you'll be better equipped to tackle complex problems and get the best performance out of your code.

Now, go forth and conquer those parallel processing challenges!