Python rate limit multiprocessing

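Python's standard library has no rate-limiting module, so the usual starting point is a third-party package. The example below uses one named rate-limits (imported as rate_limits). The RateLimit constructor shown here is unverified, and the limiter object is never applied to worker, so the loop is paced only by the time.sleep(1) call: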

import rate_limits

def worker(url):

Simulate some work being done here

time.sleep(1)

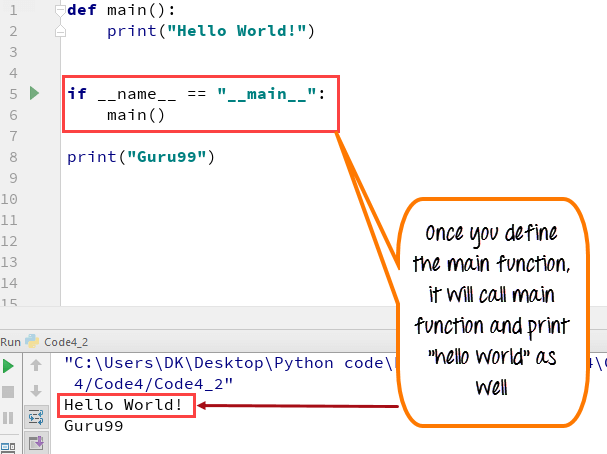

if name == 'main':

rl = rate_limits.RateLimit('my_app', 'http://example.com')

for i in range(5):

worker(i)

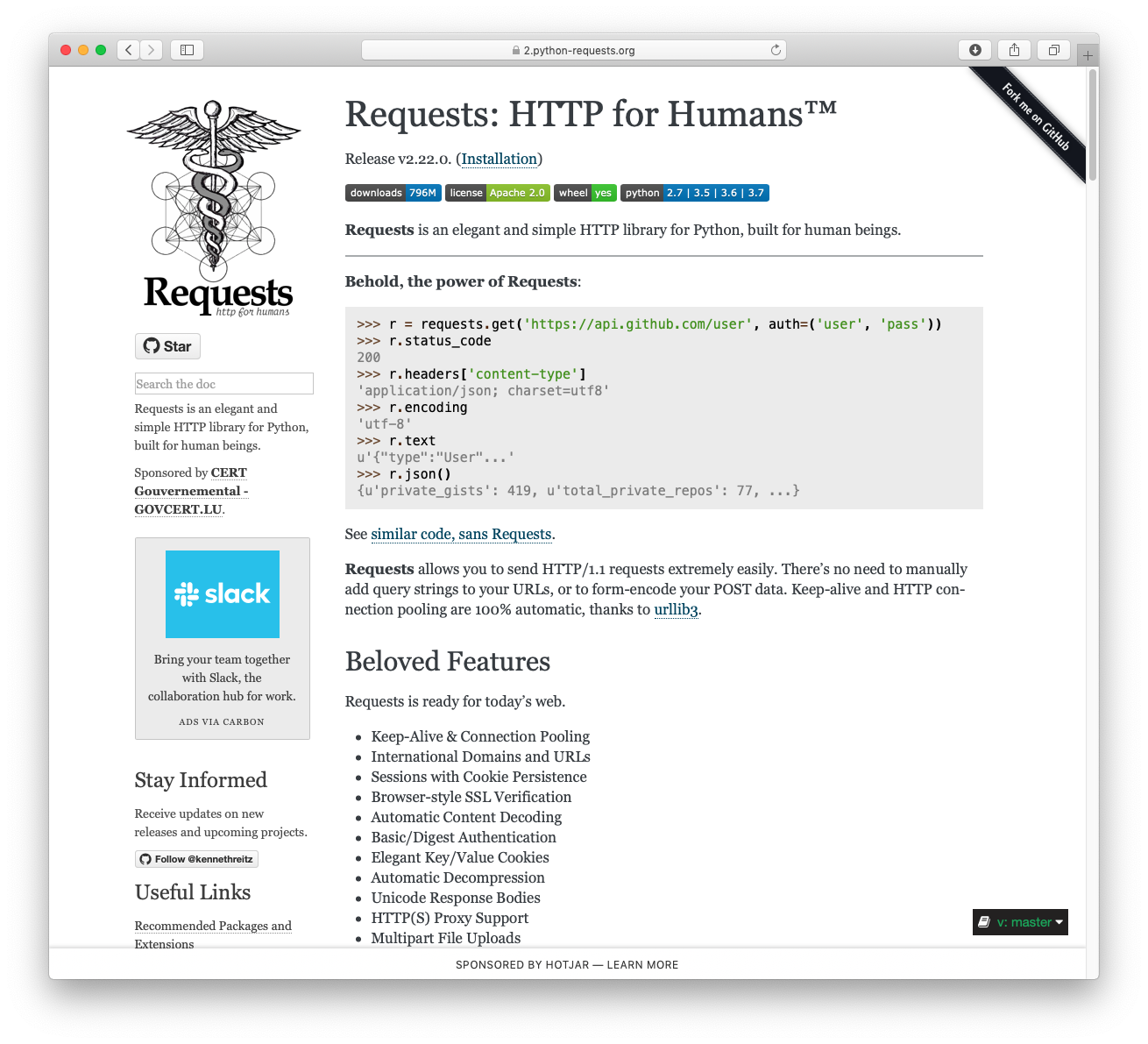

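However, rate-limits is reportedly no longer maintained and has some limitations. The requests library has no rate limiting of its own, so a common alternative is to wrap the function that makes the request in a decorator. The decorator below only issues a request before each call; the pacing still comes from time.sleep(1) inside worker: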

    import time
    from functools import wraps

    import requests


    def limit_rate(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            # Simulate a request here; this call does not throttle anything by itself.
            requests.get('http://example.com')
            return func(*args, **kwargs)
        return wrapper


    @limit_rate
    def worker(url):
        # Simulate some work being done here
        time.sleep(1)


    if __name__ == '__main__':
        for i in range(5):
            worker(i)
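For a decorator that actually enforces a rate, one option is to record when the previous call started and sleep for whatever remains of the interval. The following is a minimal sketch using only the standard library; min_interval and its seconds parameter are illustrative names, not part of requests or any other package.

```python
import time
from functools import wraps


def min_interval(seconds):
    """Decorator factory (hypothetical name): calls start at least `seconds` apart."""
    def decorator(func):
        last_start = None  # time.monotonic() of the previous call, per decorated function

        @wraps(func)
        def wrapper(*args, **kwargs):
            nonlocal last_start
            if last_start is not None:
                remaining = seconds - (time.monotonic() - last_start)
                if remaining > 0:
                    time.sleep(remaining)  # wait out the rest of the interval
            last_start = time.monotonic()
            return func(*args, **kwargs)

        return wrapper
    return decorator


@min_interval(0.2)
def worker(item):
    # Placeholder for real work such as an HTTP request.
    return item * 2


if __name__ == "__main__":
    start = time.monotonic()
    results = [worker(i) for i in range(3)]
    print(results, f"{time.monotonic() - start:.2f}s")
```

The state lives in the decorated function's closure, so the limit applies within one process only and is not thread-safe. It does not coordinate separate multiprocessing workers.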

Both examples above run in a single process, and neither shares a limit between workers. If your application needs fine-grained control over rates and concurrent access, it might be a good idea to hand the work to a task queue such as celery, which can enforce a rate limit for you.

Here is an example of how to use celery to achieve rate limiting:

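    import time

    from celery import shared_task


    # Requires a configured Celery app, a broker, and a running worker process.
    @shared_task(rate_limit='1/s')
    def worker(url):
        # Simulate some work being done here
        time.sleep(1)


    if __name__ == '__main__':
        for i in range(5):
            # .delay() queues the task; calling worker(i) directly would run it
            # in this process and bypass rate_limit.
            worker.delay(i)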

Celery is used here because Python's standard library has no ready-made rate limiter for multiprocessing. Without celery or a similar library, you have to build one yourself from primitives such as multiprocessing.Lock and multiprocessing.Value.

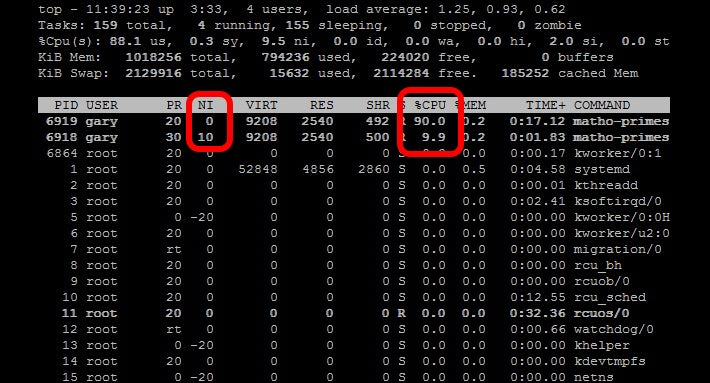

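In the example above, @shared_task(rate_limit='1/s') lets each celery worker instance start at most one worker task per second; a task may take longer than that to complete. If more tasks are queued within the same second, celery holds them back until the limit allows the next one to start, not until the previous one completes. The limit applies per worker instance, so several worker instances running together can exceed it.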
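If celery is more than you need, a limit can be shared between multiprocessing workers with a lock and a shared value. The sketch below is one way to do that under stated assumptions: SharedRateLimiter, acquire and run are hypothetical names, the workers come from multiprocessing.Pool, and wall-clock time (time.time) is used because its value is comparable across processes.

```python
import multiprocessing as mp
import time


class SharedRateLimiter:
    """Hypothetical helper: at most one acquire() returns per `interval` seconds,
    counted across every process that shares this object."""

    def __init__(self, interval):
        self.interval = interval
        self._lock = mp.Lock()
        # Earliest wall-clock time at which the next call may start.
        self._next_slot = mp.Value("d", 0.0, lock=False)

    def acquire(self):
        with self._lock:
            now = time.time()
            slot = max(now, self._next_slot.value)
            self._next_slot.value = slot + self.interval
        # Sleep outside the lock so other processes can reserve later slots.
        delay = slot - time.time()
        if delay > 0:
            time.sleep(delay)


_limiter = None  # set once in each worker process by _init_worker


def _init_worker(limiter):
    global _limiter
    _limiter = limiter


def worker(item):
    _limiter.acquire()
    # Placeholder for real work such as an HTTP request.
    return item, time.time()


def run(n_items=4, interval=0.2, processes=2):
    limiter = SharedRateLimiter(interval)
    # The limiter is handed over at process creation, which is when
    # multiprocessing allows locks and shared values to be passed.
    with mp.Pool(processes, initializer=_init_worker, initargs=(limiter,)) as pool:
        return pool.map(worker, range(n_items))


if __name__ == "__main__":
    for item, started in run():
        print(item, f"{started:.2f}")
```

Each process reserves its slot while holding the lock and then waits for it without the lock, so the start times end up roughly interval seconds apart no matter how many processes are in the pool. A wall-clock adjustment during a run would distort the spacing, which is the price of using time.time here.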

What is the ratelimiter module in Python?

ratelimiter is a third-party package, installed separately rather than shipped with Python. It caps how often a piece of code can run, which matters when you call APIs that enforce request quotas or when your own service has to cope with bursts of traffic.

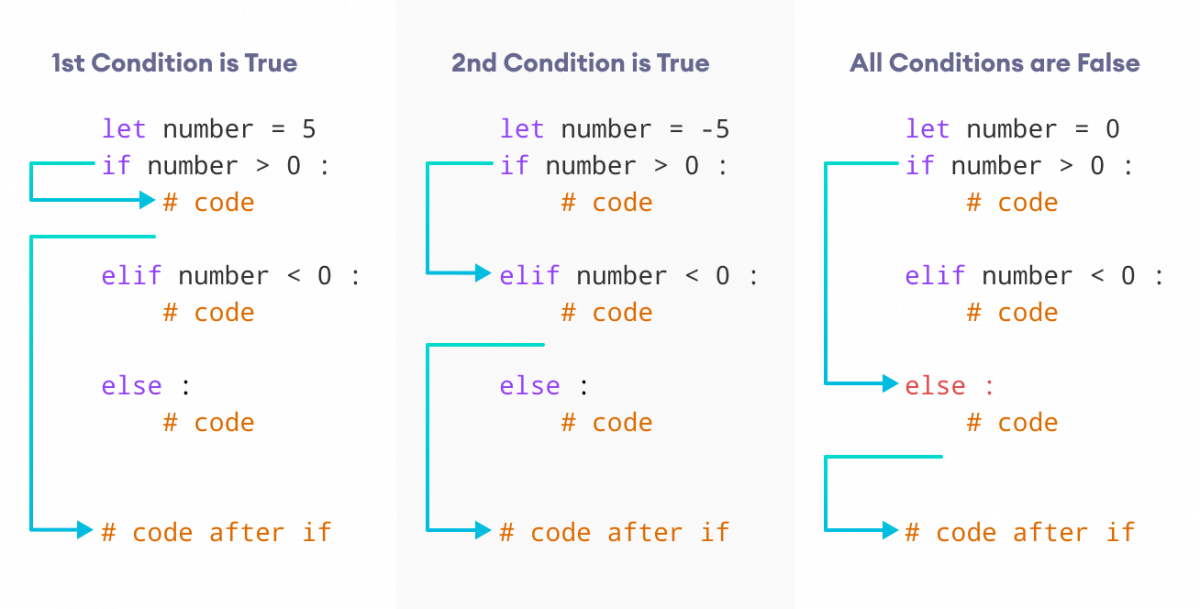

Rate limiting is the process of controlling how many requests can be made within a specific timeframe. It helps prevent abuse, ensures fair usage, and keeps the system from being overwhelmed by more requests than it can serve. In other words, it puts a lid on your application's request intake.
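To make "how many requests within a specific timeframe" concrete, here is a minimal sliding-window sketch using only the standard library. SlidingWindowLimiter, allow and the clock parameter are illustrative names chosen for this example, not the API of any package mentioned here.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Hypothetical helper: allows at most `max_calls` calls in any
    window of `period` seconds."""

    def __init__(self, max_calls, period, clock=time.monotonic):
        self.max_calls = max_calls
        self.period = period
        self._clock = clock      # injectable so the limiter can be tested without sleeping
        self._calls = deque()    # timestamps of accepted calls, oldest first

    def allow(self):
        """Return True and record the call if it fits the limit, else False."""
        now = self._clock()
        # Drop timestamps that have left the window.
        while self._calls and now - self._calls[0] >= self.period:
            self._calls.popleft()
        if len(self._calls) < self.max_calls:
            self._calls.append(now)
            return True
        return False


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(max_calls=3, period=1.0)
    # The first three calls fit the window; later ones are rejected
    # until a full second has passed since the oldest accepted call.
    print([limiter.allow() for _ in range(5)])
```

This version rejects excess calls instead of delaying them. Whether to reject, queue, or sleep is a design choice that depends on the caller.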

The ratelimiter package exposes a RateLimiter class that allows at most max_calls calls per period seconds and can be used either as a decorator or as a context manager. For example, you could wrap your API endpoint with a rate-limited decorator like this:

    from ratelimiter import RateLimiter


    @RateLimiter(max_calls=2, period=1)  # at most 2 calls per second
    def my_api_endpoint(request):
        # Your API code here...
        ...

In this example, the my_api_endpoint function is limited to 2 calls per second; further calls block until the window allows them. Rate limiting caps how often code is called, not how many CPU cores it uses, so choose max_calls and period to match the quota of the service you are calling.

To conclude, the ratelimiter package offers a small, simple way to add rate limiting to code that runs in one process. For a limit that must hold across several processes, use a shared limiter or a task queue such as celery.