What is tuning in Python?

I'm happy to explain the concept of "tuning" in Python!

In machine learning and deep learning, tuning refers to the process of adjusting the hyperparameters of a model to achieve better performance or accuracy. In other words, it's about fine-tuning the settings that control how well your model works.

Hyperparameters are settings that you choose before training a model, as opposed to parameters such as weights, which the model learns during training. For instance, in a neural network, some common hyperparameters include:

- The number of hidden layers and their sizes (e.g., 128 neurons in the first layer, then 256, etc.)
- The activation functions used for each layer
- The learning rate (the step size of each weight update during training)
- Regularization techniques like dropout or L1/L2 regularization

When you create a neural network or other machine learning model in Python using popular libraries like TensorFlow, Keras, or PyTorch, you typically need to set these hyperparameters before training. However, the optimal values for these hyperparameters can depend on various factors, such as:

- The size and complexity of your dataset
- The type of problem you're trying to solve (e.g., classification, regression, etc.)
- The specific model architecture

That's where tuning comes in! By experimenting with different combinations of hyperparameter values, you can find the sweet spot that maximizes your model's performance. This might involve:

- Grid search: Trying every combination of values from a predefined grid of hyperparameter values.
- Random search: Sampling a fixed number of random hyperparameter combinations to reduce computational cost.
- Bayesian optimization: Using a probabilistic model of the results seen so far to decide which combinations to try next, so that the search concentrates on promising regions.

To make this process more efficient, the Python ecosystem offers several libraries for automated hyperparameter tuning:

- Optuna: A hyperparameter optimization library whose default sampler (TPE) is a form of Bayesian optimization. It works with many machine learning frameworks, including TensorFlow and PyTorch.
- Hyperopt: A Python library that lets you define a search space and explore it with random search or the Tree-structured Parzen Estimator (TPE) algorithm.
- Scikit-optimize: A library that provides several algorithms for optimization, including Bayesian optimization.

By leveraging these libraries and techniques, you can significantly reduce the time and effort required to find the best-performing model settings. Happy tuning!
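To make the difference between grid search and random search concrete, here is a minimal sketch in plain Python. The search space, the `toy_score` function, and all names are hypothetical stand-ins; in practice the score would come from training a model and measuring it on validation data.

```python
import itertools
import random

# Hypothetical search space; the names and values are illustrative only.
search_space = {
    "learning_rate": [0.001, 0.01, 0.1],
    "num_layers": [1, 2, 3],
}


def toy_score(params):
    """Stand-in for 'train a model and return its validation score'."""
    # By construction, the score peaks at learning_rate=0.01, num_layers=2.
    return -abs(params["learning_rate"] - 0.01) - 0.1 * abs(params["num_layers"] - 2)


def grid_search(space):
    """Evaluate every combination in the grid and return the best one."""
    names = list(space)
    candidates = [
        dict(zip(names, values)) for values in itertools.product(*space.values())
    ]
    return max(candidates, key=toy_score), len(candidates)


def random_search(space, n_iter, seed=0):
    """Evaluate n_iter randomly sampled combinations and return the best one."""
    rng = random.Random(seed)
    candidates = [
        {name: rng.choice(values) for name, values in space.items()}
        for _ in range(n_iter)
    ]
    return max(candidates, key=toy_score), len(candidates)


best_grid, n_grid = grid_search(search_space)
best_random, n_random = random_search(search_space, n_iter=4)
print("grid search:  ", best_grid, "after", n_grid, "evaluations")
print("random search:", best_random, "after", n_random, "evaluations")
```

Grid search is guaranteed to find the best point on the grid but pays for all 9 evaluations. Random search spends a fixed budget (4 here) and may or may not land on the best combination.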

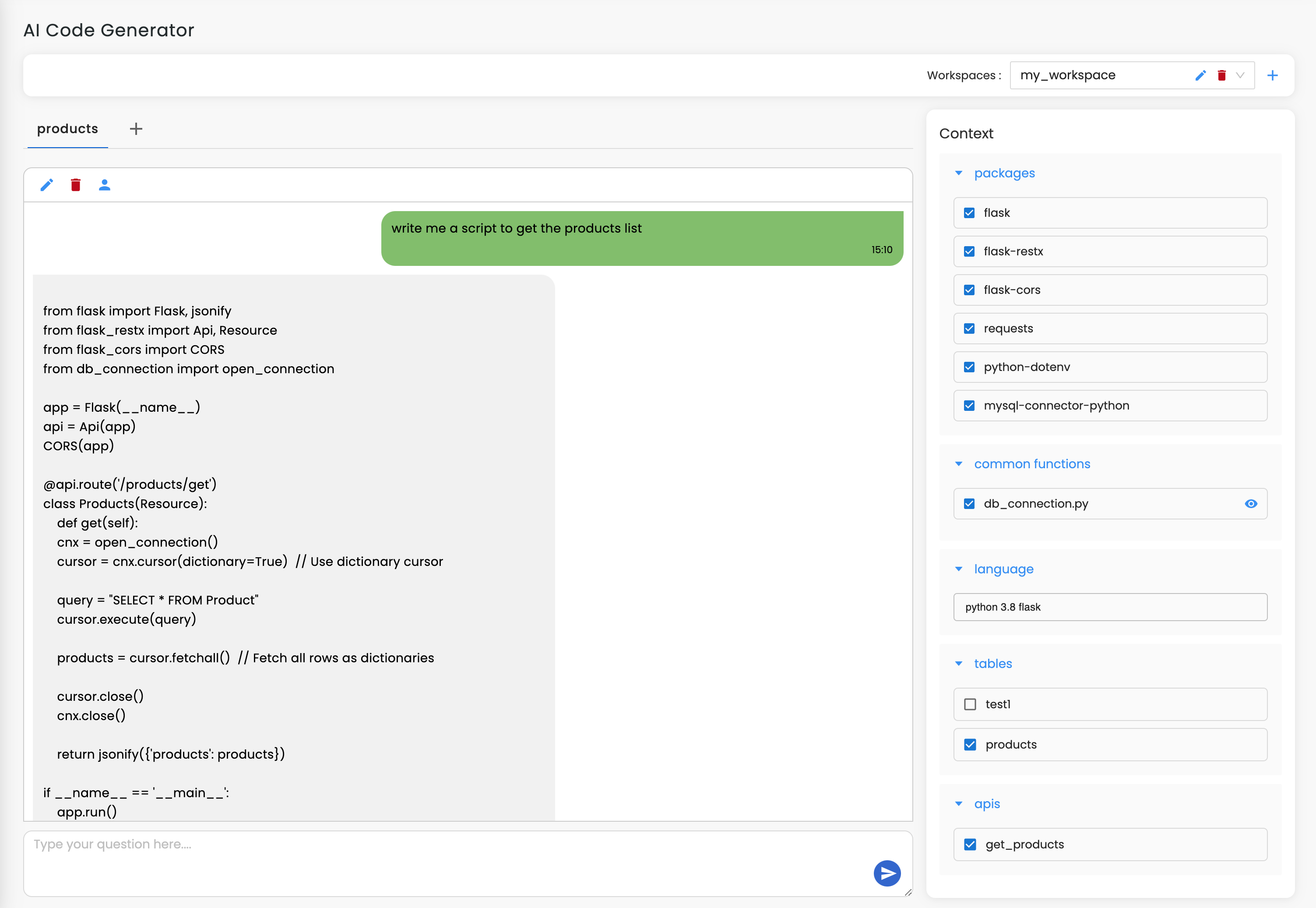

hyperparameter tuning python sklearn

Hyperparameter Tuning with Python and Scikit-Learn!

What is Hyperparameter Tuning?

In machine learning, hyperparameters are settings that are fixed before training a model on your data, such as the number of hidden layers in a neural network or the maximum depth of a decision tree. Hyperparameter tuning involves finding the values of these settings that give the best performance for your model.
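The distinction between a hyperparameter and a learned parameter can be seen in a toy example. The sketch below is not scikit-learn code: it fits `y = w * x` by gradient descent in plain Python, with made-up data and a hypothetical `train` function. The weight `w` is learned from the data, while `learning_rate` is chosen before training starts.

```python
def train(learning_rate, epochs=50):
    """Fit y = w * x by gradient descent on the mean squared error."""
    xs = [1.0, 2.0, 3.0, 4.0]
    ys = [2.0, 4.0, 6.0, 8.0]  # generated with a true weight of 2
    w = 0.0  # model parameter: learned during training
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= learning_rate * grad
    loss = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
    return w, loss


# learning_rate is a hyperparameter: it is set by hand before training.
for learning_rate in (0.001, 0.01, 0.1, 0.2):
    w, loss = train(learning_rate)
    print(f"learning_rate={learning_rate}: w={w:.4g}, loss={loss:.4g}")
```

With this data, a very small learning rate leaves `w` far from 2 after 50 epochs, a moderate one converges, and a learning rate of 0.2 makes the updates diverge. Tuning is the search for the value in between.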

Why is Hyperparameter Tuning Important?

Hyperparameters can significantly impact the performance of your machine learning models. If you don't tune them well, your model may overfit (memorize the training data) or underfit (fail to capture the pattern in it), and in both cases it will perform poorly on new data. Hyperparameter tuning helps you find the sweet spot where your model learns from the training data without memorizing it.
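As an illustration of overfitting and underfitting, the sketch below implements a tiny k-nearest-neighbours classifier in plain Python on synthetic, noisy data. Everything in it (the data generator, the function names, the choice of k values) is an assumption made for the example. The hyperparameter `k` controls how much the model memorizes.

```python
import random


def knn_predict(train, x, k):
    """Majority vote among the k training points closest to x."""
    neighbours = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    votes = sum(label for _, label in neighbours)
    return 1 if 2 * votes > k else 0


def accuracy(train, data, k):
    """Fraction of points in data that the k-NN model labels correctly."""
    return sum(knn_predict(train, x, k) == label for x, label in data) / len(data)


def make_data(n, rng):
    """Label is 1 when x > 0.5, with roughly 20% of labels flipped as noise."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = int(x > 0.5)
        if rng.random() < 0.2:
            label = 1 - label
        data.append((x, label))
    return data


rng = random.Random(0)
train_set = make_data(60, rng)
valid_set = make_data(60, rng)

for k in (1, 9, 60):
    train_acc = accuracy(train_set, train_set, k)
    valid_acc = accuracy(train_set, valid_set, k)
    print(f"k={k}: train accuracy={train_acc:.2f}, validation accuracy={valid_acc:.2f}")
```

With `k=1` every training point is its own nearest neighbour, so the training accuracy is perfect even though the labels are noisy: the model has memorized the data. With `k=60` every prediction is the same majority vote over the whole training set, so the model ignores its input. A value in between usually does better on the validation set, and that gap between training and validation scores is what tuning tries to manage.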

How to Perform Hyperparameter Tuning in Python with Scikit-Learn?

Scikit-Learn provides various tools for hyperparameter tuning, including Grid Search and Randomized Search. Here's a step-by-step guide on how to perform hyperparameter tuning using these methods:

Method 1: Grid Search

Import necessary libraries:

    from sklearn.ensemble import RandomForestClassifier
    from sklearn.metrics import accuracy_score
    from sklearn.model_selection import GridSearchCV, train_test_split

Load your dataset and split it into training and testing sets:

    # X (features) and y (labels) are your own data, loaded beforehand
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

Define the hyperparameter space to search:

    param_grid = {'n_estimators': [10, 50, 100], 'max_depth': [5, 8, 12]}

Initialize the Grid Search object and specify the scoring metric (e.g., accuracy):

    # Assumption: a random forest, since it accepts n_estimators and max_depth
    estimator = RandomForestClassifier()
    grid_search = GridSearchCV(estimator=estimator, param_grid=param_grid,
                               cv=5, scoring='accuracy')

Perform grid search:

    grid_result = grid_search.fit(X_train, y_train)

Evaluate the best-performing model using the testing set:

    best_model = grid_result.best_estimator_
    y_pred = best_model.predict(X_test)
    accuracy = accuracy_score(y_test, y_pred)
    print(f"Best Model Accuracy: {accuracy:.3f}")
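The `cv=5` argument above means that every hyperparameter combination is scored with 5-fold cross-validation on the training set. The 3 x 3 grid therefore costs 45 model fits, plus one final refit of the best combination. The following plain-Python sketch shows how the indices are divided into folds. It is a simplified illustration with a hypothetical helper name: for classifiers, scikit-learn uses stratified folds by default, which this sketch does not attempt to reproduce.

```python
def k_fold_indices(n_samples, n_folds):
    """Yield (train_indices, validation_indices) for each fold, without shuffling."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [
        n_samples // n_folds + (1 if i < n_samples % n_folds else 0)
        for i in range(n_folds)
    ]
    start = 0
    for size in fold_sizes:
        valid = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, valid
        start += size


folds = list(k_fold_indices(n_samples=10, n_folds=5))
for train, valid in folds:
    print("validate on", valid, "train on", train)
```

Each sample is used for validation exactly once, and the score reported for a combination is the average over the folds.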

Method 2: Randomized Search

Import necessary libraries:

    from sklearn.ensemble import RandomForestClassifier
    from sklearn.metrics import accuracy_score
    from sklearn.model_selection import RandomizedSearchCV, train_test_split

Load your dataset and split it into training and testing sets:

    # X (features) and y (labels) are your own data, loaded beforehand
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

Define the hyperparameter space to search:

    param_random = {'n_estimators': [10, 50, 100], 'max_depth': [5, 8, 12]}

Initialize the Randomized Search object and specify the scoring metric (e.g., accuracy):

    # Assumption: a random forest, since it accepts n_estimators and max_depth
    estimator = RandomForestClassifier()
    # n_iter is kept below the 9 available combinations; a larger value
    # would make the search cover the whole grid
    random_search = RandomizedSearchCV(estimator=estimator,
                                       param_distributions=param_random,
                                       cv=5, scoring='accuracy', n_iter=5)

Perform randomized search:

    random_result = random_search.fit(X_train, y_train)

Evaluate the best-performing model using the testing set:

    best_model = random_result.best_estimator_
    y_pred = best_model.predict(X_test)
    accuracy = accuracy_score(y_test, y_pred)
    print(f"Best Model Accuracy: {accuracy:.3f}")
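Randomized search becomes more useful when a hyperparameter is continuous, because `param_distributions` also accepts distribution objects with an `rvs` method (for example from `scipy.stats`) instead of fixed lists. The sketch below uses only the standard library to show what sampling on a logarithmic scale looks like for a hypothetical learning-rate-style hyperparameter. The range and the helper name are assumptions, since the example above tunes only integer settings.

```python
import math
import random


def sample_log_uniform(low, high, rng):
    """Draw a value whose logarithm is uniform between log(low) and log(high)."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


rng = random.Random(0)
samples = [sample_log_uniform(1e-4, 1e-1, rng) for _ in range(1000)]

# The range spans three decades; two of them lie below 1e-2.
share_below = sum(s < 1e-2 for s in samples) / len(samples)
print(f"share of samples below 1e-2: {share_below:.2f}")
```

Sampling on a log scale gives each order of magnitude the same chance of being tried, whereas a plain uniform draw over the same range would put about 90% of the samples above 1e-2.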

Conclusion

Hyperparameter tuning is an essential step in machine learning: it finds good values for the settings that are fixed before training. Scikit-Learn provides two main methods for hyperparameter tuning: Grid Search, which evaluates every combination in a grid, and Randomized Search, which evaluates a fixed number of sampled combinations. By using these methods, you can explore the hyperparameter space systematically and find the best-performing models for your datasets.

Remember to tune your hyperparameters carefully, as they can significantly impact the performance of your machine learning models!