How to clean data in Python for machine learning

How to clean data in Python for machine learning

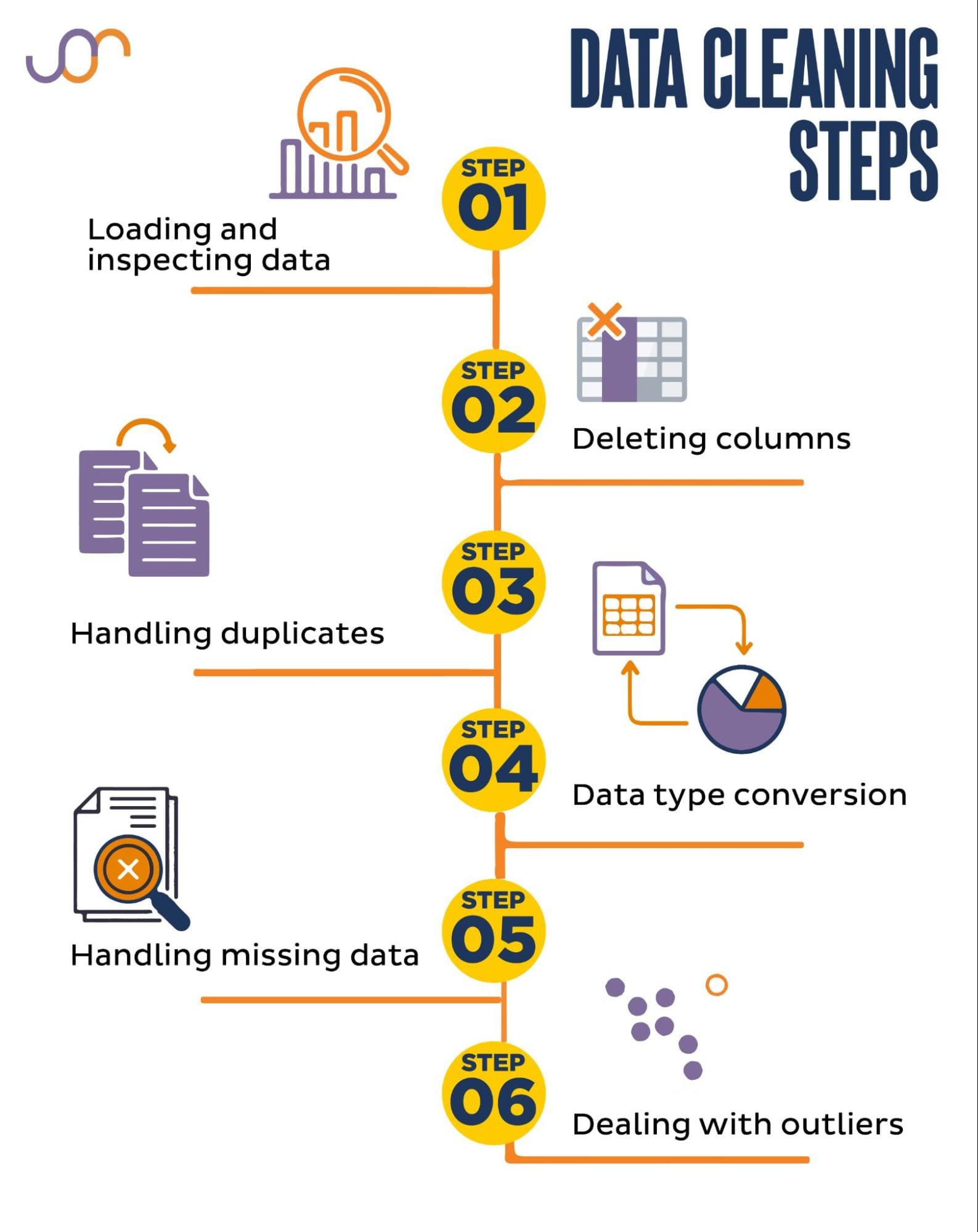

I'm happy to help you with that! Cleaning data is an essential step in the machine learning workflow, as it ensures that your algorithms are trained on high-quality and reliable data. Here's a comprehensive guide on how to clean data in Python for machine learning:

Step 1: Import necessary libraries

You'll need pandas (pd) and NumPy (np) to work with your data. You can install them using pip:

import pandas as pdimport numpy as np

Step 2: Load the dataset

Use pd.read_csv or pd.read_excel to load your dataset into a DataFrame.

df = pd.read_csv('data.csv')

Step 3: Explore the data

Get an overview of your dataset using df.head(), df.info(), and df.describe() to identify missing values, data types, and summary statistics.

Step 4: Handle missing values

Missing values can be a significant issue in machine learning. You can:

Drop rows/columns: Remove entire rows or columns with missing values using df.dropna() or df.drop().Fill with mean/median/mode: Replace missing values with the mean, median, or mode of a specific column using df.fillna(). For example:df = df.dropna() # Drop rows with any missing value

Interpolate: Fill gaps in numerical data using interpolation techniques like linear or polynomial regression.df['age'] = df['age'].fillna(df['age'].mean())

Step 5: Remove duplicates

Remove duplicate rows using df.drop_duplicates() to ensure unique records.

df = df.drop_duplicates()

Step 6: Convert categorical variables

Categorical variables can be represented as:

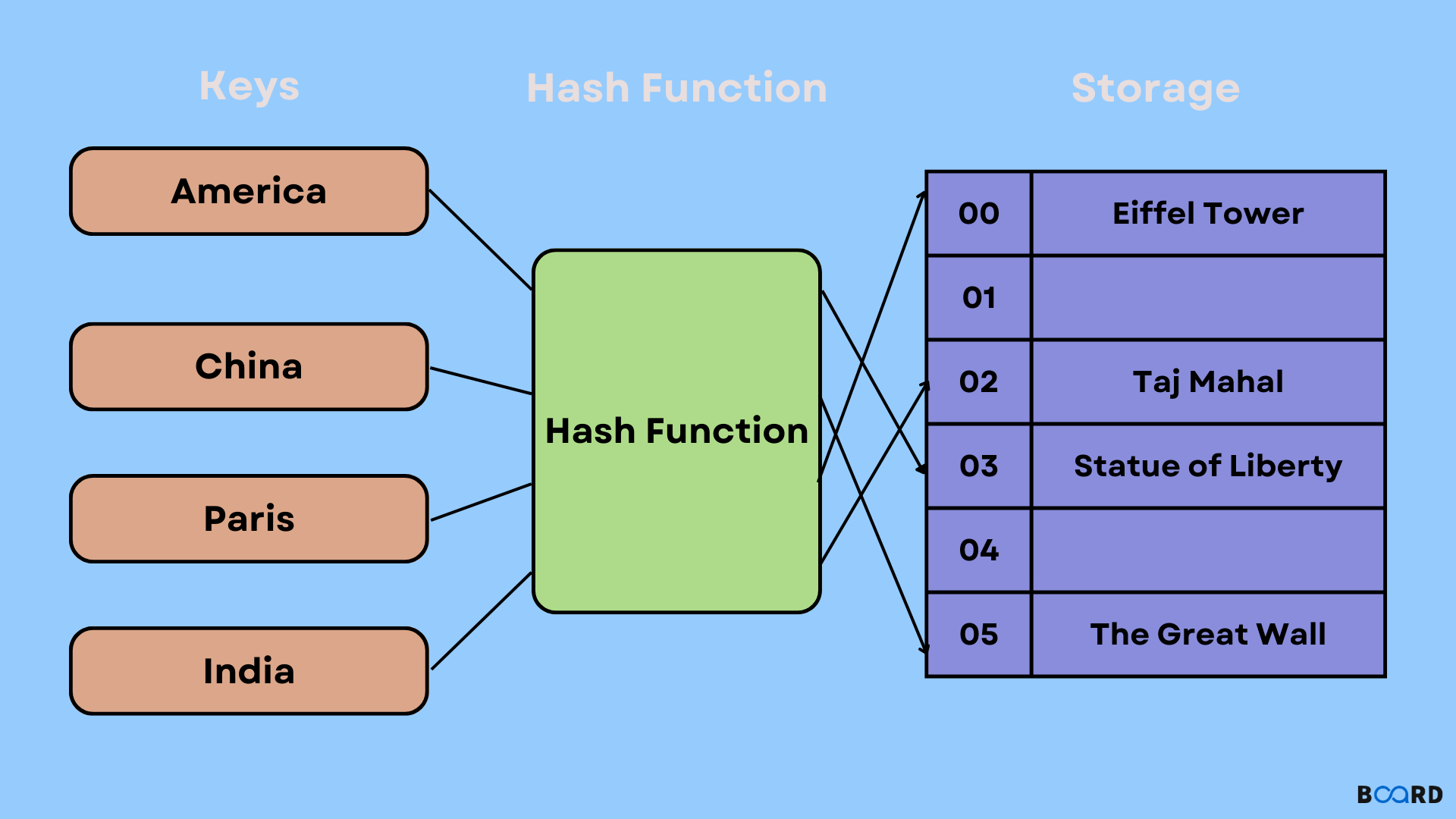

Dummies: One-hot encode categorical variables using pd.get_dummies(). For example:Label encoding: Assign a unique integer label to each category using LabelEncoder from scikit-learn. Hashing: Use sklearn.preprocessing.Hashing to map categories to numerical values.df = pd.get_dummies(df, columns=['category'])

Step 7: Scale/normalize data

Scale or normalize your data to:

Standardize: Scale features to have zero mean and unit variance using StandardScaler from scikit-learn. Min-max scaling: Scale values between a specific range (e.g., 0-1) using MinMaxScaler. Log transformation: Apply logarithmic transformations to non-linear data.Step 8: Remove outliers

Remove extreme or unusual values that can affect model performance:

Interquartile range (IQR): Remove values outside the IQR using df.drop() and the quantiles function from scipy. Z-score: Identify outliers based on a certain number of standard deviations from the mean using zscore from scipy.By following these steps, you'll have cleaned your data and be ready to feed it into machine learning algorithms for modeling. Happy coding!

Python data cleaning libraries

I'm happy to help!

Python has numerous libraries that can help with data cleaning, which is a crucial step in the data analysis process. Here are some of the most popular and widely used libraries for data cleaning in Python:

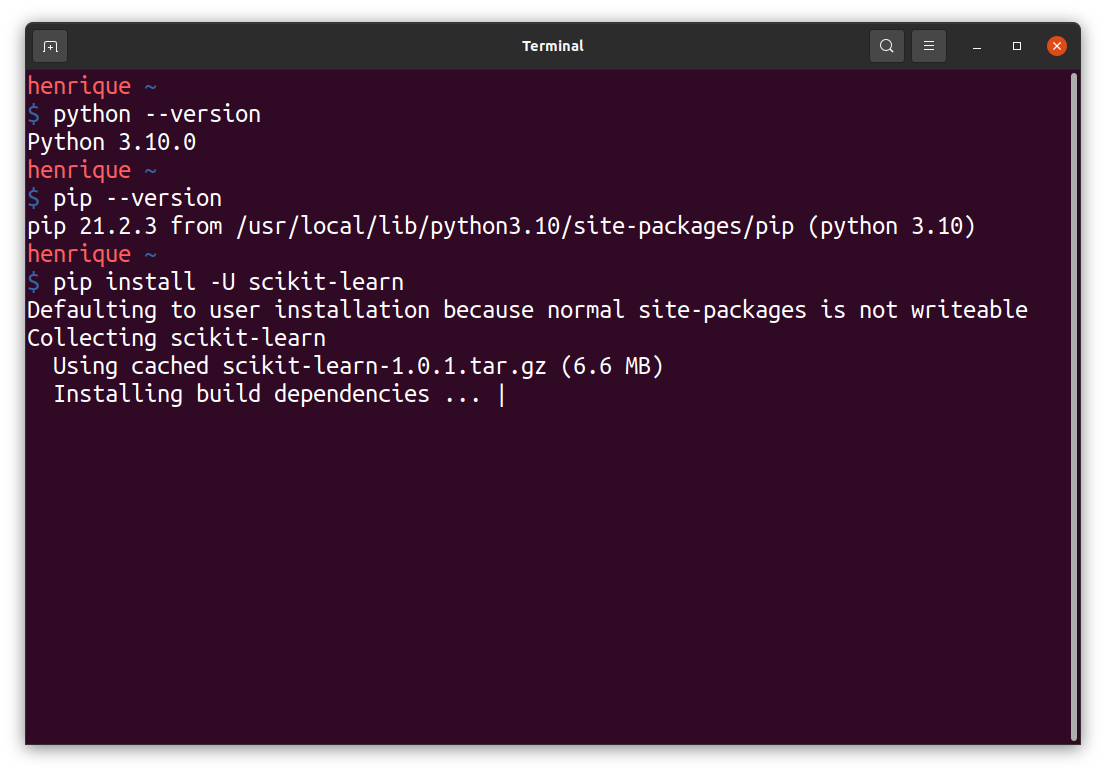

Pandas: Pandas is one of the most popular libraries for data manipulation and analysis in Python. It provides efficient data structures and operations for working with structured data (e.g., tabular data such as spreadsheets or relational databases). Pandas has various functions and methods that can help with cleaning, transforming, and analyzing datasets. NumPy: NumPy is the foundation of most scientific computing and numerical analysis in Python. It provides support for large, multi-dimensional arrays and matrices, along with a wide range of high-level mathematical functions to operate on these arrays. This library is often used alongside Pandas for data cleaning tasks that require numerical computations. OpenRefine: OpenRefine is an open-source data refining tool that can be integrated with Python. It provides a powerful set of tools for data cleaning, including data normalization, deduplication, and reconciliation. OpenRefine also has built-in support for various data formats, such as CSV, JSON, and Excel. Dask: Dask is a flexible parallel computing library that can be used to speed up data cleaning tasks in Python. It allows you to process larger-than-memory datasets by breaking them into smaller chunks, processing each chunk independently, and then combining the results. Scikit-learn: Scikit-learn is a machine learning library for Python that includes various algorithms for classification, regression, clustering, and more. While not primarily designed for data cleaning, scikit-learn provides some useful tools for preprocessing and feature engineering, which can be applied to data cleaning tasks.Some of the common data cleaning operations that these libraries can help with include:

Handling missing or null values Removing duplicates or irrelevant data Normalizing data formats (e.g., converting date columns to a standard format) Transforming data (e.g., aggregating data by group or calculating summary statistics) Identifying and correcting errors in the data (e.g., removing rows with invalid data)These libraries can be used individually or together, depending on your specific data cleaning needs. Here's an example of how you might use Pandas and NumPy to clean a dataset:

import pandas as pd

import numpy as np

Load the dataset using Pandas

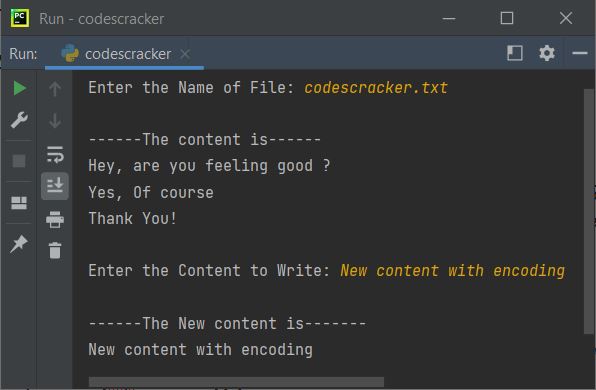

df = pd.read_csv('data.csv')

Remove rows with missing values in certain columns

df.dropna(subset=['column1', 'column2'], inplace=True)

Convert date column to a standard format using NumPy

df['date_column'] = pd.to_datetime(df['date_column']).dt.strftime('%Y-%m-%d')

Group data by category and calculate summary statistics using Pandas

grouped_df = df.groupby('category').agg({'column3': ['min', 'max']})

Save the cleaned dataset to a new CSV file

grouped_df.to_csv('cleaned_data.csv', index=False)

These libraries provide powerful tools for cleaning, transforming, and analyzing data in Python. By combining these libraries with Pandas' data structures and operations, you can efficiently perform complex data cleaning tasks.