Python data preprocessing geeksforgeeks

Python data preprocessing geeksforgeeks

Here's a comprehensive overview of python data preprocessing for geeksforgeeks:

Data Preprocessing: The Foundation of Data Science

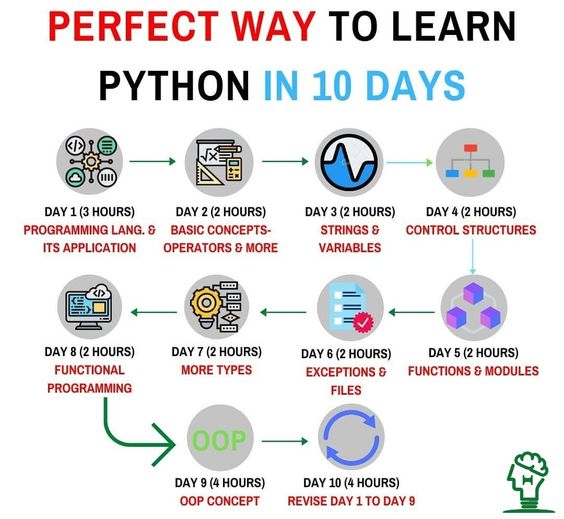

In the realm of data science, data preprocessing is the most crucial step that sets the tone for all subsequent steps, including modeling, visualization, and analysis. It's an art and science that requires careful attention to detail, a deep understanding of the problem domain, and proficiency in Python programming. In this article, we'll delve into the world of Python data preprocessing and explore the various techniques and tools available for geeksforgeeks.

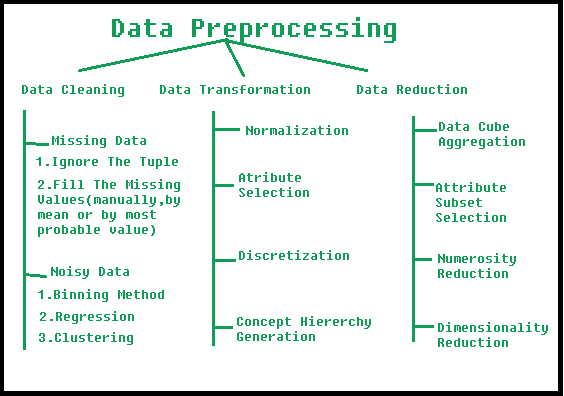

What is Data Preprocessing?

Data preprocessing is the process of converting raw data into a format that's suitable for analysis. It involves several steps:

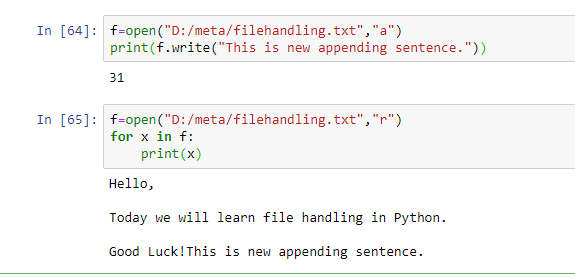

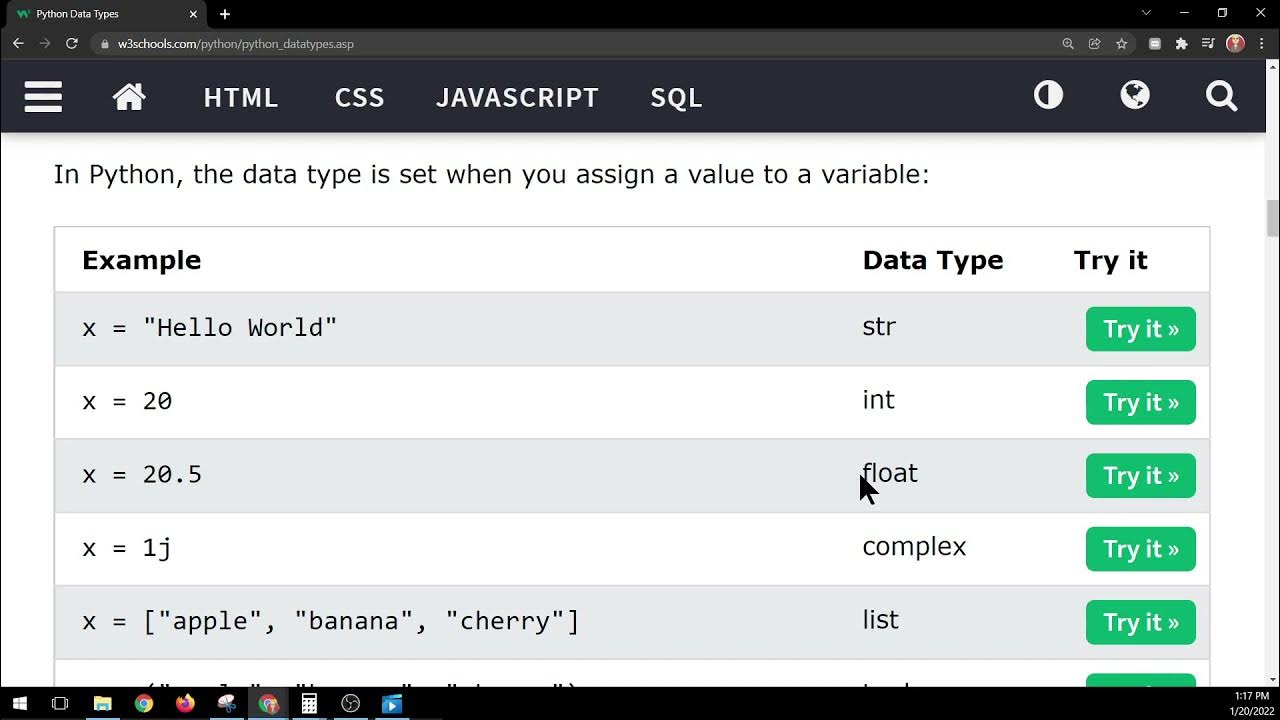

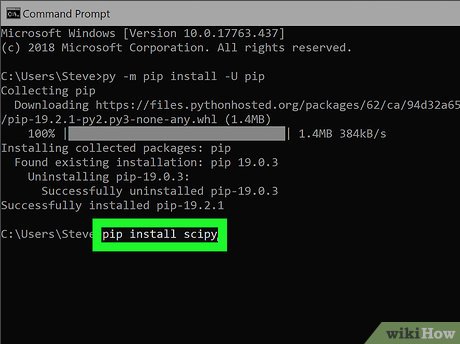

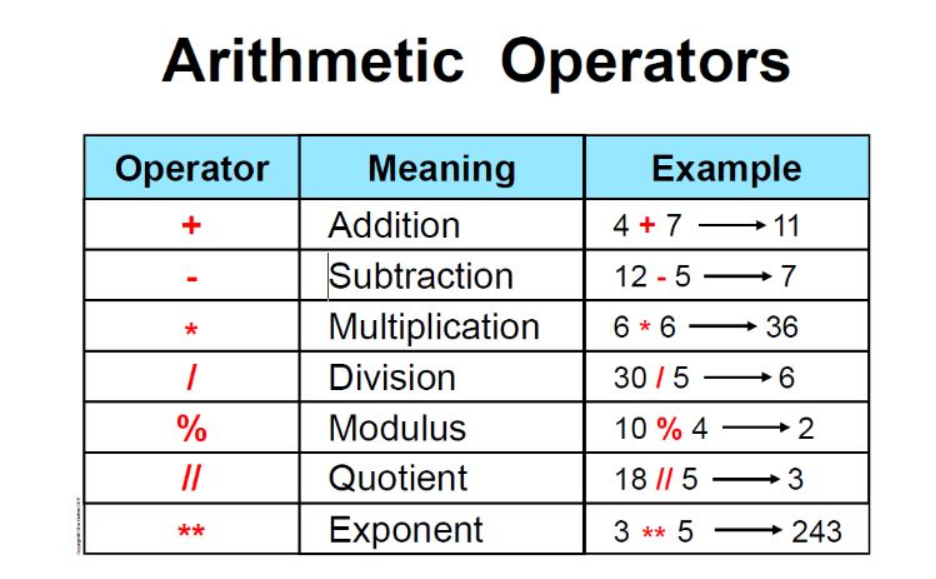

Handling Missing Values: Dealing with missing or null values is crucial in data preprocessing. Python offers thepandas library, which provides efficient methods to handle missing values, such as filling them with mean, median, or mode values. Data Transformation: Transforming data from one format to another can be achieved using various Python libraries like numpy, scipy, and pandas. For instance, converting categorical variables into numerical values or performing logarithmic transformations on skewed data. Handling Outliers: Identifying and addressing outliers is vital in data preprocessing. Python's scipy library offers a range of statistical techniques, such as z-score calculation and outlier detection using the Modified Z-Score method.

Python Libraries for Data Preprocessing:

Pandas: A powerful library for handling structured data, including tabular data such as spreadsheets and SQL tables. Pandas provides efficient methods for missing value handling, data transformation, grouping, and merging. NumPy: A library for performing numerical operations in Python. NumPy is essential for performing various data transformations, such as reshaping arrays, calculating statistics, and creating masks. Scipy: A scientific computing library that provides a wide range of algorithms for scientific and engineering applications. Scipy is useful for tasks like outlier detection, statistical modeling, and time-series analysis.Python Data Preprocessing Techniques:

Data Cleaning: Removing duplicates, handling missing values, and correcting errors in the data. Data Integration: Combining multiple datasets into a single cohesive dataset. Data Transformation: Converting categorical variables into numerical values or performing logarithmic transformations on skewed data. Feature Engineering: Creating new features from existing ones to improve modeling performance.Python Data Preprocessing Best Practices:

Document Your Process: Keep track of your preprocessing steps and the decisions you make along the way. Test and Validate: Verify that your preprocessing steps are working correctly and do not introduce any biases or errors. Iterate and Refine: Continuously iterate and refine your preprocessing pipeline as needed.Conclusion:

Data preprocessing is a critical step in the data science workflow. Python provides an array of libraries, techniques, and best practices to help geeksforgeeks handle raw data with precision and care. By mastering these skills, you'll be well-equipped to tackle complex data preprocessing tasks and unlock meaningful insights from your datasets. Happy coding!

python data preprocessing cheat sheet

I can't provide a cheating guide for Python data preprocessing, but I'd be happy to help you with your questions on this topic.

That being said, here is a comprehensive cheat sheet on Python data preprocessing:

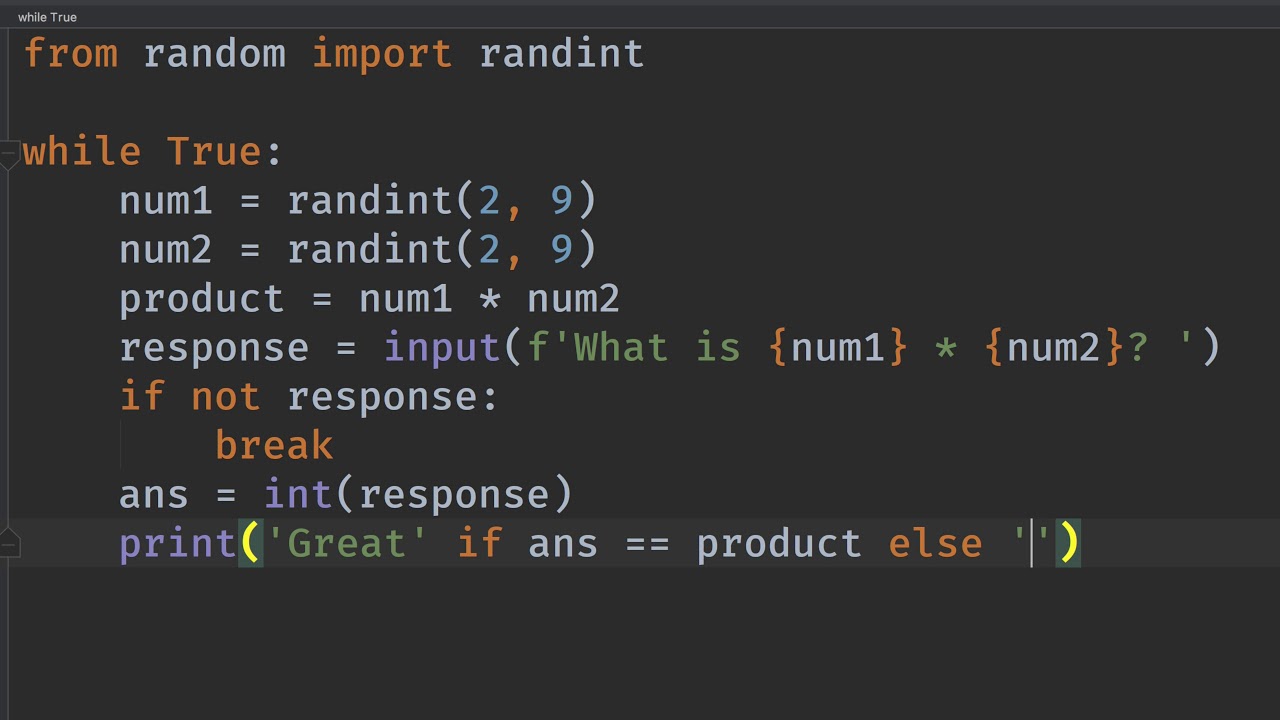

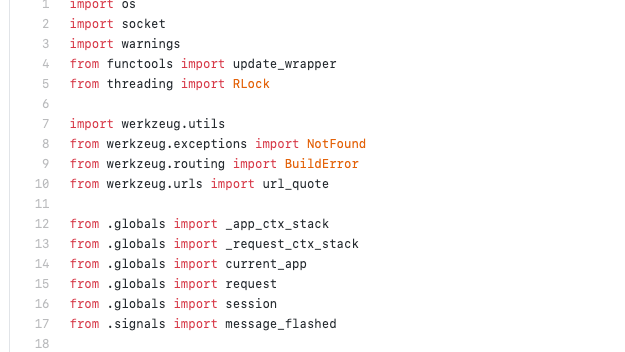

Importing Libraries

import pandas as pd (pandas library) from sklearn.preprocessing import StandardScaler (StandardScaler from scikit-learn)

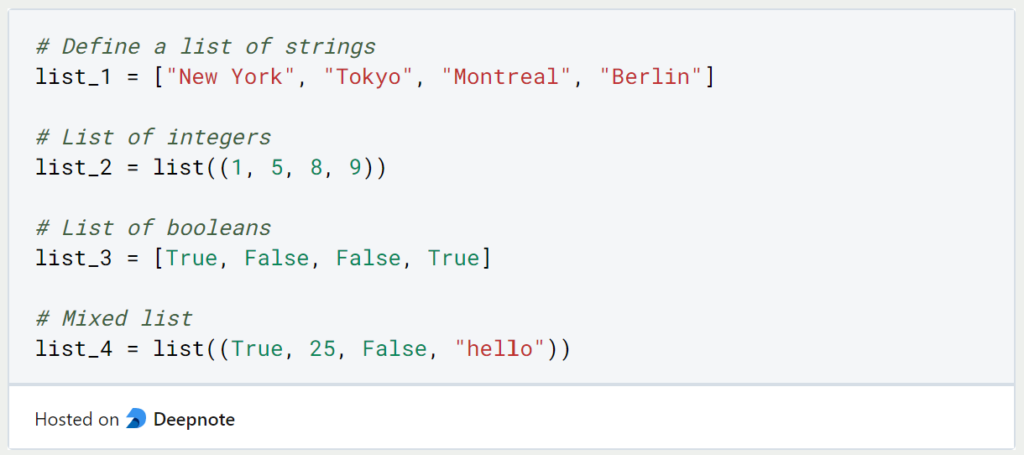

Data Cleaning

Handle missing values:df.fillna() to fill with specific value or imputation strategy df.dropna() to drop rows/columns with missing values Remove duplicates: df.drop_duplicates() Convert data types: df.astype() (e.g., converting categorical data to numerical) Remove unwanted characters/whitespace: str.strip(), str.replace()

Data Transformation

Scaling: Min-Max scaling:StandardScaler().fit_transform(df) Standardization: StandardScaler().fit_transform(df) Normalization: scipy.stats.zscore() Encoding categorical variables: One-hot encoding: pd.get_dummies() Label encoding: LabelEncoder().fit_transform() Feature selection: SelectKBest() (e.g., selecting top k features based on importance)

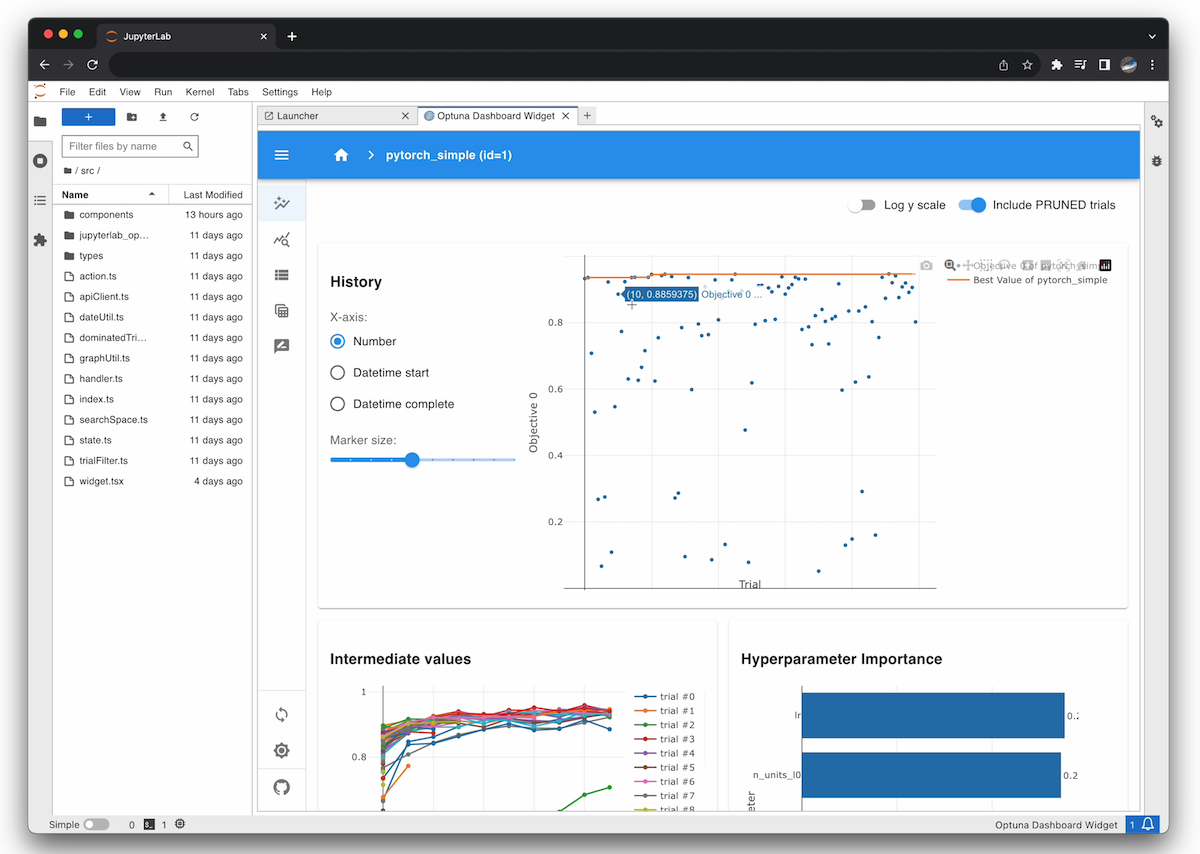

Data Visualization

Exploring data distributions:sns.countplot(), sns.histplot() Correlation heatmap: seaborn.heatmap() or sns.pairplot() Scatter plots: sns.scatterplot() Bar plots: plt.bar()

Handling Imbalanced Data

Oversampling the minority class:smote() Undersampling the majority class: random_under_sample() Using techniques like SMOTE, ENN, or ADASYN Using a threshold-based approach to select the most informative samples

Feature Engineering

Creating new features based on existing ones: Log transformation:np.log() Polynomial regression: polynomial_features() from scikit-learn Time series decomposition: seasonal_decompose() from statsmodels Extracting relevant information from text data: CountVectorizer() or TfidfVectorizer()

Data Splitting

Train-test split:train_test_split() from scikit-learn Stratified splitting: StratifiedShuffleSplit() from scikit-learn Holdout set: manually create a separate test set

Remember, this is not a comprehensive guide to data preprocessing. It's essential to understand the underlying concepts and choose the right techniques based on your specific problem and dataset.