Python NLP library

Python has a wide range of NLP (Natural Language Processing) libraries that can be used for various tasks such as text processing, sentiment analysis, language modeling, and more. Here are some of the most popular Python NLP libraries:

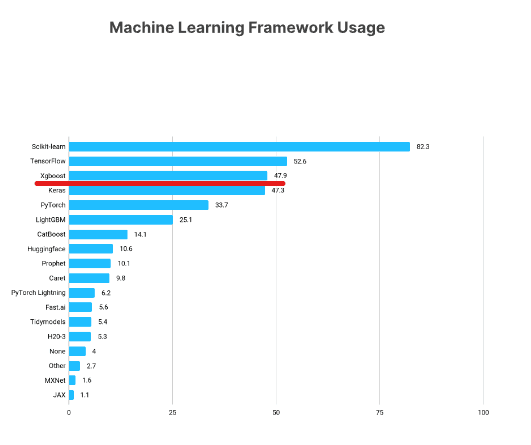

- NLTK (Natural Language Toolkit): One of the oldest and most widely used NLP libraries in Python. It provides tools for tokenization, stemming, lemmatization, part-of-speech (POS) tagging, parsing, and more.
- spaCy: A popular NLP library that focuses on performance and ease of use. It is well suited to processing large volumes of text and includes pre-trained models for several languages.
- Gensim: A Python library (the name comes from "generate similar") for analyzing large volumes of text using topic modeling and document similarity analysis.
- Stanford CoreNLP: A Java library that can be used from Python through wrappers such as the pycorenlp package. It is well suited to tasks such as named entity recognition (NER), part-of-speech (POS) tagging, and sentiment analysis.
- TextBlob: A library with a simple API for common NLP tasks such as sentiment analysis, part-of-speech tagging, and noun phrase extraction.
- OpenNLP: Apache OpenNLP is a Java library rather than a Python one, so using it from Python requires a wrapper or a separate Java process. It includes maximum entropy models and is well suited to tasks such as named entity recognition (NER) and part-of-speech (POS) tagging.
- TensorFlow and PyTorch: General-purpose deep learning libraries that are widely used for NLP. They can be used for tasks such as language modeling and text classification.

Some of the most common use cases for Python NLP libraries include:

- Sentiment analysis: Determining the emotional tone of a piece of text.
- Text classification: Assigning text to categories such as spam/not spam or positive/negative sentiment.
- Named entity recognition (NER): Identifying named entities in text, such as people, places, and organizations.
- Part-of-speech (POS) tagging: Identifying the part of speech of each word in a sentence, such as noun, verb, or adjective.

Python NLP libraries can be used in a wide range of applications, including:

- Text summarization: Condensing large bodies of text into a concise overview.
- Language translation: Translating text from one language to another.
- Chatbots and voice assistants: Building conversational interfaces that can understand and respond to user input.
- Sentiment analysis for social media monitoring: Analyzing the sentiment expressed in social media posts.

In conclusion, Python NLP libraries provide a powerful set of tools for analyzing and processing human language. They can be used for a wide range of applications, from sentiment analysis and text classification to named entity recognition and more.
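To make the sentiment analysis use case mentioned above a little more concrete, here is a minimal sketch that uses only the Python standard library. It is not how NLTK, TextBlob, or any other library listed here works internally; the tiny word lists and the function names (`tokenize`, `sentiment_score`, `classify`) are made up for illustration, and real libraries rely on much larger lexicons or trained models.

```python
import re

# Hypothetical toy lexicon, invented for this example only.
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "awful", "sad"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment_score(text):
    """Return (positive hits - negative hits) / token count, or 0.0 for empty text."""
    tokens = tokenize(text)
    if not tokens:
        return 0.0
    pos = sum(token in POSITIVE for token in tokens)
    neg = sum(token in NEGATIVE for token in tokens)
    return (pos - neg) / len(tokens)


def classify(text):
    """Map the numeric score to a coarse label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for sample in ["I love this library, it is great!",
                   "The docs are terrible.",
                   "It parses text."]:
        print(sample, "->", classify(sample))
```

A word-counting approach like this ignores negation ("not good") and context, which is one reason the dedicated libraries above are usually the better choice for real work.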

Python natural language processing on GitHub

Python is a popular programming language for natural language processing (NLP) tasks due to its simplicity, flexibility, and extensive library ecosystem. Here are some key GitHub repositories related to NLP in Python:

- Stanford CoreNLP: A Java NLP toolkit that can be used from Python through the pycorenlp package. GitHub repository: https://github.com/stanfordnlp/CoreNLP
- Gensim: A Python library for topic modeling and document similarity analysis that uses Latent Dirichlet Allocation (LDA) and other algorithms to extract meaningful topics from large volumes of text data. GitHub repository: https://github.com/piskvorky/gensim
- Scikit-learn: While not exclusively an NLP library, scikit-learn includes modules for text classification, clustering, and topic modeling that can be used in conjunction with other Python packages for more complex NLP tasks. GitHub repository: https://github.com/scikit-learn/scikit-learn
- TextBlob: A simple API for common NLP tasks such as part-of-speech tagging, noun phrase extraction, and sentiment analysis, plus language detection in older releases. GitHub repository: https://github.com/sloria/TextBlob

These libraries are widely used in academia, research, and industry for applications like sentiment analysis, topic modeling, named entity recognition, machine translation, and more. Python's simplicity, ease of use, and extensive library ecosystem make it an ideal language for NLP tasks.
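As a rough illustration of the document similarity idea mentioned for Gensim above, the following standard-library sketch compares texts by the cosine similarity of their word counts. This is an assumption-laden toy, not Gensim's API or its algorithms (which include LDA and other models); the function names `bag_of_words`, `cosine_similarity`, and `most_similar` are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Count lowercase word tokens in the text."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine of the angle between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(query, documents):
    """Return the document whose word counts are closest to the query's."""
    query_vec = bag_of_words(query)
    return max(documents,
               key=lambda doc: cosine_similarity(query_vec, bag_of_words(doc)))


if __name__ == "__main__":
    docs = [
        "the cat sat on the mat",
        "stock markets fell sharply today",
        "a cat and a dog",
    ]
    print(most_similar("my cat sat down", docs))
```

Raw word counts treat every word as equally informative; libraries such as Gensim and scikit-learn offer weighting schemes and topic models that usually give more meaningful similarity scores.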

Please note that the list above is not exhaustive, but it is a good starting point for exploring the libraries and resources available for Python-based natural language processing.