What are hyperparameters in Python?

Hyperparameters! A crucial concept in the world of machine learning and deep learning.

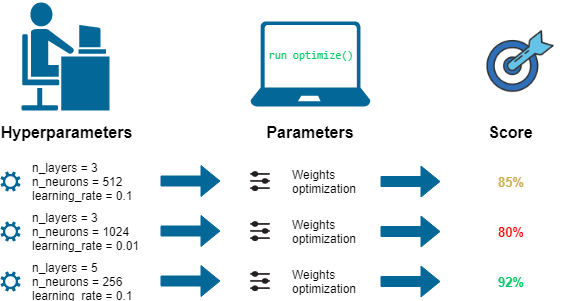

In simple terms, hyperparameters are parameters that are set before training a machine learning model or a neural network. These values are not learned during the training process but rather influence how the model learns from your data. Think of them as knobs you turn to adjust the behavior of your model.
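The distinction between a hyperparameter and a learned parameter can be sketched in a few lines of plain Python. This is only a toy illustration: the data, the `train` function, and the chosen values are all made up for the example. Here `learning_rate` and `n_steps` are hyperparameters fixed before training, while the weight `w` is what the training loop actually learns.

```python
# Toy data generated from y = 3 * x, so the ideal learned weight is 3.0.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]


def train(learning_rate, n_steps):
    """Fit y = w * x by gradient descent on the mean squared error."""
    w = 0.0  # learned parameter: updated by the training loop
    for _ in range(n_steps):
        # Gradient of mean((w * x - y) ** 2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= learning_rate * grad
    return w


# Hyperparameters: chosen by us before training, never updated by it.
w_learned = train(learning_rate=0.01, n_steps=200)
print(f"learned weight: {w_learned:.4f}")
```

Changing `learning_rate` or `n_steps` changes how (and whether) training reaches a good weight, but neither value is ever modified by the loop itself.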

To illustrate this, let's consider a classic example: a Support Vector Machine (SVM) for classification. The goal is to find the optimal hyperplane that separates two classes. Some common hyperparameters for an SVM include:

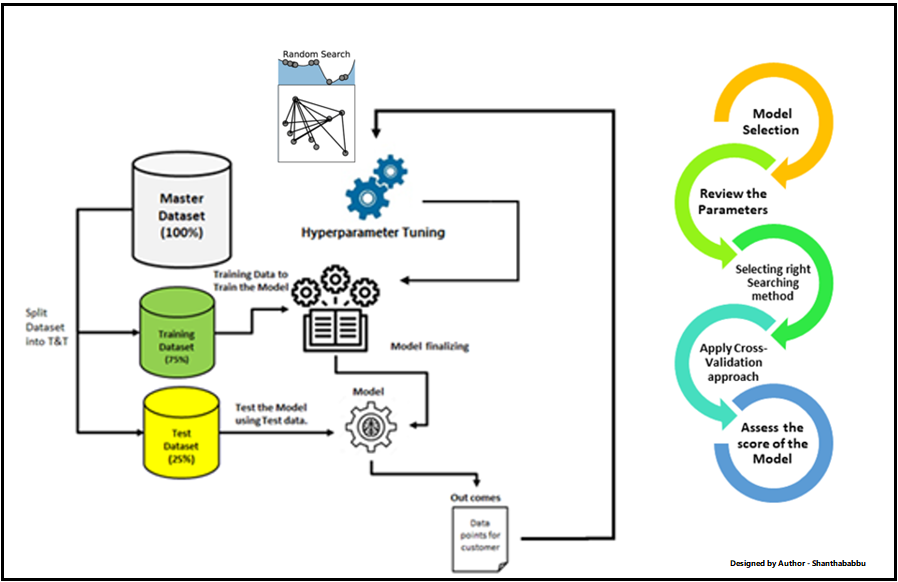

- Regularization parameter (e.g., C): This controls the trade-off between a smooth decision boundary and classifying every training point correctly. In most implementations, including scikit-learn, C is inversely related to regularization strength: a smaller value means stronger regularization and a smoother, more conservative boundary, while a larger value lets the model fit noisy training points more closely.
- Kernel type and parameters: The choice of kernel function and its associated hyperparameters (e.g., gamma for radial basis functions) affects how the model represents the input data.

Hyperparameter tuning is essential because it strongly affects how well your model will generalize to unseen data. In other words, setting the right hyperparameters helps ensure that your model can accurately predict new instances based on the patterns learned from your training dataset.
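As a minimal sketch of how the SVM hyperparameters above are set in practice, the following assumes scikit-learn is installed and uses its `SVC` class. The tiny dataset and the specific values of `C` and `gamma` are made up for illustration, not recommendations.

```python
from sklearn.svm import SVC

# Tiny hand-made 2-D dataset: two well-separated clusters.
X = [[0.0, 0.0], [0.2, 0.1], [0.1, 0.3], [1.0, 1.0], [0.9, 1.2], [1.1, 0.8]]
y = [0, 0, 0, 1, 1, 1]

# Hyperparameters are passed to the constructor, before any data is seen.
model = SVC(C=1.0, kernel="rbf", gamma="scale")

# Learned state (such as the support vectors) exists only after fit().
model.fit(X, y)

print("C:", model.get_params()["C"], "kernel:", model.get_params()["kernel"])
print("support vectors found:", len(model.support_vectors_))
print("predictions:", model.predict([[0.1, 0.1], [1.0, 0.9]]))
```

Note that `C`, `kernel`, and `gamma` never change during `fit`; only the model's internal state does.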

Other machine learning models, neural networks, and training algorithms have their own sets of hyperparameters:

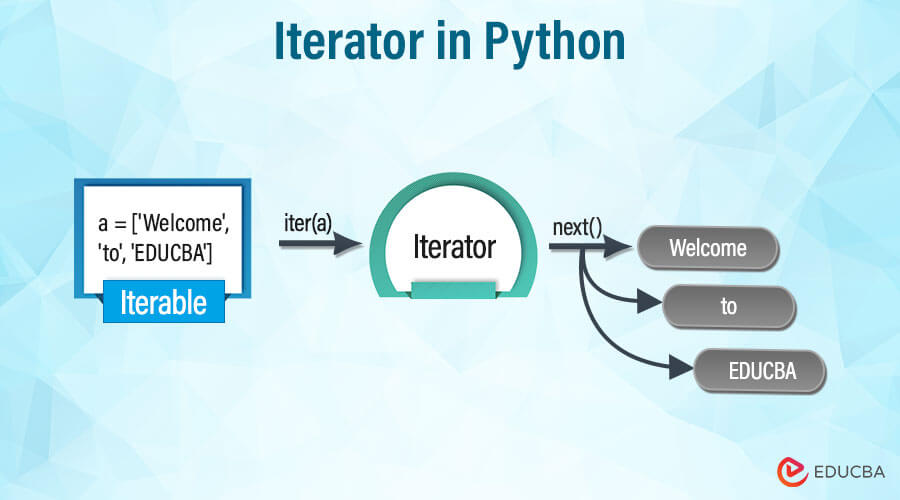

- Gradient descent: Learning rate (alpha), momentum, and whether to use Nesterov accelerated gradient.
- Neural networks: Number of hidden layers, number of neurons per layer, dropout rates, activation functions, etc.
- Random forests: Number of decision trees, maximum depth, minimum number of samples per split or leaf, fraction of features considered at each split, etc.
- Gradient boosting: Learning rate (also called shrinkage), number of boosting iterations, and other parameters that control the ensemble's behavior.

Hyperparameter tuning can be time-consuming and computationally expensive. To make matters worse, hyperparameters often interact with each other in complex, non-linear ways.
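To make the gradient descent hyperparameters listed above more concrete, here is a small sketch in plain Python. It minimizes the toy function f(w) = w² using classical momentum; the function name `minimize_quadratic` and all the values tried are hypothetical choices for the example.

```python
def minimize_quadratic(learning_rate, momentum=0.0, n_steps=50, w0=5.0):
    """Minimize f(w) = w**2 with gradient descent plus classical momentum."""
    w, velocity = w0, 0.0
    for _ in range(n_steps):
        grad = 2 * w  # derivative of w**2
        velocity = momentum * velocity - learning_rate * grad
        w += velocity
    return w


# Same problem, same number of steps, different hyperparameters.
for lr, mom in [(0.01, 0.0), (0.01, 0.9), (0.1, 0.0), (1.1, 0.0)]:
    final_w = minimize_quadratic(lr, mom)
    print(f"lr={lr:<5} momentum={mom:<4} final w={final_w:.4g}")
```

In this toy run, a small learning rate converges slowly, adding momentum or raising the learning rate moderately gets closer to the minimum at 0 in the same number of steps, and a learning rate above 1 makes the iterates grow instead of shrink. Real loss surfaces are far less tidy, which is part of why tuning is hard.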

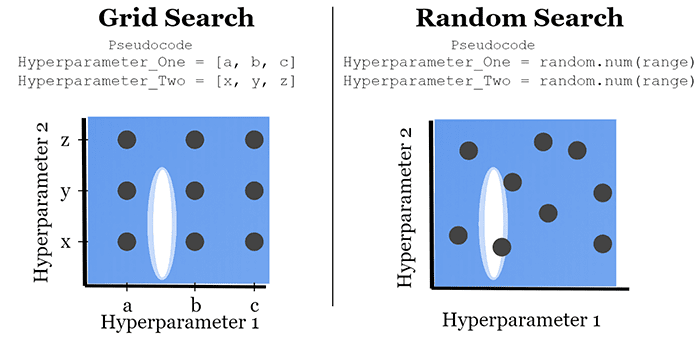

To combat this challenge, various techniques have emerged:

- Grid search: Exhaustive exploration of a predefined grid of possible hyperparameter values.
- Random search: Randomly sampling hyperparameter combinations from specified ranges or distributions. For the same number of trials, this often explores the important hyperparameters more thoroughly than a grid does.
- Bayesian optimization: Building a probabilistic model of how hyperparameters affect performance and using it to decide which combinations to try next.
- Gradient-based optimization: Using the gradients of performance metrics with respect to hyperparameters, where they can be computed, to guide the optimization process.
- Neural architecture search (NAS): Applying reinforcement learning or evolutionary algorithms to search for optimal neural network architectures.

In conclusion, hyperparameters play a crucial role in machine learning and deep learning models by influencing their behavior during training. Effective tuning of these parameters is essential for achieving good model performance on unseen data. As the field continues to evolve, we can expect more sophisticated methods for optimizing hyperparameters, enabling developers to build more accurate and robust AI systems.
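As a concrete illustration of grid search and random search, the two simplest techniques listed above, here is a sketch that uses only the Python standard library. The `validation_error` function is a hypothetical stand-in for "train a model and measure its validation error"; in a real project that step is where nearly all the time goes.

```python
import itertools
import random


def validation_error(learning_rate, depth):
    """Hypothetical stand-in for training a model and scoring it."""
    return (learning_rate - 0.1) ** 2 + 0.01 * (depth - 4) ** 2


# Grid search: evaluate every combination of the listed values.
grid = {"learning_rate": [0.001, 0.01, 0.1, 1.0], "depth": [2, 4, 6, 8]}
grid_trials = [
    dict(zip(grid, values)) for values in itertools.product(*grid.values())
]
best_grid = min(grid_trials, key=lambda p: validation_error(**p))

# Random search: sample a fixed budget of combinations from ranges.
rng = random.Random(0)  # seeded so the run is reproducible
random_trials = [
    {
        "learning_rate": 10 ** rng.uniform(-3, 0),  # log-uniform in [0.001, 1]
        "depth": rng.randint(2, 8),  # inclusive on both ends
    }
    for _ in range(16)
]
best_random = min(random_trials, key=lambda p: validation_error(**p))

print("grid search best:  ", best_grid)
print("random search best:", best_random)
```

Both searches use 16 trials here. The grid can only ever return values that were written into it, while random search can land anywhere in the sampled ranges.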

What are hyperparameters in Python?

Hyperparameters! A crucial concept in the world of machine learning and artificial intelligence. In this response, I'll delve into what hyperparameters are, why they're important, and how they impact your models.

What are hyperparameters?

In simple terms, hyperparameters are parameters that you set before training a machine learning model. They are not learned from the data; instead, they influence how the model learns from it. Think of them as knobs or levers you adjust to optimize your model's performance.

Common examples of hyperparameters include:

- Learning rate: The step size taken by the model during each iteration of gradient descent.
- Regularization strength: How strongly the training objective penalizes model complexity, which helps limit overfitting.
- Number of hidden layers or hidden layer sizes: These determine the complexity and depth of your neural network.
- Batch size: The number of samples used during each iteration of training.

These hyperparameters are crucial because they help you find the right balance between fitting the training data well and generalizing to new data, that is, avoiding both overfitting and underfitting.

Why are hyperparameters important?

- Model selection: Choices such as the kernel type or the number of layers effectively define a family of candidate models, and comparing them lets you focus on the most promising ones.
- Optimization: By adjusting hyperparameters, you can optimize your model's performance for specific tasks or datasets.
- Avoiding overfitting/underfitting: Properly set hyperparameters help prevent your model from fitting the training data too closely (overfitting) or from being too simple (underfitting).
- Model interpretability: Some hyperparameters, such as the maximum depth of a decision tree, affect how complex the fitted model is and therefore how easy it is to understand.

How do you work with hyperparameters in Python?

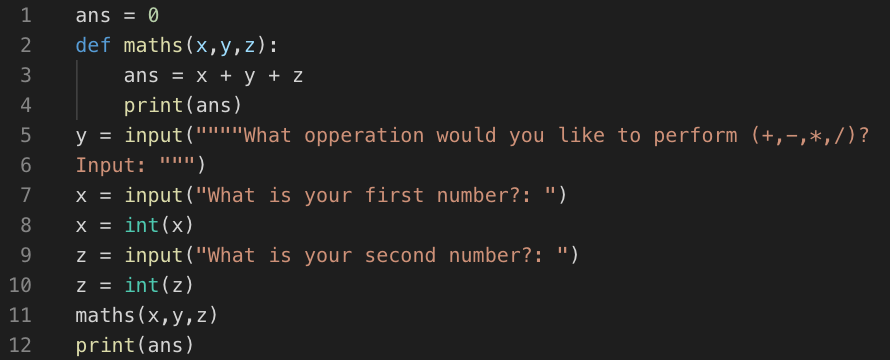

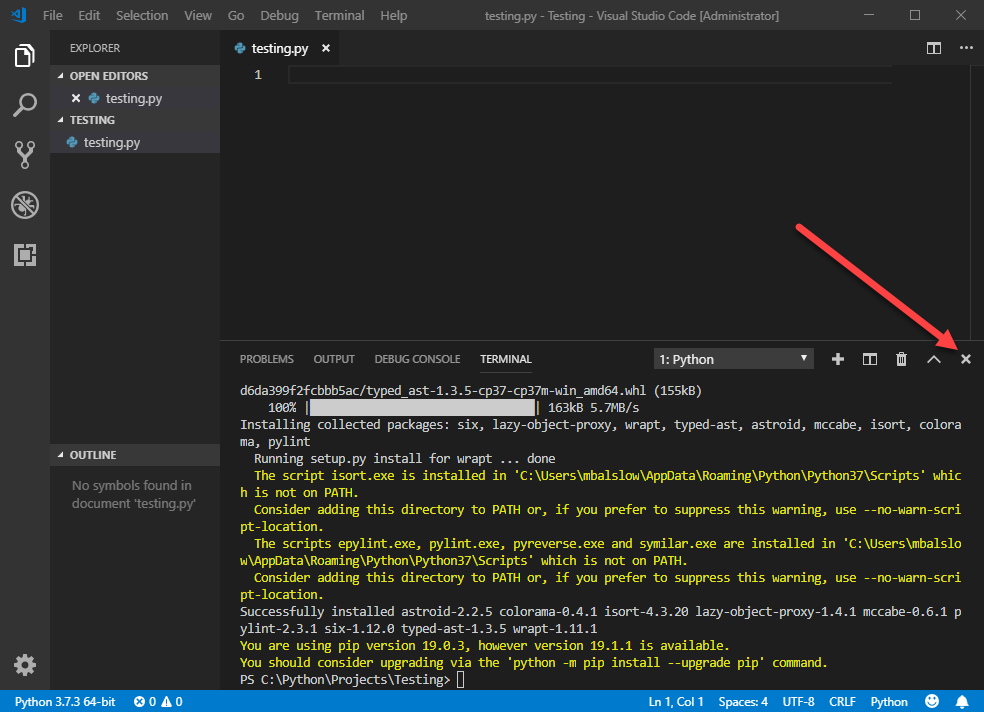

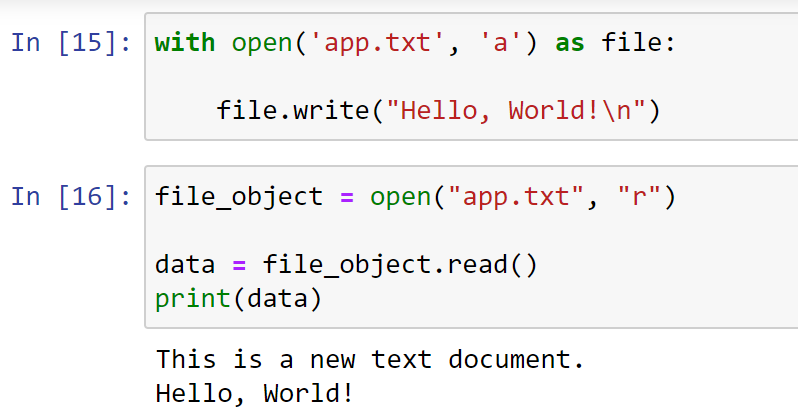

Python offers various libraries for machine learning and deep learning, each with its own approach to handling hyperparameters. Here are some popular ones:

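- Scikit-learn: Every estimator takes its hyperparameters as constructor arguments with default values. You can inspect them with the get_params method and adjust them with the set_params method.
- TensorFlow: In current TensorFlow (2.x), hyperparameters such as the learning rate are ordinary Python values that you pass to optimizers and layers when you build the model. Defining them as placeholders (e.g., learning_rate = tf.placeholder(tf.float32)) belongs to the older TensorFlow 1.x graph API. Either way, you choose the values using techniques like grid search or random search.
- Keras: Keras, a high-level neural networks API, likewise takes hyperparameters as arguments to layers, optimizers, and the fit method (for example, units per layer, learning rate, and batch size). Dedicated tools such as KerasTuner can search over them.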
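For scikit-learn specifically, the pattern looks roughly like the sketch below, assuming scikit-learn is installed. The estimator and the values chosen are arbitrary examples.

```python
from sklearn.ensemble import RandomForestClassifier

# Hyperparameters can be given at construction time...
forest = RandomForestClassifier(n_estimators=50, max_depth=3, random_state=0)

# ...inspected as a plain dictionary...
params = forest.get_params()
print("before:", params["n_estimators"], params["max_depth"])

# ...and changed later with set_params, which returns the estimator itself.
forest.set_params(n_estimators=200, max_depth=None)
print("after: ", forest.get_params()["n_estimators"], forest.get_params()["max_depth"])
```

Because every scikit-learn estimator exposes the same `get_params` / `set_params` interface, tuning utilities can work with any of them without knowing the model type in advance.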

When working with hyperparameters in Python, keep the following tips in mind:

- Grid search: Try out different combinations of hyperparameters to find the best-performing model.
- Random search: Sample a subset of possible hyperparameter values and evaluate their performance.
- Hyperparameter tuning libraries: Use libraries like optuna or hyperopt for more advanced optimization techniques.
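The grid search tip can be sketched with scikit-learn's `GridSearchCV`, assuming scikit-learn is installed. The iris dataset and the candidate values below are illustrative choices, not recommendations for any particular problem.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Candidate values to try for each hyperparameter (3 x 3 = 9 combinations).
param_grid = {"C": [0.1, 1, 10], "gamma": [0.01, 0.1, 1]}

# Each combination is scored with 5-fold cross-validation.
search = GridSearchCV(SVC(kernel="rbf"), param_grid, cv=5)
search.fit(X, y)

print("best hyperparameters:", search.best_params_)
print(f"best mean CV accuracy: {search.best_score_:.3f}")
```

scikit-learn's `RandomizedSearchCV` follows the same fit-then-inspect pattern for random search, taking distributions or lists to sample from plus a trial budget instead of a full grid.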

In conclusion, hyperparameters are essential components in machine learning and artificial intelligence. By understanding how to work with them in Python, you'll be better equipped to build accurate models that generalize well across different datasets and applications.