What is xgboost in python geeksforgeeks



XGBoost stands for Extreme Gradient Boosting. It is a popular open-source library for building and training machine learning models, especially for regression, classification, and ranking tasks. It was originally created by Tianqi Chen as a research project at the University of Washington, within the Distributed (Deep) Machine Learning Community (DMLC).

In Python, XGBoost offers both its own native API and a scikit-learn compatible interface, and it works directly with NumPy arrays and pandas DataFrames. Its efficient implementation makes it a common choice for large tabular datasets.

XGBoost is particularly useful when dealing with complex problems involving:

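Handling missing values: XGBoost accepts missing values (NaN) directly. Rather than imputing them, it learns at each tree split which branch missing entries should follow.

Dealing with categorical variables: Recent XGBoost versions offer built-in (initially experimental) support for categorical features when the columns use a categorical dtype and enable_categorical=True is set. Otherwise, categories must first be encoded numerically, for example with one-hot encoding. XGBoost also handles sparse, high-dimensional inputs efficiently.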

The key concepts that set XGBoost apart from other machine learning libraries include:

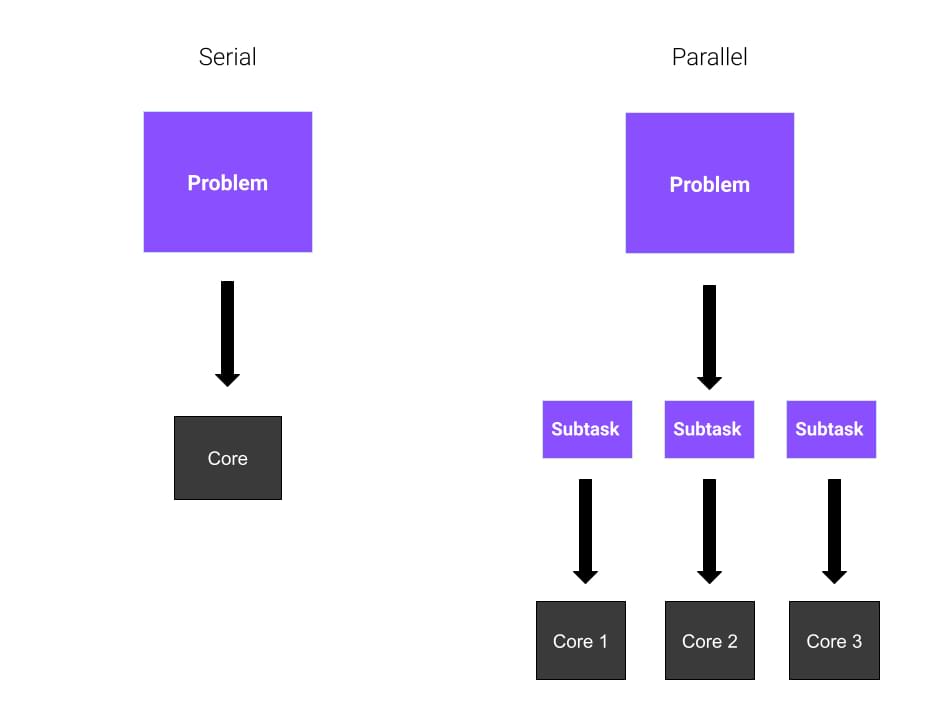

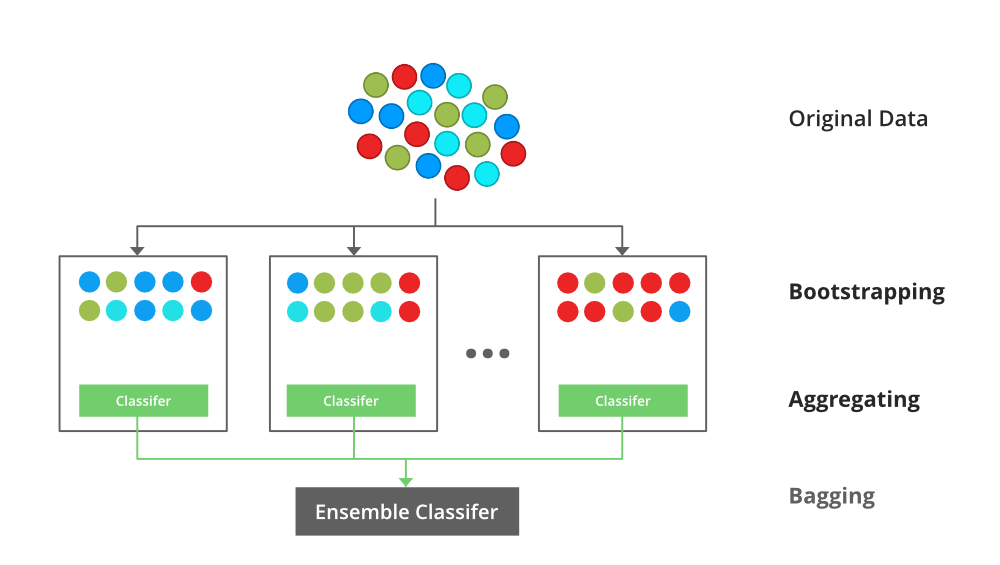

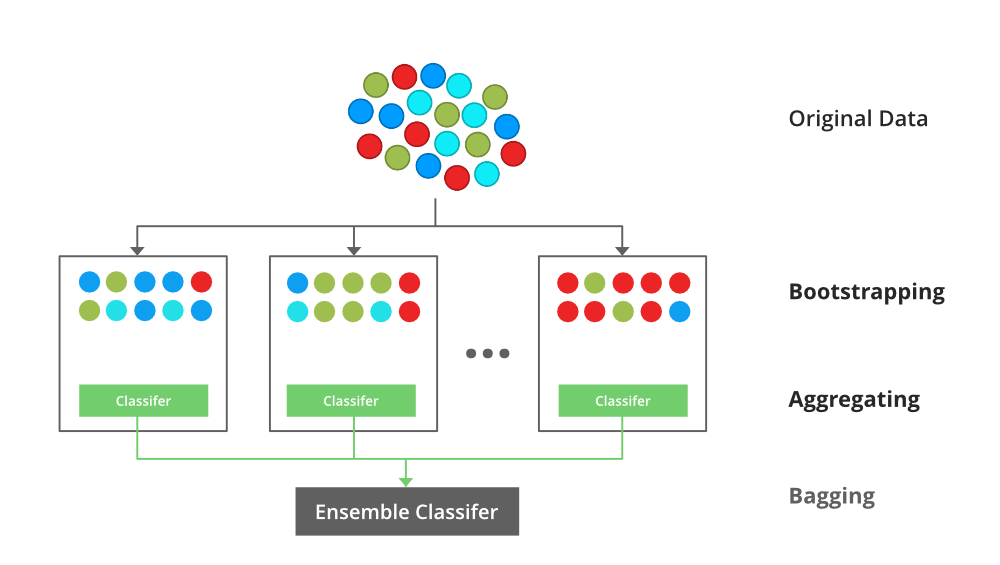

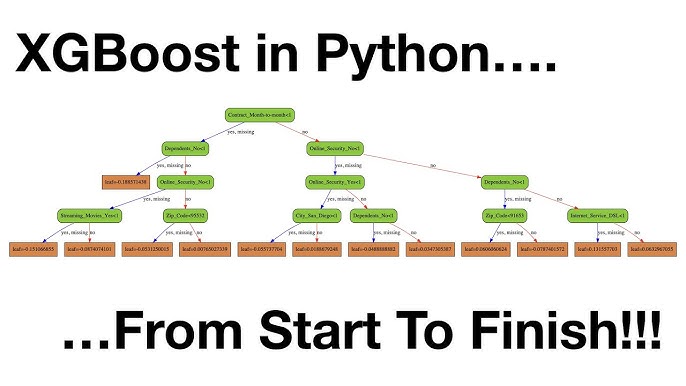

Gradient boosting: XGBoost uses gradient boosting, which builds an ensemble by adding decision trees one at a time, each new tree correcting the errors of the trees before it.

Tree-based models: Each tree in the ensemble is built by recursively partitioning the feature space. Splits are chosen to maximize a gain score computed from the gradients of the loss function, with a regularization penalty on tree complexity, rather than a criterion such as Gini impurity.

Gradient-based optimization: At each boosting round, XGBoost uses the first- and second-order derivatives (gradients and Hessians) of the loss function to decide how to grow the next tree, which helps minimize the training loss.

Python's XGBoost library provides a range of useful functions for working with XGBoost models, including:

train: xgb.train trains an XGBoost model (a Booster) on a dataset wrapped in a DMatrix.

predict: Booster.predict makes predictions using the trained XGBoost model.

eval: Booster.eval evaluates the performance of the XGBoost model on a dataset using the configured metrics (e.g., error rate, AUC).

plot_importance: xgb.plot_importance visualizes the feature importances learned by the XGBoost model (it requires matplotlib).

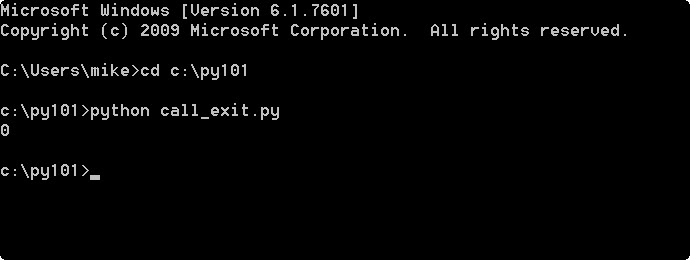

To get started with XGBoost in Python, you can install it via pip:

pip install xgboost

Once installed, you can begin using the XGBoost library to build and train machine learning models that tackle complex problems.

What is xgboost in python w3schools


XGBoost (Extreme Gradient Boosting) is a popular open-source library for gradient-boosted decision trees, originally developed by Tianqi Chen and now maintained under the DMLC (Distributed Machine Learning Community) project. In Python, XGBoost is available as the xgboost package, which provides a simple and efficient way to train and evaluate gradient boosted tree models.

What is XGBoost?

Gradient boosting is an ensemble learning algorithm that combines multiple weak predictive models (typically shallow decision trees) into a strong one by summing their predictions. The main idea behind XGBoost is to iteratively fit a series of decision trees, each one trained on the residuals of the trees before it (more generally, on the gradient of the loss), which allows the model to capture complex interactions and non-linear relationships between features.
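To make the residual-fitting idea concrete, here is a minimal from-scratch sketch of gradient boosting for squared error, using one-split trees (stumps) on a single feature. It is not XGBoost's actual algorithm, which adds regularization and second-order gradient information. All function names and the toy data are hypothetical and exist only for this illustration.

```python
def fit_stump(xs, residuals):
    """Find the single threshold split on xs that minimizes squared error."""
    best = None
    for threshold in sorted(set(xs)):
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        if not left or not right:
            continue  # a valid split needs samples on both sides
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return threshold, left_mean, right_mean


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def boost(xs, ys, n_rounds=50, learning_rate=0.1):
    """Fit stumps one after another, each on the current residuals."""
    base = sum(ys) / len(ys)          # start from the mean prediction
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * predict_stump(stump, x)
                       for p, x in zip(predictions, xs)]
    return base, learning_rate, stumps


def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)


# Toy data (an assumption for illustration): y = x^2 on ten points.
xs = list(range(10))
ys = [x ** 2 for x in xs]

model = boost(xs, ys)
mse_before = mse(ys, [sum(ys) / len(ys)] * len(ys))
mse_after = mse(ys, [predict(model, x) for x in xs])
print(f"MSE with mean only: {mse_before:.1f}, after boosting: {mse_after:.1f}")
```

Each round shrinks the remaining error a little, and the learning rate controls how much of each stump's correction is applied.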

Key Features:

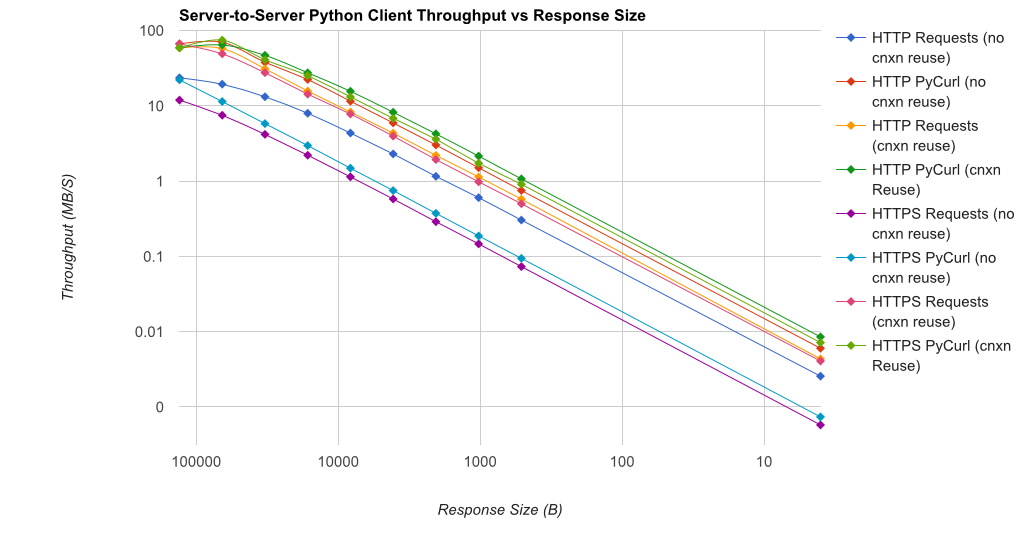

Efficiency: XGBoost uses an optimized, parallelized implementation that scales well to large datasets with millions of records.

Interpretability: By examining individual decision trees and feature importance scores, you can identify the most influential factors driving predictions.

Handling Missing Values: XGBoost handles missing values natively. Instead of imputing a mean or median, it learns at each split which branch missing entries should follow.

How to use XGBoost in Python:

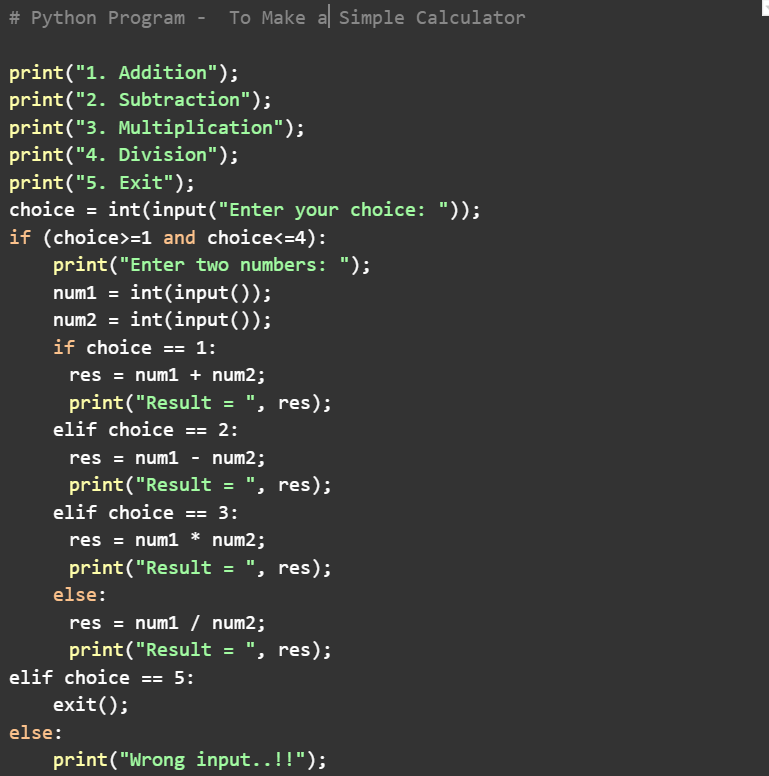

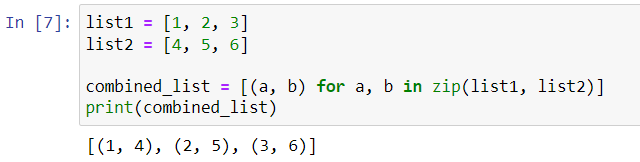

Installation: pip install xgboost

Import Library: import xgboost as xgb

Data Preparation: Prepare your dataset by converting categorical variables into numerical representations (e.g., one-hot encoding) and splitting it into training and testing sets.

Model Training: Train an XGBoost model using the xgb.XGBRegressor class, specifying parameters such as the learning rate (learning_rate), the maximum depth of trees (max_depth), and the number of boosting rounds (n_estimators).

    import xgboost as xgb
    from sklearn.metrics import mean_squared_error
    from sklearn.model_selection import train_test_split

    # X (features) and y (target) are assumed to be loaded already.

    # Split data into training and testing sets
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # Train an XGBoost model
    xgb_model = xgb.XGBRegressor(max_depth=6, learning_rate=0.1, n_estimators=1000)
    xgb_model.fit(X_train, y_train)

    # Evaluate the model on the testing set
    y_pred = xgb_model.predict(X_test)
    print("Test MSE:", mean_squared_error(y_test, y_pred))

Advantages:

State-of-the-Art Performance: XGBoost is known for achieving state-of-the-art results in many machine learning competitions, particularly on tabular data.

Flexibility: The library exposes many hyperparameters, allowing you to fine-tune models for specific problems.

Integration with scikit-learn: XGBoost provides estimators that follow scikit-learn's API, making it easy to incorporate into existing workflows.

Disadvantages:

Steep Learning Curve: Using XGBoost well requires a good understanding of gradient boosting and decision trees.

Computational Intensity: Training large models can be computationally expensive, especially on limited hardware.

In conclusion, XGBoost is an excellent choice for Python developers seeking to build high-performance machine learning models. By leveraging the library's strengths in gradient boosting and handling missing values, you'll be well-equipped to tackle a wide range of problems, from regression tasks to classification challenges.