Python gensim tutorial geeksforgeeks


This guide introduces Gensim and walks through using it for topic modeling and document similarity analysis:

What is Gensim?

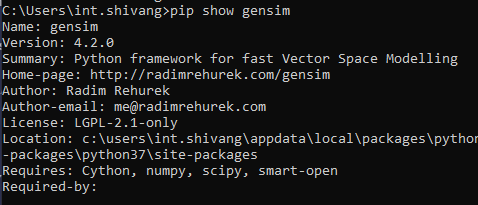

Gensim is an open-source Python library created by Radim Řehůrek; its name comes from "generate similar", its original purpose of finding similar articles. It provides scalable tools for processing large volumes of text and focuses on unsupervised topic modeling and document similarity analysis, which let you uncover hidden (latent) patterns in a collection of documents.

Why Use Gensim?

Gensim offers several advantages:

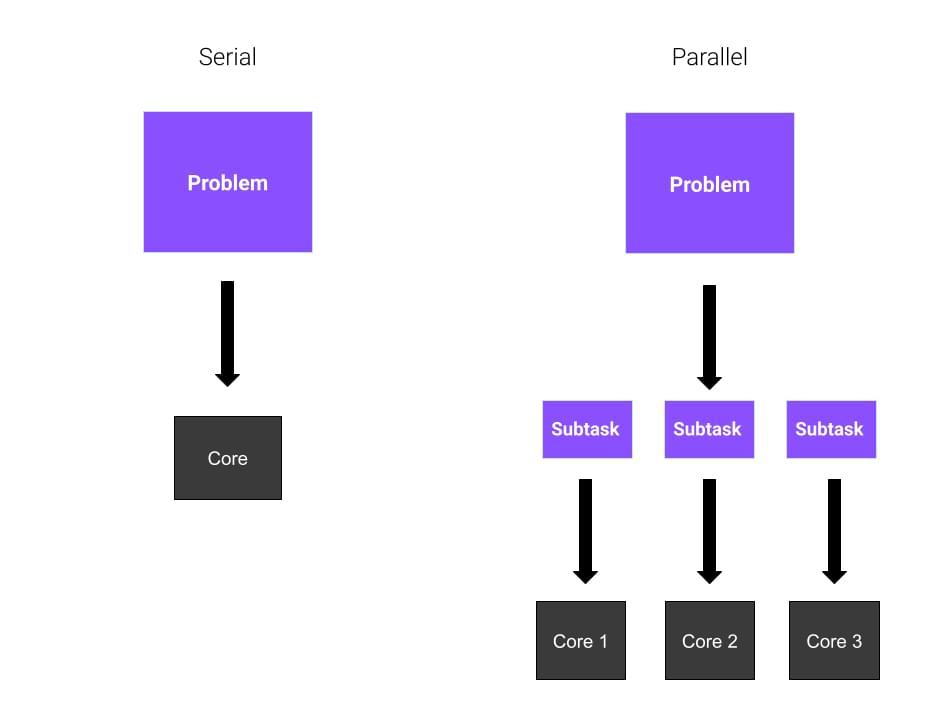

Scalability: Gensim's algorithms stream documents one at a time instead of loading the whole corpus into memory, so they can process text collections larger than RAM.

Customizability: Each model exposes its training parameters (for example, the number of topics and training passes for LDA), so you can tailor the analysis to your data.

Interoperability: Gensim integrates with other Python libraries such as NumPy and SciPy, which makes it easy to incorporate into an existing workflow.

Basic Concepts

Before diving into the tutorial, let's cover some fundamental concepts:

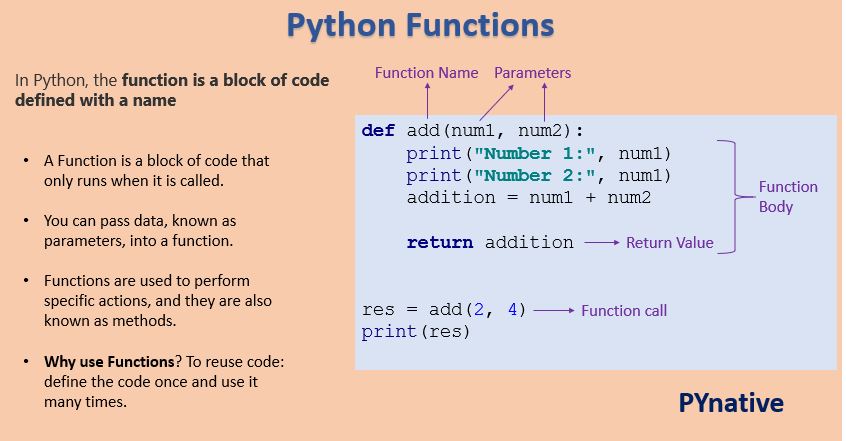

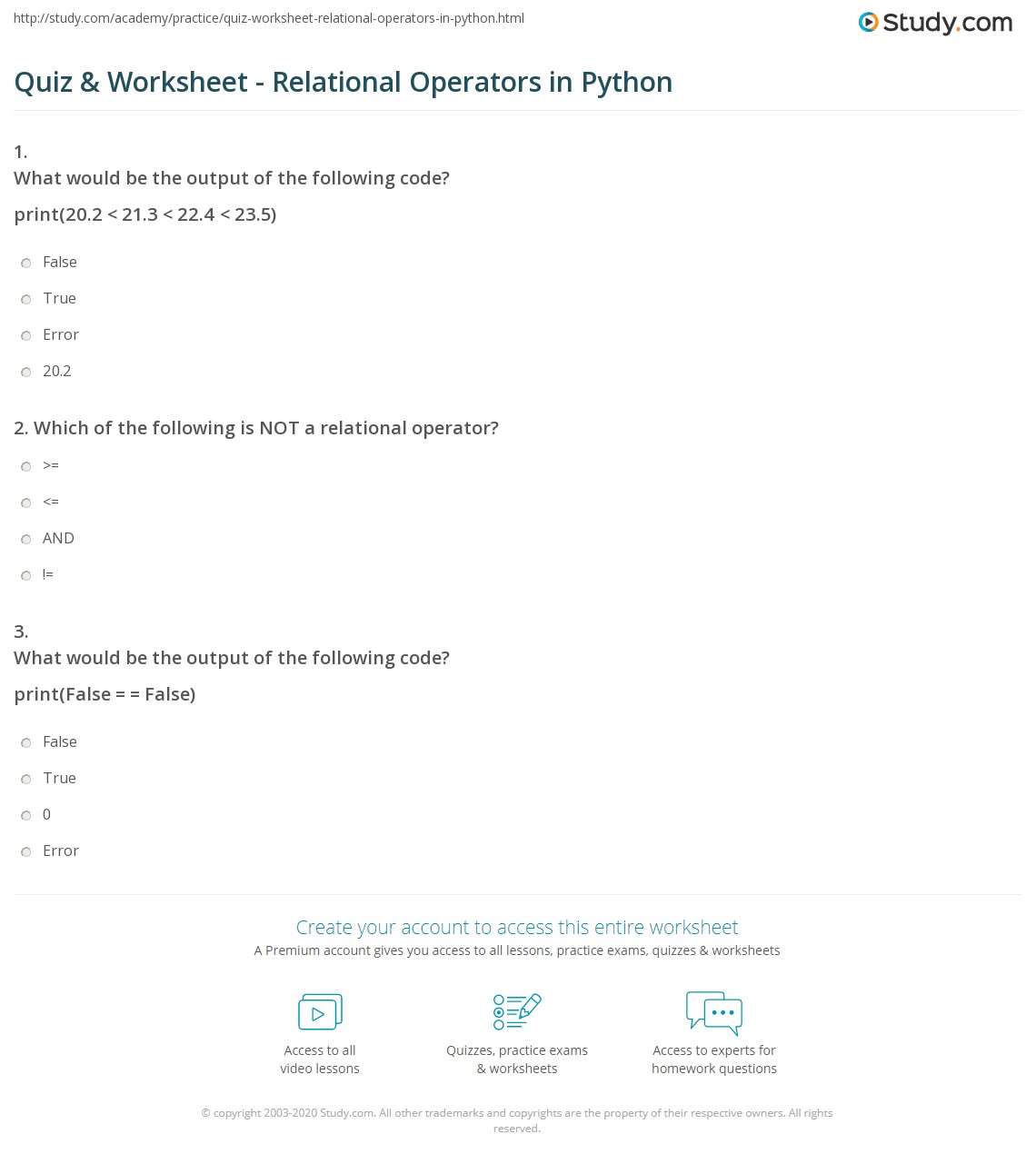

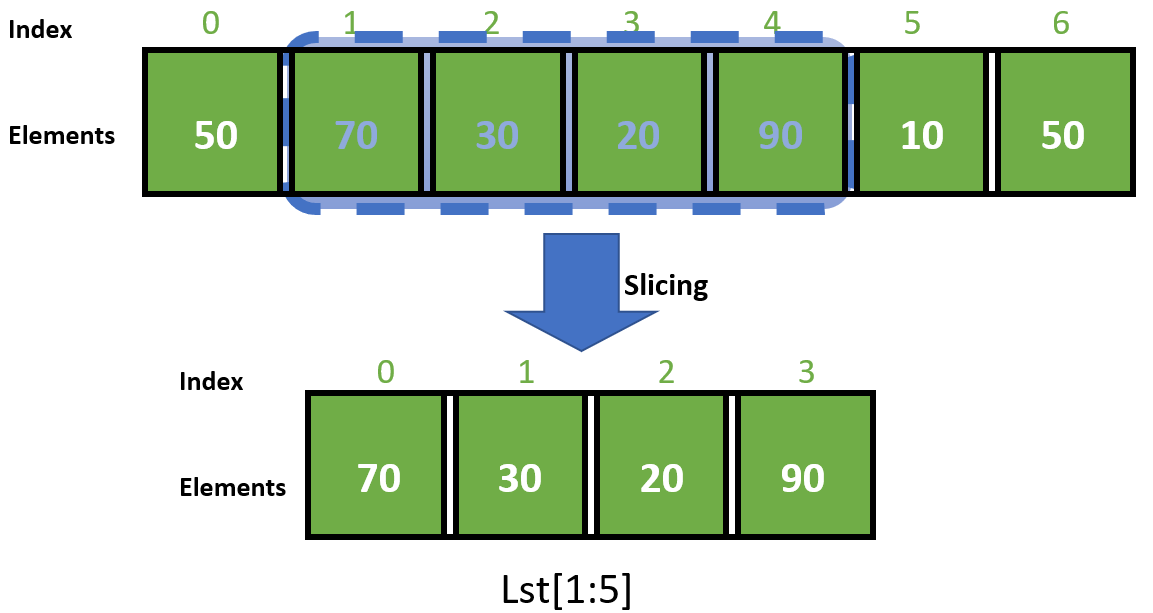

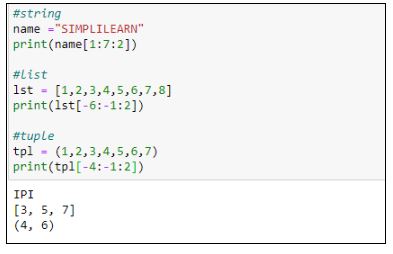

Tokens: The individual units of text, usually words, that make up a document.

Bag-of-words: A representation of a document as its unique tokens and how many times each one occurs, ignoring word order. In Gensim, a bag-of-words vector is a list of (token_id, count) pairs.

Topics: Latent structures, usually expressed as probability distributions over words, that capture underlying patterns in the data.

Tutorial

We'll now work through an example using Gensim to analyze a collection of articles:

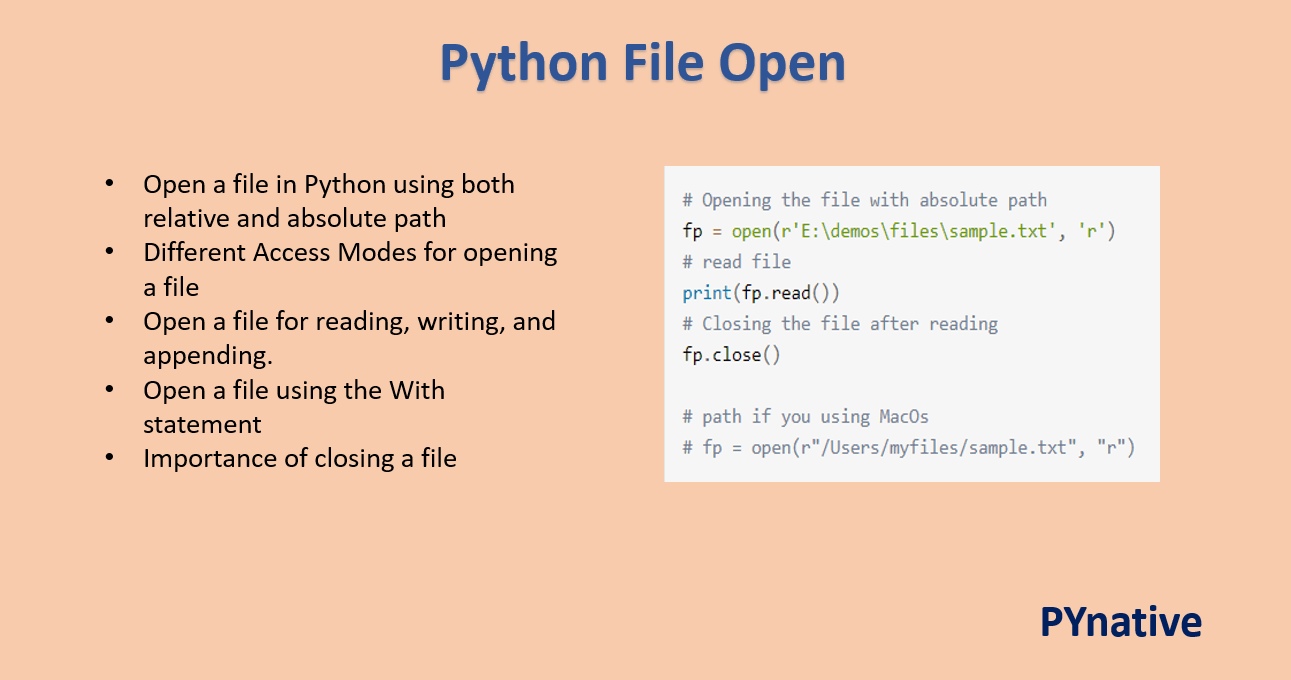

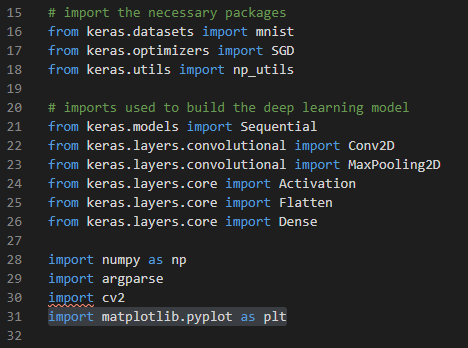

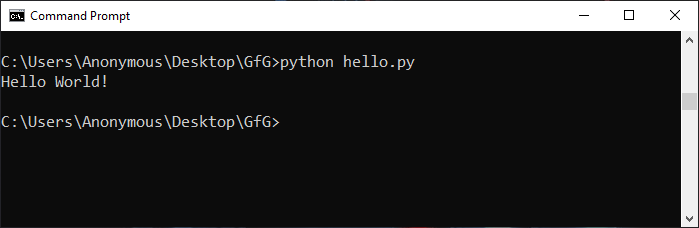

Importing Libraries

import gensim

import numpy as np

from gensim import corpora

from gensim.matutils import corpus2dense

Create a list of documents (e.g., articles), tokenize each one with Gensim's simple_preprocess function, and then convert the token lists into bag-of-words vectors with a Dictionary, which maps each token to an integer ID:

docs = [...]

documents = [gensim.utils.simple_preprocess(doc, min_len=2, max_len=10000) for doc in docs]

id2word = corpora.Dictionary(documents)

corpus = [id2word.doc2bow(doc) for doc in documents]
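To make the bag-of-words format concrete, here is a plain-Python sketch of what Dictionary and doc2bow compute: every distinct token gets an integer ID, and each document becomes a sorted list of (token_id, count) pairs. The helper names build_vocabulary and to_bow are hypothetical, and the IDs here follow first appearance, whereas Gensim's own Dictionary may number tokens in a different order.

```python
from collections import Counter


def build_vocabulary(tokenized_docs):
    """Assign an integer ID to each distinct token, in order of first appearance."""
    token2id = {}
    for tokens in tokenized_docs:
        for token in tokens:
            if token not in token2id:
                token2id[token] = len(token2id)
    return token2id


def to_bow(tokens, token2id):
    """Return a sparse bag-of-words vector as sorted (token_id, count) pairs.

    Tokens that are missing from the vocabulary are ignored.
    """
    counts = Counter(token2id[t] for t in tokens if t in token2id)
    return sorted(counts.items())


# Hypothetical, already-tokenized documents.
docs = [["cat", "sat", "on", "the", "mat"], ["the", "cat", "ate", "the", "fish"]]
vocab = build_vocabulary(docs)
bow = [to_bow(doc, vocab) for doc in docs]
print(bow)
```

The second document contains "the" twice, so its vector holds the pair (3, 2) rather than two separate entries.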

Train a topic model on the bag-of-words corpus. If you do not set num_topics, LdaModel defaults to 100 topics:

# Train a topic model using Latent Dirichlet Allocation (LDA)

lda_model = gensim.models.ldamodel.LdaModel(corpus=corpus, id2word=id2word, passes=15)

Extract the top words for each topic:

# Topic-term matrix: one row per topic, one column per word ID (each row sums to 1)

topics_weights = lda_model.get_topics()

# Extract the 10 highest-weighted words for each topic

top_words_per_topic = [[id2word[i] for i in np.argsort(topic)[::-1][:10]] for topic in topics_weights]
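To compare topics with each other, one simple option (an assumption here, not part of the original walkthrough) is the cosine similarity between rows of the topic-term matrix returned by get_topics(). The plain-Python sketch below works on lists, so a NumPy matrix would first be converted with .tolist(); the 3-topic matrix is hypothetical. Gensim also offers LdaModel.diff for comparing topics with other distance measures.

```python
import math


def cosine(u, v):
    """Cosine similarity of two dense vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def topic_similarity_matrix(topic_term_rows):
    """Pairwise cosine similarity between topics (rows of a topic-term matrix)."""
    return [[cosine(row_i, row_j) for row_j in topic_term_rows] for row_i in topic_term_rows]


# Hypothetical 3-topic, 4-word distributions (each row sums to 1).
topics = [
    [0.7, 0.1, 0.1, 0.1],
    [0.6, 0.2, 0.1, 0.1],
    [0.0, 0.0, 0.5, 0.5],
]
sims = topic_similarity_matrix(topics)
for row in sims:
    print([round(s, 3) for s in row])
```

The first two topics put most of their weight on the same word, so they score much closer to each other than either does to the third topic.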

Calculate the similarity between documents using the cosine similarity metric:

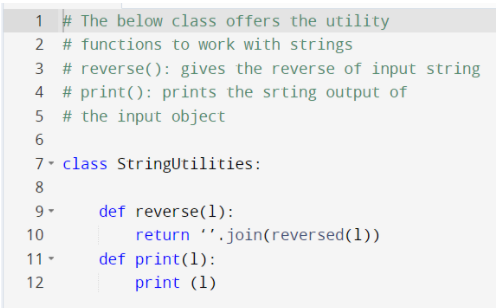

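def calculate_similarity(doc_a, doc_b):
    """Cosine similarity of two bag-of-words vectors, e.g. corpus[0] and corpus[1]."""
    # Convert the documents into dense vectors of length len(id2word);
    # corpus2dense expects a corpus, so wrap each document in a list
    vec_a = corpus2dense([doc_a], num_terms=len(id2word))[:, 0]
    vec_b = corpus2dense([doc_b], num_terms=len(id2word))[:, 0]
    # Calculate the cosine similarity
    dot_product = np.dot(vec_a, vec_b)
    magnitude_a = np.sqrt(np.dot(vec_a, vec_a))
    magnitude_b = np.sqrt(np.dot(vec_b, vec_b))
    if magnitude_a == 0 or magnitude_b == 0:
        return 0.0  # an empty document has no direction; treat it as dissimilar
    return dot_product / (magnitude_a * magnitude_b)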

# Calculate the similarity of two documents (here, the first two in the corpus)

doc1, doc2 = corpus[0], corpus[1]

doc_similarity = calculate_similarity(doc1, doc2)
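The dense conversion allocates a vector as long as the whole vocabulary for every comparison. Because bag-of-words vectors are sparse, cosine similarity can also be computed directly on the (token_id, count) pairs; Gensim's gensim.matutils.cossim does this for BoW vectors. A plain-Python sketch of the same calculation, with a hypothetical function name and example vectors:

```python
import math


def sparse_cosine(bow_a, bow_b):
    """Cosine similarity of two bag-of-words vectors given as (token_id, weight) pairs.

    Works directly on the sparse form, so no dense vector of length
    len(id2word) is built. Returns 0.0 if either vector is empty.
    """
    a, b = dict(bow_a), dict(bow_b)
    # Only token IDs present in both documents contribute to the dot product.
    dot = sum(weight * b[token_id] for token_id, weight in a.items() if token_id in b)
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


doc1 = [(0, 1), (1, 1), (3, 1)]
doc2 = [(0, 1), (3, 2), (5, 1)]
print(round(sparse_cosine(doc1, doc2), 4))  # 0.7071
```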

Conclusion

In this tutorial, we've explored the basics of Gensim and demonstrated how to:

Load and preprocess text data

Train a topic model using Latent Dirichlet Allocation (LDA)

Inspect the learned topics through their top words

Calculate document similarity using cosine similarity

With Gensim, you can uncover hidden patterns in your data, identify key topics, and analyze relationships between documents. Its streaming design and range of models make it a good fit for large-scale text analytics.

Gensim Python

Gensim is a popular open-source Python library for natural language processing and topic modeling. It builds semantic representations of text with unsupervised statistical models and is designed for large corpora, which makes it well suited to tasks such as topic modeling, document similarity search, and document clustering.

Some of the key features of Gensim include:

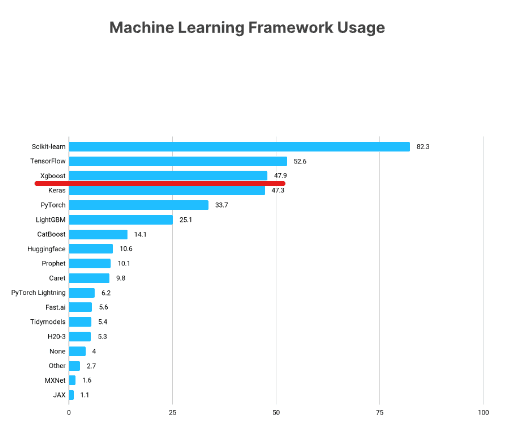

Topic Modeling: Gensim provides implementations of popular topic modeling algorithms like Latent Dirichlet Allocation (LDA), Latent Semantic Analysis (LSA), and Non-Negative Matrix Factorization (NMF). These algorithms allow you to discover latent topics or themes in large collections of text. Document Clustering: Gensim can cluster documents based on their semantic similarity, helping you group related texts together and identify patterns within your dataset. Text Preprocessing: Gensim provides tools for preprocessing text data, including tokenization, stemming, lemmatization, and stopword removal. This helps prepare your text for topic modeling or document clustering tasks. Vector Space Modeling: Gensim supports various vector space models (VSMs) like bag-of-words, TF-IDF, and word embeddings. These VSMs enable you to represent texts as numerical vectors that can be manipulated using linear algebra operations. Parallelization: Gensim offers built-in support for parallelizing computations using the NumPy and SciPy libraries. This can significantly speed up processing times when working with large datasets.Gensim has many applications in natural language processing, information retrieval, and data analysis. For instance:

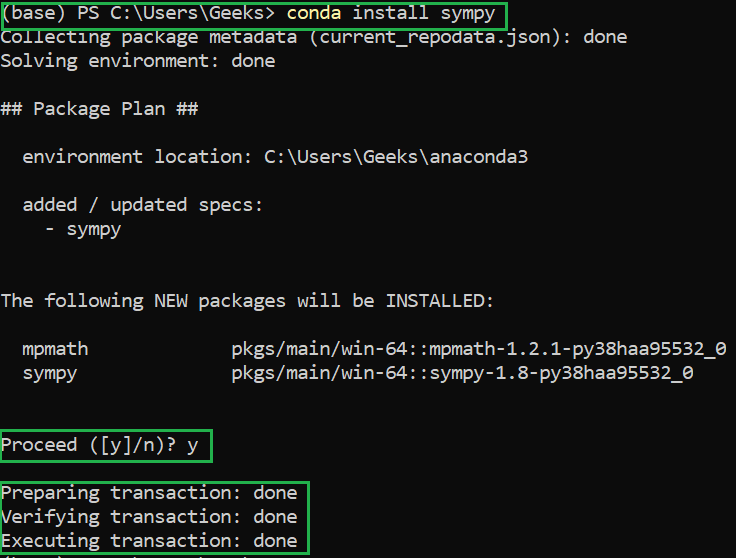

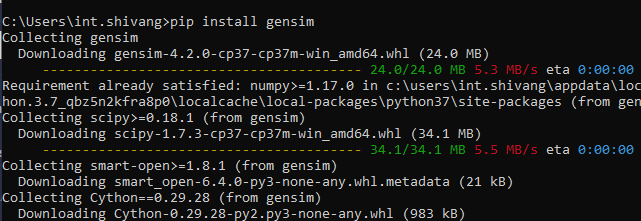

Sentiment Analysis: Use Gensim to analyze customer feedback or social media posts by identifying topics related to sentiment, such as "positive" or "negative". Information Retrieval: Employ Gensim to rank documents based on relevance to a given query or topic. Document Summarization: Utilize Gensim's topic modeling capabilities to generate summaries of long documents by selecting the most important sentences or phrases.To get started with Gensim, you can install it via pip: pip install gensim. Then, explore its documentation and examples on GitHub. Some popular use cases for Gensim include:

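For more advanced users, Gensim fits into larger workflows: its corpora are ordinary Python iterables, and helpers in gensim.matutils (such as corpus2dense, corpus2csc, and Sparse2Corpus) convert between Gensim's sparse format and NumPy or SciPy matrices, so results can be passed to other natural language processing and machine learning tools.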

Remember to check out the Gensim documentation and GitHub repository for tutorials, examples, and more information on how to get started with this powerful library!