Can you run Python in parallel?

Yes. Python programs can run in parallel using several techniques and libraries. Parallel execution can substantially speed up computationally intensive tasks and work over large datasets, provided the work can be split into independent pieces.

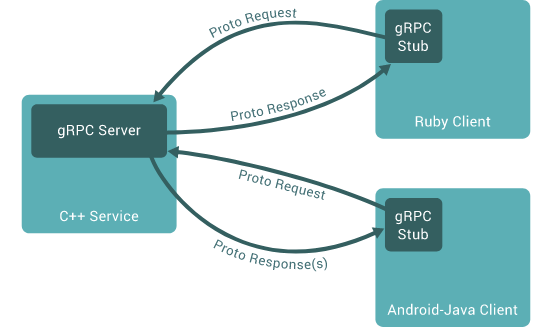

Concurrency vs Parallelism

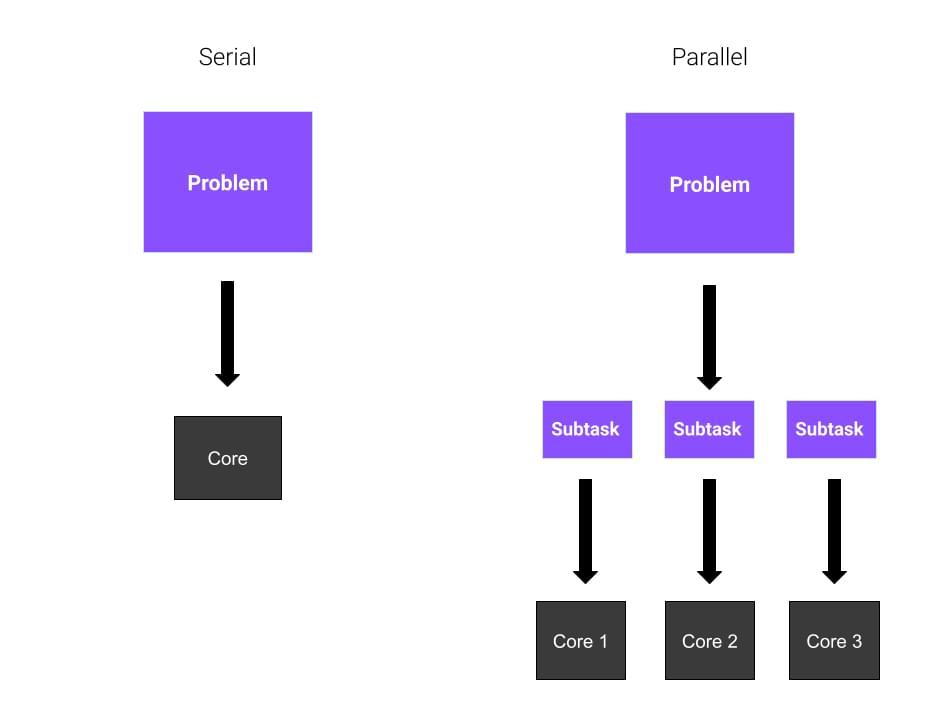

Before diving into the details, let's clarify the difference between concurrency and parallelism:

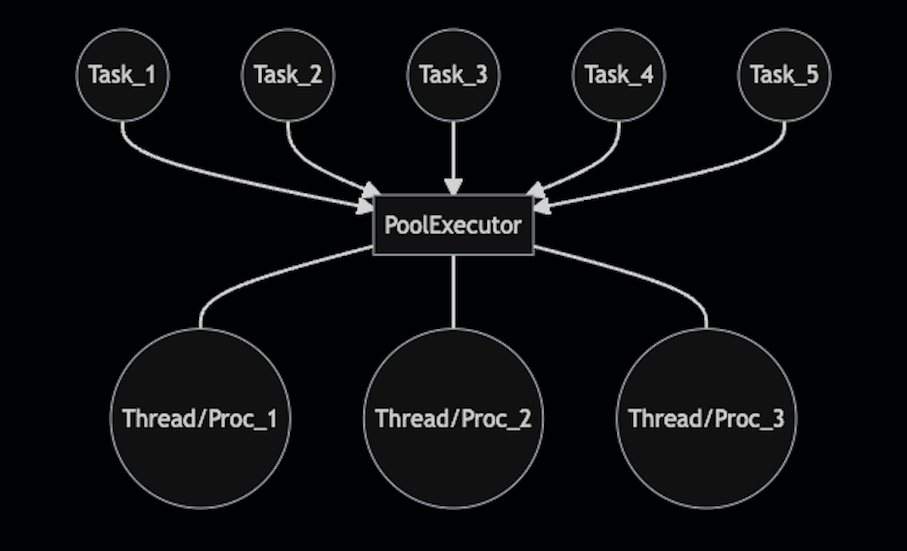

Concurrency: Structuring a program so that multiple tasks make progress during overlapping time periods, typically by interleaving them while they share resources like CPU, memory, or I/O. The tasks do not have to run at the same instant. In Python, you can achieve concurrency using libraries like asyncio or concurrent.futures.

Parallelism: Dividing a task into smaller subtasks that can be executed independently on different cores or nodes of a cluster, allowing for true simultaneous execution.

Parallel Processing in Python

There are several ways to run Python code in parallel:

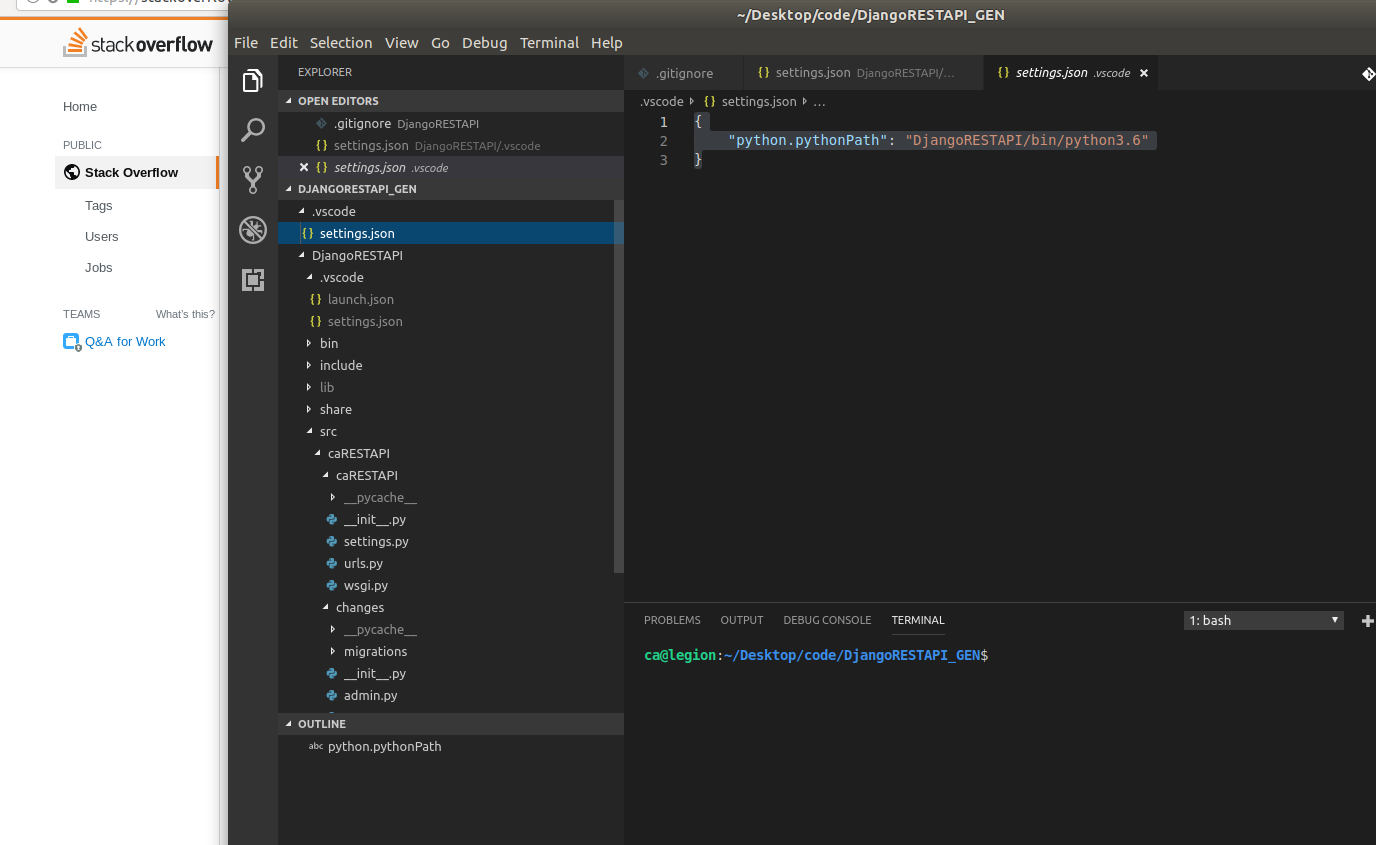

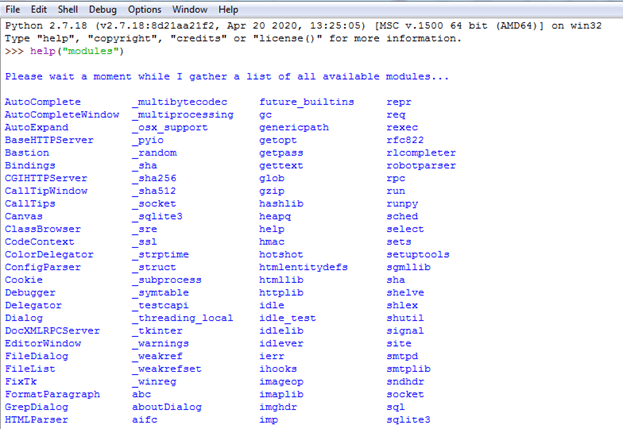

Multiprocessing: The multiprocessing module in the standard library lets you create multiple processes, each with its own Python interpreter, so they can run in parallel on separate CPU cores. You can use the Pool class to divide your task into subtasks and distribute them across worker processes.

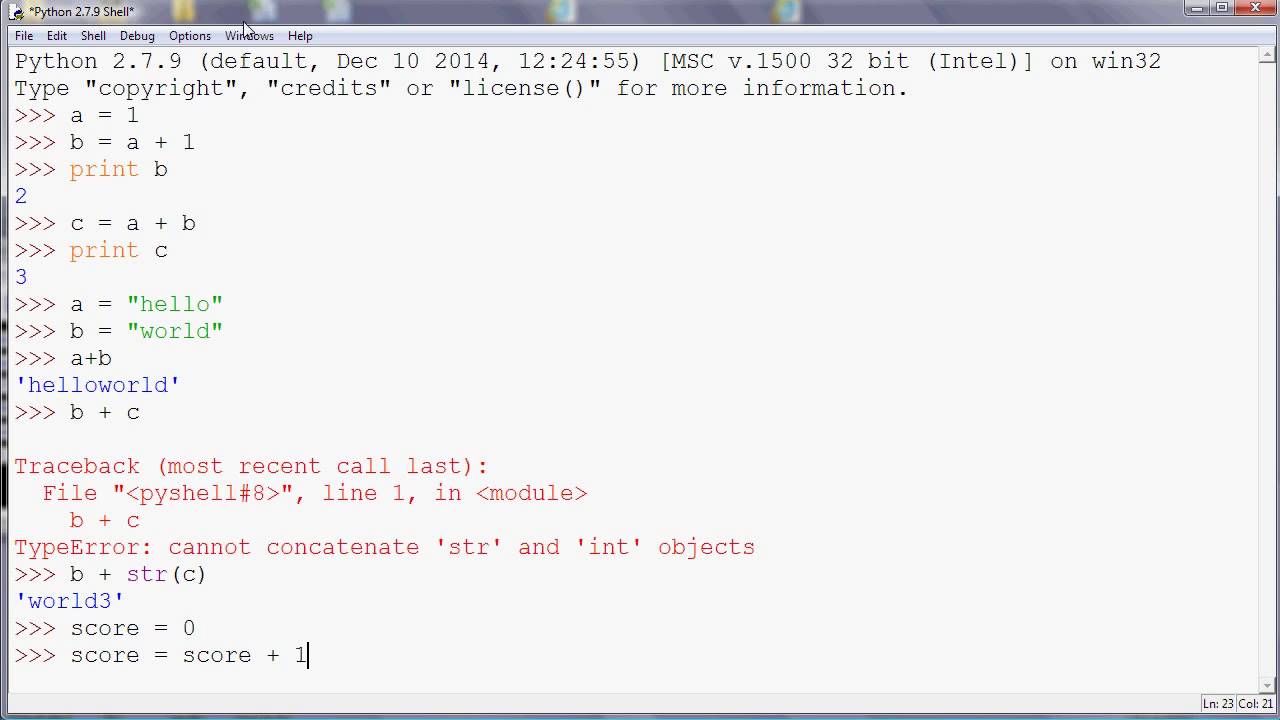

    import multiprocessing

    def worker(x):
        return x * 2

    if __name__ == '__main__':
        # The guard keeps worker processes from re-running this block on import.
        with multiprocessing.Pool(processes=4) as pool:
            results = pool.map(worker, [1, 2, 3, 4])
        print(results)

In this example, we create a pool of 4 processes and use the map method to execute the worker function on each input value in parallel.
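The same map-style pattern is also available through the standard library's concurrent.futures module. The sketch below is illustrative rather than definitive: the helper names `double` and `run_with` are hypothetical, and the point is only that swapping the executor class switches between thread-based concurrency and process-based parallelism.

```python
from concurrent.futures import Executor, ProcessPoolExecutor, ThreadPoolExecutor
from typing import Iterable, List, Type


def double(x: int) -> int:
    """Stand-in for real work; defined at module level so it can be pickled."""
    return x * 2


def run_with(executor_cls: Type[Executor], values: Iterable[int],
             max_workers: int = 4) -> List[int]:
    """Apply double() to every value using the given executor class."""
    with executor_cls(max_workers=max_workers) as executor:
        # Executor.map yields results in input order, like Pool.map.
        return list(executor.map(double, values))


if __name__ == "__main__":
    data = [1, 2, 3, 4]
    # Threads: concurrent, but in standard CPython builds the GIL lets only
    # one thread execute Python bytecode at a time.
    print(run_with(ThreadPoolExecutor, data))
    # Processes: each worker has its own interpreter, so CPU-bound work
    # can run on separate cores.
    print(run_with(ProcessPoolExecutor, data))
```

For CPU-bound work the process-based executor is usually the one that helps; the thread-based one tends to pay off when tasks spend most of their time waiting on I/O.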

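Joblib: The third-party joblib library wraps the same map-style pattern in a Parallel helper that runs the iterations of a loop across workers.

    from joblib import Parallel, delayed

    def compute_value(x):
        # Simulate some CPU-bound computation
        return x * 2

    if __name__ == '__main__':
        results = Parallel(n_jobs=4)(delayed(compute_value)(i) for i in range(10))
        print(results)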

In this example, we use Joblib's Parallel class to execute the compute_value function on each input value in parallel.

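Dask: The third-party dask library builds a graph of lazy tasks and hands it to a scheduler for execution.

    import dask
    from dask import delayed

    @delayed
    def compute_value(x):
        # Simulate some CPU-bound computation
        return x * 2

    if __name__ == '__main__':
        # Each call returns a lazy Delayed object; nothing runs until compute().
        tasks = [compute_value(i) for i in range(10)]
        results = dask.compute(*tasks)
        print(results)

In this example, we use Dask's @delayed decorator to make compute_value lazy: calling it records a task instead of running it. The dask.compute function then executes the recorded tasks and returns their results as a tuple. Delayed tasks run on a thread-based scheduler by default; for CPU-bound pure-Python work, passing scheduler="processes" to dask.compute runs them in separate processes instead.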

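Ray: The third-party ray library runs functions as remote tasks on a pool of worker processes, either locally or across a cluster.

    import ray

    @ray.remote
    def compute_value(x):
        # Simulate some CPU-bound computation
        return x * 2

    if __name__ == '__main__':
        ray.init()  # start a local Ray runtime
        # .remote() submits a task and immediately returns an object reference.
        futures = [compute_value.remote(i) for i in range(10)]
        results = ray.get(futures)  # block until all tasks have finished
        print(results)

In this example, we use Ray's @ray.remote decorator to turn compute_value into a remote function. A remote function cannot be called directly; calling compute_value.remote(i) in the list comprehension submits each task and returns a reference right away, so the tasks can run in parallel. ray.get then waits for the tasks and collects their results.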

In summary, Python offers several ways to run code in parallel: the built-in multiprocessing module and third-party libraries such as Joblib, Dask, and Ray. The choice of library depends on the specific requirements of your project, such as the type of computation, data size, and performance goals.

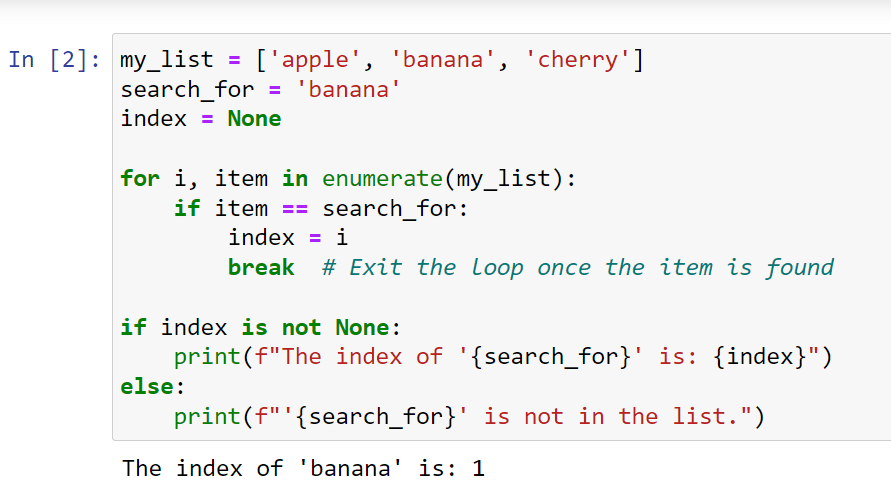

Python concurrent example

Concurrency is the ability of a program to make progress on multiple tasks during overlapping periods of time, which can improve its responsiveness and overall throughput. Python provides several ways to achieve concurrency, including:

Multiple processes: Use the os module's fork() function to create child processes that run alongside the parent. Note that fork() is available only on Unix-like systems.

Threading: Use Python's built-in threading module to manage threads within a single process.

Let's explore a simple example that demonstrates concurrency using both processes and threads:

import os

import threading

def worker(process_id):

print(f"Process {process_id} is running...")

for i in range(5):

print(f"Process {process_id}: iteration {i+1}")

print(f"Process {process_id} finished.")

def thread_worker(thread_id):

print(f"Thread {thread_id} is running...")

for i in range(3):

print(f"Thread {thread_id}: iteration {i+1}")

print(f"Thread {thread_id} finished.")

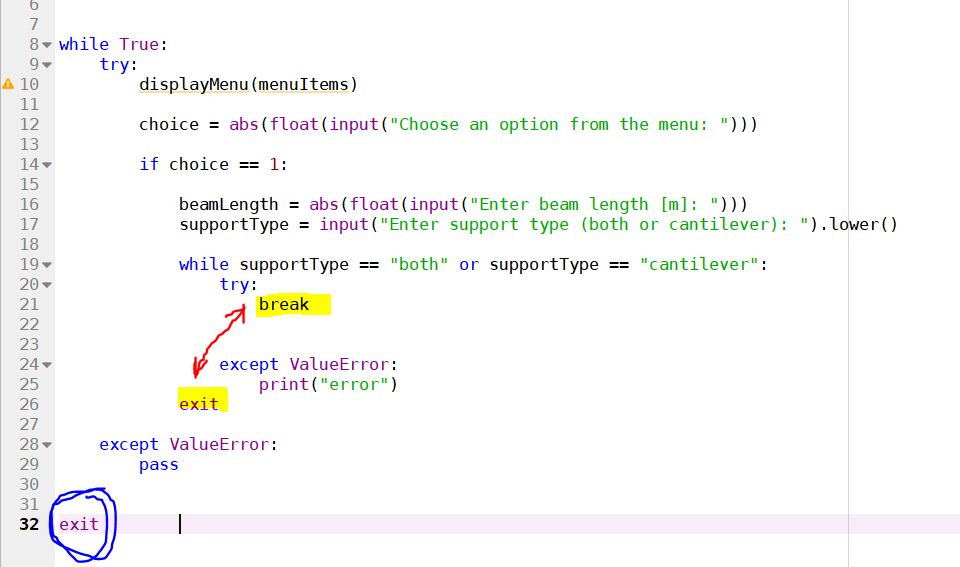

if name == "main":

Create processes

process_ids = []

for i in range(os.cpu_count()):

p = os.fork()

if p: # parent process

process_ids.append(i)

else: # child process (worker)

worker(len(process_ids))

exit() # terminate the child process

Create threads

thread_ids = []

for i in range(5):

t = threading.Thread(target=thread_worker, args=(i,))

t.start()

thread_ids.append(i)

for pid in process_ids:

os.kill(pid, 0) # signal each process to exit

for tid in thread_ids:

t = threading.Thread(name=f"Thread {tid}", target=lambda: None)

t.start() # wait for threads to finish before exiting

This example demonstrates the following:

Multiple processes: The program creates multiple child processes, which run concurrently with the parent process. Each child process runs a separate instance of the worker() function, performing five iterations.

Threading: The program also creates multiple threads within the main process. Each thread runs the thread_worker() function, performing three iterations.

In this example, both processes and threads are executing concurrently, showcasing Python's capabilities in handling concurrency.
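Processes and threads are not the only options. The asyncio library named at the start of this article runs many tasks concurrently on a single thread. The following is a minimal sketch under stated assumptions: `fetch` and `run_all` are hypothetical names, and `asyncio.sleep` stands in for a real I/O operation such as a network request.

```python
import asyncio
from typing import List


async def fetch(task_id: int, delay: float) -> str:
    """Hypothetical I/O-bound task; asyncio.sleep stands in for a network call."""
    await asyncio.sleep(delay)
    return f"task {task_id} done"


async def run_all() -> List[str]:
    # gather() runs the coroutines concurrently on one thread and returns
    # their results in the order the coroutines were passed in.
    return await asyncio.gather(*(fetch(i, 0.1) for i in range(3)))


if __name__ == "__main__":
    print(asyncio.run(run_all()))
```

Because the three waits overlap, the whole run takes roughly as long as one delay rather than three. This style suits I/O-bound work; it does not spread CPU-bound work across cores.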

Keep in mind that while this example illustrates the basics of concurrency, it is just a starting point for your own applications. To effectively utilize concurrency in real-world scenarios, consider factors such as:

Synchronization: How will you ensure that concurrent tasks do not interfere with each other?

Resource sharing: Will multiple tasks share resources like file handles or network connections? If so, how will you manage these shared resources?

Error handling: What happens when errors occur within a concurrent task? How will you handle and recover from these errors?

Python's standard library provides tools to help you address these concerns, such as the queue module and the Lock and Condition classes in the threading module. With practice and experience, you'll develop the skills necessary to effectively utilize concurrency in your own applications.
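As a rough illustration of the three concerns above, the sketch below guards a shared counter with threading.Lock and reports worker failures through a queue.Queue. The function name `run_workers` and the deliberately failing worker 0 are assumptions made for the example, not part of any library.

```python
import queue
import threading
from typing import List, Tuple


def run_workers(num_threads: int = 4, increments: int = 1000) -> Tuple[int, List[str]]:
    """Run threads that update a shared counter; return (counter, error messages)."""
    counter = 0
    lock = threading.Lock()               # synchronization: guards the counter
    errors: queue.Queue = queue.Queue()   # thread-safe channel for failures

    def worker(worker_id: int) -> None:
        nonlocal counter
        try:
            if worker_id == 0:
                # Simulated failure so the error path is exercised.
                raise ValueError("worker 0 failed")
            for _ in range(increments):
                with lock:                # only one thread updates at a time
                    counter += 1
        except Exception as exc:
            # Error handling: report to the main thread instead of dying silently.
            errors.put(f"{type(exc).__name__}: {exc}")

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

    collected = []
    while not errors.empty():
        collected.append(errors.get())
    return counter, collected


if __name__ == "__main__":
    total, failures = run_workers()
    print(total, failures)
```

Collecting errors in a queue lets the main thread decide how to recover after all workers have been joined, rather than letting an exception end a thread unnoticed.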