deep learning with python, 2nd edition pdf github

Deep Learning with Python, 2nd Edition PDF on GitHub

If you're looking for the Deep Learning with Python, 2nd Edition PDF and its GitHub repository, you've come to the right place!

To be clear up front, this page does not link directly to a PDF of the book. The sections below explain how to get the book and its companion code through legitimate channels.

Book Details

"Deep Learning with Python" (2nd Edition) by François Chollet, the creator of Keras, is an excellent resource for anyone looking to learn about deep learning using Python. The book covers various topics, including:

- Fundamentals of deep learning
- Building and training neural networks
- Word embeddings and natural language processing
- Generative models (GANs, VAEs)
- Convolutional Neural Networks (CNNs) for computer vision

Accessing the Book's PDF and GitHub Repository

To get access to the book's PDF, follow these steps:

1. Purchase the book: You can buy a copy of "Deep Learning with Python" (2nd Edition) from its publisher, Manning Publications, or from online retailers like Amazon.
2. Request a review copy: If you're a student, researcher, or professor, you may be eligible for a free review copy from the publisher. Contact them directly to inquire about this option.
3. Avoid unofficial sources: Some websites offer pirated or unofficial versions of the book's PDF. Downloading copyrighted materials without permission is illegal and unethical.

The GitHub repository accompanies the book and provides its code examples as notebooks. The author publishes it on GitHub as fchollet/deep-learning-with-python-notebooks.

Conclusion

In summary, while this page doesn't provide a direct PDF download, the steps above show how to access the book and its code legally and ethically. Remember that buying a copy of the book supports the author and the publishing industry.

Happy learning, and happy coding!

Python deep learning examples

Introduction to Deep Learning with Python

Python is an excellent language for building deep learning models, thanks to libraries like TensorFlow, Keras, and PyTorch. The examples below use TensorFlow (through its Keras API) and PyTorch.

Example 1: Classification with TensorFlow

Let's start by implementing a simple classification model using TensorFlow. The data here is the tabular Iris dataset rather than images.

import tensorflow as tf Load the datasetfrom sklearn.datasets import load_iris

data = load_iris()

X = data.data[:, :2] # we only take the first two features

y = data.target

Split the data into training and testing setsfrom sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

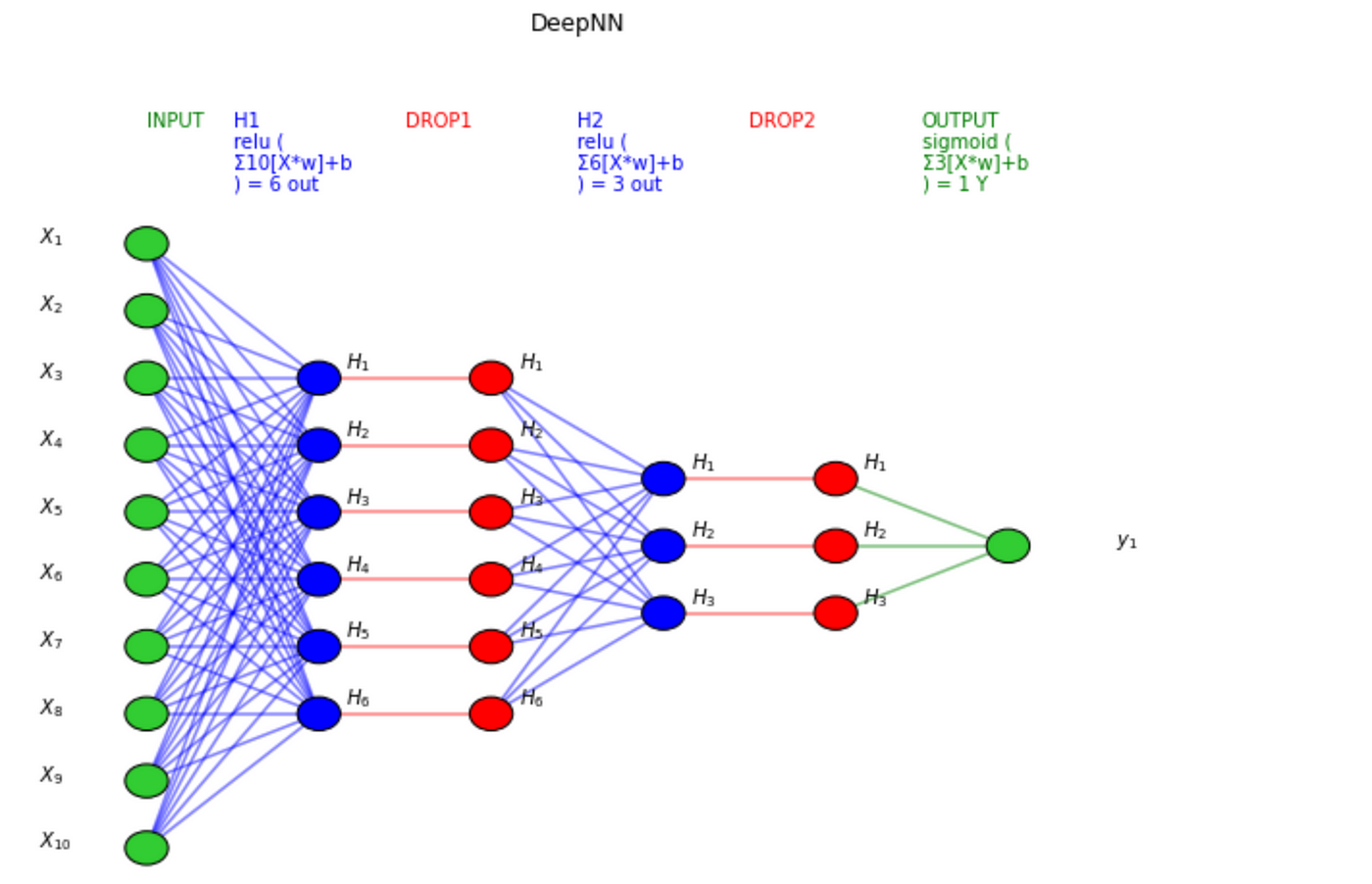

Define the modelmodel = tf.keras.models.Sequential([

tf.keras.layers.Flatten(input_shape=(2,)),

tf.keras.layers.Dense(32, activation='relu'),

tf.keras.layers.Dropout(0.2),

tf.keras.layers.Dense(3, activation='softmax')

])

Compile the modelmodel.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

Train the modelmodel.fit(X_train, y_train, epochs=10)

Evaluate the modeltest_loss, test_acc = model.evaluate(X_test, y_test)

print('Test accuracy:', test_acc)

In this example, we load the Iris dataset and split it into training and testing sets. We then define a simple neural network using Keras' Sequential API and compile it with the Adam optimizer and sparse categorical cross-entropy loss. Finally, we train the model for 10 epochs and evaluate its performance on the test set.
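To make the loss in Example 1 less of a black box, here is a minimal pure-Python sketch of what a softmax output combined with sparse categorical cross-entropy computes for a single sample. The function names and the raw scores are hypothetical and chosen only for illustration. Keras additionally clips probabilities and averages over the batch, which this sketch leaves out.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_categorical_crossentropy(probs, label):
    """Negative log of the probability assigned to the true class index."""
    return -math.log(probs[label])

# Hypothetical raw scores for the three Iris classes (not real model output).
logits = [2.0, 1.0, 0.1]
probs = softmax(logits)

# The loss is small when the true class has high probability, large otherwise.
loss_if_class_0 = sparse_categorical_crossentropy(probs, 0)
loss_if_class_2 = sparse_categorical_crossentropy(probs, 2)
print(probs, loss_if_class_0, loss_if_class_2)
```

The label is passed as an integer class index rather than a one-hot vector, which is what "sparse" refers to and why `y` can be used directly as loaded from the Iris dataset.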

Example 2: Natural Language Processing (NLP) with PyTorch

Let's implement a simple NLP example using PyTorch.

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.optim as optim

    # Field and BucketIterator are TorchText's legacy API. These imports assume
    # torchtext <= 0.8; in 0.9 to 0.11 use torchtext.legacy.data and
    # torchtext.legacy.datasets instead (the API was removed in 0.12).
    from torchtext import datasets
    from torchtext.data import Field, LabelField, BucketIterator

    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    # Load the dataset; every review is padded or truncated to 50 tokens
    TEXT = Field(sequential=True, lower=True, fix_length=50, batch_first=True)
    LABEL = LabelField()
    train_data, test_data = datasets.IMDB.splits(TEXT, LABEL)
    TEXT.build_vocab(train_data, max_size=9998)  # plus <unk> and <pad>: 10000 ids
    LABEL.build_vocab(train_data)

    # Create the data iterators
    batch_size = 64
    train_iter, test_iter = BucketIterator.splits(
        (train_data, test_data), batch_size=batch_size, repeat=False,
        sort_key=lambda x: len(x.text))

    # Define the model
    class SentimentClassifier(nn.Module):
        def __init__(self):
            super().__init__()
            self.embedding = nn.Embedding(10000, 128)  # vocabulary size x embedding dim
            self.fc1 = nn.Linear(128 * 50, 128)  # 50 tokens x 128 dims, flattened
            self.dropout = nn.Dropout(p=0.5)
            self.fc2 = nn.Linear(128, 2)  # IMDB has two classes

        def forward(self, x):
            embedded = torch.flatten(self.embedding(x), start_dim=1)
            output = F.relu(self.fc1(embedded))
            output = self.dropout(output)
            output = self.fc2(output)
            return output

    # Initialize the model and optimizer
    model = SentimentClassifier().to(device)
    optimizer = optim.Adam(model.parameters(), lr=0.001)

    # Train the model
    model.train()
    for epoch in range(10):
        for batch in train_iter:
            inputs, labels = batch.text, batch.label
            inputs, labels = inputs.to(device), labels.to(device)
            optimizer.zero_grad()
            output = model(inputs)
            loss = F.cross_entropy(output, labels)
            loss.backward()
            optimizer.step()

    # Evaluate the model on the held-out test set
    model.eval()
    test_loss = 0.0
    with torch.no_grad():
        for batch in test_iter:
            inputs, labels = batch.text, batch.label
            inputs, labels = inputs.to(device), labels.to(device)
            output = model(inputs)
            test_loss += F.cross_entropy(output, labels).item()
    print('Test loss:', test_loss / len(test_iter))

In this example, we load the IMDB dataset and create a data iterator using TorchText. We then define a simple neural network for sentiment analysis and train it on the training set. Finally, we evaluate its performance on the test set.
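The model's first linear layer takes 128 * 50 inputs, so every review has to reach the embedding layer as exactly 50 integer ids. The sketch below shows one way that conversion can work in plain Python, with no TorchText involved. The helper names (`build_vocab`, `encode`), the `fix_length` parameter, and the two toy reviews are assumptions made for illustration, not part of any library.

```python
from collections import Counter

PAD, UNK = "<pad>", "<unk>"

def build_vocab(texts, max_size):
    """Map the most frequent whitespace tokens to integer ids."""
    counts = Counter(tok for text in texts for tok in text.lower().split())
    # Reserve the first two ids for unknown and padding tokens.
    itos = [UNK, PAD] + [tok for tok, _ in counts.most_common(max_size)]
    return {tok: i for i, tok in enumerate(itos)}

def encode(text, vocab, fix_length):
    """Convert text to exactly fix_length ids, truncating or padding."""
    ids = [vocab.get(tok, vocab[UNK]) for tok in text.lower().split()]
    ids = ids[:fix_length]
    return ids + [vocab[PAD]] * (fix_length - len(ids))

# Toy data standing in for IMDB reviews.
reviews = ["a great great movie", "a dull movie"]
vocab = build_vocab(reviews, max_size=10)
encoded = [encode(review, vocab, fix_length=6) for review in reviews]
print(encoded)
```

Each row of `encoded` has the same length, so the rows can be stacked into a single integer tensor and passed to an embedding layer. In the example above the corresponding values would be a vocabulary of 10000 ids and a fixed length of 50.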

These are just a few examples of how Python can be used for deep learning tasks. With popular libraries like TensorFlow and PyTorch, you can build complex models and solve real-world problems.