Python concurrent vs asyncio

A question that gets to the heart of efficient programming!

Python provides two powerful libraries for concurrent and asynchronous programming: concurrent.futures and asyncio. While both can help you write more efficient, scalable code, they serve different purposes and are used in different situations.

Concurrency using concurrent.futures

The concurrent.futures module provides a high-level interface for running callables concurrently on a pool of threads or processes. You submit work to an executor and get back Future objects, which represent results that may not be available yet. This makes it easier to write programs that spread work across threads or across multiple CPU cores.

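There are two main classes in concurrent.futures: ThreadPoolExecutor and ProcessPoolExecutor. The former is used for thread-based concurrency (multiple threads running within a single process). The latter is used for process-based concurrency (multiple worker processes running in parallel). In the standard CPython build, the global interpreter lock (GIL) lets only one thread execute Python bytecode at a time. As a result, ThreadPoolExecutor mainly helps with I/O-bound work, whereas ProcessPoolExecutor can use multiple cores for CPU-bound work.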

Here's an example of using ThreadPoolExecutor to execute concurrent tasks:

```python
import concurrent.futures

def task(x):
    print(f"Task {x} started")
    # Simulate some work
    for i in range(5):
        print(f"Task {x} step {i}")
    print(f"Task {x} finished")

with concurrent.futures.ThreadPoolExecutor(max_workers=3) as executor:
    futures = [executor.submit(task, x) for x in range(4)]
    for future in futures:
        future.result()  # blocks until this task is done
```

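In this example, we create a thread pool with three worker threads and submit four tasks (represented by the task function) to execute concurrently. Because there are only three workers, the fourth task starts as soon as one of the first three finishes. The printed lines from different tasks may interleave. The result() method waits for each task to complete and re-raises any exception the task raised.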
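The example above discards return values. The same executor can also collect results with concurrent.futures.as_completed(), which yields each future as soon as it finishes. This is a minimal sketch: the fetch_length function and the word list are hypothetical stand-ins for real blocking work.

```python
import concurrent.futures
import time

def fetch_length(word: str) -> int:
    # Hypothetical stand-in for a blocking call (e.g., a network request)
    time.sleep(0.1)
    return len(word)

words = ["alpha", "beta", "gamma", "delta"]

with concurrent.futures.ThreadPoolExecutor(max_workers=3) as executor:
    # Map each future back to the input that produced it
    future_to_word = {executor.submit(fetch_length, w): w for w in words}
    lengths = {}
    # as_completed() yields futures in completion order, not submission order
    for future in concurrent.futures.as_completed(future_to_word):
        word = future_to_word[future]
        lengths[word] = future.result()  # re-raises any exception from the worker

print(lengths)
```

Keying the results by input, rather than relying on order, keeps the output correct even though completion order can vary between runs.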

Asynchronous programming using asyncio

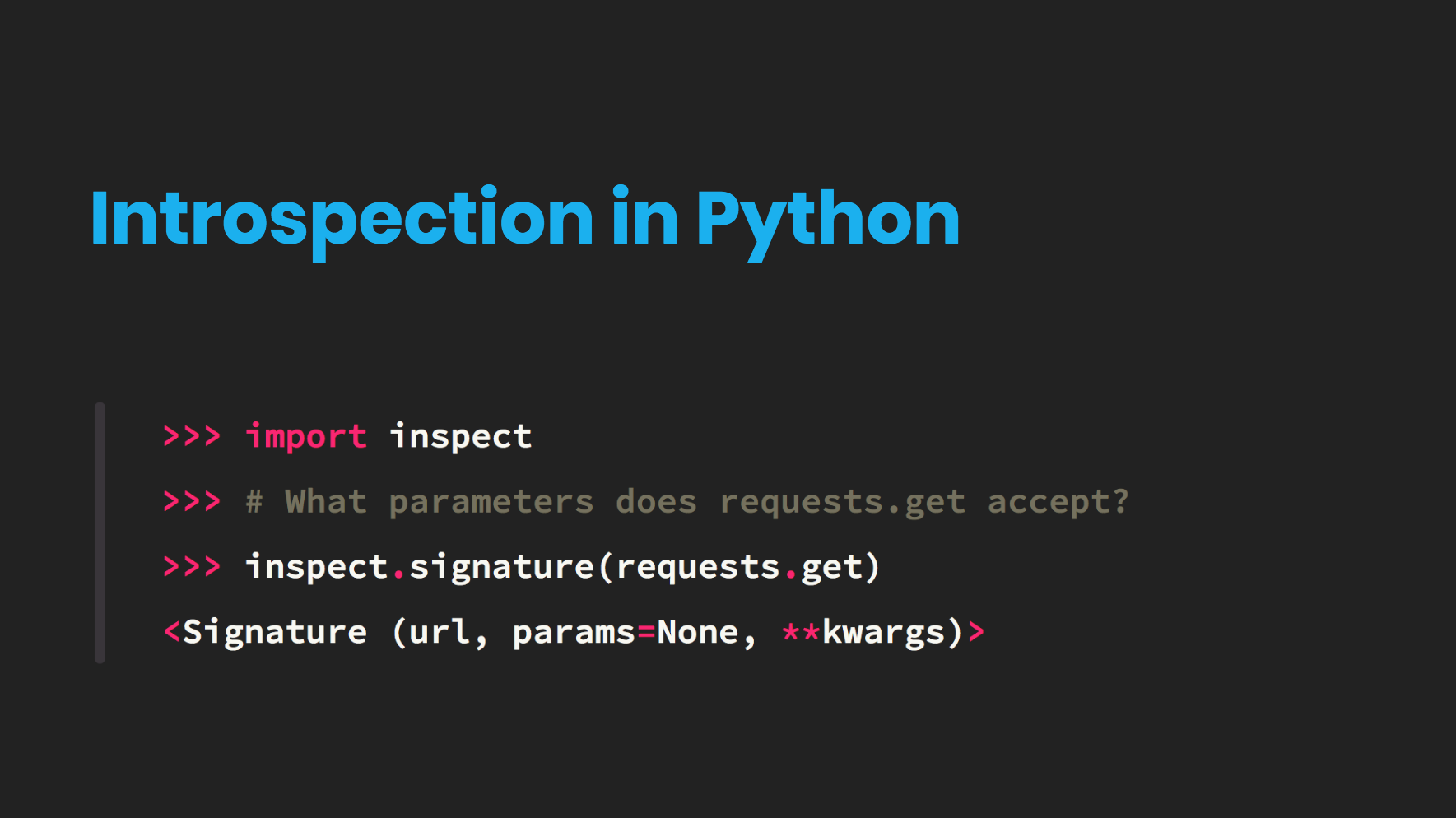

The asyncio module is designed for asynchronous, I/O-bound programming. It runs coroutines on a single-threaded event loop. When a coroutine reaches an await on an I/O operation, it suspends, and the loop switches to another coroutine that is ready to run. This lets many waits overlap without extra threads.

asyncio is particularly useful for I/O-bound tasks, such as making many network requests concurrently, where most of the time is spent waiting rather than computing.

Here's an example of using asyncio to execute concurrent asynchronous tasks:

```python
import asyncio

async def task(x):
    print(f"Task {x} started")
    # Simulate some asynchronous work
    await asyncio.sleep(1)  # simulate some I/O-bound operation
    print(f"Task {x} finished")

async def main():
    tasks = [task(x) for x in range(4)]
    await asyncio.gather(*tasks)

asyncio.run(main())
```

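In this example, task is a coroutine function: calling task(x) creates a coroutine object, and asyncio.gather() runs the four coroutines concurrently. asyncio.run() creates an event loop, runs main() until it completes, and then closes the loop. Because all four tasks wait on asyncio.sleep(1) at the same time, the program takes about one second rather than four.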
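The following minimal sketch checks that the waits really overlap. The fake_io coroutine and its delays are arbitrary assumptions. Later inputs are given shorter delays, so they finish first. The sketch also shows that gather() still returns results in the order the coroutines were passed in.

```python
import asyncio
import time

async def fake_io(x: int) -> int:
    # Hypothetical I/O-bound coroutine; asyncio.sleep stands in for a network wait.
    # x=0 sleeps 0.2s, x=3 sleeps 0.05s, so later inputs finish first.
    await asyncio.sleep(0.05 * (4 - x))
    return x * 10

async def main() -> list:
    # gather() runs all four coroutines concurrently on one event loop
    return await asyncio.gather(*(fake_io(x) for x in range(4)))

start = time.perf_counter()
results = asyncio.run(main())
elapsed = time.perf_counter() - start

print(results)            # [0, 10, 20, 30]
print(f"{elapsed:.2f}s")  # expected to be close to the longest single delay (0.2s)
```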

Comparison

While both libraries can be used for concurrency, they serve different purposes:

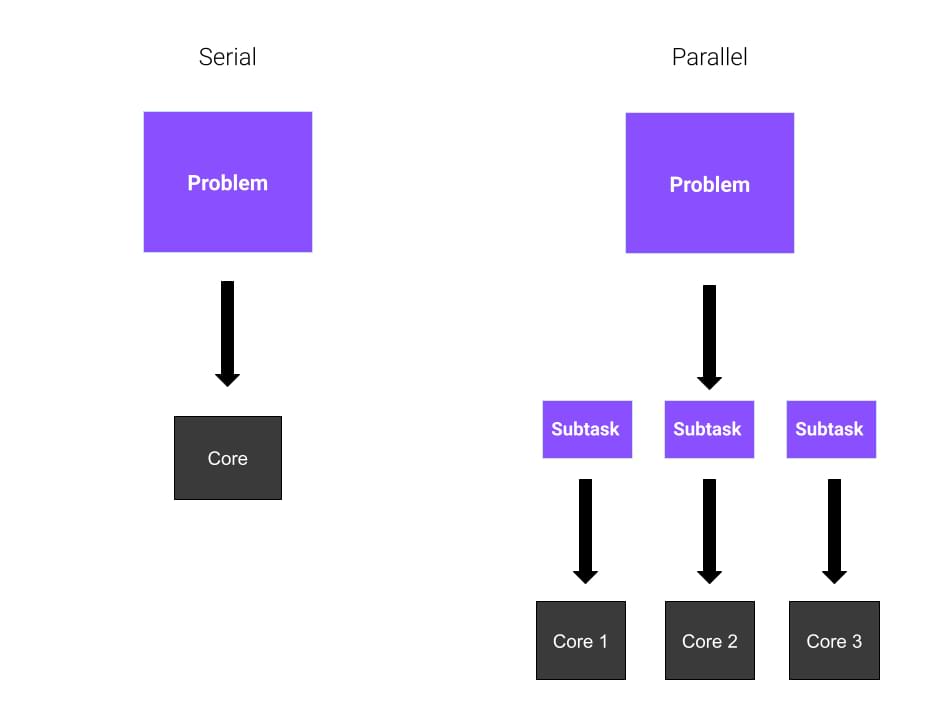

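concurrent.futures provides a more traditional thread- or process-based approach. Its ProcessPoolExecutor is the better fit for CPU-bound tasks (e.g., processing large datasets), because each worker process runs its own interpreter with its own GIL. Its ThreadPoolExecutor is useful for blocking I/O. asyncio is designed specifically for I/O-bound tasks and provides a framework for single-threaded, event-driven programming, which can handle many simultaneous waits without needing one thread per operation.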

When choosing between the two, consider the type of task you're trying to accomplish:

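If you need to execute CPU-bound tasks in parallel, use concurrent.futures (specifically ProcessPoolExecutor). If you need to perform I/O-bound operations concurrently (e.g., making multiple network requests), use asyncio, or ThreadPoolExecutor when the libraries involved only offer blocking calls.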
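The two approaches can also be combined. The following minimal sketch assumes Python 3.9+ for asyncio.to_thread(), which runs a function in the event loop's default thread pool (a concurrent.futures ThreadPoolExecutor). The blocking_io function is hypothetical and stands in for a library call that has no async API.

```python
import asyncio
import time

def blocking_io(x: int) -> int:
    # Hypothetical blocking call, e.g. a client library without async support
    time.sleep(0.2)
    return x * 2

async def main() -> list:
    # to_thread() hands each call to a worker thread, so the event loop is not blocked
    return await asyncio.gather(*(asyncio.to_thread(blocking_io, x) for x in range(3)))

start = time.perf_counter()
results = asyncio.run(main())
elapsed = time.perf_counter() - start
print(results, f"{elapsed:.2f}s")
```

For CPU-bound functions, loop.run_in_executor() with a ProcessPoolExecutor follows the same pattern.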

In summary, both libraries are powerful tools for achieving concurrency in Python. While they share some similarities, they serve different purposes and should be chosen based on the specific requirements of your project.

Does Python support parallel programming?

Python supports parallel programming through various libraries and frameworks that enable developers to take advantage of multiple CPU cores, GPUs, or distributed computing resources. Here are some ways to achieve parallelism in Python:

Multi-processing (multiprocessing): Python's multiprocessing module allows you to create multiple processes that can run concurrently, sharing memory space through a Manager process. This is useful for I/O-bound tasks, such as data processing or web scraping.Example:

Multi-threading (threading): Python's threading module enables you to create multiple threads that can run concurrently within the same process. This is suitable for CPU-bound tasks, such as scientific simulations or data analysis.from multiprocessing import Pooldef worker(x):

return x * x

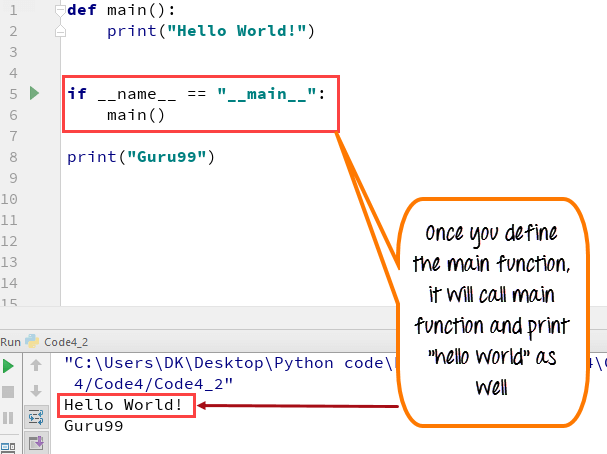

if name == 'main':

pool = Pool(processes=4)

result = pool.map(worker, [1, 2, 3, 4])

print(result) # Output: [1, 4, 9, 16]

Example:

```python
import threading

def worker():
    print("Thread started")
    for i in range(5):
        print(f"Thread {i}")

threads = []
for i in range(4):
    t = threading.Thread(target=worker)
    threads.append(t)
    t.start()

# Wait for all threads to finish
for t in threads:
    t.join()
```

concurrent.futures: This library provides a high-level interface for executing tasks asynchronously using threads (ThreadPoolExecutor) or processes (ProcessPoolExecutor). In the standard CPython build, only the process pool runs CPU-bound Python code in parallel.

Example:

```python
import concurrent.futures

def worker(x):
    return x * x

if __name__ == "__main__":
    # Worker processes need the __main__ guard, as with multiprocessing
    with concurrent.futures.ProcessPoolExecutor() as executor:
        results = list(executor.map(worker, [1, 2, 3, 4]))
    print(results)  # Output: [1, 4, 9, 16]
```

Distributed computing: Python has several libraries and frameworks for distributed computing. These include Dask, a flexible parallel computing library that can scale computations from a single machine to a cluster, and Ray, a high-performance distributed computing framework that can run tasks on multiple machines.

Example (using Dask):

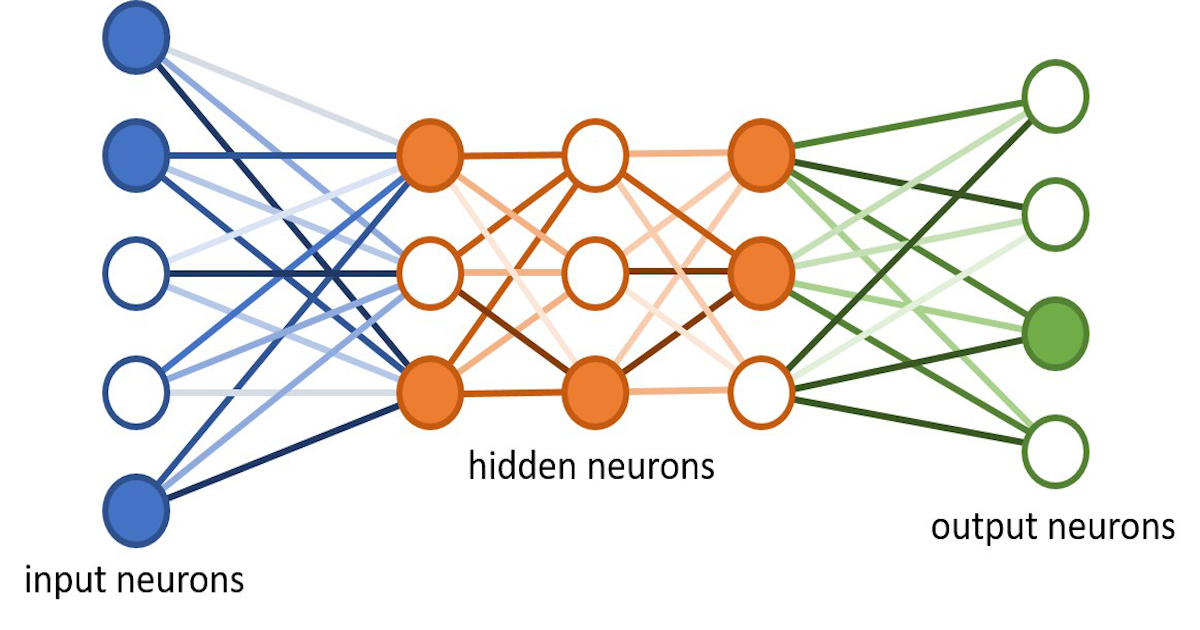

GPU-accelerated computing: Python has libraries like: TensorFlow: A popular open-source machine learning framework that can run computations on GPUs. PyTorch: Another widely-used deep learning library with GPU support.import dask.dataframe as dddf = dd.read_csv("data.csv")

Perform some computation...result = df.groupby('column').sum().compute()

print(result)

Example (using TensorFlow):

```python
import tensorflow as tf

with tf.device('/gpu:0'):
    x = tf.constant([1, 2, 3])
    y = tf.square(x)

print(y.numpy())  # Output: [1 4 9]
```

In summary, Python offers various libraries and frameworks for parallel programming. The right choice depends on the workload. CPU-bound work needs processes, GPUs, or a cluster. I/O-bound work is usually handled well by threads or asyncio.