Is Python single threaded or multi?

I'm glad you asked!

Python is a fascinating programming language, and its concurrency model is an essential aspect of its design.

The Answer:

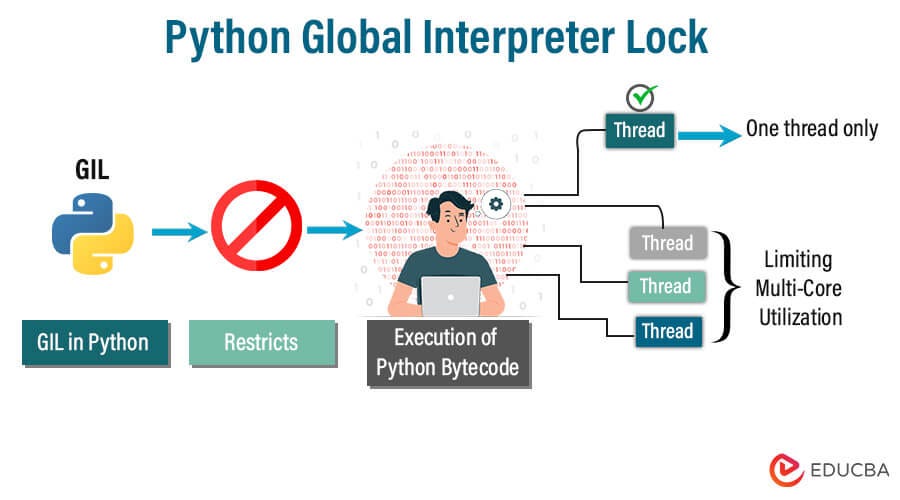

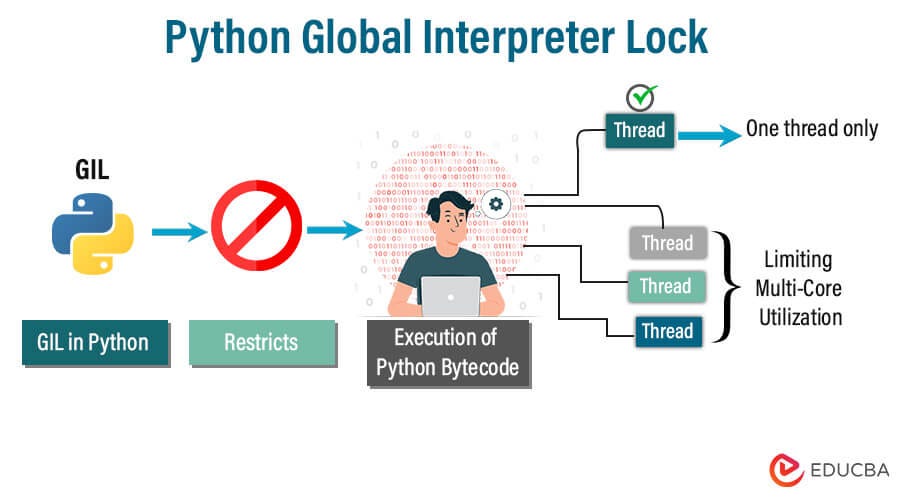

Python runs your code on a single thread by default: when you start a program, the interpreter executes it on one thread, the main thread. In CPython, the standard interpreter, there is an additional constraint: the Global Interpreter Lock (GIL) ensures that only one thread can execute Python bytecode at any given moment, even if your program starts several threads.

In other words, a Python program starts on the main thread, and no other threads run concurrently unless you explicitly create them.
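You can check this from inside a script with the `threading` module. Here is a minimal sketch, assuming the file is run directly as a script (`describe_current_thread` is just an illustrative helper name):

```python
import threading


def describe_current_thread() -> str:
    """Return the name of the thread executing this call."""
    return threading.current_thread().name


if __name__ == "__main__":
    # A freshly started script runs on the interpreter's main thread.
    print(describe_current_thread())  # MainThread
    print(threading.current_thread() is threading.main_thread())  # True
```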

But wait, there's more!

Python does provide several mechanisms for concurrency, making it possible to run multiple tasks at once. Here are the main ones:

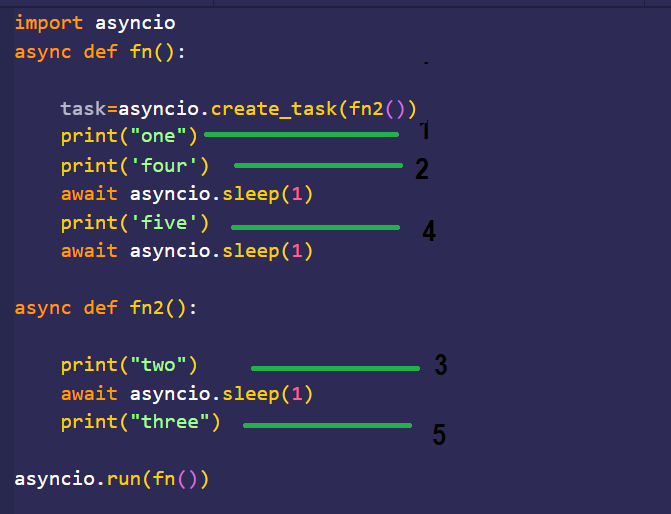

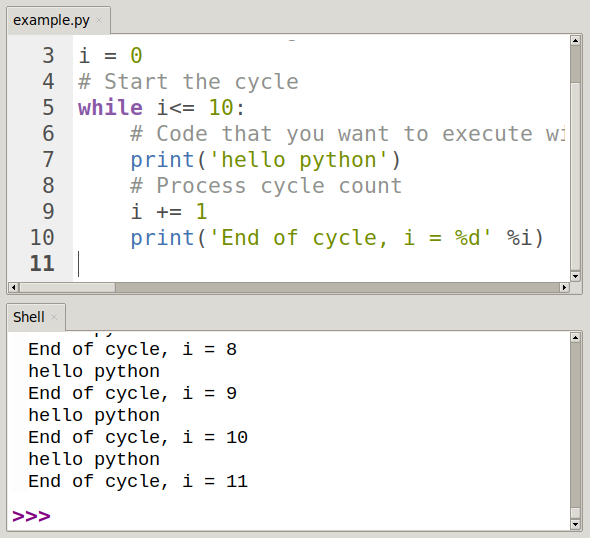

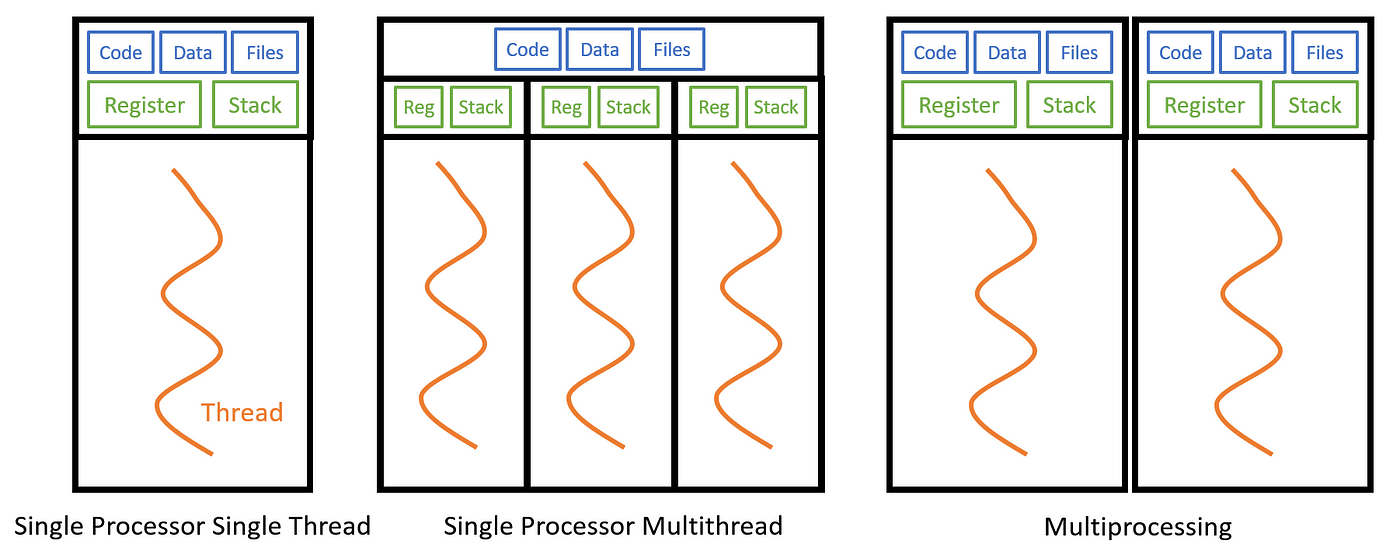

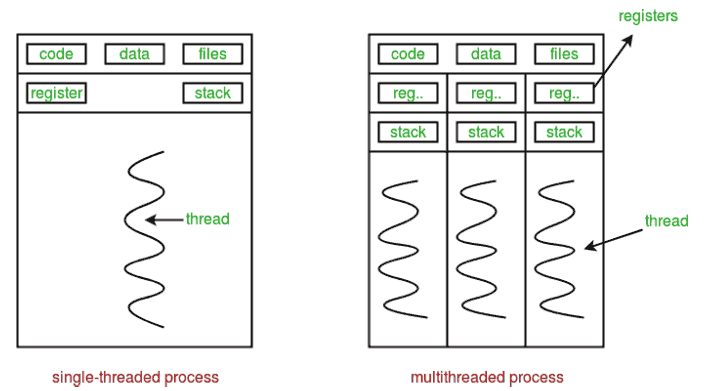

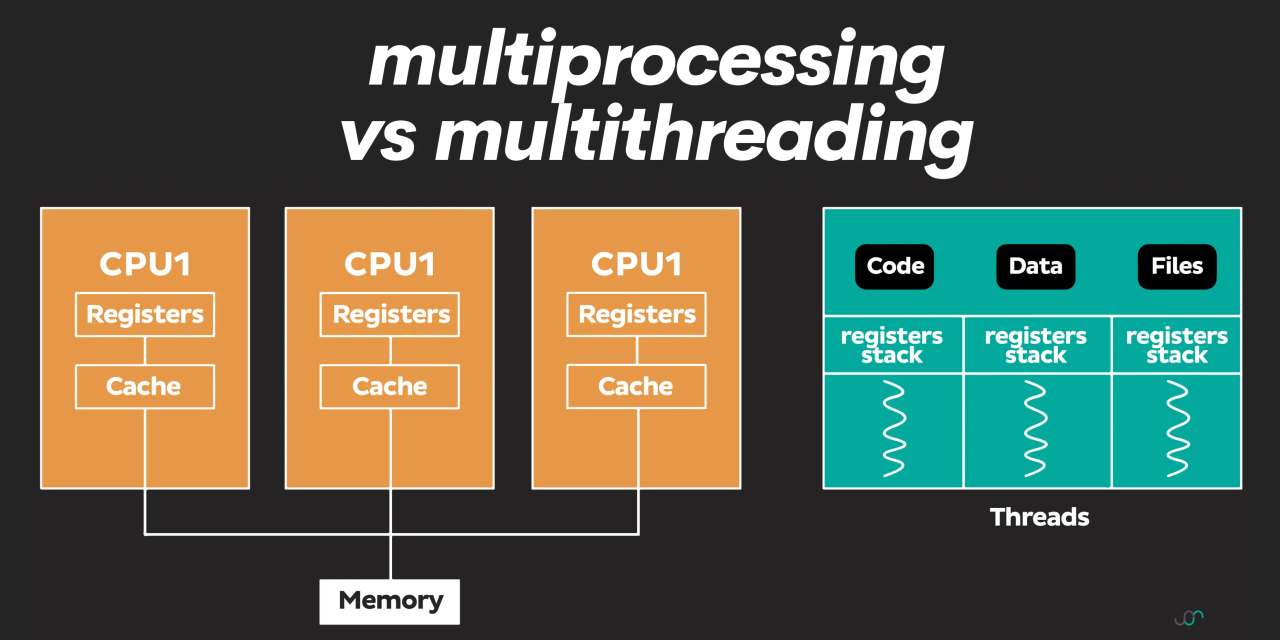

- Multiprocessing: The `multiprocessing` module lets you create separate processes that run independently of each other. Each process has its own Python interpreter, its own memory space, and its own GIL, so processes can execute in parallel on multiple CPU cores.
- Threading: The `threading` module lets you create additional threads within your Python program. These threads run concurrently, but they share the same memory space as the main thread, and in CPython only one of them executes Python bytecode at a time.
- Asyncio: The `asyncio` library supports asynchronous programming. You write coroutines that yield control back to an event loop at specific points (each `await`), so many tasks can make progress concurrently on a single thread.
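To make the asyncio item concrete, here is a minimal sketch in which `asyncio.sleep` stands in for real I/O such as a network call. The coroutine name `fetch` and the delays are illustrative assumptions. All three coroutines run on one thread, yet their waits overlap because each `await` hands control back to the event loop:

```python
import asyncio
import time


async def fetch(name: str, delay: float) -> str:
    # asyncio.sleep simulates waiting on I/O; while this coroutine is
    # suspended, the event loop runs the other coroutines.
    await asyncio.sleep(delay)
    return f"{name} done"


async def main() -> list[str]:
    # gather() runs the coroutines concurrently and returns their
    # results in the order they were passed in.
    return await asyncio.gather(
        fetch("a", 0.3), fetch("b", 0.3), fetch("c", 0.3)
    )


if __name__ == "__main__":
    start = time.perf_counter()
    results = asyncio.run(main())
    elapsed = time.perf_counter() - start
    print(results)  # ['a done', 'b done', 'c done']
    print(f"{elapsed:.2f}s")  # roughly 0.3s rather than 0.9s
```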

Why is this important?

When writing concurrent or parallel code in Python, understanding how threads and processes interact with each other can be crucial. For example:

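- When using `multiprocessing`, you need to handle data sharing between processes explicitly, because each process has its own memory; tools such as `multiprocessing.Queue` or `multiprocessing.Pipe` pass data between them.
- With `threading`, use synchronization primitives such as locks to coordinate access to shared resources. The GIL does not make compound operations like `counter += 1` atomic, so shared mutable state still needs protection.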
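As an illustration of the locking point, here is a minimal sketch of several threads incrementing a shared counter. `Counter` and `run` are hypothetical names chosen for this example. Without the lock, the read-modify-write in `self.value += 1` can interleave between threads and lose updates; with it, the final count is always the expected total:

```python
import threading


class Counter:
    """A shared counter whose increments are protected by a lock."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self, times: int) -> None:
        for _ in range(times):
            # `+=` reads, adds, then writes back; holding the lock makes
            # that whole sequence happen without another thread interleaving.
            with self._lock:
                self.value += 1


def run(num_threads: int = 4, times: int = 100_000) -> int:
    counter = Counter()
    threads = [
        threading.Thread(target=counter.increment, args=(times,))
        for _ in range(num_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every thread to finish before reading the total
    return counter.value


if __name__ == "__main__":
    print(run())  # 400000
```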

By being aware of these nuances, you'll be better equipped to write efficient and effective concurrent code in Python.

In Conclusion:

While Python code runs on a single thread by default, the language provides several mechanisms for concurrency. With the `multiprocessing`, `threading`, and `asyncio` libraries, you can run tasks concurrently: `multiprocessing` gives you true parallelism across CPU cores, while `threading` and `asyncio` are best suited to I/O-bound work. Used appropriately, they can improve the responsiveness, scalability, and performance of your Python applications.

I hope this clears up any misconceptions about Python's concurrency model!

Is Python truly multithreaded?

The concept of true multithreading is a bit nuanced when it comes to Python.

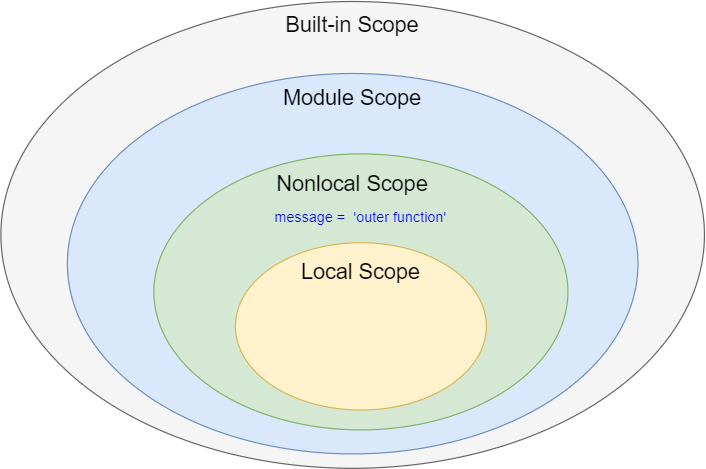

In CPython, the Global Interpreter Lock (GIL) governs thread execution. Python threads are real operating-system threads, but a thread must hold the GIL to execute Python bytecode, and only one thread can hold it at a time. This means Python threads run concurrently (their execution interleaves) but not in parallel on multiple cores. A thread gives up the GIL when it blocks on I/O or calls C code that releases it, and the interpreter also asks the running thread to release it at a regular interval so that other threads get a turn.
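CPython exposes the interval at which it asks the running thread to hand over the GIL through `sys.getswitchinterval()`, and lets you change it with `sys.setswitchinterval()`. Here is a minimal sketch that reads it, assuming a standard CPython build where the documented default of 0.005 seconds has not been changed; `switch_interval_ms` is a hypothetical helper:

```python
import sys


def switch_interval_ms() -> float:
    """Return CPython's thread switch interval in milliseconds."""
    return sys.getswitchinterval() * 1000


if __name__ == "__main__":
    # By default, CPython asks the thread holding the GIL to drop it
    # about every 5 ms so another runnable thread can take a turn.
    print(f"{switch_interval_ms():.1f} ms")  # 5.0 ms
```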

When you create multiple threads with Python's built-in `threading` module, the operating system schedules them, but they effectively wait in line for the GIL, which decides who gets to run Python code next. As a result, if your program is CPU-bound (that is, it spends most of its time computing in pure Python), multiple threads typically give little or no speedup and can even be slightly slower because of the extra switching.
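A rough way to see this on your own machine is to time the same pure-Python work done serially and on two threads. This is a minimal sketch; `count_down`, `run_serial`, `run_threaded`, and the loop size are illustrative choices. On a standard CPython build with the GIL, you would expect the two timings to be similar, with the exact numbers depending on your hardware:

```python
import threading
import time
from collections.abc import Callable


def count_down(n: int) -> None:
    # Pure-Python arithmetic: the thread needs the GIL for the whole loop.
    while n > 0:
        n -= 1


def timed(fn: Callable[[], None]) -> float:
    """Return how many seconds fn() took to run."""
    start = time.perf_counter()
    fn()
    return time.perf_counter() - start


def run_serial(n: int) -> None:
    count_down(n)
    count_down(n)


def run_threaded(n: int) -> None:
    threads = [threading.Thread(target=count_down, args=(n,)) for _ in range(2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


if __name__ == "__main__":
    N = 5_000_000
    print(f"serial:   {timed(lambda: run_serial(N)):.2f}s")
    print(f"threaded: {timed(lambda: run_threaded(N)):.2f}s")
```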

However, when your code involves I/O operations such as network requests, file reads and writes, or database queries, threads become much more useful. While a thread waits on an external resource, it releases the GIL, so other threads can run in the meantime and the waiting periods overlap instead of adding up.
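Here is a minimal sketch of that overlap using `concurrent.futures.ThreadPoolExecutor`. The `fake_download` function and the `example.com` URLs are hypothetical placeholders: no network traffic happens, and `time.sleep` stands in for the wait on a real request (like blocking I/O, it releases the GIL):

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(url: str) -> str:
    # Simulated network wait; the GIL is released while sleeping,
    # so the other worker threads can run in the meantime.
    time.sleep(0.3)
    return f"contents of {url}"


URLS = [f"https://example.com/page{i}" for i in range(5)]


def download_all(urls: list[str]) -> list[str]:
    with ThreadPoolExecutor(max_workers=len(urls)) as pool:
        # map() returns results in the same order as the input URLs.
        return list(pool.map(fake_download, urls))


if __name__ == "__main__":
    start = time.perf_counter()
    pages = download_all(URLS)
    # Expect roughly 0.3s in total rather than 5 x 0.3s.
    print(len(pages), f"{time.perf_counter() - start:.2f}s")
```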

To better understand how Python's threading works, let's consider an example:

Imagine a simple web server built with the `http.server` module. If each incoming request is handled in its own thread, the threads take turns holding the GIL while they run Python code, but they release it while waiting to read requests or send responses over the network. Because so much of the work is I/O, threading still improves responsiveness and lets the server handle more concurrent connections.
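A minimal sketch of such a server, assuming `http.server.ThreadingHTTPServer` (available since Python 3.7), which handles each request in a new thread. `SlowHandler`, `start_server`, `fetch`, and the 0.3-second delay are hypothetical choices for illustration; the sleep simulates a slow backend call. Four simultaneous requests should finish in roughly the time of one:

```python
import http.client
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class SlowHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        time.sleep(0.3)  # simulated slow I/O; releases the GIL
        body = b"hello"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # silence the default per-request logging to stderr


def start_server() -> ThreadingHTTPServer:
    # Port 0 lets the OS pick a free port; each request gets its own thread.
    server = ThreadingHTTPServer(("127.0.0.1", 0), SlowHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch(port: int) -> bytes:
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    try:
        conn.request("GET", "/")
        return conn.getresponse().read()
    finally:
        conn.close()


if __name__ == "__main__":
    server = start_server()
    port = server.server_address[1]
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=4) as pool:
        bodies = list(pool.map(fetch, [port] * 4))
    print(bodies, f"{time.perf_counter() - start:.2f}s")  # about 0.3s, not 1.2s
    server.shutdown()
    server.server_close()
```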

In summary, Python (specifically CPython) is not truly multithreaded in the sense that multiple threads can execute Python code in parallel on different cores, because the GIL serializes bytecode execution. Its thread support is still valuable for I/O-bound work. For CPU-bound tasks written in pure Python, you generally won't see significant speedups from multiple threads, unless the heavy lifting happens inside C extensions that release the GIL.

If you need to get around this limit, the `multiprocessing` module runs work in separate processes, each with its own interpreter and GIL, giving true parallel execution. This is particularly useful for CPU-bound tasks that you want to spread across multiple cores without being limited by the GIL.
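Here is a minimal sketch of CPU-bound work spread across processes with `concurrent.futures.ProcessPoolExecutor`, which is built on `multiprocessing`. The `count_primes` function (deliberately slow trial division) and the limits are hypothetical workloads chosen only to keep the CPU busy:

```python
import time
from concurrent.futures import ProcessPoolExecutor


def count_primes(limit: int) -> int:
    """Count primes below `limit` by trial division (deliberately CPU-heavy)."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def count_primes_parallel(limits: list[int]) -> list[int]:
    # Each task runs in a separate process with its own interpreter and GIL,
    # so the tasks can occupy several CPU cores at the same time.
    with ProcessPoolExecutor() as pool:
        return list(pool.map(count_primes, limits))


if __name__ == "__main__":
    # The __main__ guard matters: with the "spawn" start method (the default
    # on Windows and macOS), worker processes re-import this module.
    limits = [100_000] * 4
    start = time.perf_counter()
    print(count_primes_parallel(limits), f"{time.perf_counter() - start:.2f}s")
```

On a multi-core machine, the four tasks should finish in noticeably less time than running `count_primes` four times in a row, although process start-up and data transfer add some overhead.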